TRAFFIC MONITORING: Optical sensing system improves traffic-flow evaluation

BEATE MEFFERT, ROMAN BLASCHEK, and UWE KNAUER

Governments worldwide are investing much time and effort into developing efficient traffic-management capabilities to overcome growing problems with traffic congestion, accident rates, long travel times, and air pollution from auto emissions. One approach is to use optical sensors to monitor traffic and establish a dynamic road traffic-management system that operates in near real time.1 Such a system requires the design of new software and hardware components for signal processing.

Traffic-data measurement systems often used today are induction loops embedded in the pavement and the so-called floating-car-data technique, in which the desired data—such as the position and velocity of the vehicles—are collected by several mobile units at the same time and transmitted to a central system via mobile communication.2 These types of systems have disadvantages, including the inability to calculate all the necessary traffic parameters, such as object-related features (location, speed, size, and shape) and region-related features (traffic speed and density, queue length at stop lines, waiting time, and origin-destination matrices), or to evaluate the behavior of nonmotorized road users.

Optical systems can overcome these limitations and thus optimize traffic flow at intersections during busy periods, identify accidents quickly, and provide a forecast of changes in traffic patterns. The range of available optical sensors, with differing resolutions and spectral ranges, offers greater flexibility in system development and application. Several cameras can also be installed at multiple locations to compensate for occlusions. Optical systems are able to observe and analyze local and wide-area traffic and automatically generate traffic data, which enhances traffic simulation and planning. In addition, image sequences acquired by these systems can be used to track all the objects that take part in the traffic flow.

The Institute of Computer Science at the Humboldt-Universität zu Berlin (Germany), in conjunction with the German Aerospace Center, is testing an integrated optical system for image analysis in traffic monitoring. The main goal of the research is to develop algorithms for characterizing traffic patterns that can be implemented in programmable logic arrays or in digital signal processors in the camera system itself to support real-time signal processing. Such a system must be able to acquire and evaluate traffic image sequences at intersections and along lanes or roads. The system has to work continuously and reliably, with the ability to extract traffic data at night and under bad weather conditions, not just under optimal daytime conditions. The image-processing algorithms thus must be efficient, robust, and scalable, and the resolution of the sensors has to support these requirements.

Another requirement is sensor-data fusion—that is, the synchronization of time and correlation of space for different images from different sensors and data from other sources. Such a fusion process requires high-speed networking, which again means the signal processing has to be very high-performance. These requirements have to be taken into account in the design of all parts of the system, from image-signal acquisition via image processing and traffic-data retrieval to traffic control.
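The time-synchronization part of sensor-data fusion can be illustrated with a minimal sketch. It assumes each sensor delivers frames with a capture timestamp in milliseconds and that frames are paired when their timestamps differ by no more than a chosen tolerance. The function name `pair_by_timestamp`, the tolerance value, and the sample timestamps are hypothetical and are not taken from the system described here.

```python
from bisect import bisect_left


def pair_by_timestamp(times_a, times_b, tolerance):
    """Pair each frame of sensor A with the nearest-in-time frame of sensor B.

    times_a, times_b: capture timestamps (e.g. in milliseconds); times_b must
    be sorted in ascending order. Returns a list of (index_a, index_b) pairs
    whose timestamps differ by at most `tolerance`.
    """
    pairs = []
    for i, t in enumerate(times_a):
        k = bisect_left(times_b, t)  # first index in B with timestamp >= t
        # The nearest neighbor is either just before or at the insertion point.
        candidates = [j for j in (k - 1, k) if 0 <= j < len(times_b)]
        if not candidates:
            continue
        j = min(candidates, key=lambda idx: abs(times_b[idx] - t))
        if abs(times_b[j] - t) <= tolerance:
            pairs.append((i, j))
    return pairs


# Hypothetical timestamps: sensor A at 25 frames/s, sensor B with a gap.
visible_ms = [0, 40, 80, 120]
infrared_ms = [10, 50, 200]
print(pair_by_timestamp(visible_ms, infrared_ms, tolerance=20))
```

Frames without a partner inside the tolerance are simply left unpaired in this sketch; a real system might interpolate or wait for the next frame instead.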

Hardware and software components

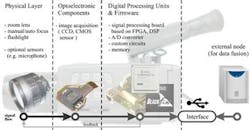

The system we have developed consists of mechanical and optical components, an image-acquisition system, a hardware-based image-processing unit, and image-processing software implemented in a standard microprocessor (see Fig. 1). There are three levels of image and data processing: processing in the camera itself (detection and extraction of traffic objects); further processing, in which objects are tracked and features describing the current traffic flow are calculated; and fusion of all this information with additional data to evaluate the overall situation.

The sensor system combines complementary technologies (stereo camera, infrared and visible sensors) that offer a variety of radiometric and geometric resolution and spectral sensitivity. The sensors are connected within a network; the availability of specific hardware solutions at low cost allows special image processing and avoids some basic bottlenecks. One option for a flexible hardware design is to implement image-processing procedures on a field-programmable gate array (FPGA). The advantages of FPGA solutions are a high degree of parallelism, a flexible structure, low clock frequency, and low power loss. The programmability makes it possible to adapt the structure to the problem (especially the degree of parallelism, within the limit of available gates). A high-density FPGA provided by Altera or Xilinx, for example, includes more than 1 million gates as well as embedded 32-bit processor cores.3

An important step in implementing this system is to build up the hardware accelerator code, which is located in the programmable logic storage of the FPGA. We found that memory-mapped communication gives the best performance and lowest cost. If the system is intended to use FPGA structures for real-time signal processing, algorithms that are suitable for parallel processing should be used. The degree of parallelism is determined by the amount of hardware resources (gates, slices, and modules), so algorithms have to be adapted to these hardware resources for fast operation. In addition, the use of programmable hardware and software allows an early system test without increasing hardware costs, which means the same platform can be used from prototype to full-scale implementation.

In addition to the sensor system and other hardware and networking components, low- and high-level image-processing algorithms are needed to enable the traffic-management system to operate intelligently. The performance of the system depends on the reliable recognition of all road users, their adequate description, and the knowledge of how to combine these parameters with information from other sources to solve the problems of traffic management. In our institute a number of algorithms have already been developed, implemented, and tested to account for changes in weather, lighting, and traffic-density conditions by varying camera positions, geometrical resolutions, and viewing directions.

Sensor-data fusion

One problem we have specifically addressed is sensor-data fusion. Analyzing a traffic scene often requires observing the scene from different viewpoints using two or more cameras. In this case it is useful to know the spatial relationship between the camera sensors. Knowing the mutual geometric position can help an image-processing algorithm track an object in the images. One kind of linking or fusion of different camera images is made between two optical area sensors working in the visible spectral range (VIS sensors). The spatial relation between the camera sensors can be determined by using epipolar geometry, which supplies a method to map an image point from one image onto an epipolar line in the other image (see Fig. 2).4 Because this calculation is not unique (especially when it is performed with algorithms such as RANSAC, or Random Sample Consensus), one can find two appropriate solutions whose resulting lines intersect, and that intersection leads to a direct point-to-point mapping. This mapping can be calculated pair-wise for each camera sensor that is used.

To measure distances in merged images, at least one of the cameras has to be calibrated by estimating the intrinsic parameters (geometric resolution, focal length) and the extrinsic ones (translation and rotation in world coordinates; see Fig. 3). Because of its higher spatial resolution, normally the VIS camera is calibrated and the thermal-infrared (TIR) image is rectified; that is, the infrared image is adjusted so that it looks as though it were taken from the same position as the VIS camera. If control points in the VIS and TIR images are available, this can be done by a nearly affine transformation.
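The point-to-point mapping by intersecting two epipolar lines can be sketched as follows. This is a minimal illustration, not the implementation used in the system: it assumes the two solutions are available as 3x3 fundamental matrices (here called `F_a` and `F_b`, both hypothetical names), so that a homogeneous image point x in the first image maps to the line F x in the second. The demonstration data are synthetic and assume that the observed points lie on a plane related by a homography `H`, a situation in which the fundamental matrix is not uniquely determined.

```python
import numpy as np


def epipolar_line(F, point_xy):
    """Map a pixel (x, y) of image 1 to its epipolar line (a, b, c) in image 2,
    where the line is the set of points with a*x + b*y + c = 0."""
    x = np.array([point_xy[0], point_xy[1], 1.0])
    return F @ x


def intersect_lines(line_a, line_b, eps=1e-12):
    """Intersect two homogeneous lines; return (x, y), or None if the lines
    are degenerate or (nearly) parallel."""
    norm_a, norm_b = np.linalg.norm(line_a), np.linalg.norm(line_b)
    if norm_a == 0.0 or norm_b == 0.0:
        return None
    # The cross product of two homogeneous lines is their intersection point.
    p = np.cross(line_a / norm_a, line_b / norm_b)
    if abs(p[2]) < eps:
        return None
    return p[0] / p[2], p[1] / p[2]


def map_point(F_a, F_b, point_xy):
    """Point-to-point mapping from the intersection of two epipolar lines."""
    return intersect_lines(epipolar_line(F_a, point_xy),
                           epipolar_line(F_b, point_xy))


def skew(v):
    """Skew-symmetric matrix [v]_x, so that skew(v) @ w == np.cross(v, w)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])


# Synthetic example (assumption): every matrix of the form skew(s) @ H
# satisfies the epipolar constraint for points related by the homography H,
# so two different choices of s give two valid but different solutions.
H = np.array([[1.0, 0.1, 5.0],
              [0.0, 0.9, -3.0],
              [0.0, 0.0, 1.0]])
F_a = skew([1.0, 2.0, 1.0]) @ H
F_b = skew([-2.0, 1.0, 1.0]) @ H

print(map_point(F_a, F_b, (100.0, 50.0)))  # close to (110, 42), i.e. H applied to the point
```

The mapping becomes unreliable when the two lines are nearly parallel, which is why the sketch returns `None` in that case rather than an unstable coordinate.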

Another problem our system addresses is calculating quantities that characterize traffic flow, the so-called "traffic active area." Object movement in urban scenarios is not a random process. In fact, it is strongly restricted by the structure of the environment. Objects move within predefined areas (streets and sidewalks), avoid obstacles, and are affected by traffic regulations. Although the movement of pedestrians is less restricted than that of vehicles, pedestrians tend to restrict themselves in the way they choose their paths. Even in public spaces where people are free to walk anywhere, most people follow invisible routes that are the optimal connections between their points of interest. These routes are subconsciously chosen in the same way by most individuals (see Fig. 4).

As a result, the direction of motion at every position is limited to just a few options. The same applies to the speed of motion, but here there are fewer restrictions. Observing such a scene with a rigid camera, one would observe the existence of one or more motion vectors for every image pixel, describing the typical object motions for this particular point. It is possible to retrieve this information for every point of the scene automatically, based on low-level features of the images. The result is a valuable description of the motion situation in the scene. Furthermore, information on the structure of the scene can be derived, and this information can be used to identify and qualify regions of interest (such as lanes) and their properties (such as driving directions and speeds).
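One way to picture the per-pixel description of typical motion is a direction histogram accumulated over an image sequence. The sketch below is an assumption-laden illustration rather than the method used in the system: it presumes that a per-pixel motion field (dx, dy) is already available for each frame from some low-level feature, and it quantizes directions into a fixed number of bins. The function names, the bin count, and the thresholds are hypothetical.

```python
import numpy as np


def accumulate_directions(flows, n_bins=8, min_speed=0.5):
    """Count, for every pixel, how often motion in each direction bin occurred.

    flows: iterable of (height, width, 2) arrays holding per-pixel (dx, dy).
    Returns an integer array of shape (height, width, n_bins), or None if
    `flows` is empty. Bin 0 is centered on the +x direction.
    """
    hist = None
    for flow in flows:
        flow = np.asarray(flow, dtype=float)
        if hist is None:
            hist = np.zeros(flow.shape[:2] + (n_bins,), dtype=np.int64)
        speed = np.hypot(flow[..., 0], flow[..., 1])
        angle = np.arctan2(flow[..., 1], flow[..., 0])  # range [-pi, pi]
        bins = np.rint(angle / (2.0 * np.pi / n_bins)).astype(int) % n_bins
        moving = speed >= min_speed  # ignore pixels that are (nearly) static
        rows, cols = np.nonzero(moving)
        np.add.at(hist, (rows, cols, bins[moving]), 1)
    return hist


def typical_directions(hist, min_share=0.25):
    """Mark the direction bins that hold at least `min_share` of all motion
    observed at a pixel; pixels without any observed motion get no bins."""
    total = hist.sum(axis=-1, keepdims=True)
    share = np.divide(hist, total,
                      out=np.zeros(hist.shape, dtype=float),
                      where=total > 0)
    return share >= min_share


# Hypothetical 2x4 scene: left half moves in +x, right half in +y,
# and one pixel never moves.
flow = np.zeros((2, 4, 2))
flow[:, :2, 0] = 1.0
flow[:, 2:, 1] = 1.0
flow[1, 3] = 0.0
hist = accumulate_directions([flow] * 10)
mask = typical_directions(hist)
print(np.flatnonzero(mask[0, 0]), np.flatnonzero(mask[0, 3]))
```

Pixels whose mask contains the same few bins over a connected region would be candidates for a lane with a common driving direction; a speed histogram could be accumulated in the same way.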

Our work at Humboldt-Universität zu Berlin has demonstrated that using an optical sensor system for traffic monitoring enables real-time surveillance of large areas. The use of complementary sensors provides a variety of images that can be processed to extract features and to track all kinds of objects. Future developments should deal with scalable procedures for time-consuming tasks and with methods for optimal partitioning of hardware and software implementation of the developed algorithms.

REFERENCES

1. R.D. Kühne, R.-P. Schäfer, J. Mikat, K.-U. Thiessenhusen, U. Böttger, and S. Lorkowski, Proc. 10th IFAC (Int'l. Fed. of Automatic Control) Symp. on Control in Transportation Systems, Tokyo, Japan (2003).

2. S. Lorkowski, P. Mieth, R.-P. Schäfer, CTRI Young Researcher Seminar, Den Haag (NL), Nov. 11-13, 2005.

3. Xilinx Corp., www.xilinx.com/publications/xcellonline/partners/xc_pdf/xc_nuvation43.pdf (2005).

4. Z. Zhang, Int'l. J. Computer Vision 27, 2, 161, Kluwer Academic Publishers, Boston (1998).

Beate Meffert is head of the signal-processing and pattern-recognition group at the Institute of Computer Science, Humboldt-Universität zu Berlin; Roman Blaschek and Uwe Knauer are graduate students at Humboldt-Universität zu Berlin, Unter den Linden 6, 10099 Berlin, Germany; e-mail [email protected].