OPTICAL COMMUNICATIONS: Are 100G networks ready for prime time?

The ever-increasing need for higher-bandwidth and higher-speed optical data and communications transmission is driving the development of 100 gigabit per second (Gbit/s), or simply 100G, technology. While much of today's network traffic is encoded at 1 Gbit/s or 10 Gbit/s, 40 Gbit/s networks are already being installed; in fact, Verizon just demonstrated successful 100 Gbit/s optical data transmission on existing optical fibers that were originally conditioned for 10 Gbit/s service.

But while much of the buzz at communications conferences surrounds the technology developments and standards activities necessary for the future implementation of 100 Gbit/s networks, many are asking: "Why can't I have 100G today?" The answer may lie in the efforts of several industry groups that are exploring whether 100G networks are possible using existing fiber infrastructures and whether unique optical or electronic tricks can bridge the gap from 10G to 40G to 100G systems.

Defining 100G

Technology for 100G systems includes both 100G transport and 100G Ethernet.1 The 100G transport side typically refers to long-distance communication links, with metro, regional, and long-haul dense-wavelength-division-multiplexing (DWDM) networks sending data at 100 Gbit/s over thousands of kilometers of existing 10G or 40G infrastructure; standards are being established by groups such as the Optical Internetworking Forum (OIF; www.oiforum.com). By contrast, 100G Ethernet moves data between routers, servers, and switches in data-center environments over shorter distances of up to 40 km, with standards in development at the IEEE (www.ieee.org). Although the two technologies involve different optical components and network architectures, both are necessary for faster computer connectivity, speedier data transport, and increased bandwidth.

Who wants it and when?

In 2008, Qwest Communications (Denver, CO) CTO Pieter Poll said that Qwest's Internet traffic was doubling every 16 months. Poll is not alone in his desire for a 100G Ethernet standard now. Facebook (Palo Alto, CA) network architect Donn Lee says that his company needs 100 Gbit/s core switching just to keep up with the millions of new Facebook users being added each week to the current 300 million active users. Although Facebook continues to fill the gap by adding multiples of 10G Ethernet ports connected by fiber, it will need 50 to 100 terabytes in its data centers to meet projected traffic needs in 2010. "Compute machines in data centers can push a nearly infinite amount of bandwidth. These are just estimates of how much might be adequate," says Lee. "Today's challenge is to build more, a lot more, within the envelope of current technology while controlling complexity."
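To put the growth figures on a common footing, the short Python sketch below converts between a doubling time and an annual growth rate. It assumes smooth exponential growth, which is a simplification; the function names are illustrative and not taken from any source quoted here. The 16-month doubling is the Qwest figure above, and the 50% per year value is the carrier growth rate cited later in this article.

```python
import math


def annual_growth_from_doubling(months_to_double: float) -> float:
    """Fractional growth per year implied by a doubling time in months."""
    return 2 ** (12.0 / months_to_double) - 1.0


def doubling_months_from_annual_growth(annual_growth: float) -> float:
    """Months needed for traffic to double at a fractional growth per year."""
    return 12.0 * math.log(2.0) / math.log(1.0 + annual_growth)


# Qwest: traffic doubling every 16 months (assumes steady exponential growth).
qwest_growth = annual_growth_from_doubling(16)

# Carriers: 50% growth per year, expressed as a doubling time.
carrier_doubling = doubling_months_from_annual_growth(0.50)

print(f"16-month doubling is about {qwest_growth:.0%} growth per year")
print(f"50% per year doubles traffic in about {carrier_doubling:.1f} months")
```

Under these assumptions, a 16-month doubling time works out to roughly 68% growth per year, while 50% per year corresponds to doubling about every 20.5 months.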

At least one institution has not only demanded 100G, but is actually implementing it. The New York Stock Exchange (NYSE) Euronext, which traditionally used 10 Gbit/s service-provider connections, was driven by its increased network traffic to look at new alternatives. Andy Bach, senior vice president and global head of communications for NYSE Euronext, was recently quoted in Telecom Magazine as saying, "He who connects the order faster gets the order, so anything we can do to speed that up is a good thing."2 Forgoing the traditional carrier services route, NYSE Euronext is building two network centers. These two data centers, which will be network-live but not ready for servers initially, were to be completed by November 2009, and will support 100 Gbit/s.

"The Amsterdam Internet Exchange [AMS-IX] has shown that their peak bandwidth requirement has reached 600 Gbit/s, and it was recently announced that there is as much as a zetabyte of data in transit in the Internet," says Brad Booth, director of technology for AppliedMicro (www.appliedmicro.com) and chairman of the board of the Ethernet Alliance (Mountain View, CA; www.ethernetalliance.org), a not-for-profit consortium committed to the success and expansion of Ethernet technology. "At the highest point of aggregation within the data centers and carrier networks, there is a growing need for equipment to operate at 100G or faster speeds to prevent bottlenecks. The IEEE P802.3ba Task Force is writing the first 100G Ethernet standard, and the Ethernet Alliance members champion activities that help those standards discussions along and bring the standard to life," says Booth. "It's our job through the standards bodies and the Ethernet Alliance to make sure that bottleneck is broken."

Booth says that although current 10G Ethernet can be supported by copper cabling up to 100 m, multimode and single-mode fibers are required for 100G Ethernet to achieve this and longer reaches. Current work in 100G Ethernet is considering four wavelengths operating at 25 Gbit/s each over single-mode fiber, although single-wavelength 100 Gbit/s Ethernet is under discussion in the hallways and back rooms. The IEEE P802.3ba draft standard is now in Phase II (working group ballot), with a goal to move to its final phase in November for an anticipated 100G Ethernet standard ratified by June 2010.

The 100G hurdle—technology versus cost

"Commercial network operators cite a long-term trend of traffic growth at rates of 50% per year or higher," says Joe Berthold, vice president of network architecture at Ciena and former president of the OIF who recently drafted a "100G [ultra-long-haul] ULH DWDM Project Overview" document for OIF and its members. The document's motivation is to "develop a consensus among a critical mass of module and system vendors on the requirements for specific 100G technology elements so as to create a larger market for these components. Such a consensus will improve the business case for the required base technology investments."

Berthold says that the 100G guidelines require that the optical channel spacing in DWDM networks, typically 50 GHz, be maintained. In addition, the 100G upgrades need to function with currently deployed systems, without requiring additional fibers or new common equipment, such as optical line amplifiers. So contrary to the desires of optical-fiber manufacturers, who would like to see a robust market for new fiber installations, single-mode fibers originally designed to handle 10G data streams can indeed handle 100G transport under the OIF recommended guidelines. He says the system requires a more spectrally efficient modulation format than the typical differential phase-shift keying (DPSK) method used for 40G networks; even though optical techniques can compensate for chromatic dispersion (CD) and polarization mode dispersion (PMD) in these systems, the compensation costs are too high.

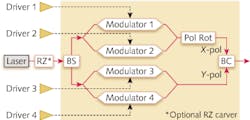

The proposed modulation format from the OIF is dual-polarization quadrature phase-shift keying (DP QPSK), in which the beam from the laser transmitter is split into two orthogonal polarization states, paired with a coherent receiver. A coherent receiver preserves the phase information of the optical signal, meaning that an electronic equalizer can be used to recover both polarizations and to compensate for a number of signal impairments, including CD and PMD. The method, however, means that a DP QPSK transmitter module requires four modulators rather than the single modulator used in a 10G transmitter (see Fig. 1). "Even though our modulation scheme increases the number of signal components by a factor of four, economies of scale brought forth by integrated component technologies will eventually make this method cost-effective," says Berthold. "Manufacturers choosing an 'integrated' manufacturing approach will win in the long term. Because first-generation 100G doesn't make use of these integrated components, we see cost parity of ten 10G systems with 100G systems in 2012."
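The arithmetic behind the DP QPSK choice can be sketched in a few lines of Python. This is a back-of-the-envelope illustration, not an OIF calculation: it ignores FEC and framing overhead, assumes a 10G channel uses simple on/off keying at one bit per symbol, and uses hypothetical function names. QPSK carries two bits per symbol, and sending it on two polarizations doubles that to four bits per symbol.

```python
def symbol_rate_gbaud(bit_rate_gbps: float, bits_per_symbol: int,
                      polarizations: int) -> float:
    """Symbol rate needed to carry a bit rate (overhead ignored)."""
    return bit_rate_gbps / (bits_per_symbol * polarizations)


def spectral_efficiency(bit_rate_gbps: float, channel_spacing_ghz: float) -> float:
    """Bits per second carried per hertz of DWDM channel spacing."""
    return bit_rate_gbps / channel_spacing_ghz


QPSK_BITS_PER_SYMBOL = 2      # four phase states encode two bits
CHANNEL_SPACING_GHZ = 50.0    # typical DWDM grid cited in the article

# 100 Gbit/s with DP QPSK: two bits per symbol on each of two polarizations.
dp_qpsk_baud = symbol_rate_gbaud(100.0, QPSK_BITS_PER_SYMBOL, polarizations=2)

# 100 Gbit/s with one bit per symbol on one polarization (assumed baseline).
binary_baud = symbol_rate_gbaud(100.0, 1, polarizations=1)

# One in-phase and one quadrature modulator per polarization.
modulators = 2 * 2

se_10g = spectral_efficiency(10.0, CHANNEL_SPACING_GHZ)
se_100g = spectral_efficiency(100.0, CHANNEL_SPACING_GHZ)

print(f"DP QPSK symbol rate: {dp_qpsk_baud:.0f} Gbaud (vs {binary_baud:.0f} Gbaud binary)")
print(f"Modulators per transmitter: {modulators}")
print(f"Spectral efficiency: {se_10g:.1f} -> {se_100g:.1f} bit/s/Hz on a 50 GHz grid")
```

Under these assumptions the optics and electronics run at about 25 Gbaud instead of 100 Gbaud, which is what lets a 100G channel fit within the existing 50 GHz spacing, at the price of the four modulators noted above.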

Simon Poole, director of new business ventures at Finisar (Sunnyvale, CA), sees 100G system deployments being at least 18 months away. "Even though there are some early deployments, no one has really nailed the electronic signal processing required for the receiver side, and 100G transceivers are just not at a price point that makes 100G installation cost-effective right now." Indeed, Poole has hit on an issue with the receiver recommendations from the OIF; namely, the forward-error-correction (FEC) designs needed to function properly in the 100G DWDM network are running into theoretical limits. In response, the OIF is working on a new project: "Forward Error Correction for 100G DP-QPSK LH Communication."

While the FEC issues are too complicated for this article, Karl Gass, vice chair of the OIF Physical Layer Group, says that the OIF will continue to guide network carriers and component manufacturers with its project documents, noting that the primary goal of the OIF is to create Implementation Agreements developed cooperatively by end-users, service providers, equipment vendors, and technology providers that are aligned with worldwide standards organizations such as the International Telecommunication Union (ITU; Geneva, Switzerland). The OIF industry members work together to develop these Implementation Agreements for both hardware and software. "If you're going to implement a security protocol, for example, carriers can work with each other to develop an interoperability standard so that different networks use the same protocol," Gass says.

Creating a test bed

Ralph notes that advances in the optical-networking space require a multidisciplinary team with expertise in optics/photonics, silicon electronics, and signal processing. "At the GaTech Networking consortium we bring together students, faculty, and industry personnel to help define next-generation optical networking technologies."

Ralph credits the joint academic and industry structure of the Georgia Tech 100G Networking Consortium as the reason for the successful creation of the test bed (see Fig. 2). "Having this research conducted at a reputable and business-neutral institution like Georgia Tech has allowed us to develop a test bed for transmission at 100 Gbit/s per wavelength with minimum cost," he says. "This is due to multiple donations in equipment and components from the participants, which would not have been possible if we were to conduct this experimental research at any of the member companies. Having to purchase all the components (fiber, optical amplifiers, wavelength-selective switches, laser sources, modulators, balanced receivers) required for the 100G test bed would have increased the cost of this research significantly." The Consortium also benefits from support of Georgia Tech and the Georgia Research Alliance (www.gra.org).

The Consortium is exploring a variety of modulation formats, network environments, and fiber types using simulation tools and the experimental test-bed hardware. Recently, the performance of 112 Gbit/s differential quadrature phase-shift keying (DQPSK) was quantified on a range of network configurations representing different dispersion compensation per span. Modulation techniques such as DQPSK improve resilience to impairments encountered in older fiber and are an integral feature of some currently available products like Sierra Monolithics' (Redondo Beach, CA) Delta chipset (SMI4025/SMI4035). "Optimum and robust dispersion maps have been identified and these results have been supported by accurate simulations," says Ralph. "Also in simulation we have quantified the robustness of alternative formats that may enable us to demonstrate 448 Gbit/s per wavelength. Current investigations focus on coherent detection with receiver-based digital signal processing for impairment mitigation and signal recovery."
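For readers unfamiliar with DQPSK, the idea is that each pair of bits is carried by the change in phase between consecutive symbols rather than by the absolute phase. The Python sketch below is a toy illustration only: it is not the Consortium's simulation code or any vendor's implementation, it models phases as ideal quarter-turn steps with no noise or dispersion, and the Gray-coded bit mapping is an assumed convention.

```python
# Assumed Gray-coded mapping from bit pairs to phase steps, in units of 90 degrees.
DIBIT_TO_STEP = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}
STEP_TO_DIBIT = {step: dibit for dibit, step in DIBIT_TO_STEP.items()}


def dqpsk_encode(bits, start_quadrant=0):
    """Return the absolute phase quadrant (0 to 3) of each transmitted symbol."""
    if len(bits) % 2:
        raise ValueError("DQPSK needs an even number of bits")
    quadrants = [start_quadrant]  # reference symbol carries no data
    for i in range(0, len(bits), 2):
        step = DIBIT_TO_STEP[(bits[i], bits[i + 1])]
        quadrants.append((quadrants[-1] + step) % 4)
    return quadrants


def dqpsk_decode(quadrants):
    """Recover bits from the phase difference between neighboring symbols."""
    bits = []
    for previous, current in zip(quadrants, quadrants[1:]):
        bits.extend(STEP_TO_DIBIT[(current - previous) % 4])
    return bits


payload = [0, 1, 1, 1, 1, 0, 0, 0]
transmitted = dqpsk_encode(payload)

# A constant, unknown phase offset on the link does not change the differences.
rotated = [(q + 3) % 4 for q in transmitted]
recovered = dqpsk_decode(rotated)
print(recovered == payload)
```

Because only phase differences matter, the receiver in this sketch does not need an absolute phase reference. At two bits per symbol, a 112 Gbit/s DQPSK signal on a single polarization corresponds to 56 Gbaud.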

REFERENCES

1. Dave Parks, "100G vs. 100G Ethernet," xchange magazine online blog at www.xchangemag.com/blogs/parks/blogdefault.aspx (August 5, 2009).

2. Sean Buckley, "NYSE Euronext: network latency is not an option," Telecom Magazine online, www.telecommagazine.com/article.asp?HH_ID=AR_5397 (June 18, 2009).

About the Author

Gail Overton

Senior Editor (2004-2020)

Gail has more than 30 years of engineering, marketing, product management, and editorial experience in the photonics and optical communications industry. Before joining the staff at Laser Focus World in 2004, she held many product management and product marketing roles in the fiber-optics industry, most notably at Hughes (El Segundo, CA), GTE Labs (Waltham, MA), Corning (Corning, NY), Photon Kinetics (Beaverton, OR), and Newport Corporation (Irvine, CA). During her marketing career, Gail published articles in WDM Solutions and Sensors magazine and traveled internationally to conduct product and sales training. Gail received her BS degree in physics, with an emphasis in optics, from San Diego State University in San Diego, CA in May 1986.