Cornell researchers invent alternate approach to Lytro’s “light-field” camera technology

Ithaca, NY--Researchers at Cornell University have invented a camera whose digital images can be refocused at any time after they’re taken. While its results resemble those of the "light-field" camera now sold commercially by Lytro (Mountain View, CA), the Cornell camera works on a different principle.

A group led by Alyosha Molnar is building image sensors that give detailed readouts of both the intensity and the incidence angle of light as it strikes the sensor. The technique is applicable to 3-D cameras, as well as cameras that can focus photos after they're taken.

Lytro is marketing a camera that employs similar light-field technology, but Molnar thinks Cornell's technology may prove better in many applications. Molnar's methods provide angular information in a pre-compressed format and allow for depth mapping with minimal computation. This could be especially good for video, which generates very large files, says Molnar.

Stacked diffraction gratings

To measure the all-important incidence angle, Molnar and graduate student Albert Wang invented a uniquely designed pixel for a standard CMOS (complementary metal-oxide semiconductor) image sensor. Each 7-micron pixel, an array of which is fabricated on a CMOS chip, is made of a photodiode under a pair of stacked diffraction gratings.

When light strikes the pixel, the top grating lets some of it through, creating a diffraction pattern. Changing the incidence angle of the light changes how this pattern lines up with the second grating, and therefore how much light gets through to the photodiode below. All of this can be done in a standard CMOS manufacturing process, at no added cost.

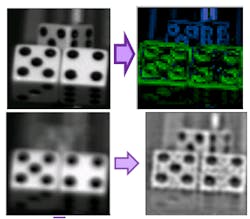
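The angle dependence described above can be sketched numerically. The snippet below is a minimal illustration, not the researchers' own model: it assumes an idealized pixel whose output varies sinusoidally with incidence angle, and the function name `asp_response` and the parameters `beta` (angular sensitivity), `m` (modulation depth), and `alpha` (phase offset set by the relative shift of the two gratings) are hypothetical values chosen for illustration.

```python
import math

def asp_response(theta_rad, intensity=1.0, beta=12.0, m=0.4, alpha=0.0):
    """Idealized output of one angle-sensitive pixel.

    Assumed model (not stated in the article):
        output = intensity * (1 + m * cos(beta * theta + alpha))
    beta, m, and alpha are illustrative values, not measured ones.
    """
    return intensity * (1.0 + m * math.cos(beta * theta_rad + alpha))

# Sweep the incidence angle for one hypothetical pixel at fixed brightness.
for deg in range(-10, 11, 5):
    out = asp_response(math.radians(deg))
    print(f"{deg:+3d} deg -> {out:.3f}")
```

Under this assumption, the same scene brightness produces a different reading at each incidence angle, which is the property the stacked gratings are meant to provide.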

All the pixels, each of which records a slightly different angle, together produce an image containing both angular and spatial information. This information can be analyzed via a Fourier transform, extracting the depth of objects in an image as well as allowing for computational refocusing. So far, with the help of a standard Nikon camera lens, their chip can capture a 150,000-pixel image. This low pixel count can be raised by building larger chips.
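To see how a group of pixels with different angular responses can carry both brightness and angle, consider the simplified sketch below. It is not the Fourier analysis the researchers describe. It assumes four hypothetical pixels with sinusoidal responses offset by a quarter period each, and recovers the local intensity and incidence angle from their readings. The constants `BETA` and `M` and the function names are assumptions made for illustration.

```python
import math

BETA = 12.0  # assumed angular sensitivity (phase radians per radian of angle)
M = 0.4      # assumed modulation depth
OFFSETS = (0.0, math.pi / 2, math.pi, 3 * math.pi / 2)  # assumed pixel phases

def pixel_reading(theta, intensity, alpha):
    """Idealized reading of one pixel with phase offset alpha (assumed model)."""
    return intensity * (1.0 + M * math.cos(BETA * theta + alpha))

def estimate_intensity_and_angle(readings):
    """Recover intensity and angle from four quadrature readings.

    Unambiguous only while |BETA * theta| < pi.
    """
    r0, r90, r180, r270 = readings
    intensity = (r0 + r90 + r180 + r270) / 4.0  # modulation terms cancel
    cos_part = (r0 - r180) / 2.0                # intensity * M * cos(BETA*theta)
    sin_part = (r270 - r90) / 2.0               # intensity * M * sin(BETA*theta)
    theta = math.atan2(sin_part, cos_part) / BETA
    return intensity, theta

# Simulate light of brightness 2.5 arriving at 0.1 rad, then invert the readings.
readings = [pixel_reading(0.1, 2.5, a) for a in OFFSETS]
print(estimate_intensity_and_angle(readings))
```

Summing the four readings cancels the angle-dependent terms and leaves the brightness, while the pairwise differences isolate the angle. The estimate wraps around once the angle leaves the range set by `BETA`.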

Molnar presented the latest design of an angle-sensitive pixel for image capture at the IEEE International Electron Devices Meeting (Washington, DC) late last year, and he detailed the math behind the device at SPIE's Photonics West 2012 (San Francisco, CA).

About the Author

John Wallace

Senior Technical Editor (1998-2022)

John Wallace was with Laser Focus World for nearly 25 years, retiring in late June 2022. He obtained a bachelor's degree in mechanical engineering and physics at Rutgers University and a master's in optical engineering at the University of Rochester. Before becoming an editor, John worked as an engineer at RCA, Exxon, Eastman Kodak, and GCA Corporation.