Herke Jan Noordmans, Pieter van den Biesen, Rowland de Roode, and Rudolf Verdaasdonk

Looking for differences between two highly similar drawings may be a pleasant childhood pastime, but looking for differences between many highly complex images can be tedious, not to mention strenuous. A common way to bring out differences between images is to subtract one image from the next, or to alternate the images in sequence so that changes are perceived as movement, a task the human eye is good at. This works for images taken in a lab environment, where the object and the camera have fixed positions, as in the familiar time-lapse movies of growing plants and flowers. However, when the camera is not fixed or the object moves, this technique fails. In medical photos that follow the progression of a disease or the effect of a treatment, successive images usually suffer from motion artifacts so severe that they prohibit image subtraction or image alternation.
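As a minimal sketch of these two inspection techniques, assuming grayscale images stored as equally sized nested Python lists (the helper names `difference_image` and `alternate` are hypothetical), subtraction and alternation can be written as:

```python
from itertools import cycle, islice

Image = list[list[float]]


def difference_image(a: Image, b: Image) -> Image:
    """Pixel-wise absolute difference; bright pixels mark changes."""
    return [[abs(pa - pb) for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def alternate(a: Image, b: Image, n_frames: int) -> list[Image]:
    """Frame sequence that flips between the two images: a, b, a, b, ..."""
    return list(islice(cycle([a, b]), n_frames))


before = [[10, 10], [10, 10]]
after = [[10, 40], [10, 10]]
print(difference_image(before, after))  # [[0, 30], [0, 0]]
```

Both only work once the images are aligned: with a moving camera, every displaced edge also lights up in the difference image.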

To correct these motion errors, a typical approach is to use a so-called registration algorithm. Such an algorithm compares a sequence of “floating” images to a reference image, calculating the image difference for different positions of the floating image. The floating image is said to match the reference image at the position with the lowest image difference. The program then presents the result to the user, who verifies or rejects the match. If the match fails, the user must adjust the parameters of the matching algorithm and rerun it.
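The search described above can be sketched, for the simplest case of pure translation, as an exhaustive search over integer shifts that keeps the shift with the lowest mean squared difference. This is an illustrative assumption, not the authors' algorithm: practical registration methods use other difference measures, sub-pixel transforms, and iterative optimizers, and the function names here are hypothetical.

```python
Image = list[list[float]]


def mean_squared_difference(ref: Image, flt: Image, dx: int, dy: int) -> float:
    """Mean squared difference over the pixels where the floating image,
    shifted by (dx, dy), overlaps the reference image."""
    total, count = 0.0, 0
    for y in range(len(ref)):
        for x in range(len(ref[0])):
            fy, fx = y - dy, x - dx  # floating pixel that lands on (x, y)
            if 0 <= fy < len(flt) and 0 <= fx < len(flt[0]):
                diff = ref[y][x] - flt[fy][fx]
                total += diff * diff
                count += 1
    return total / count if count else float("inf")


def register_translation(ref: Image, flt: Image, max_shift: int) -> tuple[int, int]:
    """Try every shift within +/- max_shift and keep the one with the
    lowest image difference (exhaustive search, translation only)."""
    shifts = [(dx, dy)
              for dy in range(-max_shift, max_shift + 1)
              for dx in range(-max_shift, max_shift + 1)]
    return min(shifts, key=lambda s: mean_squared_difference(ref, flt, *s))


ref = [[x + 10 * y for x in range(6)] for y in range(6)]
# Floating image: the same scene displaced 2 pixels left and 1 pixel up.
flt = [[ref[y + 1][x + 2] if y + 1 < 6 and x + 2 < 6 else 0 for x in range(6)]
       for y in range(6)]
print(register_translation(ref, flt, max_shift=3))  # (2, 1)
```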

This approach works well for images of low complexity that are simple to interpret, such as Earth-observation images or neuroscans. However, when image complexity increases, for example when the image contains many repetitive patterns, the algorithm often stops too early because it arrives at a “local minimum” in parameter space. To escape it, the algorithm must first work through a region of higher differences before it reaches the true minimum, which requires lengthy processing.
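A toy example makes the local-minimum problem concrete. The sketch below assumes a hypothetical one-dimensional signal with a repetitive pattern (period 6) plus one distinctive feature, circular shifts, and a sum-of-squared-differences cost. A greedy search that only steps to a better neighboring shift stops one period away from the true shift, whereas an exhaustive search finds it. The signal and function names are illustrative assumptions.

```python
def ssd_circular(ref: list[int], flt: list[int], d: int) -> int:
    """Sum of squared differences between ref and flt circularly shifted by d."""
    n = len(ref)
    return sum((ref[i] - flt[(i + d) % n]) ** 2 for i in range(n))


def greedy_search(ref: list[int], flt: list[int], start: int) -> int:
    """Step to the better neighboring shift until neither neighbor improves."""
    n, d = len(ref), start
    while True:
        best = min(((d - 1) % n, (d + 1) % n), key=lambda s: ssd_circular(ref, flt, s))
        if ssd_circular(ref, flt, best) >= ssd_circular(ref, flt, d):
            return d  # local minimum: no neighbor is better
        d = best


n, true_shift = 60, 10
ref = [1 if i % 6 == 2 else 0 for i in range(n)]  # repetitive pattern, period 6
ref[28] += 5                                      # one distinctive feature
flt = [ref[(j - true_shift) % n] for j in range(n)]

print(greedy_search(ref, flt, start=17))                       # 16: stuck one period off
print(min(range(n), key=lambda d: ssd_circular(ref, flt, d)))  # 10: the true shift
```

Exhaustive search only works here because the parameter space is tiny; with the many parameters of a real transformation it becomes prohibitively slow, which is where a good starting position pays off.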

User-assisted matching

To overcome this time-consuming process of parameter tuning and rerunning the registration algorithm, we developed a simple, intuitive, yet advanced match program that efficiently combines the accuracy and speed of a computer with the user's skill at recognizing important features in the image. The program takes advantage of a user's ability to see quickly when a match is headed in the wrong direction.

To start, the user loads the reference and the floating image. The images are overlaid at 50% transparency so that both can be viewed simultaneously. The user can interactively position, rotate, and scale the floating image over the reference image to provide a good starting position for the subsequent automatic step.
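A minimal sketch of the two ingredients of this step, assuming grayscale images as nested lists: a 50% alpha blend for the overlay, and a 3x3 homogeneous matrix that captures the user's placement, rotation, and scaling. The function names and the order of operations (scale and rotate about the origin, then translate) are assumptions, not the program's actual interface.

```python
import math

Image = list[list[float]]
Matrix = list[list[float]]


def overlay(ref: Image, flt: Image, alpha: float = 0.5) -> Image:
    """Blend the floating image over the reference (alpha 0.5 = 50% transparency)."""
    return [[(1 - alpha) * r + alpha * f for r, f in zip(row_r, row_f)]
            for row_r, row_f in zip(ref, flt)]


def similarity_matrix(tx: float, ty: float, angle_deg: float, scale: float) -> Matrix:
    """Homogeneous matrix for the user's starting pose: scale and rotate
    about the origin, then translate by (tx, ty)."""
    a = math.radians(angle_deg)
    c, s = scale * math.cos(a), scale * math.sin(a)
    return [[c, -s, tx],
            [s, c, ty],
            [0.0, 0.0, 1.0]]


def apply(m: Matrix, x: float, y: float) -> tuple[float, float]:
    """Map the point (x, y) through the homogeneous matrix m."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])
```

For example, `overlay([[0, 100]], [[100, 100]])` gives `[[50.0, 100.0]]`, and `apply(similarity_matrix(5, 0, 90, 2), 1, 0)` maps the point (1, 0) to (5.0, 2.0), up to floating-point rounding.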

Next, the user starts an “affine transformation matrix” function that automatically matches the floating image to the reference. This function translates, rotates, zooms, and slants (shears) the floating image to fit it to the reference image. The graphics processing unit (GPU) performs the drawing and resampling operations, taking on average two to four seconds for 1-megapixel images. If the match appears to go in the wrong direction, the user can try another starting position, size, or orientation to redirect the affine step. The final matched result can then be inspected by image subtraction or image alternation.
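The resampling that the GPU performs can be sketched on the CPU with inverse mapping: for every output pixel, a 2x3 affine matrix gives the corresponding source location in the floating image, and the value there is interpolated bilinearly. The matrix convention, bilinear interpolation, and zero fill outside the image are assumptions; the article does not say which interpolation the program uses, and the optimization that finds the matrix is not shown.

```python
Image = list[list[float]]


def bilinear(img: Image, x: float, y: float) -> float:
    """Bilinearly interpolate img at (x, y); returns 0 outside the image."""
    h, w = len(img), len(img[0])
    if not (0 <= x <= w - 1 and 0 <= y <= h - 1):
        return 0.0
    x0, y0 = int(x), int(y)  # floor, since x and y are non-negative here
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * img[y0][x0] + fx * img[y0][x1]
    bottom = (1 - fx) * img[y1][x0] + fx * img[y1][x1]
    return (1 - fy) * top + fy * bottom


def warp_affine(img: Image, a: list[list[float]], out_h: int, out_w: int) -> Image:
    """Resample img under a 2x3 affine matrix a that maps each output pixel
    (x, y) back to its source location in img (inverse mapping)."""
    out = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            sx = a[0][0] * x + a[0][1] * y + a[0][2]
            sy = a[1][0] * x + a[1][1] * y + a[1][2]
            row.append(bilinear(img, sx, sy))
        out.append(row)
    return out
```

For example, `warp_affine([[0, 10], [20, 30]], [[1, 0, 0.5], [0, 1, 0.5]], 1, 1)` samples halfway between all four pixels and returns `[[15.0]]`. Inverse mapping is the usual choice because every output pixel receives exactly one value, with no holes.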

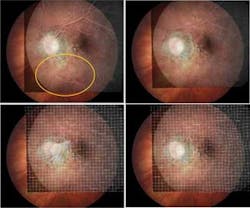

FIGURE 1. Interactive image matching is used to couple findings in fluorescein angiography to color fundus images. The yellow circle denotes a large displacement between images (top left). The automatic matching step uses an affine matrix transformation to eliminate the displacement between images (top right). Users can elastically deform an overlapping image using a single control point to match up the images (bottom left). The images match sufficiently after automatic elastic matching (bottom right). For clinical use, affine matching is often sufficient. Elastic image matching is only needed for highly accurate scientific studies. (Courtesy of UMC Utrecht)

Often the match is sufficient after the affine step, but sometimes residual motion errors persist that can disturb quantitative measurements. To correct these, the match program continues with an elastic step, in which the floating image is subdivided into multiple smaller squares that are individually matched to the reference image. If the match goes wrong, the user can easily correct it by moving individual control points.
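The elastic step can be sketched as block matching: the floating image is cut into squares, and each square gets its own best integer shift, found by exhaustive search with a sum-of-squared-differences cost. The block size, search range, cost, and the function name `block_displacements` are illustrative assumptions; the article does not describe the program's actual method, nor how a smooth deformation is interpolated between the control points.

```python
Image = list[list[float]]


def block_displacements(ref: Image, flt: Image, block: int,
                        search: int) -> dict[tuple[int, int], tuple[int, int]]:
    """Split the floating image into block x block squares and find, for each,
    the integer shift (dx, dy) within +/- search that best matches the
    reference. Returns {(block_center_x, block_center_y): (dx, dy)}."""
    h, w = len(flt), len(flt[0])
    result = {}
    for by in range(0, h - block + 1, block):
        for bx in range(0, w - block + 1, block):
            best, best_cost = (0, 0), float("inf")
            for dy in range(-search, search + 1):
                for dx in range(-search, search + 1):
                    # Skip shifts that would move the square outside the reference.
                    if not (0 <= by + dy and by + dy + block <= len(ref)
                            and 0 <= bx + dx and bx + dx + block <= len(ref[0])):
                        continue
                    cost = sum((flt[by + j][bx + i] - ref[by + dy + j][bx + dx + i]) ** 2
                               for j in range(block) for i in range(block))
                    if cost < best_cost:
                        best, best_cost = (dx, dy), cost
            result[(bx + block // 2, by + block // 2)] = best
    return result


ref = [[10 * y + x for x in range(10)] for y in range(10)]
flt = [[ref[y + 1][x + 2] for x in range(8)] for y in range(8)]  # shifted crop
print(block_displacements(ref, flt, block=4, search=2))
# {(2, 2): (2, 1), (6, 2): (2, 1), (2, 6): (2, 1), (6, 6): (2, 1)}
```

Each dictionary entry plays the role of a control point, so a user correction amounts to overwriting one entry before the deformation is interpolated.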

To illustrate the advantage of accurate image matching, we applied the software to several cases in which the treatment of age-related macular degeneration with angiogenesis inhibitors was evaluated with fluorescein angiography.1 The usual side-by-side comparison of angiography images was very tedious and time-consuming, often requiring an expert panel to interpret the results. Blood leakage from lesions in particular could not be perceived or determined with sufficient certainty. Using the match software, however, the ophthalmologist was able to match two images in 15 seconds (on a rather outdated machine) and inspect the differences by alternating between the two matched fluorescein angiograms. He noticed that nearly all the patients who came back with recurrent vision loss had already exhibited blood leakage in their angiograms that had not been observed in the side-by-side views. However, leakage was also observed in some patients without recurrent vision loss, which was an intriguing mystery.

As a second example, the match software was used to correct for motion in multispectral dermatoscope images of a patient’s skin to track the effect of laser treatment over time.2 In addition to correcting motion errors during acquisition, the software corrects the displacement between views of the skin obtained weeks apart. Once the 1 cm² images are matched, the changes in vasculature and melanin after treatment are clearly visible. From these experiments, the vasculature appears to remain constant over several years, but in coagulated skin, revascularization takes place and new patterns become visible.

Observing subtle differences in moving images is a difficult and tedious task, but appropriate motion-correction software makes it feasible, increasing treatment efficacy and saving clinical time and, ultimately, medical costs. Animated movies of growing hairs, moles, and wound recovery are not far off.

References

1. H.J. Noordmans, et al., Proc. SPIE Int. Soc. Opt. Eng., 6844A-14 (2008).

2. R. de Roode, et al., Proc. SPIE Int. Soc. Opt. Eng., 6842A-10 (2008).


HERKE JAN NOORDMANS is a clinical physicist, PIETER VAN DEN BIESEN is an ophthalmologist, ROWLAND DE ROODE is an assistant clinical physicist, and RUDOLF VERDAASDONK is a clinical physicist at the University Medical Center, Utrecht, Heidelberglaan 100, 3584 CX Utrecht, The Netherlands; e-mail: [email protected]; www.umcutrecht.nl.