ULTRAFAST NONLINEAR OPTICS: ‘Time telescope’ squeezes more data into the same duration

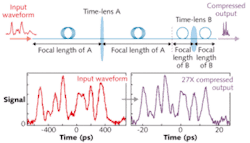

A pulse-compression technique that borrows its ideas wholesale from classical optics has been shown to greatly increase the data-carrying capacity of telecom-wavelength light. Mark Foster, a researcher at Cornell University (Ithaca, NY), and his colleagues demonstrated a “time telescope” that in effect modulates a signal at a rate 27 times higher than the modulation electronics can support.

The method hinges on the nonlinear properties of silicon waveguides used as “temporal lenses.” At the start of the process is a continuous-wave laser emitting at 1580 nm, modulated at 10 Gbit/s by an electro-optic modulator. The resulting waveform—representing 24 bits of data—is passed through a silicon waveguide alongside an unmodulated pulse from a modelocked fiber laser of 7 nm bandwidth operating at around 1545 nm.
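Energy conservation fixes where the new light produced by four-wave mixing (FWM) appears. Assuming degenerate FWM in which the fiber-laser pulse acts as the pump and the 1580 nm data stream as the signal (the article does not spell out these roles, so treat them as an assumption), the generated idler has frequency 2·f_pump - f_signal. A minimal sketch using the approximate wavelengths quoted above:

```python
# Speed of light in vacuum (m/s)
C = 299_792_458.0


def idler_wavelength_nm(pump_nm: float, signal_nm: float) -> float:
    """Idler wavelength (nm) for degenerate four-wave mixing.

    Two pump photons are converted into one signal photon and one idler
    photon, so energy conservation gives f_idler = 2 * f_pump - f_signal.
    """
    f_pump = C / (pump_nm * 1e-9)
    f_signal = C / (signal_nm * 1e-9)
    f_idler = 2.0 * f_pump - f_signal
    return C / f_idler * 1e9


if __name__ == "__main__":
    # Assumed roles: ~1545 nm fiber-laser pulse as pump, 1580 nm data as signal.
    lam_idler = idler_wavelength_nm(1545.0, 1580.0)
    print(f"idler ~ {lam_idler:.1f} nm")  # prints "idler ~ 1511.5 nm"
```

Under these assumptions the idler lands near 1511.5 nm, which is still inside the telecom bands.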

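The high bulk nonlinearity of silicon allows a parametric four-wave-mixing process to occur even at low powers and with only about a centimeter of interaction length. The mixing imprints a strong temporal “chirp” on the data-carrying light: a phase that varies quadratically in time, so that the optical frequency sweeps linearly across the pulse. That quadratic phase is what lets the waveguide act as a lens in time.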
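In idealized form, a time lens is a quadratic phase in time, phi(t) = t^2 / (2f), where f is its "focal" group-delay dispersion (GDD); differentiating shows the instantaneous frequency shifting linearly with time, which is the chirp described above. In degenerate FWM the idler field is proportional to the pump field squared times the conjugate of the signal field, so its phase is 2·phi_pump - phi_signal: a pump that already carries a quadratic phase hands twice that phase to the idler. The sketch below assumes this idealized picture, a sign convention of delta_omega = -dphi/dt, and hypothetical focal values; it is not a model of the authors' device.

```python
from typing import Callable


def lens_phase(t_ps: float, focal_gdd_ps2: float) -> float:
    """Quadratic phase (rad) of an ideal time lens; focal GDD in ps^2."""
    return t_ps ** 2 / (2.0 * focal_gdd_ps2)


def idler_phase(phi_pump: float, phi_signal: float) -> float:
    """Idler phase in degenerate FWM, where E_idler ~ E_pump**2 * conj(E_signal)."""
    return 2.0 * phi_pump - phi_signal


def frequency_shift(phase_fn: Callable[[float], float], t_ps: float, h: float = 1e-3) -> float:
    """Instantaneous angular-frequency offset (rad/ps), taken as -dphi/dt.

    A central difference is exact (up to rounding) for a quadratic phase.
    """
    return -(phase_fn(t_ps + h) - phase_fn(t_ps - h)) / (2.0 * h)


if __name__ == "__main__":
    focal = 5.0  # hypothetical focal GDD, ps^2

    def lens(t: float) -> float:
        return lens_phase(t, focal)

    # The frequency offset changes linearly with time: a linear chirp.
    for t in (-2.0, -1.0, 0.0, 1.0, 2.0):
        print(f"t = {t:+.1f} ps -> shift = {frequency_shift(lens, t):+.3f} rad/ps")

    # Hypothetical pump whose quadratic phase corresponds to 2 * focal.
    # The idler inherits twice that phase (signal phase set to zero here),
    # so it behaves like a lens whose focal GDD equals `focal`.
    def idler(t: float) -> float:
        return idler_phase(lens_phase(t, 2.0 * focal), 0.0)

    print(f"idler shift at t = 2 ps: {frequency_shift(idler, 2.0):+.3f} rad/ps")
```

The last line matches the lens shift at t = 2 ps, illustrating why a dispersed pump acts as a lens whose focal GDD is half the pump's own quadratic-phase parameter in this simplified picture.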

Two lenses make a telescope

While temporal lenses have been demonstrated in the past, the crucial step in the new approach is to use two of them, each with a “focal length” defined by the propagation distance after which a pulse’s wavelength extremes meet in the time domain. In essence, each lens performs a Fourier transform of the input waveform: the first maps frequency into time, and the second performs the reverse. In perfect analogy with classical optics, separating the two lenses by the sum of their focal lengths produces a “time telescope,” complete with a magnification factor given by the ratio of the lenses’ focal lengths.

The current work demonstrated a temporal magnification of 27, effectively allowing direct modulation of a 270 GHz wave with 10 GHz electronics [1]. Foster says that the approach goes beyond earlier demonstrations of single temporal lenses, which could make it revolutionary for the telecom industry. “The primary reason we can build these more complex temporal imaging systems is that our method for generating the temporal lens is robust and keeps all the interacting laser wavelengths in the telecommunication bands,” he explains.
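The telescope analogy can be checked with the 2×2 ray matrices of the space-time duality, a standard textbook picture rather than necessarily the authors' own analysis. Here a "ray" is a pair (delay t, frequency offset ω): a span of dispersion with GDD β shifts t by β·ω, and an ideal time lens with focal GDD f shifts ω by -t/f, so the focal GDD plays the role of the focal length. The sign conventions and the focal values below are assumptions for illustration. Cascading dispersion f1, lens f1, dispersion f1 + f2, lens f2, dispersion f2 should give a diagonal matrix whose time entry is -f2/f1, a pure rescaling of the waveform in time.

```python
from fractions import Fraction


def matmul(a, b):
    """Multiply two 2x2 matrices stored as nested tuples."""
    return (
        (a[0][0] * b[0][0] + a[0][1] * b[1][0], a[0][0] * b[0][1] + a[0][1] * b[1][1]),
        (a[1][0] * b[0][0] + a[1][1] * b[1][0], a[1][0] * b[0][1] + a[1][1] * b[1][1]),
    )


def dispersion(gdd):
    # Group-delay dispersion: frequency offset w picks up extra delay gdd * w.
    return ((Fraction(1), Fraction(gdd)), (Fraction(0), Fraction(1)))


def time_lens(focal_gdd):
    # Ideal time lens: quadratic phase shifts frequency by -t / focal_gdd.
    return ((Fraction(1), Fraction(0)), (Fraction(-1) / Fraction(focal_gdd), Fraction(1)))


def system(*elements):
    """Compose elements listed in the order the light meets them."""
    total = ((Fraction(1), Fraction(0)), (Fraction(0), Fraction(1)))
    for element in elements:
        total = matmul(element, total)  # later elements act on the left
    return total


def time_telescope(f1, f2):
    # Disperse f1, lens f1, disperse f1 + f2, lens f2, disperse f2.
    return system(dispersion(f1), time_lens(f1), dispersion(f1 + f2),
                  time_lens(f2), dispersion(f2))


if __name__ == "__main__":
    # Hypothetical focal GDDs (ps^2) chosen so that f1 / f2 = 27.
    m = time_telescope(Fraction(27), Fraction(1))
    print("time scale:", m[0][0], "frequency scale:", m[1][1])
    # prints "time scale: -1/27 frequency scale: -27"
```

A time scale of -1/27 means the waveform is compressed 27-fold; the minus sign is a time reversal in this idealized two-positive-lens arrangement, much as a Keplerian telescope inverts its image. With the article's numbers, a 24-bit packet at 10 Gbit/s lasts 2.4 ns and would be squeezed to about 89 ps, with bits roughly 3.7 ps apart, which corresponds to the 270 GHz figure.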

Still, he concedes that this first demonstration needs more work before it can be considered a contender for speeding up the Internet. “The primary limitation of this approach right now is the length of the packet that can be compressed,” he says. “Typical packets used in Internet communications are much longer than 24 bits; therefore, extending the time window over which compression occurs is the primary problem to be solved in future generations of this device. However, we have a number of ideas on how to increase the number of bits—for example, with better dispersion management in the system and by starting at a clock rate of 40 Gbit/s instead of 10 Gbit/s.”
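The packet-length limit is simple arithmetic on the compression time window. The sketch below assumes the window stays fixed at the 2.4 ns occupied by the demonstrated 24 bits at 10 Gbit/s; under that assumption a 40 Gbit/s input packs four times as many bits into the same window. The 1500-byte frame is only an illustrative comparison (a common Ethernet payload size), not a figure from the article, and the function names are hypothetical.

```python
def packet_duration_ns(bits: int, rate_gbit_s: float) -> float:
    """Duration (ns) of a packet; 1 Gbit/s is exactly 1 bit per ns."""
    return bits / rate_gbit_s


def bits_that_fit(window_ns: float, rate_gbit_s: float) -> int:
    """Whole bits that fit in a fixed time window at a given clock rate.

    A tiny tolerance guards against floating-point results like 95.999...
    being floored to one bit too few.
    """
    return int(window_ns * rate_gbit_s + 1e-9)


if __name__ == "__main__":
    window = packet_duration_ns(24, 10.0)  # the demonstrated 24-bit packet
    print(f"24 bits at 10 Gbit/s: {window:.1f} ns")  # 2.4 ns
    print(f"same window at 40 Gbit/s: {bits_that_fit(window, 40.0)} bits")  # 96 bits
    # Illustrative comparison: a 1500-byte (12,000-bit) frame at 10 Gbit/s.
    print(f"12000 bits at 10 Gbit/s: {packet_duration_ns(12_000, 10.0):.0f} ns")  # 1200 ns
```

A full-size frame would need a window roughly 500 times longer than the one demonstrated, which is why Foster singles out the time window as the main problem.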

Arbitrary-waveform generation

Foster goes on to suggest that modulation at such rates could prove immensely useful in the design of arbitrary-waveform generators. Ultrafast shaped pulses are powerful tools in, for example, driving chemical reactions or probing the “potential energy surface” of biological systems like proteins.

The team has gone on to implement a similar system using an ultrafast pulsed source that can work at a range of repetition rates, so that the ultrafast waveform modulation is no longer tied to the fixed repetition rate set by the cavity length of a modelocked laser.

“We can now derive the ultrafast pulses necessary for the lens without using a laser cavity and therefore they can be generated at a wide variety of repetition rates easily synchronizable with incoming waveforms,” says Foster. He expects this to make the system even more attractive for telecom applications. And although any industrial implementation would require both a compression step at the source and a decompression step at the receiving end, Foster says the simplicity and robustness of the system could well make the potential gains in data rates worth the effort.

REFERENCE

1. M. Foster et al., Nature Photonics, DOI: 10.1038/nphoton.2009.169 (2009).

D. Jason Palmer | Freelance writer

D. Jason Palmer is a freelance writer based in Florence, Italy.