Photonics Applied: Transportation: Lidar advances drive success of autonomous vehicles

JOHN JOSEPH

While radar, sonar, and video processing can work together in autonomous vehicle applications (self-driving cars), each has limitations on its own. Radar and sonar lack the object-positioning accuracy and spatial resolution that enable better software-controlled decision making. Cameras are limited by environmental conditions such as a bright flash of sunlight, nighttime driving, or entering a tunnel. Light detection and ranging (lidar), by contrast, provides accurate, high-spatial-resolution data and can serve as a fallback when video sensors saturate or when radar returns are low in resolution or ambiguous.

Industry mandates for higher degrees of autonomy are driving the adoption of automotive lidar among automobile manufacturers and component suppliers. Consensus is growing among experts that an integration of lidar, radar, and video will ultimately meet the sensor needs of autonomous vehicles.

Today, lidar systems that use diode-pumped solid-state (DPSS) or fiber lasers and/or mechanical scanning offer high performance, but at high cost. Nor do they lend themselves easily to the cost-reduction paths that have let the semiconductor industry advance so rapidly in performance while lowering costs and shrinking device sizes.

Lasers, cameras, and 3D point clouds

To enable processors to make the split-second decisions required for assisted-driving and self-driving cars, the sensor package must provide accurate three-dimensional (3D) information about the surrounding environment to support object identification, motion vectors, collision prediction, and avoidance strategies.

Lidar provides this information by emitting high-speed, high-power pulses of light and timing the detector's response to the reflected light to calculate the distance to an object. Arrays of detectors or a timed camera can be used to increase the resolution of this 3D information. The speed of the pulse largely determines the depth resolution. The resulting data are compiled into a 3D point cloud, which high-speed processing algorithms transform into volume identification and vector information relative to the position, speed, and direction of travel of your vehicle in order to determine the probability of a collision.
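The ranging principle itself fits in a few lines of Python. This is a minimal sketch, assuming an idealized time-of-flight measurement with the vacuum speed of light; the function names are hypothetical, and the c·τ/2 resolution is a first-order rule of thumb that ignores detector jitter and noise.

```python
# Speed of light in vacuum, m/s (the small refractive-index correction for air is ignored).
C = 299_792_458.0


def range_from_round_trip(t_seconds: float) -> float:
    """Distance to a target from the measured round-trip time of a pulse.

    The light travels out and back, so the one-way distance is c*t/2.
    """
    return C * t_seconds / 2.0


def depth_resolution(pulse_width_seconds: float) -> float:
    """First-order range resolution for a given pulse width (or rise time).

    Two returns closer together than c*tau/2 overlap in time and cannot be
    separated, so c*tau/2 is a common rough estimate.
    """
    return C * pulse_width_seconds / 2.0


# A return detected 1 microsecond after the pulse comes from roughly 150 m away.
print(f"target range: {range_from_round_trip(1e-6):.1f} m")

# Shorter pulses give finer depth resolution.
for tau in (1e-9, 200e-12):
    print(f"{tau * 1e12:.0f} ps pulse -> {depth_resolution(tau) * 100:.1f} cm")
```

The same factor of two appears in both functions because the pulse covers the target distance twice.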

The computer in the car is, in essence, modeling a 3D action movie of where you and your vehicle are, how fast you are going, and in which direction. Into the same movie it brings the objects detected in the lidar data, and they engage in a game in which the future is calculated by vector projections. Software algorithms weigh these vector diagnostics and predicted behaviors, so the game functions as an early warning system.
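One common textbook way to "calculate the future by vector projections" is a closest-point-of-approach test on constant-velocity tracks. The sketch below is an illustration of that technique, not the author's or any vendor's algorithm; it assumes 2D positions measured relative to our vehicle, velocities in a shared ground frame, and hypothetical thresholds (a 2 m safety radius and a 3 s horizon).

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """A tracked object: position (m) relative to our vehicle, velocity (m/s) over ground."""
    x: float
    y: float
    vx: float
    vy: float


def closest_approach(obj: Track, ego_vx: float, ego_vy: float) -> tuple[float, float]:
    """Return (time_s, distance_m) of closest approach, assuming constant velocities."""
    # Velocity of the object as seen from our vehicle.
    rvx = obj.vx - ego_vx
    rvy = obj.vy - ego_vy
    speed_sq = rvx * rvx + rvy * rvy
    if speed_sq == 0.0:
        # No relative motion: the separation never changes.
        return 0.0, math.hypot(obj.x, obj.y)
    # Minimize |p + v*t|^2 over t >= 0 (past approaches are not threats).
    t = max(0.0, -(obj.x * rvx + obj.y * rvy) / speed_sq)
    return t, math.hypot(obj.x + rvx * t, obj.y + rvy * t)


def is_threat(obj: Track, ego_vx: float, ego_vy: float,
              radius_m: float = 2.0, horizon_s: float = 3.0) -> bool:
    """Flag objects projected to come within radius_m inside the time horizon."""
    t, d = closest_approach(obj, ego_vx, ego_vy)
    return d < radius_m and t <= horizon_s


# Our vehicle drives at 20 m/s along +x.
stopped_car = Track(x=40.0, y=0.5, vx=0.0, vy=0.0)       # stationary, 40 m ahead
adjacent_car = Track(x=30.0, y=3.5, vx=20.0, vy=0.0)     # next lane, same speed
print(closest_approach(stopped_car, 20.0, 0.0), is_threat(stopped_car, 20.0, 0.0))
print(closest_approach(adjacent_car, 20.0, 0.0), is_threat(adjacent_car, 20.0, 0.0))
```

Real trackers add uncertainty, acceleration, and object extent, but the projection step has this same shape.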

Current lidar techniques

It is becoming increasingly common to see the cylinder-shaped scanning lidar on the roof of the self-driving "Google car". Such rotating-mirror systems are high performance and have to date provided the primary test bed and development platform for the use of lidar in autonomous vehicles. In order to transition from these types of systems to low-cost, compact lidar systems that can fit within the body panels of vehicles, developers are considering a number of alternatives.

Smaller scanning mirror systems are one alternative; MEMS-based scanning lidar is another. These scanning systems need a high-brightness laser, one that emits sufficient power from a small aperture into a small solid angle, to achieve the high radiant intensity required to reach long distances. Such high-brightness sources must be either high-power edge-emitting semiconductor laser diodes or solid-state lasers. Eye safety is maintained for these high-brightness beams by low-duty-cycle pulsing and by the motion of the beam as it scans the field of view (FOV).

High-power, high-speed flash lidar

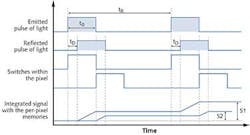

To manufacture cheaper, smaller lidar systems, some OEMs are turning away from high-brightness sources and mechanical motion and are instead illuminating the whole FOV with timed pulses, taking advantage of rapidly maturing focal-plane-array technology. This technique is known as "flash" lidar: a high-speed pulsed laser "flashes" and triggers a timer that counts how long each pixel of a focal plane array or timed camera takes to respond to the returning infrared (IR) light pulse.

Flash lidar allows the distance to the object seen by each pixel to be calculated, producing a 3D depth map that is often integrated with a video image of the scene and/or data from other sensors to fully characterize the surrounding environment (see Fig. 1). Several companies are pursuing this approach to reduce the complexity, size, and cost of lidar systems. Variations of the flash concept, in which multiple smaller fields of view are used to reduce receiver complexity, are also under development.

In flash lidar, each pixel (x, y) has its own range value (z), and together these values form the 3D point cloud. A computer can then identify and track multiple objects using their distance information and motion vectors. The x and y resolution depends on the camera resolution, while the z (range) resolution depends on the pulse width or rise time of the laser and the speed of the sensor.
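Turning the per-pixel ranges into a point cloud is a short geometric step. The sketch below assumes an idealized pinhole receiver with calibrated intrinsic parameters (focal lengths `fx`, `fy` and principal point `cx`, `cy`, in pixels) and treats each measured value as the radial distance along that pixel's line of sight; the function name and the use of `None` for pixels with no return are illustrative choices, and a real system would also correct for lens distortion.

```python
import math


def ranges_to_points(ranges, fx, fy, cx, cy):
    """Convert a 2D grid of per-pixel ranges (m) into a list of (X, Y, Z) points.

    ranges[v][u] is the radial distance measured by pixel (u, v); None means
    no return was detected and the pixel is skipped.
    """
    points = []
    for v, row in enumerate(ranges):
        for u, r in enumerate(row):
            if r is None:
                continue
            # Direction of the ray through pixel (u, v) in camera coordinates (Z forward).
            dx = (u - cx) / fx
            dy = (v - cy) / fy
            norm = math.sqrt(dx * dx + dy * dy + 1.0)
            # Scale the unit ray by the measured range.
            points.append((r * dx / norm, r * dy / norm, r / norm))
    return points


# A tiny 3x3 "frame": only two pixels see a return.
frame = [
    [None, None, None],
    [None, 5.0, 2.0 ** 0.5],
    [None, None, None],
]
for point in ranges_to_points(frame, fx=1.0, fy=1.0, cx=1.0, cy=1.0):
    print(point)
```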

Current state-of-the-art sensors suitable for autonomous driving can resolve 200 ps pulse widths. Ideally, a flash lidar laser would have a pulse width in the single-nanosecond range while delivering enough peak power to illuminate objects at the required distance and across the required FOV. In most implementations, the beam divergence of the single "flash" from the laser is optically matched to the receiver FOV so that the entire FOV is illuminated at once (hence the term "flash" lidar).

Automotive lidar applications can be grouped into two primary distance zones of interest: a medium distance of about 20–40 m for side and angular "warning zones" and a long-distance range of 150–200 m for the front and rear.
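The two distance zones translate directly into timing budgets for the receiver. Using the zone limits quoted above, the sketch below computes the round-trip time to the far edge of each zone and the highest pulse rate at which that return would arrive before the next flash. Treating this as the upper bound on repetition rate is a simplifying assumption that ignores multiple-return and ambiguity-resolution schemes; the function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def round_trip_time(distance_m: float) -> float:
    """Time (s) for a pulse to reach a target at distance_m and return."""
    return 2.0 * distance_m / C


def max_unambiguous_rate(max_range_m: float) -> float:
    """Highest pulse rate (Hz) at which a return from max_range_m arrives
    before the next pulse is fired, so echoes cannot be confused."""
    return 1.0 / round_trip_time(max_range_m)


for label, d in (("medium zone", 40.0), ("long range", 200.0)):
    t = round_trip_time(d)
    rate_mhz = max_unambiguous_rate(d) / 1e6
    print(f"{label}: {d:.0f} m -> {t * 1e9:.0f} ns round trip, "
          f"pulse rate below {rate_mhz:.2f} MHz")
```

Both limits sit far above the modest repetition rates typical of flash lidar, so range ambiguity is rarely the constraint in practice.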

The medium-distance applications require sensors at multiple locations, each of which must see a much larger FOV: over 100° in the horizontal axis. Because so many are needed, these are the most cost-sensitive sensors. Size is also an issue, since they must fit within the body panels of the vehicle.
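Spreading the beam over such a wide FOV dilutes the light considerably. A rough estimate, assuming uniform flat-top illumination of a flat target perpendicular to the optical axis and no atmospheric or optical losses, shows how the mean irradiance scales with FOV; the 1 kW peak power, 40 m distance, and 10° vertical FOV in the usage lines are hypothetical values chosen only for illustration.

```python
import math


def illuminated_width(distance_m: float, fov_deg: float) -> float:
    """Width (m) of the scene spanned by a beam spread over fov_deg at distance_m."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def mean_irradiance(peak_power_w: float, distance_m: float,
                    h_fov_deg: float, v_fov_deg: float) -> float:
    """Mean peak irradiance (W/m^2) over a rectangular FOV on a perpendicular plane.

    Assumes uniform (flat-top) illumination and no losses.
    """
    width = illuminated_width(distance_m, h_fov_deg)
    height = illuminated_width(distance_m, v_fov_deg)
    return peak_power_w / (width * height)


# Hypothetical example: 1 kW peak at 40 m, 10 degree vertical FOV.
for h_fov in (100.0, 10.0):
    e = mean_irradiance(1_000.0, 40.0, h_fov, 10.0)
    print(f"{h_fov:.0f} deg x 10 deg FOV: {e:.1f} W/m^2")
```

The wide-FOV case spreads the same pulse over roughly 13 times more area than the narrow one, which is why flash sources need high total peak power rather than high brightness.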

Because the spatial resolution of a flash lidar system is determined by the camera properties and the beam is deliberately spread over a large FOV, flash lidar is an ideal application for lower-brightness, high-power lasers such as semiconductor diode lasers, and specifically vertical-cavity surface-emitting laser (VCSEL) arrays. Flash lidar systems operate at modest repetition rates and therefore relatively low duty cycles. This favors semiconductor lasers with modest heat-sinking requirements and smaller electronic components in the driver circuits.
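The link between low duty cycle and modest heat sinking is simple arithmetic: for rectangular pulses, average optical power equals peak power times duty cycle, and duty cycle equals pulse width times repetition rate. The operating point below is hypothetical (the 10 kHz repetition rate in particular is an assumption, not a figure from this article), and real drivers also dissipate energy during pulse rise and fall.

```python
def duty_cycle(pulse_width_s: float, rep_rate_hz: float) -> float:
    """Fraction of the time the laser is emitting (rectangular pulses)."""
    return pulse_width_s * rep_rate_hz


def average_power(peak_power_w: float, pulse_width_s: float, rep_rate_hz: float) -> float:
    """Average optical power (W) for rectangular pulses."""
    return peak_power_w * duty_cycle(pulse_width_s, rep_rate_hz)


# Hypothetical operating point: 1 kW peak, 5 ns pulses, 10 kHz repetition rate.
peak, tau, prf = 1_000.0, 5e-9, 10_000.0
print(f"duty cycle: {duty_cycle(tau, prf):.1e}")
print(f"average optical power: {average_power(peak, tau, prf) * 1e3:.0f} mW")
```

Even at kilowatt peak power, the average output stays in the tens of milliwatts at this assumed operating point.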

Until recently, only high-brightness sources such as DPSS and fiber lasers were capable of producing the high peak powers and short pulses required for flash lidar. Now, however, lower-cost semiconductor lasers can replace these costly emitters and allow autonomous driving technology to advance into the mainstream.

Changing industry timing

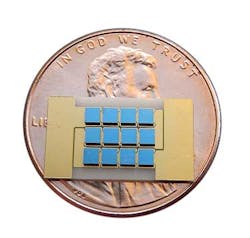

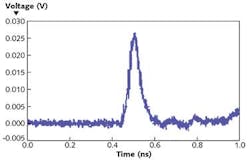

The TriLumina laser architecture is ideally suited for flash lidar applications. The laser itself has very high bandwidth, and smaller-array versions have performed well in 10 Gbit/s free-space optical links (ref. 1). When packaged specifically for high-peak-power pulsed operation, larger arrays can emit peak powers in the range of 1–5 kW and produce single-digit-nanosecond pulse widths.

In our VCSEL configuration, light exits through the substrate in a back-emitting design that couples efficiently to high-speed circuits, yielding a smaller, less-expensive laser source well suited to the requirements of flash lidar (see Fig. 2). When configured for flash illumination, the VCSEL arrays are spatially extended sources, which reduces the eye-safety concerns of high-power operation. When well matched to short-pulse current drivers, our lasers produce short-pulse operation (see Fig. 3). Other scanning techniques can use sub-grouped arrays that switch different segments of the FOV on and off, reducing reliance on higher-cost, high-resolution focal plane arrays.

TriLumina continues to improve its driver designs and the matching of its lasers to driver properties, and expects to demonstrate rise times of less than a nanosecond. Furthermore, because the technology is highly scalable, we expect to meet the peak power requirements for medium-range automotive flash lidar. Building both the laser source and the driver electronics on semiconductor technology allows economies of scale in high-volume production, making it affordable to place sensors at multiple locations on a vehicle.

REFERENCE

1. R. F. Carson et al., "Compact VCSEL-based laser array communications systems for improved data performance in satellites," Proc. SPIE, 9226, 92260H (Sept. 17, 2014); doi:10.1117/12.2061786.

John Joseph is founder and VP of product development at TriLumina, 800 Bradbury Dr. SE, Suite 116, Albuquerque, NM 87106; www.trilumina.com.