Detectors & Imaging: Fast 3D imaging for industrial and healthcare applications

Over the last decade, 3D imaging and sensing have advanced in both industrial and consumer applications. In nondestructive testing and advanced industrial automation, 3D imaging promises to provide the detailed manufacturing data required by Industry 4.0. At the same time, 3D sensors have conquered consumer electronics. Microsoft introduced the Kinect camera for the Xbox in 2010—and today, face recognition based on 3D imaging technology is standard on many smartphones. Likewise, 3D sensing will likely enable autonomous driving, regardless of whether a full lidar system or a 3D camera system is used.

3D imaging system development continues as imaging systems are deployed beyond quality control and into security and healthcare applications. A team from Friedrich Schiller University (FSU; Jena, Germany) and from the Fraunhofer Institute for Applied Optics and Precision Engineering (Fraunhofer IOF; also in Jena) has been developing camera systems for such different 3D imaging applications since 2014.

A 3D imaging system with robust illumination

The core system consists of two cameras and a robust illumination unit. In 2014, the system was optimized for high-speed imaging with up to 12,500 fps in each camera. This system required considerable illumination power and was designed for industrial measurements such as crash test analysis in automotive applications.

With two Photron FASTCAM SA-X2 cameras, the system could capture up to 1333 3D data sets per second, each with 1 Mpixel resolution. The cameras must be synchronized, but the projector needs no external trigger. Typically, a sequence of about 10 camera images is needed to calculate one 3D data set, which allows 3D acquisition in less than 1 ms per data set.
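The timing figures above can be checked with a few lines of arithmetic. This is only a plausibility sketch: it assumes the 1333 figure is a per-second rate and that the frames of one sequence are captured back to back; the function names are illustrative.

```python
def acquisition_time_ms(camera_fps: float, frames_per_dataset: float) -> float:
    """Time needed to record the image sequence for one 3D data set."""
    return 1000.0 * frames_per_dataset / camera_fps


def frames_per_dataset(camera_fps: float, datasets_per_second: float) -> float:
    """Average number of camera frames available per 3D data set."""
    return camera_fps / datasets_per_second


CAMERA_FPS = 12_500  # maximum frame rate quoted for each camera

# A sequence of 10 frames at 12,500 fps stays below 1 ms.
print(acquisition_time_ms(CAMERA_FPS, 10))

# 1333 data sets per second corresponds to slightly fewer than 10 frames each.
print(round(frames_per_dataset(CAMERA_FPS, 1333), 1))
```

Both results agree with the statement that roughly 10 images yield one 3D data set in under a millisecond.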

Active illumination technology enables the unambiguous assignment of 3D coordinates to the pixels. The illumination unit projects a rapidly changing sequence of stripe patterns (also known as structured light) onto the object. In such structured-light setups, an LED projects a pattern onto a target surface. When imaged from an observation perspective other than that of the light source, the pattern appears distorted. The pattern is acquired by a camera and used for geometric reconstruction of the surface shape.

The stripe patterns resemble conventional sine patterns—however, the stripe width varies aperiodically (that is, with varying period lengths and amplitudes). This is crucial for the unambiguous assignment of 2D image points acquired with the stereoscopic camera system to 3D coordinates.
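The role of the aperiodic patterns can be illustrated with a toy simulation. The sketch below is not the team's algorithm: it assumes rectified cameras (so matches are searched along one image row), a flat noise-free scene, and temporal normalized cross-correlation as the similarity measure. All names and parameter values are hypothetical.

```python
import math
import random


def aperiodic_pattern(width, rng):
    """One stripe pattern: a sinusoid whose period and amplitude drift along x."""
    values = []
    phase = rng.uniform(0.0, 2.0 * math.pi)
    period = rng.uniform(8.0, 16.0)
    amplitude = rng.uniform(0.3, 0.5)
    for _ in range(width):
        values.append(0.5 + amplitude * math.sin(phase))
        phase += 2.0 * math.pi / period
        # Random walk, clamped, so the pattern never settles into one period.
        period = min(16.0, max(8.0, period + rng.uniform(-0.5, 0.5)))
        amplitude = min(0.5, max(0.3, amplitude + rng.uniform(-0.02, 0.02)))
    return values


def ncc(a, b):
    """Normalized cross-correlation of two equally long intensity sequences."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b))
    return num / den if den > 0.0 else 0.0


def best_match(sequence, candidates):
    """Index of the candidate sequence that correlates best with `sequence`."""
    scores = [ncc(sequence, c) for c in candidates]
    return max(range(len(scores)), key=scores.__getitem__)


rng = random.Random(0)
WIDTH, N_FRAMES, TRUE_DISPARITY = 200, 10, 7
patterns = [aperiodic_pattern(WIDTH, rng) for _ in range(N_FRAMES)]

# Toy scene: left pixel x sees projector column x,
# right pixel x sees projector column x + TRUE_DISPARITY.
n_pixels = WIDTH - TRUE_DISPARITY
left = [[p[x] for p in patterns] for x in range(n_pixels)]
right = [[p[x + TRUE_DISPARITY] for p in patterns] for x in range(n_pixels)]

x_left = 60
x_right = best_match(left[x_left], right)
disparity = x_left - x_right
print(disparity)
```

With strictly periodic stripes, every pixel one period away would produce the same temporal sequence and the search would return several equally good candidates; the drifting period and amplitude make each column's sequence distinctive.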

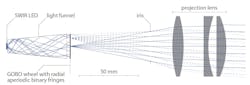

The idea for the technical implementation of the high-speed projection of such patterns was inspired by theater stage technology. There, a heat-resistant mask made of glass or metal is rotated in front of a spotlight to illuminate the stage with variable images and effects. As the lens for projection comes after this rotating mask, the system is often referred to as GOBO (GOes Before Optics). Based on the GOBO setup, a glass mask with a special aperiodic binary pattern rotates in the illumination unit for the 3D imaging system (see Fig. 1).

Eye-safe operation in public areas

While intense lighting can be tolerated in industrial inspection, some applications require illumination that is harmless or even invisible to the human eye. This is particularly important for surveillance applications. For such purposes, a new and completely eye-safe system has recently been developed (see Fig. 2) that applies illumination and image acquisition in the shortwave-infrared (SWIR) at 1.4 µm.

It may appear more obvious to deploy near-infrared (NIR) illumination for face recognition. This is tolerable for low-power applications, but in 1962 it was shown that at 850 nm, 80% of the light arrives at the retina (ref. 3). At 950 nm, it is 50%, while at 1450 nm, no transmission was recorded. Therefore, this wavelength is preferred for eye-safe high-power illumination. A further benefit of this wavelength is its low contribution to the solar spectrum, so SWIR measurements experience fewer disturbances from ambient daylight.

The final SWIR 3D imaging system as rendered in Figure 2 included a GOBO projector with a 70 mW LED light source and two Goldeye G-008 SWIR cameras from Allied Vision (Stadtroda, Germany). The camera’s indium gallium arsenide sensor provides a resolution of 320 × 256 pixels and a frame rate of up to 344 fps at maximum resolution.

With a working distance of 1.5 m, the system was optimized for a measurement volume of 300 × 300 × 300 mm³ and a data point distance of 1.2 mm. The main purpose of the SWIR 3D sensor is to measure and analyze human faces (see Fig. 3). With about 80,000 stereoscopic data points, this system delivers nearly three times as many points as a typical smartphone 3D sensor with only 30,000 points.

Adding 2D color data on a 3D point cloud

While the GOBO can be modified for other wavelengths or other acquisition rates via camera or illumination wavelength retrofits, another approach is to align the 3D acquisition system with additional cameras for more demanding applications. The data from these additional cameras can be mapped over the 3D point cloud, for example adding color information from an RGB camera, as shown in Figure 3.

In one particular project, the research team at Fraunhofer IOF added a fast thermal camera to the 3D imaging setup to get time-resolved 3D thermal information (see Fig. 4). For this purpose, a FLIR X6900sc SLS with a strained-layer superlattice detector was used. This sensor allows for frame rates of up to 1 kHz at a resolution of 640 × 512. In the temperature range between −20°C and 100°C, which is of interest for the investigations, the accuracy is 1°C. The camera operates in the spectral range between 7.5 and 12 µm and is insensitive to the radiation from the GOBO illumination, so the projection pattern has no influence on the thermal image.

Using a special software algorithm, the thermal image is mapped onto the 3D point cloud, and temperature values are assigned to the space coordinates using a bilinear interpolation method. With this technology, the temperature profile of an inflating airbag can be measured with a temporal resolution of 1/1000 s.
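The mapping step can be sketched as follows. This is a minimal illustration, not the team's software: it assumes the 3D points are already expressed in the thermal camera's coordinate frame and that the camera follows an ideal pinhole model without lens distortion. The intrinsic parameters (`focal_px`, `cx`, `cy`) and the tiny 2 × 2 "thermal image" are hypothetical.

```python
import math


def project(point, focal_px, cx, cy):
    """Pinhole projection of a 3D point (x, y, z with z > 0) to pixel coordinates."""
    x, y, z = point
    return focal_px * x / z + cx, focal_px * y / z + cy


def bilinear(image, u, v):
    """Interpolate `image` (list of rows, at least 2 x 2) at column u, row v.

    Returns None if (u, v) lies outside the image.
    """
    height, width = len(image), len(image[0])
    if not (0.0 <= u <= width - 1 and 0.0 <= v <= height - 1):
        return None
    u0 = min(int(math.floor(u)), width - 2)
    v0 = min(int(math.floor(v)), height - 2)
    du, dv = u - u0, v - v0
    top = (1.0 - du) * image[v0][u0] + du * image[v0][u0 + 1]
    bottom = (1.0 - du) * image[v0 + 1][u0] + du * image[v0 + 1][u0 + 1]
    return (1.0 - dv) * top + dv * bottom


def assign_temperatures(points, thermal_image, focal_px, cx, cy):
    """Pair every 3D point with the interpolated temperature at its projection."""
    return [(p, bilinear(thermal_image, *project(p, focal_px, cx, cy))) for p in points]


# Hypothetical example: temperatures in degrees Celsius on a 2 x 2 pixel grid.
thermal = [[20.0, 22.0],
           [24.0, 26.0]]
cloud = [(0.0, 0.0, 1.0)]  # one point on the optical axis, 1 m away
result = assign_temperatures(cloud, thermal, focal_px=100.0, cx=0.5, cy=0.5)
print(result)
```

Because the projected point falls between pixel centers, bilinear interpolation weights the four neighboring temperature values by distance rather than picking the nearest pixel, which avoids blocky temperature steps on the point cloud.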

Contact-free measurement in healthcare applications

A new and promising field for optical measurements is the remote sensing of vital parameters such as pulse frequency, respiration, or the oxygen level in blood. Measuring such parameters without physical contact to the patient has several obvious benefits—for example, adhesive sensors would be obsolete and the number of false alarms in intensive care units could be reduced.

The physicists at FSU have a long history of sharing ideas for imaging systems with sports scientists at University Clinic Jena, the local hospital, within the research alliance 3Dsensation. Initially, a high-speed camera system was used to record motion sequences of athletes. However, a recent upgrade adds a regular RGB camera and a multispectral NIR camera to the 3D imaging system (see Fig. 5) to provide much more information.

With this new system, a procedure was developed for precise observation of vital parameters. These parameters are derived from spectrally filtered images. The imaging system uses the 3D measurement for motion correction of the 2D images from the cameras in the top row of Figure 5. This procedure provides a secure and reliable basis for deducing vital parameters from spectral data. Furthermore, the 3D data is available for other calculations.

Using the spectrally filtered cameras, the system measures the color variation of hemoglobin in blood-filled capillaries in the test person’s skin. In conjunction with 3D data, it is possible to estimate not only the heart rate and the heart rate variation, but also the respiration of the test person. Oxygenated and deoxygenated hemoglobin have different spectral characteristics in the NIR and, therefore, the oxygen saturation level can be measured with the NIR cameras.
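How a heart rate can be read from such color variations is sketched below. The approach shown (averaging a skin region per frame, then finding the strongest frequency in a plausible pulse band) is a common technique for camera-based pulse estimation and is assumed here for illustration; the article does not specify the team's processing. The frame rate, band limits, and synthetic signal are hypothetical.

```python
import math


def dominant_frequency_hz(signal, sample_rate_hz, f_min=0.7, f_max=3.0, step=0.01):
    """Return the frequency in [f_min, f_max] with the largest spectral power."""
    mean = sum(signal) / len(signal)
    centered = [s - mean for s in signal]  # remove the constant skin brightness
    best_f, best_power = f_min, -1.0
    for k in range(int(round((f_max - f_min) / step)) + 1):
        f = f_min + k * step
        w = 2.0 * math.pi * f / sample_rate_hz
        re = sum(c * math.cos(w * i) for i, c in enumerate(centered))
        im = sum(c * math.sin(w * i) for i, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_f, best_power = f, power
    return best_f


# Synthetic stand-in for the mean intensity of a skin region in each frame.
FPS, SECONDS = 30.0, 20
signal = [
    100.0
    + 0.5 * math.sin(2.0 * math.pi * 1.2 * i / FPS)   # pulse at 1.2 Hz (72 beats/min)
    + 1.0 * math.sin(2.0 * math.pi * 0.25 * i / FPS)  # slower breathing-related drift
    for i in range(int(FPS * SECONDS))
]

heart_rate_bpm = 60.0 * dominant_frequency_hz(signal, FPS)
print(round(heart_rate_bpm))
```

Restricting the search to roughly 0.7 to 3 Hz keeps the slower breathing component from being mistaken for the pulse. In a real recording, head motion would dominate such a signal, which is why the motion correction from the 3D data matters.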

In a next processing step, this data is used to measure physical strain or stress of the test person. So far, the gold standard for such measurements has been a combination of cardiac sensors and a breath gas analysis, which required the test person to wear a mask-like sensor. The large number of sensors made such measurements uncomfortable and added stress to the athletes’ strain (see Fig. 6).

Nanofilters for multispectral detection

The development of the camera system originated from an industry-related project. Thus, reliability and simplicity have been major goals in the development process. The detection of blood oxygen saturation required the observation of two separate NIR spectral bands. This could be done with two cameras and appropriate filters.
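Why two spectral bands are needed can be seen from the "ratio of ratios" used in conventional pulse oximetry, sketched here as an assumption rather than as the team's method. Each band's pulsatile swing is normalized by its mean level, and the two normalized values are compared. Converting the ratio into a saturation percentage requires an empirical calibration that is not given in the article and is therefore omitted. The signals are synthetic and all names are hypothetical.

```python
import math


def pulsatile_fraction(signal):
    """Peak-to-peak pulsatile swing (AC) divided by the mean level (DC)."""
    dc = sum(signal) / len(signal)
    ac = max(signal) - min(signal)
    return ac / dc


def ratio_of_ratios(band_a, band_b):
    """Compare the normalized pulsatile signal of two spectral bands."""
    return pulsatile_fraction(band_a) / pulsatile_fraction(band_b)


# Synthetic example: the same 1.2 Hz pulse seen in two bands at 30 frames/s.
# Band A has a 2% modulation on a level of 100, band B a 1% modulation on 80.
pulse = [math.sin(2.0 * math.pi * 1.2 * i / 30.0) for i in range(250)]
band_a = [100.0 * (1.0 + 0.02 * p) for p in pulse]
band_b = [80.0 * (1.0 + 0.01 * p) for p in pulse]

print(ratio_of_ratios(band_a, band_b))
```

Normalizing by the mean level cancels differences in illumination strength and camera gain between the two bands, so the ratio depends mainly on how strongly the blood absorbs at each wavelength.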

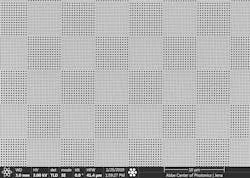

The final solution was much more elegant: at FSU, a special nanostructured filter was developed that enables the simultaneous acquisition of both wavelengths. The nano-optical filter (see Fig. 7) consists of a thin gold layer with alternating hole structures. The holes are smaller than the wavelength of the applied light. For spectral filtering, they exploit the excitation and interference of so-called surface plasmon polaritons (SPPs). The foil was transferred and bonded to the camera chip.

The NIR detection uses two separate illumination LEDs with wavelengths in the hyper-red and the NIR. They are spectrally well separated from the GOBO illumination at 850 nm. Both illuminators are well within the eye-safe region.

Beyond sports medicine

A technology to determine the stress level based on vital parameters may have several applications beyond sports medicine. For example, it would allow for an automated stress-level detection in the waiting room of a hospital and could also serve in various security applications or monitor the stress level of pilots.

The team in Jena has plans for additional tests in a typical healthcare setting. Beginning in February 2020, a system will be hospital-tested on newborn children. There, the remote sensing of vital parameters could free patients from painful adhesive sensors. Furthermore, it could detect additional spectral parameters such as the bilirubin concentration in the baby’s skin. Due to the modular character of the sensing system, additional color filters or cameras can be easily added.

REFERENCES

1. M. Landmann et al., Opt. Lasers Eng., 121, 448–455 (2019).

2. S. Heist et al., Proc. SPIE, 10991, 109910J (2019); https://doi.org/10.1117/12.2518205.

3. E. A. Boettner and J. R. Wolter, Investig. Ophthalmol. Vis. Sci., 1, 6, 776–783 (1962).

4. J. Sperrhake et al., “Monitoring system for the classification of stress using non-contact, real-time nano-optical 3D-imaging of vital signs,” in preparation for NPJ Digital Medicine.

Andreas Thoss | Contributing Editor, Germany

Andreas Thoss is the Managing Director of THOSS Media (Berlin) and has many years of experience in photonics-related research, publishing, marketing, and public relations. He worked with John Wiley & Sons until 2010, when he founded THOSS Media. In 2012, he founded the scientific journal Advanced Optical Technologies. His university research focused on ultrashort and ultra-intense laser pulses, and he holds several patents.

Stefan Heist | Researcher, Fraunhofer Institute for Applied Optics and Precision Engineering

Stefan Heist is a researcher at the Fraunhofer Institute for Applied Optics and Precision Engineering (Fraunhofer IOF; Jena, Germany).

Jan Sperrhake | PhD student, Institute of Applied Physics at Friedrich Schiller University

Jan Sperrhake is a PhD student at the Institute of Applied Physics at Friedrich Schiller University (Jena, Germany).