Airlight estimation for simultaneous day and night image dehazing applications

Atmospheric haze is a common cause of reduced visibility in outdoor photographs; it lowers contrast and degrades color fidelity. This phenomenon can impair images collected for surveillance, autonomous driving, remote sensing, and other uses. Restoring images degraded by haze or fog has been an active topic in computer vision and image processing for decades, and several methods have been developed in recent years to address it.

Restoring a hazy image requires estimating both the scene radiance (the haze-free color) and the transmission map, which represents how strongly the haze attenuates the scene’s light. The dark channel prior (DCP), proposed by He et al. in 2009, is a popular prior for this task. The DCP rests on the observation that, in most haze-free outdoor images, local patches contain some pixels with very low intensity in at least one color channel. Haze scatters light into these regions and raises their values, so the brightness of the dark channel indicates the amount of haze. To estimate the transmission map, the DCP computes the minimum value over the color channels and over each local patch. The DCP has inspired a number of other dehazing methods.
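The dark channel computation described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the original authors’ implementation: the function names, the 15-pixel patch, and the weight omega = 0.95 (values commonly quoted for the DCP) are assumptions that do not appear in this article, and the airlight is assumed to be known already. The patch size is assumed to be odd so that the output matches the input size.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(image, patch=15):
    """Minimum over the color channels, then over each local patch.

    image: float array of shape (H, W, 3); patch: odd window size (assumed).
    """
    min_rgb = image.min(axis=2)                    # per-pixel channel minimum
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")     # keep the output H x W
    windows = sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(2, 3))                # local patch minimum


def estimate_transmission(image, airlight, omega=0.95, patch=15):
    """DCP-style transmission estimate: 1 - omega * dark_channel(I / A).

    airlight: length-3 array, assumed to have been estimated beforehand.
    """
    normalized = image / airlight                  # broadcasts over H x W x 3
    return 1.0 - omega * dark_channel(normalized, patch)


if __name__ == "__main__":
    hazy = np.full((32, 32, 3), 0.8)               # uniformly bright, hazy-looking image
    t = estimate_transmission(hazy, np.ones(3))
    print(t.shape, float(t.mean()))
```

A bright dark channel yields a low transmission estimate, which is the sense in which the dark channel indicates the amount of haze.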

Single image dehazing is challenging because the scene radiance and the transmission map are both unknown and must be recovered from one hazy image. A number of approaches have been proposed to address this problem, including statistical methods, image enhancement techniques, and deep learning-based methods.

The atmospheric scattering model (ASM) proposed by Narasimhan and Nayar in 2002 is one of the earliest statistical methods for single image dehazing. Light attenuation by a medium such as haze is described by the Beer-Lambert law, which the ASM inverts to estimate the scene radiance. The ASM analyzes the color distribution in an image to calculate the scene’s radiance and transmission map from a single hazy image.
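For reference, the scattering model is commonly written in the form below. The symbols are assumptions introduced here rather than notation from this article: I is the observed hazy image, J the scene radiance, A the airlight, t the transmission, β the scattering coefficient, and d the scene depth at pixel x.

```latex
I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr), \qquad t(x) = e^{-\beta\, d(x)}
```

The exponential term is the Beer-Lambert attenuation, and solving the first expression for J(x) is the inversion step that recovers the scene radiance.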

Image enhancement techniques

Image enhancement methods have seen widespread use for making hazy images more legible. By adjusting the image’s brightness or color balance, these methods improve contrast and color. Retinex theory is a well-known basis for this type of method; it decomposes an image into illumination and reflectance components. The weighted variational Retinex method, presented by Huang et al. in 2018, is one of several Retinex-based dehazing techniques proposed in recent years. By minimizing a weighted variational energy function that separates the image into reflectance and illumination, the approach improves the hazy image’s contrast and color.
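The illumination/reflectance split can be illustrated with the much simpler single-scale Retinex, which is swapped in here for clarity and is not the weighted variational method described above. In this sketch the illumination is assumed to be a Gaussian-blurred copy of the image, and the reflectance is the log-domain difference; the function names and the default sigma are hypothetical choices.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel truncated at about three sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def gaussian_blur(channel, sigma):
    """Separable Gaussian blur of a 2D array with edge padding."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    padded = np.pad(channel, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def single_scale_retinex(channel, sigma=15.0, eps=1e-6):
    """Return (log reflectance, illumination) for one image channel in [0, 1]."""
    illumination = gaussian_blur(channel, sigma)   # smooth lighting estimate
    log_reflectance = np.log(channel + eps) - np.log(illumination + eps)
    return log_reflectance, illumination


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    channel = rng.uniform(0.2, 0.9, size=(64, 64))
    log_r, illum = single_scale_retinex(channel, sigma=5.0)
    print(log_r.shape, illum.shape)
```

A variational formulation replaces the fixed blur with an optimization over both components, but the decomposition it targets is the same.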

Deep learning-based approaches have proven successful in many computer vision problems, including image dehazing. These techniques use deep neural networks to learn a mapping between hazy and clear images. The convolutional neural network (CNN) is the architecture most widely used for dehazing. CNN-based algorithms have attained state-of-the-art results on benchmarks such as the NYU Depth V2 dataset and the Middlebury dataset. One CNN-based dehazing approach is the fully convolutional neural network (FCN) introduced by Ren et al. in 2018. The FCN outperforms standard CNN-based techniques by learning the mapping between hazy and clear images with a fully convolutional architecture.

Day and night-time dehazing by local airlight estimation

Methods that perform well during the day have limited utility at night because they estimate a single global airlight for the whole scene. In Ref. 4, the authors provide a method for removing haze from photos taken during the day and at night. The technique’s primary target is the local airlight, an important parameter for dehazing algorithms. It predicts the local airlight with a guided filter and a wavelet transform. Compared with state-of-the-art approaches, the method provides more accurate estimates of local airlight under a variety of haze and lighting conditions.

The methodology consists of the following steps:

1) Pre-processing. The input image is first preprocessed by converting it from RGB to YCbCr color space. This is done to separate the luminance and chrominance components of the image, as the dehazing process is only applied to the luminance component.

2) Initial airlight estimation. The initial airlight estimation is obtained by computing the maximum value in the luminance channel of the YCbCr image. This value is used as the global airlight, which provides an upper bound for the local airlight estimation.

3) Local airlight estimation. This method estimates the local airlight by using a guided filter and a wavelet transform. The guided filter is used to smooth the input image while preserving edges, and the wavelet transform is used to decompose the filtered image into different frequency bands. The local airlight is then estimated by finding the maximum value in the low-frequency band of the wavelet transform.

4) Transmission estimation. The transmission map is estimated by dividing the difference between the input image and the estimated local airlight by the global airlight.

5) Image dehazing. The final dehazed image is obtained by applying the estimated transmission map to the input image.
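Steps 1 to 3 can be sketched as follows. This is an illustrative approximation under stated assumptions, not the method of Ref. 4: a plain mean filter stands in for the guided filter, 2x2 block averages stand in for the low-frequency wavelet band (they equal the one-level Haar approximation band up to a constant scale factor), and all function names and window sizes are hypothetical. Transmission estimation and the final restoration are left out of this sketch.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def luminance(rgb):
    """Step 1: Y channel of YCbCr (ITU-R BT.601 weights), RGB in [0, 1]."""
    return rgb @ np.array([0.299, 0.587, 0.114])


def window_reduce(channel, size, reducer):
    """Apply reducer (np.mean or np.max) over odd size x size windows."""
    pad = size // 2
    padded = np.pad(channel, pad, mode="edge")
    return reducer(sliding_window_view(padded, (size, size)), axis=(2, 3))


def haar_low_band(channel):
    """2x2 block means: the Haar approximation band up to a scale factor."""
    h, w = channel.shape
    h2, w2 = h - h % 2, w - w % 2                  # crop to even dimensions
    blocks = channel[:h2, :w2].reshape(h2 // 2, 2, w2 // 2, 2)
    return blocks.mean(axis=(1, 3))


def estimate_airlight(rgb, smooth_size=5, patch=3):
    """Return (global airlight, local airlight map of shape H x W)."""
    y = luminance(rgb)
    global_airlight = float(y.max())               # step 2: luminance maximum
    smoothed = window_reduce(y, smooth_size, np.mean)   # guided-filter stand-in
    low = haar_low_band(smoothed)                  # low-frequency band
    local = window_reduce(low, patch, np.max)      # step 3: local maximum
    local = np.repeat(np.repeat(local, 2, axis=0), 2, axis=1)  # upsample
    h, w = y.shape                                 # restore any cropped row/col
    local = np.pad(local, ((0, h - local.shape[0]), (0, w - local.shape[1])),
                   mode="edge")
    return global_airlight, np.minimum(local, global_airlight)


if __name__ == "__main__":
    scene = np.zeros((64, 64, 3))
    scene[8:16, 8:16] = 1.0                        # one bright light source
    g, local = estimate_airlight(scene)
    print(g, local.shape, float(local.max()))
```

The final `np.minimum` reflects the role of the global airlight as an upper bound on the local estimate, as stated in step 2.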

Technical discussion and inferences

During both the day and the night, a guided filter and a wavelet transform are used to estimate the local airlight. This allows the local airlight to be estimated accurately under a variety of haze and illumination conditions. The authors show that their methodology works well for several tasks, such as object detection, face recognition, and image retrieval. In object detection experiments, the method outperforms state-of-the-art methods over a wide range of conditions, as measured by mean average precision (mAP). Experiments with face recognition show that using this strategy in hazy settings considerably increases recognition accuracy. The authors further demonstrate that the method can be used as a pre-processing step in image retrieval tasks, leading to considerable improvements in retrieval performance.

In particular, the dehazed image is obtained by applying the estimated transmission map, which represents the level of haze in the scene, to the input image. By dividing the difference between the input image and the estimated local airlight by the global airlight, we can obtain the transmission map. The transmission map can be thought of as a filter that eliminates the fogginess of the original image. There is less haze in areas where the transmission values are high, and more haze in areas where the transmission values are low.
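One common way to apply a transmission map is to invert the standard atmospheric scattering model, I = J·t + A·(1 − t), for the scene radiance J. The sketch below assumes that model rather than the exact procedure of Ref. 4; the function name, the lower bound t_min = 0.1, and the option of passing a per-pixel airlight map are illustrative assumptions.

```python
import numpy as np


def recover_radiance(hazy, transmission, airlight, t_min=0.1):
    """Invert I = J * t + A * (1 - t) for J.

    hazy: (H, W, 3) image in [0, 1]; transmission: (H, W) map in [0, 1];
    airlight: scalar, length-3 color, or (H, W) local airlight map.
    """
    # Floor the transmission so dense-haze pixels do not amplify noise.
    t = np.clip(transmission, t_min, 1.0)[..., np.newaxis]
    a = np.asarray(airlight, dtype=float)
    if a.ndim == 2:                                # per-pixel airlight map
        a = a[..., np.newaxis]
    radiance = (hazy - a) / t + a
    return np.clip(radiance, 0.0, 1.0)


if __name__ == "__main__":
    clear = np.full((4, 4, 3), 0.2)
    t = np.full((4, 4), 0.5)
    hazy = clear * t[..., None] + 0.9 * (1.0 - t[..., None])
    print(np.allclose(recover_radiance(hazy, t, 0.9), clear))
```

In this form, pixels with high transmission are changed very little, while pixels with low transmission have more of the airlight removed.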

Multiplying the input image by the transmission map raised to an exponent smaller than 1 yields the dehazed image. This improves contrast and makes the scene’s components easier to see. The exponent is determined empirically from the scene parameters and the haze level. When the exponent is 1, the transmission map is applied to the input image linearly; when it is smaller than 1, the map is applied nonlinearly and produces a stronger dehazing effect. The exponent therefore sets the degree of nonlinearity in the dehazing process and can be tuned to improve the visual quality of the dehazed image (see Figs. 1 and 2).

Latest observations and insights

A variety of algorithms are available for dehazing images, from those based on image priors such as the DCP to those based on deep learning. Deep learning-based methods were found to improve on their more conventional counterparts in both quantitative measures and visual quality.

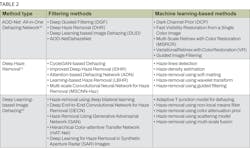

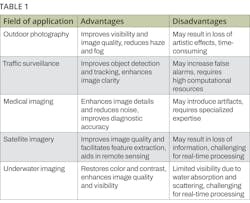

The authors found that deep learning-based approaches, such as AOD-Net, DehazeNet, and MSCNN, are better at handling complex hazy scenes with nonuniform haze and recover more detail from hazy photos. This underscores the broad promise of deep learning-based dehazing techniques for improving the clarity of hazy photos (see Table 1 and Fig. 3).

Artificial intelligence and machine learning

Image dehazing is a technique used to enhance image clarity by removing atmospheric haze or fog. The task is difficult because haze hides essential visual information such as color and contrast. Conventional image dehazing techniques are based on physical models of light propagation, but these methods can be computationally expensive and inaccurate. Recent years have seen an uptick in the use of artificial intelligence (AI) and machine learning (ML) approaches to image dehazing, and these approaches have brought substantial progress.

The following are a few instances of this:

AOD-Net: All-in-One Dehazing Network. Proposed by Li et al. in 2017, this method is an end-to-end trainable deep neural network that removes haze using a single input image [5].

DCP: Deep Contrast Prior for Image Dehazing. Proposed by Zhang et al. in 2018, this method uses a deep convolutional neural network to learn the underlying contrast properties of hazy images, and then uses this information to remove haze [6].

FFA-Net: Feature Fusion Attention Network for Single Image Dehazing. Proposed by Zhang et al. in 2019, this method uses a feature fusion attention network to selectively enhance image features while suppressing the noise and artifacts introduced during dehazing [7].

DehazeGAN: Dehazing using Generative Adversarial Networks. Proposed by Ren et al. in 2018, this method uses a generative adversarial network (GAN) to dehaze images. The GAN is trained to generate realistic images that are visually similar to the clear, haze-free image [8].

DHSN: Dual Hierarchical Network for Single Image Dehazing. Proposed by Cai et al. in 2019, this method uses a dual hierarchical network to decompose the hazy image into multiple scales and then processes each scale separately to remove haze [9].

One of the main advantages of using AI and ML techniques for image dehazing is that these methods can learn to adapt to different types of haze and lighting conditions. This means that the resulting models can be more robust and generalizable to different types of images and environments (see Table 2).

Conclusion

ML-based approaches use deep neural networks to learn the mapping between the input hazy image and the associated dehazed image. Filtering-based methods, in contrast, combine the input image with a number of mathematical and statistical techniques to estimate the depth map, which is then used to dehaze the image. Deep Guided Filtering, CycleGAN-based Dehazing, and Attention-based Dehazing Network are all examples of ML-based approaches. Filtering-based techniques include the DCP, haze-line detection, and haze removal with a non-local-means filter.

There are benefits and drawbacks to both approaches. ML-based methods have shown promise in terms of both image quality and computing efficiency, but they can suffer from overfitting and require large amounts of training data. Filtering-based algorithms, on the other hand, are straightforward to apply and do not need large quantities of training data, but they may be less effective in scenarios where their underlying assumptions do not hold.

Dehazing methods are used to restore clarity to photographs that have been degraded by fog or haze. The purpose of these methods is to boost the overall image quality and make it easier to see details. Image dehazing techniques can be broken down into two general groups: those that use a single image and those that use multiple images.

To eliminate the haze, single-image-based dehazing methods analyze a single input image and infer depth information from it. The DCP method is one such approach; it makes use of the fact that haze-free regions in most outdoor photographs tend to have relatively low intensity in at least one color channel. The DCP technique estimates the haze thickness from the minimum value of this dark channel and then uses that estimate to remove the haze from the image.

The color attenuation prior (CAP) method is another option; it works under the premise that haze changes the perceived color of distant objects in accordance with a predefined model. This technique calculates the image’s transmission map, which is then used to eliminate the haze and improve object visibility.

Multi-image-based dehazing methods estimate the depth and transmission maps from multiple photos of the same scene acquired under varying weather conditions. The fusion-based approach is one example; it combines several input images to estimate the transmission map and then removes the haze.

Image dehazing methods have a wide variety of uses, from surveillance and remote sensing to vehicle safety systems. These methods have been studied extensively in computer vision and image processing, and research continues with the goal of making them more efficient and accurate. Image dehazing algorithms, like any other computer vision technology, should be rigorously tested across a variety of datasets and environments to verify their consistency and adaptability.

REFERENCES

1. X. Guo, Y. Li, and H. Ling, IEEE Trans. Image Process., 26, 2, 982–993 (2016).

2. X. Ren, W. Yang, W.-H. Cheng, and J. Liu, IEEE Trans. Image Process., 29, 5862–5876 (2020); https://doi.org/10.1109/tip.2020.2984098.

3. Y. Liu, Z. Yan, T. Ye, A. Wu, and Y. Li, Eng. Appl. Artif. Intell., 116, 105373 (2022).

4. C. Ancuti, C. O. Ancuti, C. De Vleeschouwer, and A. C. Bovik, IEEE Trans. Image Process., 29, 6264–6275 (2020).

5. B. Li, X. Peng, Z. Wang, J. Xu, and D. Feng, Proc. IEEE Int. Conf. Comput. Vis., 4780–4788 (2017).

6. H. Zhang, V. A. Sindagi, and V. M. Patel, Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., 6990–6999 (2018).

7. T. Zhang, C. Xu, M. Li, and S. Wang, Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., 2289–2298 (2019).

8. W. Ren et al., Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., 3155–3164 (2018).

9. B. Cai, X. Xu, K. Jia, C. Qing, and D. Tao, Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., 10621–10630 (2019).

10. B. Li, X. Peng, Z. Wang, J. Xu, and D. Feng, IEEE Trans. Image Process., 27, 4, 2049–2063 (2018); https://doi.org/10.1109/tip.2018.2790139.

11. B. Li, X. Peng, and J. Wang, Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., 4990–4999 (2017); https://doi.org/10.1109/cvpr.2017.530.

12. W. Ren, J. Guo, Q. Zhang, J. Pan, and X. Cao, IEEE Trans. Image Process., 27, 4, 1945–1957 (2018); https://doi.org/10.1109/tip.2018.2790134.

About the Author

Bhawna Goyal

Assistant Professor, UCRD and ECE departments at Chandigarh University

Dr. Bhawna Goyal is an assistant professor in the UCRD and ECE department at Chandigarh University (Punjab, India), and in the Faculty of Engineering at Sohar University (Sohar, Oman).

Ayush Dogra

Assistant Professor - Senior Grade, Chitkara University Institute of Engineering and Technology

Dr. Ayush Dogra is an assistant professor (research) - senior grade at Chitkara University Institute of Engineering and Technology (Chitkara University; Punjab, India).

Vinay Kukreja

Professor, Chitkara University Institute of Engineering and Technology, Chitkara University

Vinay Kukreja is a professor at Chitkara University Institute of Engineering and Technology (Chitkara University; Punjab, India).

Jasgurpreet Singh Chohan

Professor, University Centre for Research and Development at Chandigarh University

Jasgurpreet Singh Chohan is a professor in the University Centre for Research and Development (UCRD) department at Chandigarh University (Punjab, India).

![FIGURE 3. Images in low light (a), dehazed images (b) [1], dehazed images (c) [2], and results (d) [3].](https://img.laserfocusworld.com/files/base/ebm/lfw/image/2023/05/2306LFW_dog_3.646646f2ba6e4.png?auto=format,compress&fit=max&q=45?w=250&width=250)