Collaborating with teams from Concordia University (Montreal, QC, Canada) and Meta Platforms Inc. (Menlo Park, CA), researchers from the Institut National de la Recherche Scientifique (INRS; Quebec City, QC) built a diffraction-gated real-time ultrahigh-speed mapping (DRUM) camera from off-the-shelf parts. It captures dynamic events such as molecular activities in a single exposure at nearly five million frames per second.

DRUM’s development began with the study of ultrafast imaging systems based on pulse front tilt, in which the intensity front of a broadband ultrashort pulse is tilted so that different parts of the beam arrive at different times. This coupling of time with space made the phenomenon particularly intriguing to researcher Jinyang Liang, an associate professor at INRS and Canada Research Chair in Ultrafast Computational Imaging.

But Liang also discovered limitations. “For example, pulse front tilt can image the dynamics of just a point or a line but not a two-dimensional (2D) plane,” he says. “Also, the dynamic scene must move at the light-speed level, which does not really apply to most scenarios. And the pulse with a tilted front is a femtosecond pulse, so if we use it to probe, it may pose the risk of sample damage.”

How it works

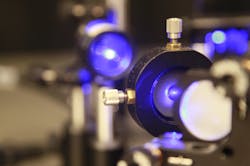

The DRUM camera uses time-varying optical diffraction as a time-gating method. A time gate controls when light is allowed to reach the camera sensor. With time-gating, a series of gates open and close in rapid succession, so each gate passes light from a different instant and produces its own frame on the sensor.
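To make the idea of a time gate concrete, the sketch below models a frame as the scene's intensity integrated over time, weighted by a gate function that is open only briefly. The Gaussian gate shape, the gate times, and the toy scene (a bright spot moving along a line) are assumptions chosen for illustration; they are not DRUM's actual gate profile or data.

```python
import numpy as np

# Toy 1D scene (hypothetical): a bright spot moving at constant speed.
X = np.linspace(0.0, 1.0, 200)    # normalized position along a line
T = np.linspace(0.0, 1.0, 2001)   # normalized time samples
DT = T[1] - T[0]

def scene(t):
    """Intensity along X at time t; the spot center moves from x=0.1 to x=0.9."""
    center = 0.1 + 0.8 * t
    return np.exp(-((X - center) / 0.02) ** 2)

def gate(t, t_open, width=0.02):
    """Hypothetical Gaussian time gate: transmission is near 1 only close to t_open."""
    return np.exp(-((t - t_open) / width) ** 2)

def gated_frame(t_open):
    """One frame: the scene integrated over time, weighted by one gate (Riemann sum)."""
    return sum(scene(t) * gate(t, t_open) for t in T) * DT

# Seven gates opening in rapid succession yield seven frames of the same event.
gate_times = np.linspace(0.2, 0.8, 7)
frames = [gated_frame(t_g) for t_g in gate_times]
spot_positions = [float(X[np.argmax(f)]) for f in frames]
print(np.round(spot_positions, 3))
```

Because each gate is short compared with the motion, each frame freezes the spot close to where it was at that gate's center time. In DRUM, the gates come from optical diffraction rather than from a mechanical or electronic shutter.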

Working on a project at the California Institute of Technology (Caltech; Pasadena, CA), Liang was introduced to a concept called space-time duality and how it is linked to pulse front tilt.

“I thought maybe I could try to find the spatial equivalent of pulse front tilt, so I started to write the corresponding equations,” Liang says. Working through them, he found that it was indeed possible.

“I needed an optical grating whose blazed angle can be rapidly changed,” Liang explains. “During this change, the diffraction envelope sweeps through diffraction orders at different spatial positions. This coupling can gate out frames at different time points. By putting these frames together, I can get an ultrafast movie!”
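Liang's description can be illustrated with a toy model of a blazed grating. Each diffraction order sits at a fixed direction, while the single-mirror diffraction envelope (a sinc² pattern centered on the mirrors' specular reflection direction) moves as the tilt changes. Every order therefore sees a brief pulse of light, a time gate, and the gates peak at different times. This sketch assumes normal incidence, a 100% fill factor, a linear tilt sweep, and illustrative values for wavelength, pitch, tilt range, and timing; none of these numbers come from the DRUM paper.

```python
import numpy as np

# Hypothetical parameters for illustration only (not taken from the DRUM paper).
WAVELENGTH = 0.64e-6      # m, assumed probe wavelength
PITCH = 7.56e-6           # m, assumed micromirror pitch
MIRROR_WIDTH = PITCH      # assume a 100% fill factor
TILT_START_DEG = -12.0    # assumed mirror tilt at the start of the transient
TILT_END_DEG = 12.0       # assumed mirror tilt at the end of the transient
TRANSIENT_S = 2e-6        # assumed duration of the tilt transient

def envelope_center(t):
    """Direction cosine of the specular direction (normal incidence, linear tilt sweep)."""
    frac = t / TRANSIENT_S
    tilt = np.deg2rad(TILT_START_DEG + (TILT_END_DEG - TILT_START_DEG) * frac)
    return np.sin(2.0 * tilt)  # a mirror tilted by theta reflects at 2*theta

def gate(order, t):
    """Relative intensity in one diffraction order at time t (toy sinc^2 envelope)."""
    u_m = order * WAVELENGTH / PITCH  # grating equation at normal incidence
    # np.sinc(x) = sin(pi*x) / (pi*x)
    return np.sinc(MIRROR_WIDTH * (u_m - envelope_center(t)) / WAVELENGTH) ** 2

t = np.linspace(0.0, TRANSIENT_S, 20001)
orders = range(-3, 4)  # seven orders, matching Liang's seven-room analogy
peak_times = {m: t[np.argmax(gate(m, t))] for m in orders}
for m, tp in peak_times.items():
    print(f"order {m:+d}: gate peaks at {tp * 1e9:7.1f} ns")
```

With the fill factor set to 100%, the envelope's zeros fall exactly on the neighboring orders whenever it is centered on one order, so in this model each order is "open" in turn while the others are essentially dark.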

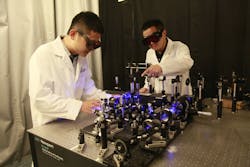

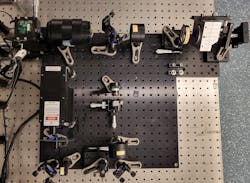

Liang’s next step after figuring out this concept was to implement it with the appropriate tools, including a digital micromirror device (DMD), a binary amplitude spatial light modulator widely used in projectors. But the team used the DMD in an unconventional way.

“To leverage the rapid change of the blazed angle, we operate during the transient of one DMD pattern to the other DMD pattern,” Liang says. “This is how we embody the concept in the DRUM photography system.”

Liang compares the DRUM photography technique to standing outside a seven-room building with a window in each room. The same movie plays in all rooms simultaneously while someone runs past each window “very, very, very quickly.”

“In the end, when the person runs in front of all seven rooms, they watch the entire movie,” Liang explains. “In this analogy, the movie is the dynamic scene we are trying to image. The seven rooms are the seven diffraction orders produced by the DMD. This means the dynamic scene is duplicated in each diffraction order. The fast-running person is the sweeping diffraction envelope of the DMD.”

During operation, the diffraction envelope rapidly sweeps through each diffraction order, and as it passes an order, it gates out a frame at a specific time point.
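Because the diffraction orders land at different positions on the sensor, one exposure holds all seven frames side by side, and reading out the movie amounts to cropping each order's region and stacking the crops in gate order. The sketch below assumes, purely for illustration, that the seven sub-images form a single horizontal row of equal-width tiles gated left to right; the function name `split_exposure` is hypothetical, and a real system would need calibrated tile positions and likely registration between the copies.

```python
import numpy as np

N_ORDERS = 7  # seven diffraction orders, as in Liang's seven-room analogy

def split_exposure(snapshot, n_orders=N_ORDERS, left_to_right=True):
    """Crop a single-exposure image into per-order frames, stacked in time order.

    Assumes (hypothetically) that the orders form one row of equal-width tiles.
    Returns an array of shape (n_orders, height, tile_width).
    """
    height, width = snapshot.shape
    if width % n_orders:
        raise ValueError("image width must be a multiple of the number of orders")
    tile = width // n_orders
    frames = [snapshot[:, k * tile:(k + 1) * tile] for k in range(n_orders)]
    if not left_to_right:
        frames.reverse()  # gates swept right to left
    return np.stack(frames)

# Usage with a synthetic snapshot in which tile k is filled with the value k.
h, w_tile = 4, 5
snapshot = np.concatenate(
    [np.full((h, w_tile), k, dtype=float) for k in range(N_ORDERS)], axis=1
)
movie = split_exposure(snapshot)
print(movie.shape)  # (7, 4, 5)
```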

Beating the DRUM

The INRS-led team is already eyeing real-world applications for this imaging approach. DRUM uses active illumination to probe a dynamic scene, which Liang says makes it well suited for LiDAR, “with certain technical adjustments.”

“The ultrahigh-speed 2D imaging indicates that we could get a depth map in one acquisition,” Liang says. “Both the deployed DMD and the camera work at kilohertz frame rates, indicating the depth map can be updated at a high rate.”

This would allow DRUM photography to perform highly accurate wide-field ranging with a high refresh rate, which in turn could help vehicles detect hazards early and avoid them in time.

The DRUM camera also shows potential for biomedical applications such as ultrasound-stimulated molecular detection of early-stage disease and targeted therapeutic drug delivery. It could someday be used to study light-matter interactions, as well.

What’s next?

The researchers are now working to boost DRUM’s performance.

“There is ample room for improvement in imaging speed and sequence depth, that is, how many frames DRUM photography can capture in a movie,” Liang says. An advanced microelectromechanical system (MEMS) micromirror array with nanosecond-level flipping time could push DRUM photography’s imaging speed toward the billion-frame-per-second level.
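A rough way to see why faster micromirrors matter: if, as a simplifying assumption, the gated frames are spread evenly across one mirror transient, the effective frame rate is about the number of frames divided by the transient duration. The function name is hypothetical, and the durations below are round illustrative numbers (the first chosen only so the result lands near the reported "nearly five million frames per second"), not measured DRUM or MEMS specifications.

```python
def effective_frame_rate(n_frames: int, transient_s: float) -> float:
    """Approximate frames per second if n_frames are gated evenly within one transient.

    Simplified model (an assumption): frame interval ~= transient_s / n_frames.
    """
    if n_frames <= 0 or transient_s <= 0:
        raise ValueError("n_frames and transient_s must be positive")
    return n_frames / transient_s

# Hypothetical examples: a microsecond-scale transient versus a nanosecond-scale one.
for label, transient in [("1.5 us transient", 1.5e-6), ("7 ns transient", 7e-9)]:
    print(f"{label}: ~{effective_frame_rate(7, transient):.2e} frames/s")
```

Under this model, shrinking the transient from microseconds to nanoseconds raises the frame rate by roughly the same factor of a thousand, which is the scaling behind the billion-frame-per-second outlook.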

“We now have a general-purpose and economical ultrahigh-speed imager for the future,” Liang adds. “We expect more insights into physical, chemical, and biological processes to be generated from the unprecedented observations made by DRUM photography.”

FURTHER READING

X. Liu, P. Kilcullen, Y. Wang, B. Helfield, and J. Liang, Optica, 10, 9, 1223–1230 (2023); https://doi.org/10.1364/optica.495041.

Justine Murphy | Multimedia Director, Digital Infrastructure

Justine Murphy is the multimedia director for Endeavor Business Media's Digital Infrastructure Group. She is a multiple award-winning writer and editor with more than 20 years of experience in newspaper publishing as well as public relations, marketing, and communications. For nearly 10 years, she has covered all facets of the optics and photonics industry as an editor, writer, web news anchor, and podcast host for an internationally reaching magazine publishing company. Her work has earned accolades from the New England Press Association as well as the SIIA/Jesse H. Neal Awards. She received a B.A. from the Massachusetts College of Liberal Arts.