Neuroscience yields new tools for image processing

James O. Gouge, Sally B. Gouge, and Peter Krueger

Current neurobiological research is providing new insights into how the brain stores knowledge, and these insights in turn open interesting possibilities in image processing. BioComputer Research (BCR; Summerville, GA) has applied some of these recent biological findings to develop a processing method called Junctives/Image Metamorphosis, which provides a degree of flexibility in machine learning that has not yet been implemented with more-traditional biocomputing methods such as neural networks.

The motivation for a biocomputing approach to image processing is the need, ultimately, to get beyond conventional mathematics-based methods. Standard approaches based on edge/contrast enhancement, filtering algorithms, convolutions, histograms, and fast Fourier transforms treat an image as an array of numbers. An ideal image-processing system would instead rely on less computation-intensive, more intuitive methods that do not simply process arrays of pixels but actually assess the visual relationships among picture elements. Initial work on this at BCR resulted in a patented system of machine-based algorithms for selecting distinctive pattern information from a pixel-generated image (see Laser Focus World, Feb. 1996, p. 146).
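
For comparison, here is a toy illustration of two of the conventional array-based operations named above: a gray-level histogram and a 3x3 Laplacian edge filter. The function names and the sample image are illustrative only. Both operations simply do arithmetic on pixel values without any notion of what the picture elements are.

```python
from collections import Counter

Image = list[list[int]]

def histogram(img: Image) -> Counter:
    """Count how many pixels take each gray level."""
    return Counter(v for row in img for v in row)

def filter3x3(img: Image, kernel: list[list[int]]) -> Image:
    """Slide a 3x3 kernel over every interior pixel (border pixels are skipped).

    This is correlation; for a symmetric kernel such as LAPLACIAN it is
    identical to convolution."""
    h, w = len(img), len(img[0])
    out: Image = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            acc = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    acc += kernel[dy + 1][dx + 1] * img[y + dy][x + dx]
            row.append(acc)
        out.append(row)
    return out

# Laplacian edge kernel: zero on flat regions, large where intensity jumps.
LAPLACIAN = [[0, 1, 0],
             [1, -4, 1],
             [0, 1, 0]]

img = [[0, 0, 0, 0],
       [0, 9, 9, 0],
       [0, 9, 9, 0],
       [0, 0, 0, 0]]
print(histogram(img))             # Counter({0: 12, 9: 4})
print(filter3x3(img, LAPLACIAN))  # [[-18, -18], [-18, -18]]
```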

The smooth functioning of the human brain involves more than sophisticated relational processing, however. It also involves a highly fluid data-storage method in which information or knowledge is stored not within the brain cells themselves, as was once thought, but in the pattern of synaptic connections between cells. In fact, experiments have shown that the brain's image-processing method is so fluid that the brains of two identical insects viewing the same scene will devise different synaptic patterns to store the same image data. BCR's approach to implementing this method in an image-processing system has been to develop a system of machine synapses called "junctives" and to integrate it with the relational "image-metamorphosis" system described below.
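
The idea that knowledge can live entirely in connections, with no content stored in any single node, can be illustrated with a toy model. The sketch below is a generic illustration, not BCR's junctives implementation; the class `ConnectionMemory` and its methods are hypothetical. Each learner recruits hidden nodes at random, so two learners trained on identical data can end up with different wiring while still recalling the same labels, loosely mirroring the insect experiments.

```python
import random
from collections import Counter
from typing import Optional

Node = tuple[str, object]  # ("feat", name), ("hid", index) or ("lab", label)

class ConnectionMemory:
    """Hypothetical toy memory: no node holds any content; a learned
    association exists only as a set of directed connections between nodes."""

    def __init__(self, seed: int, n_hidden: int = 1000):
        self._rng = random.Random(seed)
        self._free = list(range(n_hidden))  # hidden nodes not yet recruited
        self.edges: set[tuple[Node, Node]] = set()

    def learn(self, features: set[str], label: str) -> None:
        # Recruit a free hidden node picked at random: which node is used
        # depends on this learner's own random history, not on the data.
        idx = self._free.pop(self._rng.randrange(len(self._free)))
        hidden = ("hid", idx)
        for f in features:
            self.edges.add((("feat", f), hidden))
        self.edges.add((hidden, ("lab", label)))

    def recall(self, features: set[str]) -> Optional[str]:
        # Each presented feature votes for the hidden nodes it connects to.
        votes: Counter = Counter()
        for src, dst in self.edges:
            if src[0] == "feat" and src[1] in features and dst[0] == "hid":
                votes[dst] += 1
        if not votes:
            return None
        winner = votes.most_common(1)[0][0]
        # Follow the winning hidden node's outgoing connection to its label.
        for src, dst in self.edges:
            if src == winner and dst[0] == "lab":
                return dst[1]
        return None

a, b = ConnectionMemory(seed=1), ConnectionMemory(seed=2)
for mem in (a, b):
    mem.learn({"runway", "taxiway", "tower"}, "airport")
    mem.learn({"water", "shoreline", "dam"}, "reservoir")
print(a.recall({"runway", "tower"}), b.recall({"runway", "tower"}))  # airport airport
print(a.edges == b.edges)  # the two wirings need not match
```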

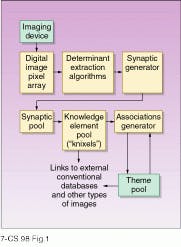

In the junctives technology, the first step is to extract and encode pixel-pattern components of the image to form determinants, which are then fed into a synaptic generator (see Fig. 1). Much as the human brain forms synapses, or junctions, during learning, the machine forms synapses as it is taught to recognize features and objects. As it is trained on the region-of-interest window in the current image, the computer screen reports the number of new synapses formed. These synapses become part of the synaptic network that makes up the basal interconnective paths to the knowledge elements, or "knixels." Knixels are the basic elements learned by the computer: they are taken from the "knixel pool" and interconnected by way of the newly generated synapses. Knixels can be associated to form themes, which can in turn be linked to external databases.
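
BCR's description of the synaptic generator stops at the level of Fig. 1, so the following is only a minimal sketch of that flow under stated assumptions. Everything named here is hypothetical: determinants are assumed to be 2x2 pixel-block codes (which pixels lie above the block mean, plus a coarse intensity level for 8-bit pixels), a synapse is assumed to be a link from a determinant to a named knixel, and training on a region-of-interest window returns the count of new synapses formed.

```python
from collections import Counter
from typing import Optional

Image = list[list[int]]
Determinant = tuple[int, int]    # (4-bit local pattern, coarse intensity level)
ROI = tuple[int, int, int, int]  # (y0, x0, y1, x1), half-open bounds

def extract_determinants(img: Image, roi: ROI) -> list[Determinant]:
    """Hypothetical encoder: each 2x2 block inside the ROI becomes a code
    recording which of its pixels lie above the block mean, plus the block
    mean quantized into 4 levels (assumes 8-bit pixels, 0..255)."""
    y0, x0, y1, x1 = roi
    dets = []
    for y in range(y0, y1 - 1):
        for x in range(x0, x1 - 1):
            block = (img[y][x], img[y][x + 1], img[y + 1][x], img[y + 1][x + 1])
            mean = sum(block) / 4
            bits = sum(1 << i for i, v in enumerate(block) if v > mean)
            dets.append((bits, int(mean) // 64))
    return dets

class JunctiveSketch:
    """Hypothetical stand-in for a synaptic generator feeding a knixel pool."""

    def __init__(self) -> None:
        self.synapses: dict[Determinant, set[str]] = {}  # determinant -> knixels
        self.themes: dict[str, set[str]] = {}            # theme -> knixels

    def train(self, img: Image, roi: ROI, knixel: str) -> int:
        """Connect every determinant in the ROI to the named knixel and
        return how many synapses were new (the on-screen training report)."""
        new = 0
        for det in set(extract_determinants(img, roi)):
            targets = self.synapses.setdefault(det, set())
            if knixel not in targets:
                targets.add(knixel)
                new += 1
        return new

    def recognize(self, img: Image, roi: ROI) -> Optional[str]:
        """Return the knixel reached by the most synaptic paths from the ROI."""
        votes: Counter = Counter()
        for det in extract_determinants(img, roi):
            for knixel in self.synapses.get(det, ()):
                votes[knixel] += 1
        return votes.most_common(1)[0][0] if votes else None

    def form_theme(self, name: str, knixels: set[str]) -> None:
        """Associate already-learned knixels into a named theme."""
        self.themes[name] = set(knixels)

# Left half dark (water-like), right half bright (metal-roof-like).
scene = [[10, 10, 10, 200, 200, 200] for _ in range(4)]
net = JunctiveSketch()
print(net.train(scene, (0, 0, 4, 3), "water"))     # 1
print(net.train(scene, (0, 0, 4, 3), "water"))     # 0
print(net.train(scene, (0, 3, 4, 6), "building"))  # 1
print(net.recognize(scene, (1, 0, 3, 2)))          # water
```

In this sketch, training the same window twice forms no new synapses, which is one simple way a new-synapse count could signal that a region has already been learned.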

This machine neuroscience imaging process was demonstrated in a feasibility study of various features and objects in radar images from an airborne Environmental Research Institute of Michigan (Ann Arbor, MI) synthetic-aperture-radar device. The study was done in conjunction with the National Imagery and Mapping Agency (NIMA; Chantilly, VA) in late 1996.

NIMA provided unclassified images of various airports in the USA and Bosnia, and the computer was taught to recognize features and objects of interest in them; these features then became knixels. Because most airports share basic structures or knowledge elements, such common elements served as the knixels. As the machine learned the differences among the images of the various airports, it became possible to form themes. The themes allowed the machine to identify which airport it was processing and to display that identification on the computer screen.

A southern California airport was the first airport used to train the device (see Fig. 2). It was trained on features such as aircraft, control towers, runways, taxiways, vegetation, and metal buildings such as hangars. Each of these became a knixel, and together they formed the theme "airport." The machine was then told the name of that particular airport, completing its identity.

When the system was tested on other types of airports (commercial, military, and with and without control towers), it recognized them using its knowledge base. It associated each image with the theme "airport" but could also distinguish each of the other airports from the southern California training image. Eventually the machine was taught to recognize and identify each airport by name or geographical location, and it named these airports correctly every time it was tested.
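
One way to picture the difference between belonging to the theme "airport" and being a specific, named airport is sketched below. The scheme is an assumption for illustration only: a theme is modeled as a set of knixels that must all be detected, each named site as a profile of knixel counts, and identification as picking the stored profile with the highest cosine similarity. The site names and counts are invented placeholders, not data from the NIMA study.

```python
import math
from collections import Counter

# Hypothetical theme: knixels that any airport image is expected to contain.
AIRPORT_THEME = {"runway", "taxiway", "aircraft"}

def matches_theme(found: Counter, theme: set[str]) -> bool:
    """An image belongs to a theme if every knixel in the theme was detected."""
    return all(found[k] > 0 for k in theme)

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two knixel-count profiles."""
    dot = sum(a[k] * b[k] for k in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def identify(found: Counter, named: dict[str, Counter]) -> str:
    """Name the stored site whose knixel profile is most similar."""
    return max(named, key=lambda name: cosine(found, named[name]))

# Hypothetical stored profiles (counts of each knixel detected per site).
named_sites = {
    "site_A": Counter(runway=2, taxiway=4, aircraft=10, control_tower=1, hangar=3),
    "site_B": Counter(runway=1, taxiway=2, aircraft=2, vegetation=8),
}
query = Counter(runway=2, taxiway=3, aircraft=9, control_tower=1, hangar=3)
if matches_theme(query, AIRPORT_THEME):
    print("airport:", identify(query, named_sites))  # airport: site_A
```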

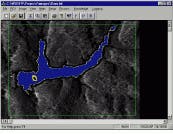

Other BCR studies included the recognition of objects that had not been evident in the original, unprocessed radar image. For example, a Bosnian lake and dam were studied (see Fig. 3). In the unprocessed image, there appears to be no land mass in the lake itself. In the processed image, however, a mass appears that could be a small island in the lake. The water is highlighted in blue and the shoreline in yellow. Training also gave the image analysis a context-sensitive quality: the system could detect any change relative to an image it had been shown previously.
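
The ability to flag changes relative to a previously shown image can be approximated, far more crudely than the context-sensitive analysis described above, by comparing quantized block summaries of a stored reference image with those of a new image. The functions below are hypothetical and implement a plain block-comparison baseline rather than BCR's method; the 2x2 block size and 32-level bins are arbitrary choices.

```python
Image = list[list[int]]

def block_levels(img: Image, size: int = 2) -> dict[tuple[int, int], int]:
    """Summarize each non-overlapping size x size block by its mean intensity,
    quantized into bins of 32 gray levels so small fluctuations are ignored."""
    levels = {}
    for y in range(0, len(img) - size + 1, size):
        for x in range(0, len(img[0]) - size + 1, size):
            vals = [img[y + dy][x + dx] for dy in range(size) for dx in range(size)]
            levels[(y, x)] = (sum(vals) // len(vals)) // 32
    return levels

def changed_blocks(reference: Image, current: Image, size: int = 2) -> list[tuple[int, int]]:
    """Top-left corners of blocks whose quantized level differs from the reference."""
    ref, cur = block_levels(reference, size), block_levels(current, size)
    return sorted(pos for pos, level in ref.items() if cur.get(pos) != level)

reference = [[20] * 4 for _ in range(4)]
current = [row[:] for row in reference]
current[0][0] = 25  # small fluctuation: stays in the same bin
for y in (2, 3):
    for x in (2, 3):
        current[y][x] = 150  # new bright object in the lower-right block
print(changed_blocks(reference, current))  # [(2, 2)]
```

Coarse binning keeps small intensity fluctuations from being reported as changes, at the cost of missing changes that stay within a single bin.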

The training algorithms in the junctives technology are robust and can be used for feature recognition on any digital imagery, not just for features formed from reflected energy such as ultrasound and radar. Imaging modalities other than radar and ultrasound studied by BCR have included electro-optical, x-ray, infrared, intraluminal ultrasound, raw magnetic-resonance data, and computed tomography. The algorithms are independent of the imaging modality chosen and allow the machine to learn quickly and efficiently.

Applications explored to date have included tracking of vessel blood flow, analysis of infrared images from military airborne sensors, analysis of x-ray images for security applications (identification of threat materials and contraband), and analysis of images for biometric purposes (face recognition). Upcoming applications include analysis of multispectral data from an airborne probe for features such as precious minerals and rare plant species. A presentation of the data from the NIMA radar study was made at the AeroSense conference (Orlando, FL; April 1998) by NIMA senior scientist Peter Krueger (Ref. 1).

Beyond the research arena, BCR has collaborated successfully in medical studies that show the ability of its system to track temperature and tissue changes in the human prostate gland using ultrasound during the course of thermal therapy. In conjunction with a major medical company, BCR expects to have a product on the market by the end of the year (see www.biocomputer.com for more information).

REFERENCE

1. P. G. Krueger, S. B. Gouge, and J. O. Gouge, "Radar Image Analysis Utilizing Junctive Image Metamorphosis," Proc. SPIE AeroSense '98, Vol. 3370 (1998).

FIGURE 1. In the synaptic process flow, the junctive image-analysis method generates synaptic pathways based upon pattern information generated by preselected determinants and thus forms the pathways to the basic knowledge elements for machine learning.

FIGURE 2. Unprocessed radar image of southern California airport provided initial data for pilot study with the National Imagery and Mapping Agency.

FIGURE 3. Unprocessed radar image shows no land mass in lake (top); junctive image processing was able to pick it out (bottom).

JAMES O. GOUGE is vice president of R&D and SALLY B. GOUGE is CEO at BioComputer Research, Summerville, GA 30747. PETER KRUEGER is senior scientist at the National Imagery and Mapping Agency, MSC-3, Chantilly, VA 20151-1715.