Virtual Reality Technology: Digital holographic tomography creates true 3D virtual reality

Holography, with its promise to create realistic three-dimensional (3D) scenes instead of flat 2D images, continues to be a major subject of photonics research.

To obtain not only the intensity information of an object but also its depth profile, Partha Banerjee's research group at the Holography and Metamaterials Lab at the University of Dayton (Dayton, OH) is using a Mach-Zehnder interferometer setup to scan different faces of an object and record a series of holograms, each of which contains both intensity and phase (or depth) information about the illuminated surface. The holograms are then reconstructed and tomographically combined to create a true 3D rendering of the object that is stored as a point cloud [1]. Using commercial Microsoft HoloLens software, the object can then be viewed in full 3D detail for a variety of virtual reality (VR) applications.

Recording, retrieving, and displaying phase

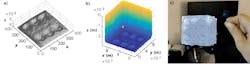

To record holograms of the selected object (a six-sided die, in this case), the object is illuminated by a 514.5 nm argon-ion laser in a Mach-Zehnder configuration as it is rotated about its x- and y-axes (see figure). The laser beam is spatially filtered and expanded so that it illuminates the entire face of the object. Light reflected or scattered from the object is combined with the reference beam, completing the Mach-Zehnder setup.
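The reason the reference beam matters can be shown with a small numerical sketch. A sensor responds only to intensity, so the phase of the object beam is lost unless the beam interferes with a known reference. The function name, amplitudes, and phase values below are illustrative assumptions, not parameters from the Dayton setup.

```python
import cmath
import math


def recorded_intensity(object_amp, object_phase, reference_amp=1.0, reference_phase=0.0):
    """Intensity a sensor pixel would record for two superposed beams.

    Both beams are modeled as single complex amplitudes (a simplifying assumption).
    """
    obj = cmath.rect(object_amp, object_phase)
    ref = cmath.rect(reference_amp, reference_phase)
    return abs(obj + ref) ** 2


# Hypothetical object phases from 0 to 2*pi in steps of pi/4.
phases = [k * math.pi / 4 for k in range(9)]

# With the reference beam, the recorded intensity varies with the object phase.
with_reference = [recorded_intensity(0.5, p) for p in phases]

# Without it (reference amplitude 0), every phase gives the same reading.
without_reference = [recorded_intensity(0.5, p, reference_amp=0.0) for p in phases]
```

In this toy model the recorded value swings between 0.25 and 2.25 as the object phase changes, while the object beam alone always reads 0.25. That dependence on phase is what makes depth recoverable later.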

A charge-coupled device (CCD) sensor records the holograms that result from interference between the reference beam and the object beams from different faces of the die. Standard Fresnel propagation techniques are then used to reconstruct intensity and phase data from each of the recorded holograms. The research group has developed specialized graphical user interfaces (GUIs) for efficiently recording and reconstructing holograms.
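The article does not say which Fresnel propagation variant the group uses. As one common possibility, the sketch below applies a single-FFT discrete Fresnel transform with NumPy. The reference wave is assumed to be a unit-amplitude plane wave at normal incidence, constant prefactors are dropped, and the distance, pixel pitch, and random test frame are hypothetical stand-ins for real recording parameters.

```python
import numpy as np


def fresnel_reconstruct(hologram, wavelength, distance, pixel_pitch):
    """Single-FFT discrete Fresnel transform; returns (intensity, wrapped phase)."""
    rows, cols = hologram.shape
    scale = np.pi / (wavelength * distance)

    # Sensor-plane coordinates, centered on the optical axis.
    x = (np.arange(cols) - cols // 2) * pixel_pitch
    y = (np.arange(rows) - rows // 2) * pixel_pitch
    xx, yy = np.meshgrid(x, y)

    # Quadratic phase applied to the hologram before the Fourier transform.
    chirp_in = np.exp(1j * scale * (xx**2 + yy**2))
    spectrum = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(hologram * chirp_in)))

    # Sample spacing in the reconstruction plane depends on wavelength and distance.
    xi = (np.arange(cols) - cols // 2) * wavelength * distance / (cols * pixel_pitch)
    eta = (np.arange(rows) - rows // 2) * wavelength * distance / (rows * pixel_pitch)
    xi_grid, eta_grid = np.meshgrid(xi, eta)

    # Quadratic phase in the reconstruction plane (constant prefactors omitted).
    chirp_out = np.exp(1j * scale * (xi_grid**2 + eta_grid**2))
    field = chirp_out * spectrum
    return np.abs(field) ** 2, np.angle(field)


# Stand-in for a recorded CCD frame; a real hologram would be loaded from disk.
hologram = np.random.default_rng(0).random((32, 48))
intensity, wrapped_phase = fresnel_reconstruct(
    hologram, wavelength=514.5e-9, distance=0.3, pixel_pitch=5e-6
)
```

The phase returned here is wrapped into the interval from -pi to pi, which is why a separate unwrapping step is needed before depth can be read off.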

The next step in the process is to find the true phase value of each pixel in the reconstructed hologram images by using PUMA, a phase-unwrapping technique available in MATLAB software. From this phase data, the depth information for each face can be retrieved. Intensity and depth information from all of the reconstructed holograms is used to generate a point cloud from which the true 3D image can be formed, as in tomography, and missing information about the object can be numerically extrapolated. Median filters are used to minimize noise, and specially developed numerical algorithms are used to correct for surface (or phase) curvatures resulting from the optical system.
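To illustrate why unwrapping is needed and how phase maps to depth, here is a minimal sketch. It does not implement PUMA, which works on 2D images. It swaps in a much simpler one-dimensional unwrapping that assumes neighboring samples differ by less than pi. The depth conversion assumes near-normal illumination in reflection, so light covers each height step twice. The synthetic surface is hypothetical.

```python
import cmath
import math

WAVELENGTH = 514.5e-9  # argon-ion line quoted in the article, in meters


def wrap(phase):
    """Fold a phase value into the interval (-pi, pi], as a reconstruction would."""
    return cmath.phase(cmath.exp(1j * phase))


def unwrap_1d(wrapped):
    """Simple 1D unwrapping: remove 2*pi jumps between neighboring samples."""
    unwrapped = [wrapped[0]]
    for previous, current in zip(wrapped, wrapped[1:]):
        step = current - previous
        step -= 2 * math.pi * round(step / (2 * math.pi))
        unwrapped.append(unwrapped[-1] + step)
    return unwrapped


def phase_to_depth(phase, wavelength=WAVELENGTH):
    """Reflection at normal incidence (assumed): 2*pi of phase is half a wavelength."""
    return phase * wavelength / (4 * math.pi)


# Hypothetical tilted surface rising 2 micrometers over 200 samples.
true_depth = [2e-6 * i / 199 for i in range(200)]
wrapped = [wrap(4 * math.pi * z / WAVELENGTH) for z in true_depth]
recovered_depth = [phase_to_depth(p) for p in unwrap_1d(wrapped)]
```

The wrapped values never leave the range from -pi to pi even though the surface rises by several wavelengths. Only after unwrapping does the recovered profile follow the original ramp.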

Finally, the point cloud file is imported into the HoloLens using two 3D software products: Blender (www.blender.org), which is open source, and Unity (https://unity3d.com). Once the file is imported, the HoloLens can be used to manipulate the true 3D image in virtual space using hand gestures or voice commands, or be programmed for other forms of user manipulation and viewing.
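The article does not name the point cloud file format. As an assumption, the sketch below writes an ASCII PLY file, a simple format that Blender can import. The helper names, the tiny 2 x 2 depth map, and the pixel pitch are all hypothetical.

```python
import io


def depth_map_to_points(depth, intensity, pixel_pitch):
    """Turn per-pixel depth and intensity (0..1) into (x, y, z, gray) tuples."""
    points = []
    for r, (depth_row, intensity_row) in enumerate(zip(depth, intensity)):
        for c, (z, value) in enumerate(zip(depth_row, intensity_row)):
            gray = max(0, min(255, round(255 * value)))
            points.append((c * pixel_pitch, r * pixel_pitch, z, gray))
    return points


def write_ascii_ply(points, stream):
    """Write points as an ASCII PLY vertex list with a gray color per vertex."""
    stream.write("ply\nformat ascii 1.0\n")
    stream.write(f"element vertex {len(points)}\n")
    for name in ("x", "y", "z"):
        stream.write(f"property float {name}\n")
    for name in ("red", "green", "blue"):
        stream.write(f"property uchar {name}\n")
    stream.write("end_header\n")
    for x, y, z, gray in points:
        stream.write(f"{x:.9g} {y:.9g} {z:.9g} {gray} {gray} {gray}\n")


# Hypothetical reconstruction of one face: depth in meters, intensity normalized.
depth = [[0.0, 1e-6], [2e-6, 3e-6]]
intensity = [[0.0, 0.5], [0.75, 1.0]]
points = depth_map_to_points(depth, intensity, pixel_pitch=5e-6)

buffer = io.StringIO()  # replace with open("face.ply", "w") to write a file
write_ascii_ply(points, buffer)
ply_text = buffer.getvalue()
```

A full object would need the points from every face rotated into a common coordinate frame before they are written out. That step is omitted here.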

"The combination of digital holography and tomographic imaging provides a powerful method of capturing and visualizing the complete details of a 3D object in a virtual reality environment with no restriction on viewing angles," says Paul McManamon of Exciting Technology (http://excitingtechnology.com) and research professor at the University of Dayton. "In the future, the point cloud data from holographic tomography could also be imported to a 3D printer for exactly replicating the 3D object." Similar 3D visualization of DNAs in VR could be a powerful tool in biological applications.

This work was the topic of a University of Dayton MS thesis by Alex Downham, who was partly funded by Protobox (http://protobox.com). Protobox kindly provided the HoloLens that was used for the VR display.

REFERENCE

1. A. Downham et al., SPIE Optics + Photonics paper 10396-39, San Diego, CA (Aug. 8, 2017).

Gail Overton | Senior Editor (2004-2020)
