Photonic Frontiers: Cameras and Arrays: Looking Back/Looking Forward: An electro-optical revolution—the transformation of cameras

Film was the state of the art in cameras when Laser Focus began publication in 1965. Even top-secret spy satellites used film, which had to be packaged into special canisters and dropped from orbit to be caught in the air by military jets. Electronic imaging was limited to television-type cameras based on vacuum tubes and image intensifiers. They came in a wide variety; the 1975 edition of the RCA Electro-Optics Handbook tabulated 29 types of imaging tubes.1

But Laser Focus was launched as a laser magazine, and the images that captured the imagination of founding editor Bill Bushor were laser-based holograms.

The holography boom

Our first issue, dated January 1, 1965, devoted five of its 20 pages to '3D Lasography.' Emmett Leith and Juris Upatnieks of the University of Michigan (Ann Arbor), shown in Fig. 1, had reported their first three-dimensional laser holograms in April 1964 at the Optical Society of America's spring meeting. A long line queued up after their talk to see a 3D holographic image projected by a helium-neon laser in a Spectra-Physics hotel suite (see Fig. 2).

The early 1950s had seen a wave of stereoscopic 3D movies based on inexpensive polarizing filters from the Polaroid Corporation. Holography was fundamentally different because it reproduced the wavefront of light from a 3D object and delivered it to the eye. The coherence of laser light was vital for recording the hologram, and the eye accepted the reconstructed wavefront as a true 3D image that you could move around.

Holography intrigued the public and the optics community, and quickly became a major research field. A five-year index in our April 1970 issue lists more articles on holography than on any other laser application. We covered an early effort to make holographic movies in the September 15, 1965 issue (p. 5–6), and nondestructive testing with holographic interferometry in September 1966 (p. 30–32). All 9000 copies of our April 1967 issue (p. 12) included a hologram, the first distributed in any magazine. (It came from a new company in Ann Arbor, MI, Photo Technical Research, now part of the Optometrics Corporation, which occupies the same Littleton, MA address that Laser Focus World did for much of the 1980s.)

Holography was big in mid-1970s entertainment. Our May 1976 cover featured a scene from the film Logan's Run in which Logan is interrogated with six 360-degree holograms of his head in the background. MGM said it was the first movie to use a hologram (see Fig. 3). Our December 1976 issue reported that the Museum of Holography had opened in a 5000-sq-ft space in Manhattan, featuring historic holograms by Leith and Upatnieks, Stephen Benton, and others (p. 28). Our July 1977 issue described the booming business of white-light holographic displays and showed Lloyd Cross's famed multiplex hologram 'The Kiss,' in which fellow holographer Pam Brazier blew a kiss as the viewer walked around it (see Fig. 4). The most famous movie "hologram," of Princess Leia in the first Star Wars movie, released in May 1977, was not a hologram at all.

The solid-state imaging revolution

We did not cover the revolution in solid-state imaging that began in 1969, when Willard Boyle and George E. Smith of Bell Labs invented the charge-coupled device as an electronic shift register for data processing. Imaging expert Michael Tompsett, also at Bell Labs, adapted CCD arrays for imaging.2 He demonstrated CCD color imaging in 1973, and reached the 525-line resolution of the NTSC analog television standard in 1976. The National Reconnaissance Office launched the first spy satellite to use electronic imaging in December 1976, reportedly with an 800 × 800 pixel CCD array.

The impact of the new technology was clear. "The simplicity of the basic CCD combined with the fact that it can be operated as an imager and/or shift register has resulted in a remarkably versatile device," said the 1978 edition of The Infrared Handbook. The book devoted a full chapter to CCDs, focused largely on indium arsenide, indium antimonide, and mercury-cadmium telluride for infrared applications.3

The only cameras the magazine covered in those days were specialized types for laboratory measurements. A full-page ad in the August 1977 issue from Hadland-Photonics Ltd. offered picosecond streak cameras for optical, vacuum ultraviolet, or x-ray wavelengths (see Fig. 5). Laser fusion was an application of particular interest.

The Magazine of Electro-Optics Technology

Electronic imaging came into focus when we acquired Electro-Optical Systems Design in 1983 to become Laser Focus, the Magazine of Electro-Optics Technology. "Computed tomography and NMR techniques as well as digital radiography...are solidly built" on digital imaging technology, wrote Andrew Tescher of the Aerospace Corporation (Los Angeles, CA) in our October 1984 issue (p. 139–146). Digital image archiving "has seen early implementation in medicine and broadcast television." He predicted a coming era of 'personal digital image processing,' with phone companies soon to provide "one missing link, an inexpensive high-speed digital communication network" transmitting at 56 kbit/s.

In a December 1989 imaging review, senior contributing editor Leonard Ravich predicted growth in automated document processing ranging from medical images to government documents. With "super-workstations" starting to replace dedicated imaging computers, estimates of the potential document automation market ranged from $300 million to $1 billion. "Paper will not disappear from the office, yet if only 10% to 20% of previously paper-bound information is captured by imaging processing, there is a potential to more than double the worldwide base of computer technology," he wrote.

Our December 1994 cover story told how CCDs were starting to replace film. CCD arrays "offer an ingenious solution to a fundamental problem of detector arrays: reading the image off the array," wrote contributing editor Thomas Higgins (p. 53). "Arrays containing 64 million pixels are within reach today." The same issue told how 256 × 256 pixel InSb focal plane arrays from Hughes Santa Barbara Research Center (Goleta, CA) had allowed ground-based infrared observations of the fragments of Comet Shoemaker-Levy 9 hitting Jupiter (see Fig. 6). Hughes Santa Barbara was developing 1024 × 1024 InSb arrays, and Rockwell International (Anaheim, CA) was developing 1024 × 1024 arrays of HgCdTe.

Our June 1995 issue (p. 34) reported that the new Wide Field/Planetary Camera recently installed on the Hubble Space Telescope had achieved an effective resolution of 0.07 arcsec when imaging the asteroid Vesta, showing dark and light spots at a distance of 252 million kilometers. In the same issue (p. 89), Steve Montellese of Industrial Perception Systems (Allison Park, PA) wrote that advances in CCD technology, computers, and software "have enabled the recent introduction of true color-vision systems," replacing black-and-white inspection. Applications included inspecting pill packaging, verifying portions and placement of food in frozen dinners, counting live chickens, and examining multicolor printing.

From camera phones to terahertz

By the early 1990s, developers were testing digital cameras in mobile phones. In June 1997, entrepreneur Philippe Kahn used one to snap a picture of his newborn daughter and share it with some 2000 people. The year 2000 brought the first commercial camera phone, made by Sharp with a 110,000-pixel chip and sold in Japan for $500 by J-Phone (now SoftBank Mobile).

We largely ignored more sophisticated cameras. In March 2000 we wrote that industrial CCD cameras "are typically capable of capturing up to 1800 frames per minute," equal to the 30 frames/s of interlaced analog television. Progressive scanning could reach 60 frames/s. Our April 2000 issue told how high-sensitivity CCDs could monitor changes in thousands of spots of DNA on a microarray slide.

In July 2005 (p. 109), contributing editor Yvonne Carts-Powell wrote that terahertz imaging systems "are providing information that isn't available in other parts of the spectrum." Terahertz antennas used time-of-flight imaging to achieve depth resolution of 8 to 10 μm, compared with lateral resolution of 100 to 200 μm. They could record internal surfaces and interfaces, but turnkey systems cost $200,000 to $400,000.

In November 2009, senior editor Gail Overton described how 320 × 240 bolometer arrays in cars can spot pedestrians or animals at night at three times the distance at which they become visible in low-beam headlights. Autoliv (Stockholm, Sweden) said its night-vision system gave drivers "an extra set of eyes on the road at night." (See Fig. 7.)

Looking forward

The past half-century has seen a revolution in consumer cameras. Today, complementary metal oxide semiconductor (CMOS) webcams with video quality rivaling that of a massive television camera from the 1960s sell for less than $10. Cameras smaller than pencil erasers have become standard in mobile phones, so people can pull out their phone, snap a picture, and share it with friends and family in a moment.

New technology lets us put cameras almost anywhere. Outdoor webcams let us watch birds nest, lay their eggs, and raise their young in real time. Cameras read the license plates of vehicles speeding through highway toll booths, and show drivers what's lurking in the blind spots behind their cars before they back up.

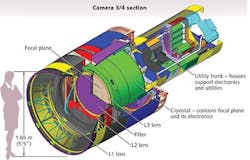

On a larger scale, powerful astronomical cameras are expanding our view of the universe. The 16 Mpixel ultraviolet/visible camera on Hubble's Wide Field Camera 3, installed in 2009, is dwarfed by the 3.2 Gpixel camera being assembled for the Large Synoptic Survey Telescope (LSST; see Fig. 8). The f/1.2 telescope uses the huge array to stare for 30 s at a field 3.5° wide, 3000 times the area covered by the WFC3 camera.

Paul O'Connor of the Brookhaven National Laboratory (Upton, NY), head of the LSST camera team, says that massive parallelism can read out a 3.2 Gpixel image in just 2 s after each exposure, collecting 10 to 30 Tbytes per night. Optimized to spot transient events, the cryogenic camera is expected to spot about a million supernovas during its 10-year survey, helping astronomers study dark energy. From its Chilean mountaintop, it also will watch for asteroids and other faint objects in the solar system. First light for the 8 m telescope is planned for 2019, with the survey to start in 2022.

LSST won't collect spectral data, but O'Connor is excited about a new generation of high-sensitivity superconducting detectors that should record the color of each photon arriving at the focal plane as well as its location and time of arrival. "A lot of opportunities open up" with that capability, says O'Connor, but so far the largest superconducting arrays contain only a few hundred pixels.

Cameras also can do more than take pictures. Computational photography and computational imaging are diverging fields, says Ramesh Raskar, head of the Camera Culture group at the MIT Media Lab (Cambridge, MA). Computational photography produces photographs, but computational imaging can extract much more information than we see in a photo.

Medical diagnostics are one example. Ophthalmologists have long examined the eye to diagnose eye disease, but looking into the eye also offers a noninvasive way to look for early warnings of other diseases. Observation of blood flow in the retina, for example, can reveal signs of diabetes or high blood pressure. Today, such observations require expensive equipment and a highly trained operator. But Raskar's group has shown that a smartphone equipped with a plastic eyepiece can measure refraction and detect cataracts in the eye. They also have shown that a special pair of glasses called eyeMITRA can image the retina. At SIGGRAPH, to take place Aug. 9–13, 2015, in Los Angeles, Tristan Swedish of the Media Lab will describe an optical system called "eyeSelfie," which helps users align their eyes properly for self-examination, a key step in developing simple, portable, and inexpensive instruments for use in developing countries. The resulting images could be sent to a cloud server for analysis.

It sounds a bit like magic, and Raskar says "we should use camera phones to do other magical things." He envisions using different types of optical data to "make the invisible visible," from looking around corners to seeing into the body without x-rays or reading a closed book. And he wants to make that technology broadly accessible at low cost.

We need such bold ideas to reap the benefits of new camera technology. We also need to ponder the social impact of the proliferation of cameras. Many came to fear Google Glass, a wearable computer with a built-in camera, as an invasion of privacy. The Electronic Privacy Information Center (Washington, DC) worried that Glass could become "always-on" surveillance. Google itself blocked the use of face-recognition software on the prototype version. Cameras should be tools for play and help, not objects of fear.

REFERENCES

1. RCA Electro-Optics Handbook, EOH-11, RCA Solid-State Division, Lancaster, PA (1975).

2. M. F. Tompsett et al., IEEE Trans. Electron Devices, 18, 11, 992–996 (Nov. 1971); doi:10.1109/t-ed.1971.17321.

3. W. L. Wolfe and G. J. Zissis, The Infrared Handbook, Office of Naval Research, Washington, DC (1978).

Jeff Hecht | Contributing Editor

Jeff Hecht is a regular contributing editor to Laser Focus World and has been covering the laser industry for 35 years. A prolific book author, Jeff's published works include “Understanding Fiber Optics,” “Understanding Lasers,” “The Laser Guidebook,” and “Beam Weapons: The Next Arms Race.” He also has written books on the histories of lasers and fiber optics, including “City of Light: The Story of Fiber Optics,” and “Beam: The Race to Make the Laser.” Find out more at jeffhecht.com.