Optoelectronic Applications: Image Fusion - Satellite sensors zero in on resource and disaster planning

Satellite and airborne remote sensing have become indispensable tools in the analysis and monitoring of the Earth and its many resources. These technologies—and the associated image-processing algorithms necessary to make sense of the multitudes of data they can acquire—are playing an increasingly critical role in our ability to manage both natural and manmade resources and disasters.

In recent years, advances in high-resolution satellite imaging capabilities have opened new doors to the environmental monitoring community. In particular, the development of new visualization and data-fusion software tools has led to the generation of images, computer simulations, and data sets that offer valuable insight into environmental processes occurring at cellular to global scales.

Satellite-based remote sensing has long been used to map and monitor urban sprawl and urban land use, and to support planning, development, and related resource-management studies. Satellite images with moderate to high resolution have facilitated scientific research activities at both landscape and regional scales. In addition, multispectral and hyperspectral sensors on orbiting platforms and instruments such as EO-1, LANDSAT, ASTER, MODIS, IKONOS, and QuickBird provide data for further analysis of environmental conditions, land cover, and the impact of urban growth and related transportation development. Other applications benefiting from government-sponsored and commercial efforts involving these technologies include disaster response, public health, agricultural biodiversity, conservation, and forestry.

One of the most widely used satellite-based remote-sensing systems is synthetic aperture radar (SAR). SAR systems are active sensors that offer high spatial resolution over wide areas, up to 500 km (310 miles) across, regardless of weather or other conditions, which makes them well suited to near-real-time operational applications such as disaster monitoring. In addition, SAR images can be acquired with great reliability and at little to no cost because the data come from satellites that are already in orbit and continuously monitoring the Earth’s surface.

Fusion advantages

Despite these advantages, however, SAR data does have limitations. In particular, while each SAR image can cover a wide area, the resolution is not optimal and distortions can creep in. Understanding and accounting for these imperfections can be critical in real-time operational and assessment situations such as post-seismic intervention and rescue, according to Fabio Dell’Acqua, a professor of electronics engineering at the University of Pavia (Italy) who works closely with Paolo Gamba, head of the university’s remote-sensing group.

“If you use only interferometric SAR data to determine the 3-D shape of buildings or other structures, you get a number of inherent geometric distortions that are not easy to correct,” he says.

This is where image fusion (also referred to as data fusion) comes in. Fusion is the process by which two or more streams of image data of different temporal and spatial resolution are combined to produce images of a higher quality than would be possible from a single discrete image. It offers a number of advantages for environmental monitoring and remote sensing; in the case of post-seismic assessment, for example, combining SAR and geographic information system (GIS) data of an area yields a much more accurate assessment of the damage to that area. Image fusion also allows for more complete imaging of an area intermittently covered by clouds and better analysis of movement or time-dependent change in targets and/or environmental conditions.
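The cloud-cover point can be illustrated with a minimal sketch. It assumes several co-registered acquisitions of the same scene and a per-pixel cloud mask for each one; both are assumptions made for illustration, and the function name `cloud_free_composite` is hypothetical. Each output pixel is the median of the values observed on cloud-free dates, which is one simple temporal-fusion rule among many.

```python
from statistics import median


def cloud_free_composite(images, cloud_masks):
    """Fuse several acquisitions into one image with fewer cloud gaps.

    images      : list of 2-D lists (rows of pixel values), all the same shape
                  and assumed to be co-registered
    cloud_masks : matching list of 2-D lists of booleans, True = cloudy pixel
    Returns a 2-D list; a pixel that is cloudy in every acquisition is None.
    """
    rows, cols = len(images[0]), len(images[0][0])
    composite = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            # Keep only the observations of this pixel that are cloud-free.
            clear = [img[r][c]
                     for img, mask in zip(images, cloud_masks)
                     if not mask[r][c]]
            out_row.append(median(clear) if clear else None)
        composite.append(out_row)
    return composite
```

A pixel hidden by cloud on one date is filled from the remaining dates, so the composite covers more of the scene than any single acquisition.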

In addition, while SAR imagery illuminates target elevations or directional wave spectra, optical imagery identifies targets by shape and color. Combining the targeting and identification capabilities of these different data types provides target recognition and environmental characterization with higher confidence and lower false-alarm rates than can be obtained from single-source imagery. In the spatial domain, objects that are identifiable on the basis of size and shape in panchromatic imagery are often unidentifiable by their geometric dimensions in lower-resolution multispectral or hyperspectral imagery. At the same time, spectral imagery provides the ability to identify targets or characterize the environment based on unique spectral signatures (unavailable in panchromatic imagery), but may not be able to resolve the shape or dimensions of small targets.
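One common way to combine the spatial detail of panchromatic imagery with the spectral content of multispectral imagery is pan-sharpening. The sketch below uses a Brovey-style ratio transform, chosen here only as a simple illustration and not as the method used by the groups quoted in this article. It assumes the multispectral bands have already been resampled to the panchromatic pixel grid; the function name `brovey_pansharpen` and the `eps` parameter are hypothetical.

```python
def brovey_pansharpen(ms_bands, pan, eps=1e-9):
    """Brovey-style pan-sharpening on plain nested lists.

    ms_bands : list of bands, each a 2-D list already resampled to the
               panchromatic grid (assumption)
    pan      : 2-D list of panchromatic intensities on the same grid
    eps      : small constant that avoids division by zero
    Returns a list of sharpened bands with the same shape as the input.
    """
    n_bands = len(ms_bands)
    rows, cols = len(pan), len(pan[0])
    sharpened = [[[0.0] * cols for _ in range(rows)] for _ in range(n_bands)]
    for r in range(rows):
        for c in range(cols):
            # Mean of the multispectral bands approximates pixel intensity.
            intensity = sum(band[r][c] for band in ms_bands) / n_bands
            # Rescale every band so the intensity follows the sharper pan image.
            gain = pan[r][c] / (intensity + eps)
            for b in range(n_bands):
                sharpened[b][r][c] = ms_bands[b][r][c] * gain
    return sharpened
```

Because every band at a pixel is multiplied by the same gain, the ratios between bands (the spectral signature) are preserved while the brightness pattern takes on the finer panchromatic detail.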

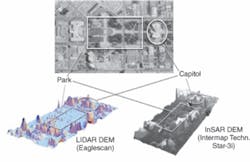

“When you talk about fusion, you are talking about combining data of a different nature from different sensors and intersecting that with (often) optical data,” Dell’Acqua says. “For example, if you use SAR data in conjunction with lidar data, the lidar data gives much more precise information but is more expensive. The interesting thing to do is acquire the SAR data on a large region and lidar data on a much smaller area that overlaps that region (see Fig. 1). By comparing the lidar data with the distorted SAR data you get information about the type and size of distortions and you can use this to correct the distortions on the rest of the data.”
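The calibration idea described in this quote can be sketched in a few lines. The example below is a deliberately simplified stand-in: it assumes the SAR height error can be approximated by a linear gain and offset, fits that model by least squares where lidar and SAR overlap, and applies it to SAR heights elsewhere. Real SAR distortions such as layover and foreshortening are geometric and generally need richer models, and the function names here are hypothetical.

```python
def fit_linear_correction(sar_overlap, lidar_overlap):
    """Least-squares fit of lidar ~ a * sar + b over the overlap area.

    sar_overlap, lidar_overlap : equal-length lists of heights measured at
    the same locations by the two sensors (assumed co-registered).
    Returns the gain a and offset b.
    """
    n = len(sar_overlap)
    mean_s = sum(sar_overlap) / n
    mean_l = sum(lidar_overlap) / n
    var_s = sum((s - mean_s) ** 2 for s in sar_overlap)
    if var_s == 0:
        raise ValueError("SAR samples must not all be identical")
    cov = sum((s - mean_s) * (l - mean_l)
              for s, l in zip(sar_overlap, lidar_overlap))
    a = cov / var_s
    b = mean_l - a * mean_s
    return a, b


def apply_correction(sar_heights, a, b):
    """Apply the fitted gain and offset to SAR heights outside the overlap."""
    return [a * h + b for h in sar_heights]
```

The small, expensive lidar survey is used only to estimate the correction; the wide-area SAR data then benefits from it without further lidar coverage.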

Resource and disaster management

The European Union (EU) has been particularly proactive in using cutting-edge remote-sensing and information technologies to enhance the ability to monitor the Earth for environmental and security purposes. The EU’s Global Monitoring for Environment and Security (GMES) initiative, for example, established in 2004, will utilize a combination of data from Earth Observation satellites and other air- and ground-based sources for a variety of remote-sensing projects. The image data collected from the various satellite sensors (including hyperspectral and polarimetric imagers) and aerial photography (including lidar) will be integrated with additional information from a GIS for analysis, modeling, and map production.

For example, engineers from the Electronics Laboratory at the University of Patras (Rio, Greece) are working with raw polarimetric multispectral data from ENVISAT (a polar-orbiting Earth observation satellite launched in March 2002 by the European Space Agency to provide measurements of the atmosphere, ocean, land, and ice) to detect environmental degradations, identify waste disposal regions, and enhance flood plain disaster mapping and modeling.

“Our group has developed special techniques that maximize the information of the final representation derived from the fusion techniques,” says Vassilis Anastassopoulos, professor in the Electronics Laboratory at the University of Patras. “Our fusion algorithms are very promising, especially for fusing color images and images from different sensors, such as SAR and infrared.”

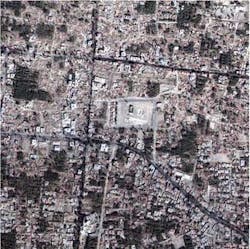

Through their affiliation with GMES and related initiatives such as Global Monitoring and Observation for Security and Stability (GMOSS), Dell’Acqua and his colleagues at the University of Pavia have been working with high-resolution satellite image data for environmental risk and disaster management applications, such as monitoring oil spills and assessing the impact of pre- and post-seismic events on urban areas (see Fig. 2). They are particularly interested in combining SAR data with lidar data to better assess road networks before and after a natural disaster or manmade event such as war.

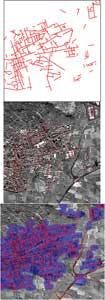

“We have been involved in practical simulations of crisis situations in which there was a simulation of a potential conflict between neighboring countries and real high-resolution data was used with fictitious geographic areas,” Dell’Acqua says. “In a situation like this you are trying to squeeze out of the images the best data you can to make a related humanitarian effort effective. So Gamba focused on extracting the road network. This is critical because in the case of war or natural disaster you might have elements of the road networks that are no longer usable and you wouldn’t want to send help down dangerous or impassable roads. You can do it more efficiently and effectively by taking a satellite image of the area and extracting the road network. We have been developing and perfecting algorithms to extract road network data from high-resolution satellite images, both optical and SAR images, and even fusing these data with GIS for additional information (see Fig. 3).”

The next step, he adds, is figuring out how to get all the image data gathered from the numerous satellites now orbiting the Earth to “synch up.” This is particularly important in trying to coordinate and compare pre- and post-event data that is gathered from different sources.

“We currently have a large number of very different satellites in orbit and even more satellites about to be sent into orbit,” Dell’Acqua says. “But they are all inhomogeneous. That is, the data between the pre- and post-event is inhomogeneous. If you want fast response to an event, you have to be happy with the first satellite that comes by, which might not be the same satellite that obtained the pre-event data. So the next step is to put into orbit constellations of satellites (a large set of identical satellites with orbits synchronized in such a way that in an area of interest you have very short revisit times, preferably 12 to 24 hours). If you want a rapid intervention, having to wait for the image data is useless because you need critical information such as road network accessibility in a very short time.”

Kathy Kincade | Contributing Editor