Optical mapping 3D display takes the eye fatigue out of virtual reality

Virtual-reality (VR) headsets display a computer-simulated world, while augmented-reality (AR) glasses overlay computer-generated elements on the real world. Although AR and VR devices are starting to reach the market, they remain largely a novelty because eye fatigue makes them uncomfortable to use for extended periods. A new type of 3D display could solve this long-standing problem by greatly improving the viewing comfort of these wearable devices.

Measuring only 1 × 2 in., the new optical mapping 3D display module, developed by Liang Gao and Wei Cui of the University of Illinois at Urbana-Champaign, increases viewing comfort by producing depth cues that are perceived in much the same way as depth in the real world.1

Overcoming eye fatigue

Today's VR headsets and AR glasses present two 2D images, one to each eye, in a way that cues the viewer's brain to combine them into the impression of a 3D scene. This type of stereoscopic display causes what is known as a vergence-accommodation conflict: the eyes converge on the apparent depth of a virtual object, but they must keep focusing (accommodating) at the fixed distance of the display image. Over time, this mismatch makes it harder for the viewer to fuse the images and causes discomfort and eye fatigue.
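To put rough numbers on the vergence-accommodation conflict, the short Python sketch below expresses both distances in diopters (1/meters) and takes the difference. The 2 m screen-image distance, the 0.5 m object distance, and the 63 mm interpupillary distance are illustrative assumptions, not values from the study.

```python
import math


def diopters(distance_m: float) -> float:
    """Optical power (in diopters) that corresponds to a distance in meters."""
    return 1.0 / distance_m


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m. ipd_m is an assumed interpupillary distance."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def va_conflict_diopters(object_m: float, screen_image_m: float) -> float:
    """Mismatch between the vergence distance (the depicted 3D object) and the
    accommodation distance (the fixed virtual image of the screen)."""
    return abs(diopters(object_m) - diopters(screen_image_m))


# Hypothetical headset: optics place the screen image at 2 m,
# while the stereo pair depicts an object at 0.5 m.
conflict = va_conflict_diopters(object_m=0.5, screen_image_m=2.0)
print(f"vergence-accommodation conflict: {conflict:.2f} D")  # 1.50 D
print(f"eyes converge at {vergence_angle_deg(0.5):.1f} deg but focus at 2 m")
```

In a display that places images at their true depths, the focus distance tracks the depicted depth, so this mismatch shrinks toward zero.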

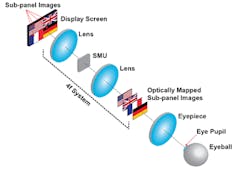

The new display instead presents true 3D images using an approach called optical mapping. A digital display is divided into subpanels, each of which creates a 2D picture. The subpanel images are then shifted to different depths while their centers are all aligned on a common viewing axis, so a user looking through the eyepiece sees each image at a different depth. The researchers also created an algorithm that blends the images so that depth appears continuous, producing a unified 3D image.
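The article does not describe the researchers' blending algorithm. As a stand-in, the sketch below uses linear depth blending, a widely used technique in multifocal displays: each scene point's intensity is split between the two depth planes that bracket it, in proportion to its dioptric distance from each. The function name and the plane depths in the example are hypothetical.

```python
from typing import List


def blend_weights(pixel_d: float, planes_d: List[float]) -> List[float]:
    """Linear depth blending (a stand-in, not the authors' algorithm).

    pixel_d: depth of a scene point in diopters.
    planes_d: depths of the subpanel image planes in diopters, sorted ascending.
    Returns one intensity weight per plane; the weights sum to 1.
    """
    if any(a >= b for a, b in zip(planes_d, planes_d[1:])):
        raise ValueError("planes_d must be strictly ascending")
    weights = [0.0] * len(planes_d)
    # Points outside the covered depth range go entirely to the nearest plane.
    if pixel_d <= planes_d[0]:
        weights[0] = 1.0
        return weights
    if pixel_d >= planes_d[-1]:
        weights[-1] = 1.0
        return weights
    # Otherwise split the intensity between the two bracketing planes.
    for i in range(len(planes_d) - 1):
        lo, hi = planes_d[i], planes_d[i + 1]
        if lo <= pixel_d <= hi:
            t = (pixel_d - lo) / (hi - lo)
            weights[i] = 1.0 - t
            weights[i + 1] = t
            break
    return weights


# Hypothetical planes at optical infinity, 1 m, 0.5 m and 0.33 m.
planes = [0.0, 1.0, 2.0, 3.0]
print(blend_weights(1.25, planes))  # point at 0.8 m -> [0.0, 0.75, 0.25, 0.0]
```

Because the eye integrates light from both planes, a point rendered this way is perceived at an intermediate depth, which is what lets a few discrete planes approximate a continuous volume.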

The key component of the new system is a spatial-multiplexing unit that shifts the subpanel images axially to their designated depths while shifting their centers laterally onto the viewing axis. In the current setup, the spatial-multiplexing unit consists of spatial light modulators (SLMs) that modify the light according to an algorithm developed by the researchers.
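One common way for a phase-only SLM to produce both shifts is to display the sum of a lens-like quadratic phase (which changes the focus, and hence the axial position of the image) and a grating-like linear phase (which tilts the beam, and hence moves the image laterally). The sketch below builds such a pattern for one subpanel region. It is an illustrative assumption, not the researchers' published algorithm, and the function name, pixel count, pitch, wavelength, focal length, and tilt are all hypothetical.

```python
import numpy as np


def subpanel_phase(n_pixels: int, pitch_m: float, wavelength_m: float,
                   focal_length_m: float, tilt_x_rad: float,
                   tilt_y_rad: float) -> np.ndarray:
    """Phase-only pattern for one square SLM region, wrapped to [0, 2*pi].

    Illustrative assumption, not the researchers' published algorithm:
    - the quadratic (thin-lens) term with focal length focal_length_m changes
      the focus of the light, moving the subpanel image axially;
    - the linear (grating) term deflects the light by small angles
      tilt_x_rad / tilt_y_rad, moving the image center laterally.
    """
    coords = (np.arange(n_pixels) - n_pixels // 2) * pitch_m
    x, y = np.meshgrid(coords, coords)
    k = 2.0 * np.pi / wavelength_m
    lens = -k * (x**2 + y**2) / (2.0 * focal_length_m)
    grating = k * (x * np.sin(tilt_x_rad) + y * np.sin(tilt_y_rad))
    return np.mod(lens + grating, 2.0 * np.pi)


# Hypothetical values: 512 x 512 region, 8 um pixels, 532 nm light,
# 0.5 m focal length, 0.5 mrad horizontal tilt.
phase = subpanel_phase(512, 8e-6, 532e-9, 0.5, 5e-4, 0.0)
print(phase.shape, float(phase.min()), float(phase.max()))
# Lateral shift of the focused image is roughly f * tilt = 0.5 m * 5e-4 = 0.25 mm.
```

With these example values the phase changes by well under π between neighboring pixels, so the wrapped pattern is adequately sampled; a shorter focal length or larger tilt would eventually alias on the SLM's finite pixel pitch.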

Although the approach would work with any modern display technology, the researchers used an organic light-emitting diode (OLED) display, whose extremely high resolution ensured that each subpanel contained enough pixels to create a clear image.
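The resolution requirement follows from simple arithmetic: splitting one panel into N subpanels leaves each depth plane with roughly 1/N of the panel's pixels. The sketch assumes a hypothetical 1920 × 1080 panel divided into a uniform grid; the article gives neither the actual panel resolution nor the subpanel layout.

```python
def subpanel_budget(width_px: int, height_px: int, cols: int, rows: int) -> dict:
    """Pixels left for each depth plane when a panel is split into a
    uniform cols x rows grid of subpanels (a simplifying assumption)."""
    sub_w, sub_h = width_px // cols, height_px // rows
    return {
        "depth_planes": cols * rows,
        "subpanel_px": (sub_w, sub_h),
        "pixels_per_plane": sub_w * sub_h,
    }


# Hypothetical 1920 x 1080 panel; the article does not give the real resolution.
for cols, rows in [(1, 1), (2, 1), (2, 2), (3, 2)]:
    b = subpanel_budget(1920, 1080, cols, rows)
    print(f"{b['depth_planes']} planes: {b['subpanel_px'][0]} x {b['subpanel_px'][1]} px each")
```

Every additional depth plane comes directly out of the per-plane pixel budget, which is why a very high-resolution source display matters for this architecture.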

The researchers tested the device by displaying a complex scene of parked cars and placing a camera in front of the eyepiece to record what the human eye would see. The camera could focus on cars that appeared far away while the foreground remained out of focus; likewise, when the camera was focused on the closer cars, the background appeared blurry. This test confirmed that the new display produces focal cues that create depth perception much as humans perceive depth in a real scene. The demonstration was performed in black and white, but the researchers say the technique could also produce color images, albeit with reduced lateral resolution.
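How strongly the out-of-focus cars blur can be estimated with a small-angle geometric-optics approximation: the angular blur is roughly the aperture diameter times the defocus in diopters. The 4 mm aperture and the 10 m and 1 m car distances below are hypothetical values chosen for illustration, and the model ignores diffraction and aberrations.

```python
import math


def defocus_blur_mrad(aperture_m: float, focus_m: float, object_m: float) -> float:
    """Approximate angular blur (milliradians) of an object at object_m when
    the eye or camera is focused at focus_m. Geometric small-angle model:
    blur angle ~ aperture diameter x defocus in diopters."""
    defocus_d = abs(1.0 / object_m - 1.0 / focus_m)
    return aperture_m * defocus_d * 1e3


# Hypothetical 4 mm aperture, focused on a car at 10 m; foreground car at 1 m.
blur = defocus_blur_mrad(0.004, focus_m=10.0, object_m=1.0)
print(f"foreground blur: {blur:.1f} mrad ({math.degrees(blur / 1e3) * 60:.0f} arcmin)")
```

That blur is more than ten times the eye's roughly 1 arcmin resolution limit, so the foreground is clearly out of focus. On a conventional stereoscopic display, by contrast, every car sits at the same optical distance, so refocusing the camera would blur or sharpen them all together.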

The researchers are now working to further reduce the system’s size, weight and power consumption. "In the future, we want to replace the spatial light modulators with another optical component such as a volume holography grating," says Gao. "In addition to being smaller, these gratings don't actively consume power, which would make our device even more compact and increase its suitability for VR headsets or AR glasses."

Although the researchers don't currently have any commercial partners, they are in discussions with companies to see if the new display could be integrated into future AR and VR products.

REFERENCES:

1. Wei Cui and Liang Gao, Optics Letters (2017); https://doi.org/10.1364/OL.42.002475

John Wallace | Senior Technical Editor (1998-2022)

John Wallace was with Laser Focus World for nearly 25 years, retiring in late June 2022. He obtained a bachelor's degree in mechanical engineering and physics at Rutgers University and a master's in optical engineering at the University of Rochester. Before becoming an editor, John worked as an engineer at RCA, Exxon, Eastman Kodak, and GCA Corporation.