Full-color 3D layered holographic images via iPhone?

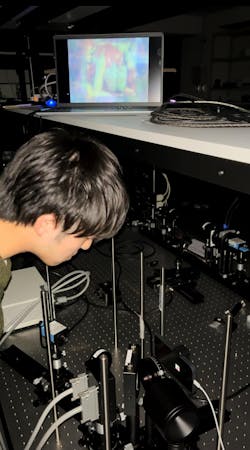

A group of researchers led by Ryoichi Horisaki and Otoya Shigematsu at The University of Tokyo’s Information Photonics Laboratory is reproducing full-color 3D images with two holographic layers, thanks to computer-generated holography (CGH), an iPhone, and a spatial light modulator.

Their method eliminates the need for laser sources, which tend to make systems complex, can potentially damage vision, and are expensive. It also has the potential to enhance the performance of near-eye displays, like those used in high-end virtual reality headsets.

“In our laboratory, we focus on minimizing optical components and making optical systems more practical,” says Shigematsu, a master’s student. “Our research into 3D layered image reproduction using computer-generated holography was also initiated to address the drawbacks of conventional methods and to enhance the feasibility of holographic displays.”

A desired optical field is generally “reproducible by modulating coherent light, such as laser light, with holograms displayed on spatial light modulators,” Shigematsu explains. “We compute holograms by modeling incoherent light emitted from the mobile phone screen and display the holograms on an iPhone and a spatial light modulator, respectively.”

Incoherent CGH option

In earlier work, the researchers discovered that spatiotemporally incoherent light emitted from a white chip-on-board light-emitting diode (LED) could be harnessed for CGH. The downside to this setup is that it requires two extremely expensive spatial light modulators (devices to control wavefronts of light).

In their most recent work, the group came up with a less expensive and more practical incoherent CGH option that “aligns with our laboratory’s focus on computational imaging, a research field dedicated to innovating optical imaging systems by integrating optics with information science,” says Horisaki, an associate professor.

How does their new approach work? Light from the phone’s screen passes through a spatial light modulator to create multiple layers of a full-color 3D image. Careful modeling of how the incoherent light propagates from the screen provides the basis for an algorithm that coordinates the light emitted by the screen with a single spatial light modulator.
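To make the idea of modeling incoherent light concrete, here is a minimal numerical sketch in Python. It is not the group’s algorithm. It assumes a simplified one-dimensional geometry, paraxial (Fresnel) propagation, a phase-only modulator, and screen pixels that act as mutually incoherent point sources. The function names, distances, and sampling grids are all hypothetical, and constant scale factors are ignored. The key point it illustrates is that for incoherent light, each screen pixel is propagated through the modulator on its own, and the resulting intensities (not the fields) are added.

```python
import numpy as np

def fresnel_kernel(x_out, x_in, distance, wavelength):
    """Paraxial (Fresnel) propagation matrix from points x_in to points x_out.

    Constant phase and sampling-step factors are omitted (illustrative only).
    """
    k = 2 * np.pi / wavelength
    dx = x_out[:, None] - x_in[None, :]
    return np.exp(1j * k * dx**2 / (2 * distance)) / np.sqrt(distance * wavelength)

def incoherent_intensity(screen, slm_phase, x_screen, x_slm, x_out,
                         d_screen_to_slm, d_slm_to_out, wavelength):
    """Intensity at the output plane for mutually incoherent screen pixels.

    screen    : non-negative brightness of each screen pixel (assumed model)
    slm_phase : phase delay, in radians, applied by each modulator pixel
    """
    to_slm = fresnel_kernel(x_slm, x_screen, d_screen_to_slm, wavelength)
    to_out = fresnel_kernel(x_out, x_slm, d_slm_to_out, wavelength)
    # Field at the output plane produced by each screen pixel separately;
    # one column per screen pixel.
    fields = to_out @ (np.exp(1j * slm_phase)[:, None] * to_slm)
    # Incoherent light: sum intensities, weighted by pixel brightness.
    return (np.abs(fields) ** 2) @ screen

# Hypothetical example values: green light, millimeter-scale apertures.
rng = np.random.default_rng(0)
x_screen = np.linspace(-1e-3, 1e-3, 16)
x_slm = np.linspace(-1e-3, 1e-3, 64)
x_out = np.linspace(-1e-3, 1e-3, 32)
intensity = incoherent_intensity(
    screen=rng.random(16),
    slm_phase=rng.uniform(0, 2 * np.pi, 64),
    x_screen=x_screen, x_slm=x_slm, x_out=x_out,
    d_screen_to_slm=0.05, d_slm_to_out=0.05, wavelength=530e-9,
)
```

In a sketch like this, a full-color image would be handled by repeating the calculation at red, green, and blue wavelengths, and a hologram-design algorithm would search for screen and modulator patterns whose predicted intensity matches the target layers.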

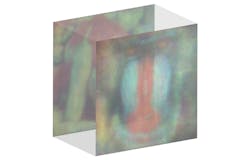

To put their method to the test, the group created a two-layer optical reproduction of a full-color 3D image by displaying one holographic layer on the screen of an iPhone 14 Pro and a second layer on a spatial light modulator. The resulting image measured a few millimeters on each side.

In numerical simulations, the group had already demonstrated reconstruction of a two-layered holographic image consisting of a pepper and a mandrill. “But we were worried about whether the reproduction of the two layers would work well in actual optical experiments, so we were delighted when the mandrill made eye contact with us,” Shigematsu says.

What’s next? “We hope to continue the research and make holographic displays more accessible in applications or people in the future,” Shigematsu adds. “And since the current reproduction image size is as small as a few millimeters, we’d like to proceed first in the direction of improving reproduction performance.”

FURTHER READING

O. Shigematsu, M. Naruse, and R. Horisaki, Opt. Lett., 49, 1876–1879 (2024); https://doi.org/10.1364/ol.516005.

About the Author

Sally Cole Johnson

Editor in Chief

Sally Cole Johnson, Laser Focus World’s editor in chief, is a science and technology journalist who specializes in physics and semiconductors.