Deep learning methods enable image enhancement advances

Digital image processing systems are finding extensive use across many domains, thanks to computer vision technology. Numerous fields rely on it, including healthcare, manufacturing, surveillance, intelligent transportation, monitoring through remote sensing, defense, and many more.

In low-light conditions, such as indoors, at night, or on overcast days, the surface illumination of an object may be weak, leading to significant degradation of image quality due to noise and color irregularities. Subsequent operations such as transmission, conversion, and storage reduce the quality of these low-light images further. A scene is considered low light when the amount of light falling on it is significantly lower than normal.

In practice, there is no single universally accepted metric, because it is difficult to identify precise theoretical values that characterize a low-light situation. Underwater optical imaging also commonly uses imaging systems such as stereo or panoramic polarization imaging, or spectral imaging.

However, aside from optical cameras, every other method brings its own set of drawbacks, such as a narrow depth range, complex operation that requires expertise, or a limited field of view.

Light traveling through water experiences absorption and scattering due to the inherent optical properties (IOPs) of water. Forward scattering occurs when light reflected off the target objects is deflected at small angles on its way to the receiver. Due to forward scattering, a point light source diffuses into a blur circle, which results in hazy images.

Backscattering creates a fog-like veil and lowers contrast in underwater images. Light is attenuated at a wavelength-dependent rate as the water depth increases, so colors fade unevenly. Although artificial illumination can increase the visual range, it has drawbacks such as increased scattering from particles suspended in the water and images with bright areas surrounded by dark patches. The inherent noise of the underwater imaging system is another important factor that affects underwater image quality.
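The combined effect of wavelength-dependent attenuation and backscatter described above can be sketched with a simplified image formation model that is widely used in the underwater imaging literature: observed = scene × t + veiling light × (1 − t), with transmission t = exp(−β·d). This is a minimal illustration rather than a model taken from this article. The function name, the attenuation coefficients `beta`, and the `veiling_light` color are hypothetical values chosen only to show red fading faster than green and blue, and the sketch ignores forward-scatter blur and sensor noise.

```python
import numpy as np

def simulate_underwater(scene, distance_m,
                        beta=(0.40, 0.10, 0.05),
                        veiling_light=(0.05, 0.35, 0.45)):
    """Apply a simplified underwater image formation model to an RGB scene.

    scene         : float array in [0, 1], shape (H, W, 3), channels R, G, B.
    distance_m    : camera-to-object path length in metres, scalar or (H, W).
    beta          : ASSUMED per-channel attenuation coefficients (1/m).
    veiling_light : ASSUMED colour of the backscattered background light.
    """
    scene = np.asarray(scene, dtype=np.float64)
    # Add a trailing axis so the distance broadcasts over the colour channels.
    d = np.asarray(distance_m, dtype=np.float64)[..., None]
    beta = np.asarray(beta, dtype=np.float64)
    veil = np.asarray(veiling_light, dtype=np.float64)

    transmission = np.exp(-beta * d)            # fraction of light that survives
    direct = scene * transmission               # attenuated signal from the object
    backscatter = veil * (1.0 - transmission)   # fog-like veil added by the water
    return np.clip(direct + backscatter, 0.0, 1.0)

if __name__ == "__main__":
    white_target = np.ones((2, 2, 3))
    for distance in (0.0, 2.0, 5.0):
        r, g, b = simulate_underwater(white_target, distance)[0, 0]
        print(f"{distance:4.1f} m -> R={r:.3f} G={g:.3f} B={b:.3f}")
```

With these assumed coefficients, a white target turns progressively blue-green as the path length grows, which is the color cast that underwater enhancement methods try to undo.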

Furthermore, many imaging applications, such as recognition, medical imaging, and vehicle detection, rely on high-quality photographs with sufficient contrast and features. The image acquisition procedure typically results in medical images with low contrast, blurriness, and intensity inhomogeneity, whether taken with the same or different sensors. Alternatively, there is considerable scope for improvement in software algorithms, and digital image processing is a focus of research aimed at enhancing low-light image and video quality.

Research into image enhancement methods that boost imaging device performance is highly relevant and useful. Image enhancement primarily aims to improve overall image quality, local contrast, and visual effect, and to transform the image into a form better suited to human perception or computer processing, all while avoiding noise amplification and achieving good real-time performance. This improvement can make images easier for computer vision equipment to study and process, make visual systems more reliable and resilient, and make images more compatible with people's subjective visual perception. The outcome of image enhancement is displayed in Figure 1.

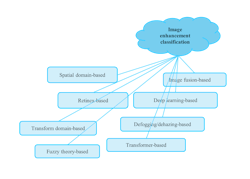

Classification of image enhancement

Researchers have proposed many image enhancement techniques over the past several decades. Examples include spatial- and transform-domain, Retinex-, image fusion-, deep learning-, fuzzy theory-, image dehazing or defogging-, and transformer-based methods (see Fig. 2).

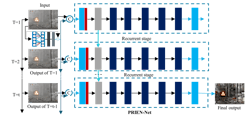

These methods use a diverse set of algorithms and are further categorized into subclasses based on their fundamentally different features. Figure 3 shows the image enhancement procedure using a deep learning method.
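As one concrete instance of the spatial-domain family, the sketch below implements global histogram equalization for an 8-bit grayscale image using NumPy. It is a minimal illustration of a classic technique, not the deep learning procedure discussed in the article, and the function name `equalize_histogram` is chosen here only for the example.

```python
import numpy as np

def equalize_histogram(gray):
    """Global histogram equalization for an 8-bit grayscale image."""
    gray = np.asarray(gray, dtype=np.uint8)
    hist = np.bincount(gray.ravel(), minlength=256)  # pixel count per gray level
    cdf = hist.cumsum()                              # cumulative distribution
    cdf_min = cdf[cdf > 0][0]                        # CDF at the darkest level present
    total = gray.size
    if total == cdf_min:
        # Constant image: there is no contrast to stretch.
        return gray.copy()
    # Map each gray level so the output CDF is approximately linear over 0..255.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]

if __name__ == "__main__":
    # Low-contrast test image whose values only span 100..119.
    low_contrast = np.arange(100, 120, dtype=np.uint8).repeat(5).reshape(10, 10)
    enhanced = equalize_histogram(low_contrast)
    print(low_contrast.min(), low_contrast.max(), "->", enhanced.min(), enhanced.max())
```

Global equalization treats every region of the image the same way, which is one reason the literature also explores local and learned alternatives.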

Benchmark datasets

The literature covers many image enhancement datasets. The SYD synthetic dataset [1] serves as a low-light simulation dataset with 22,656 images capturing a variety of scenes and lighting conditions. The MBARI dataset contains 666 fish images [2] to train algorithms to enhance underwater images.

The RUIE dataset is composed of three distinct subsets. These subsets address image clarity, color casts, and high-level detection and classification, respectively. The RUIE dataset includes approximately 4000 images of sea urchins and scallops. Li et al. used 950 real underwater images to construct the large-scale UIEB dataset [1]. These underwater images were probably captured under a mix of natural and artificial light.

The UIEB provides a framework for evaluating several underwater image enhancement methods. The AFSC dataset is gathered by an underwater remotely operated vehicle (ROV) equipped with an RGB video camera. A large number of videos from several ROV missions are included in this dataset. Roughly 571 images make up the AFSC dataset [2]. Fish and other related creatures are represented in these images. Each image has a resolution of 2112 × 2816 pixels. The NWFSC dataset is composed of images captured by an ROV looking down at the ocean floor.

Image enhancement applications

A human fingerprint is an example of a biometric object that holds an intrinsic feature of human identification. It is a pattern of ridges separated by less visible valleys. According to Khan et al., there are five main forms of fingerprints: arch, left loop, right loop, whorl, and tented arch [3]. These varieties are distinguished by the number and position of singular points. Image enhancement algorithms help improve the quality of low-quality fingerprint images.

Face recognition algorithms struggle in challenging settings such as low light or great distances. As a result of atmospheric turbulence, long-distance imaging often experiences unwanted effects such as blurring, distortion, and variability in intensity. Low-light circumstances cause images to be fuzzy and noisy. The application of face recognition algorithms to long-distance images affected by atmospheric turbulence has been examined previously. Researchers used several algorithms to enhance the quality of images captured by face recognition technologies over a range of turbulence strengths, distances, and optical factors.

Medical image enhancement is an area in which there is always room for technological improvement. Poor diagnostic image quality in some complicated imaging procedures gives erroneous information, making patient diagnosis more challenging. The goal of image enhancement techniques is to enhance the visual appearance of an image without changing any of the original information. Several methods, including blur removal and denoising, accomplish this work.

Low-light image enhancement can help with images captured in low-light conditions, which are prone to limited visibility. This manifests as diminished contrast, pale color, and fuzzy scene elements due to inadequate incident radiance received by scene objects. Object identification, intelligent cars, satellite imaging, and almost all computer vision and computational imaging applications depend on high-quality images. Low-light image enhancement makes images captured in low-light conditions more readable or comprehensible.
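A very simple baseline for brightening an underexposed image is gamma correction, sketched below with NumPy. This is an illustrative baseline only and not one of the learned methods the article surveys. The function name `gamma_brighten` and the default `gamma=0.5` are assumptions made for the example, and because the curve stretches dark values it also amplifies any noise present in them.

```python
import numpy as np

def gamma_brighten(image, gamma=0.5):
    """Brighten an image with a power-law (gamma) curve.

    image : float array with values in [0, 1]; any shape (gray or RGB).
    gamma : exponent; values below 1 lift dark tones, 1 leaves the image unchanged.
    """
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    image = np.clip(np.asarray(image, dtype=np.float64), 0.0, 1.0)
    # Pure black (0) and pure white (1) are fixed points of the curve,
    # so only the tones in between are redistributed.
    return image ** gamma

if __name__ == "__main__":
    dark = np.full((4, 4), 0.04)   # a uniformly dim patch
    print(gamma_brighten(dark, gamma=0.5)[0, 0])
```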

Satellite image enhancement applies to satellite remote-sensing data, which has seen widespread use across a wide range of scientific disciplines and practical applications, including forestry, agriculture, geology, education, military, biodiversity conservation, and regional planning. In dry areas with little vegetation and many rock outcrops, surface geology can be viewed using a combination of multispectral data from satellites such as Landsat TM and high-resolution imagery from satellites like SPOT, which provides panchromatic imagery. The main objective of satellite image enhancement is to improve the quality and visual appearance of images or to provide a transformed representation that is better suited to later processing.

Underwater image enhancement may enable discoveries in deep-sea ecosystems by shedding light on unexplored potential for renewable energy, food, and medicine. Underwater image processing has seen a meteoric rise in academic interest in the past several decades. Image enhancement plays a crucial role in improving the visual appearance of underwater images, and it increases their information richness by highlighting the information they contain.

Image enhancements ahead

Because state-of-the-art algorithms have their limitations, it is important when enhancing images to maintain a good balance among aspects such as image color, visual effect, and information entropy while focusing on making contrast more visible.
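Two of the aspects mentioned above can be measured directly. The sketch below computes the information (Shannon) entropy of an 8-bit grayscale image from its histogram, together with RMS contrast as a simple contrast measure. It is a minimal illustration with NumPy. The function names are chosen for this example, and RMS contrast is one common definition of contrast, not a metric prescribed by the article.

```python
import numpy as np

def information_entropy(gray):
    """Shannon entropy, in bits per pixel, of an 8-bit grayscale image."""
    gray = np.asarray(gray, dtype=np.uint8)
    hist = np.bincount(gray.ravel(), minlength=256)
    p = hist[hist > 0] / gray.size        # probabilities of the levels that occur
    return float(-(p * np.log2(p)).sum())

def rms_contrast(gray):
    """Standard deviation of pixel intensities after scaling to [0, 1]."""
    return float((np.asarray(gray, dtype=np.float64) / 255.0).std())

if __name__ == "__main__":
    # Half black, half white: one bit of entropy and maximal RMS contrast.
    checker = np.array([[0, 255], [255, 0]], dtype=np.uint8)
    print(information_entropy(checker), rms_contrast(checker))
```

Comparing such values before and after enhancement gives a rough check on whether contrast was gained without discarding image information, although no single number captures perceived quality.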

Image enhancement research has made tremendous strides in recent years thanks to rapid advances in algorithms. However, all current cutting-edge algorithms suffer from data loss, color distortion, or high computing costs. Recent image enhancement methods cannot guarantee that the vision system will function effectively in underwater, medical, and low-light settings.

Much room remains for advancement in the field of image enhancement. Potential paths forward include the development of robust and adaptive algorithms, few-shot learning networks, unsupervised learning, the establishment of standard quality evaluation metrics, video-based enhancement methods, more specialized evaluation parameters, and qualitative functions, all of which are essential to facilitate image enhancement research.

REFERENCES

1. C. Li et al., IEEE T. Image Process., 29, 4376–4389 (2020); doi:10.1109/tip.2019.2955241.

2. M. Yang et al., IEEE Access, 7, 123638–123657 (2019); doi:10.1109/access.2019.2932611.

3. T. M. Khan et al., IEEE T. Image Process., 26, 2116–2126 (2017); doi:10.1109/tip.2017.2671781.

About the Author

Bhawna Goyal

Assistant Professor, UCRD and ECE departments at Chandigarh University

Dr. Bhawna Goyal is an assistant professor in the UCRD and ECE departments at Chandigarh University (Punjab, India), and in the Faculty of Engineering at Sohar University (Sohar, Oman).

Ayush Dogra

Assistant Professor - Senior Grade, Chitkara University Institute of Engineering and Technology

Dr. Ayush Dogra is an assistant professor (research) - senior grade at Chitkara University Institute of Engineering and Technology (Chitkara University; Punjab, India).

Gurinder Bawa

Gurinder Bawa is a senior controls ED&D engineer at Stellantis (Auburn Hills, MI).

Aman Dahiya

Aman Dahiya is in the Department of Electronics and Communication Engineering, Maharaja Surajmal Institute of Technology (New Delhi, India).

Manisha Mittal

Manisha Mittal is in the Department of Electronics and Communication Engineering, Guru Tegh Bahadur Institute of Technology (GGSIPU; New Delhi, India).