4D point cloud data puts pedal to metal in automotive innovation

The automotive industry faces a new challenge. It’s no longer about proving that autonomous driving works but about ensuring it’s safe, scalable, and reliable on all roads and in all weather conditions. Vehicles must now handle increasingly complex scenarios: dense urban intersections, high-speed highways, and unpredictable environments shaped by both human drivers and automated systems. In this reality, the sophistication of a vehicle’s perception system defines its safety and performance.

At the heart of this evolution is four-dimensional (4D) imaging radar, powered by high-resolution point cloud data. But what exactly is 4D point cloud data, and why is it becoming indispensable for next-generation mobility?

4D imaging radar adds elevation and 3D spatial data

Conventional radar has long provided reliable distance and velocity measurements. 4D imaging radar adds vertical resolution (elevation), so each detection carries four dimensions: range, azimuth, elevation, and radial velocity measured through the Doppler effect. It also generates a significantly larger set of three-dimensional (3D) spatial data points per frame while capturing object velocity over time. With this added dimension, vehicles gain a clearer picture of how surrounding objects are moving in fully 3D space.
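To make the four dimensions concrete, the sketch below converts one detection from the radar’s native measurements (range, azimuth, elevation, and Doppler velocity) into a 3D point plus velocity. It is a minimal illustration under assumed conventions, not any vendor’s API: the `RadarDetection` type, the axis frame (x forward, y left, z up), and the sign rule (negative Doppler for an approaching target) are hypothetical choices made for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarDetection:
    """One hypothetical 4D radar detection in sensor coordinates."""
    range_m: float        # distance to the reflector
    azimuth_rad: float    # horizontal angle, positive to the left of boresight
    elevation_rad: float  # vertical angle, positive above boresight
    doppler_mps: float    # radial velocity, negative when approaching (assumed convention)


def to_cartesian(det: RadarDetection) -> tuple[float, float, float, float]:
    """Convert a detection to (x, y, z, v_r) with x forward, y left, z up."""
    horizontal = det.range_m * math.cos(det.elevation_rad)  # projection onto the ground plane
    x = horizontal * math.cos(det.azimuth_rad)
    y = horizontal * math.sin(det.azimuth_rad)
    z = det.range_m * math.sin(det.elevation_rad)
    return x, y, z, det.doppler_mps


# Two reflectors at the same range and azimuth but different heights.
road_level = RadarDetection(50.0, 0.0, 0.0, -10.0)
overhead = RadarDetection(50.0, 0.0, math.radians(5.0), 0.0)
print(to_cartesian(road_level))  # (50.0, 0.0, 0.0, -10.0)
print(to_cartesian(overhead))
```

The two detections share range and azimuth; only the elevation term separates them, which is exactly the information a radar without elevation lacks.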

Conventional radar struggles to separate nearby objects, particularly without elevation information. 4D imaging radar provides a much more complete picture of the driving environment, not only distinguishing between objects at different heights but also classifying what those objects are. The same detailed spatial sensing is also applied inside the vehicle through in-cabin radar, which monitors occupants and their vital signs and supports safety features such as child presence detection.

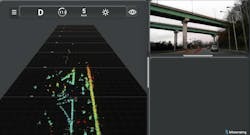

By capturing a high volume of spatial data points with elevation and motion (see Fig. 1), 4D imaging radar can identify whether an object is a vehicle, pedestrian, building, traffic light pole, or other roadside structure, and determine whether it’s a potential obstacle. This includes recognizing whether the vehicle can safely pass under structures like road signs or pedestrian overpasses, or if it must stop. With enhanced situational awareness, vehicles can better navigate complex real-world scenarios and make better-informed and safer decisions.
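In its simplest form, the pass-under decision reduces to a height check on the reflections ahead. The sketch assumes a flat road, a level sensor, and detections already gated to the vehicle’s lane; `SENSOR_HEIGHT_M`, `VEHICLE_HEIGHT_M`, `SAFETY_MARGIN_M`, and the helper functions are hypothetical values and names chosen for illustration, not parameters of any production system.

```python
import math

# Hypothetical parameters, chosen for illustration only.
SENSOR_HEIGHT_M = 0.5   # radar mounting height above the road
VEHICLE_HEIGHT_M = 1.8  # tallest point of the ego vehicle
SAFETY_MARGIN_M = 0.3   # extra headroom required before declaring the path clear


def reflector_height(range_m: float, elevation_rad: float) -> float:
    """Height of a reflection above the road, assuming a flat road and level sensor."""
    return SENSOR_HEIGHT_M + range_m * math.sin(elevation_rad)


def can_pass_under(detections: list[tuple[float, float]]) -> bool:
    """Return True if every in-lane reflection is high enough to drive under.

    `detections` holds (range_m, elevation_rad) pairs already gated to the
    ego lane; how that gating is done is outside this sketch. An empty list
    means nothing is in the path.
    """
    required = VEHICLE_HEIGHT_M + SAFETY_MARGIN_M
    return all(reflector_height(r, el) >= required for r, el in detections)


# A sign gantry about 5.5 m up versus a low barrier, both seen from 40 m away.
gantry = [(40.0, math.asin((5.5 - SENSOR_HEIGHT_M) / 40.0))]
barrier = [(40.0, math.asin((1.0 - SENSOR_HEIGHT_M) / 40.0))]
print(can_pass_under(gantry))   # True
print(can_pass_under(barrier))  # False
```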

More points allow denser object mapping, helping vehicles build a clearer view of their surroundings. Many 4D imaging radar solutions today generate about 2,000 points per frame, and bitsensing’s systems also produce more than 2,000 points per frame to enable reliable detection and tracking across diverse conditions.
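Denser point clouds matter because downstream software must group individual points into objects. One widely used, generic way to do this is DBSCAN density-based clustering, sketched below in plain Python. It is swapped in here for illustration and is not a description of bitsensing’s pipeline; the `eps` radius, the `min_pts` count, and the sample coordinates are hypothetical.

```python
import math

Point = tuple[float, float, float]  # (x, y, z) in metres


def dbscan(points: list[Point], eps: float, min_pts: int) -> list[int]:
    """Label each point with a cluster id, or -1 for noise (plain DBSCAN)."""
    labels = [None] * len(points)  # None = not yet visited

    def neighbours(i: int) -> list[int]:
        # Brute-force radius search; fine for a few thousand points per frame.
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = list(seeds)
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # former noise becomes a border point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neigh = neighbours(j)
            if len(j_neigh) >= min_pts:
                queue.extend(j_neigh)  # j is a core point: keep expanding
    return labels


car = [(20.0, 0.0, 0.5), (20.3, 0.2, 0.6), (20.1, -0.3, 0.4), (20.4, 0.1, 0.9)]
pedestrian = [(12.0, 3.0, 1.0), (12.1, 3.1, 1.4), (11.9, 3.0, 0.6)]
clutter = [(35.0, -8.0, 0.2)]
print(dbscan(car + pedestrian + clutter, eps=0.8, min_pts=3))  # [0, 0, 0, 0, 1, 1, 1, -1]
```

With more points per object, each real target is more likely to reach `min_pts` and form a cluster, while isolated clutter is labeled as noise (`-1`).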

This advancement responds directly to growing demand for smarter, predictive safety features. Automatic emergency braking (AEB) is one example: it began as a system for preventing rear-end collisions but has evolved to handle complex urban scenarios, monitor vulnerable road users such as pedestrians and cyclists, and make split-second decisions.
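An AEB decision commonly starts from time to collision (TTC): range divided by closing speed, both of which radar measures. The sketch below is a simplified, assumed decision rule; the thresholds `WARN_TTC_S` and `BRAKE_TTC_S` are hypothetical, and real AEB logic also weighs target classification, driver input, and vehicle dynamics.

```python
import math

# Hypothetical thresholds, for illustration only.
WARN_TTC_S = 2.6
BRAKE_TTC_S = 1.4


def time_to_collision(range_m: float, doppler_mps: float) -> float:
    """Range divided by closing speed; infinite if the target is not approaching.

    Assumes negative Doppler (range rate) means the target is approaching.
    """
    closing_speed = -doppler_mps
    if closing_speed <= 0.0:
        return math.inf
    return range_m / closing_speed


def aeb_action(range_m: float, doppler_mps: float) -> str:
    """Map TTC to a coarse action: "brake", "warn", or "none"."""
    ttc = time_to_collision(range_m, doppler_mps)
    if ttc <= BRAKE_TTC_S:
        return "brake"
    if ttc <= WARN_TTC_S:
        return "warn"
    return "none"


print(aeb_action(30.0, -10.0))  # TTC 3.0 s -> none
print(aeb_action(20.0, -10.0))  # TTC 2.0 s -> warn
print(aeb_action(12.0, -10.0))  # TTC 1.2 s -> brake
print(aeb_action(12.0, 2.0))    # receding target -> none
```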

Traditional AEB systems typically rely on cameras and conventional radar, which provides distance and velocity but no elevation. These setups can struggle to detect and classify objects in difficult conditions such as occlusion, harsh lighting, or severe weather. Integrating 4D imaging radar with other radar sensors and cameras strengthens the perception system, adding high-resolution spatial data and precise velocity measurements that help track and predict the real-time movement of multiple objects.
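Tracking and predicting motion can be illustrated with a per-object constant-velocity filter. In the sketch below, a simplified fixed-gain filter (not a full Kalman filter) corrects range from the range measurement and velocity from the Doppler measurement directly. The `Track` class, the gains, and the assumption that detections are already associated with track IDs are hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """Constant-velocity state of one object along the radar line of sight."""
    range_m: float
    range_rate_mps: float  # negative while the object approaches

    def predict(self, dt_s: float) -> None:
        """Advance the state by dt_s assuming constant velocity."""
        self.range_m += self.range_rate_mps * dt_s

    def update(self, range_meas_m: float, doppler_meas_mps: float,
               alpha: float = 0.5, beta: float = 0.5) -> None:
        """Blend the prediction with a new measurement using fixed gains.

        Velocity is corrected from its own (Doppler) measurement rather than
        being inferred from the change in range between frames.
        """
        self.range_m += alpha * (range_meas_m - self.range_m)
        self.range_rate_mps += beta * (doppler_meas_mps - self.range_rate_mps)

    def forecast(self, horizon_s: float) -> float:
        """Predicted range horizon_s seconds ahead, assuming constant velocity."""
        return self.range_m + self.range_rate_mps * horizon_s


def step(tracks: dict[str, Track], detections: dict[str, tuple[float, float]],
         dt_s: float) -> None:
    """Predict every track, then update those with an associated detection."""
    for track_id, track in tracks.items():
        track.predict(dt_s)
        if track_id in detections:
            track.update(*detections[track_id])


tracks = {"car": Track(40.0, -8.0), "cyclist": Track(15.0, -2.0)}
step(tracks, {"car": (39.1, -8.4), "cyclist": (14.8, -2.2)}, dt_s=0.1)
for name, t in tracks.items():
    print(name, round(t.range_m, 2), round(t.range_rate_mps, 2), round(t.forecast(1.0), 2))
```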

Global regulations are accelerating this shift. The European New Car Assessment Programme (Euro NCAP) has expanded testing to include complex scenarios such as turning across oncoming traffic, crossing pedestrians, and cyclists emerging from blind spots. From 2026, Euro NCAP will raise the bar further, making high-resolution, all-weather sensing technologies like 4D imaging radar essential for top safety ratings. In the U.S., the National Highway Traffic Safety Administration (NHTSA) is also pushing for stronger requirements, such as mandatory AEB with pedestrian detection on all new light vehicles.

At the same time, software-defined vehicles (SDVs) are replacing traditional architectures with centralized computing platforms that continuously evolve through over-the-air updates. This shift requires perception hardware like radar to not only perform reliably from day one but also support ongoing software-driven enhancements. 4D imaging radar is built for this future, with adaptable algorithms and machine learning-based classification that can improve over time as driving conditions, regulations, and user needs evolve.

Sensor fusion

In Western markets, particularly among premium original equipment manufacturers (OEMs), we’re seeing a growing preference for high-performance 4D imaging radar to enable more advanced autonomous functions and top safety ratings. Meanwhile, in Asia, including China, demand is increasing for entry-level 4D imaging radar that complements existing camera-based systems, adding reliability in poor weather or low-visibility conditions while remaining cost-effective.

As vehicles adopt sensor fusion, in which radar, cameras, light detection and ranging (LiDAR), and ultrasonic sensors work together to build a unified view of the environment, 4D imaging radar plays a critical role (see Fig. 2). Cameras estimate velocity indirectly and can struggle in low light, and LiDAR measures positions but must calculate velocity across frames. 4D imaging radar, by contrast, directly measures both position and radial velocity in real time through Doppler processing. This allows it to track multiple moving objects with high precision, enabling smoother, earlier interventions, whether adjusting speed, changing lanes, or preventing collisions.
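The Doppler relationship behind that direct velocity measurement fits in a few lines. A target’s radial speed follows from the two-way Doppler shift f_d as v_r = f_d λ / 2, where λ is the carrier wavelength. The sketch assumes a 77 GHz carrier and the convention that an approaching target has a negative range rate; the function names are hypothetical. It also shows a limitation worth knowing: Doppler observes only the line-of-sight component of velocity, so a purely crossing target reads zero radial velocity at that instant, and fusion or tracking across frames recovers the rest.

```python
import math

C_MPS = 299_792_458.0  # speed of light in vacuum
CARRIER_HZ = 77e9      # assumed carrier; automotive radar commonly uses 76-81 GHz


def doppler_to_range_rate(doppler_shift_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Range rate from the two-way Doppler shift: |v_r| = f_d * wavelength / 2.

    A positive shift (approaching target) is returned as a negative range rate.
    """
    wavelength_m = C_MPS / carrier_hz
    return -doppler_shift_hz * wavelength_m / 2.0


def expected_range_rate(position_m, velocity_mps) -> float:
    """Radial component of a target's velocity: the part Doppler can observe."""
    distance = math.hypot(*position_m)
    return sum(p * v for p, v in zip(position_m, velocity_mps)) / distance


print(doppler_to_range_rate(10_000.0))                          # about -19.5 m/s
print(expected_range_rate((10.0, 0.0, 0.0), (-5.0, 3.0, 0.0)))  # -5.0
print(expected_range_rate((10.0, 0.0, 0.0), (0.0, 5.0, 0.0)))   # 0.0 (purely crossing)
```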

The global automotive radar market is expected to exceed $20B by 2030, with 4D imaging radar driving most of this growth. Leading automakers are launching vehicles with 4D radar as a standard or premium feature, recognizing that it’s no longer just a competitive advantage but essential to meet evolving safety standards and customer expectations.

Advanced 4D imaging radar solutions generate high-resolution point cloud data with enhanced vertical resolution and micro-Doppler capabilities, allowing vehicles to classify objects, track motion patterns, and predict risks before accidents occur.
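Micro-Doppler analysis proper works on time-frequency signatures of the returns, such as spectrograms built over many frames, which is beyond a short example. As a crude per-frame proxy, the sketch below measures how widely the Doppler velocities inside one object’s cluster are spread: the moving limbs of a walking pedestrian spread the returns around the torso speed, while returns from a car body move together. The feature, the sample values, and `SPREAD_THRESHOLD_MPS` are hypothetical stand-ins for what a trained classifier would learn.

```python
import statistics

# Hypothetical threshold for illustration; a real classifier would learn its features.
SPREAD_THRESHOLD_MPS = 0.5


def doppler_spread(doppler_mps: list[float]) -> float:
    """Population standard deviation of the radial velocities in one cluster."""
    return statistics.pstdev(doppler_mps)


def looks_articulated(doppler_mps: list[float]) -> bool:
    """Crude flag: widely spread Doppler suggests moving parts such as limbs."""
    return doppler_spread(doppler_mps) > SPREAD_THRESHOLD_MPS


car_cluster = [-8.0, -8.1, -7.9, -8.0]               # rigid body: nearly identical speeds
pedestrian_cluster = [-1.4, -0.2, -2.6, -1.5, -0.6]  # torso plus swinging arms and legs
print(looks_articulated(car_cluster))         # False
print(looks_articulated(pedestrian_cluster))  # True
```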

As the automotive industry moves rapidly toward full autonomy, and regulations, urbanization, and consumer expectations call for higher levels of safety, 4D imaging radar is becoming a core pillar of vehicle perception. The road ahead demands more than detection—it also requires prediction.

About the Author

Jae-Eun Lee

Jae-Eun Lee is the CEO of bitsensing, an imaging radar technology startup based in South Korea.