Case Study: 3D Image Analysis

Image Analysis of a 3D Image

Several manufacturers sell 3D cameras that use 2D sensor arrays sensitive to the phase of reflected laser light. All of them spread the laser light so that it continuously illuminates the entire scene of interest. The laser light is modulated synchronously with the pixel sensitivity. The phase of the laser light reflected back to the sensor pixels depends on the distance to the reflection points, and this is the basis for calculating the XYZ positions of the illuminated surface points.
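The relation between phase and distance is not spelled out above. Here is a minimal sketch that assumes continuous-wave sinusoidal modulation at a single frequency `f_mod`. This is a common scheme for phase-sensing cameras, but it is an assumption here. Because the light makes a round trip, a phase shift Δφ corresponds to d = c·Δφ / (4π·f_mod), and distances beyond c / (2·f_mod) wrap around. The second step turns per-pixel distances into XYZ points. It assumes an ideal pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy` (in pixels). Real cameras also need lens-distortion correction.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s

def phase_to_distance(phase_rad, f_mod_hz):
    """Convert a measured phase shift (radians, in [0, 2*pi)) to distance in metres.

    Assumes continuous-wave modulation at f_mod_hz. The light travels to the
    target and back, so d = c * phi / (4 * pi * f_mod).
    """
    return C * np.asarray(phase_rad) / (4.0 * np.pi * f_mod_hz)

def ambiguity_range(f_mod_hz):
    """Maximum unambiguous distance c / (2 * f_mod); larger distances wrap around."""
    return C / (2.0 * f_mod_hz)

def distance_to_xyz(dist, fx, fy, cx, cy):
    """Back-project an H x W image of distances to XYZ with a pinhole model.

    fx, fy, cx, cy are hypothetical intrinsics in pixels. Each pixel measures
    distance along its own viewing ray, so XYZ = dist * unit_ray.
    """
    dist = np.asarray(dist, dtype=float)
    h, w = dist.shape
    v, u = np.mgrid[0:h, 0:w]  # v: row index, u: column index
    rays = np.stack([(u - cx) / fx, (v - cy) / fy, np.ones_like(dist)], axis=-1)
    rays /= np.linalg.norm(rays, axis=-1, keepdims=True)  # unit viewing rays
    return rays * dist[..., None]  # shape (H, W, 3)
```

For example, at a hypothetical modulation frequency of 20 MHz, the unambiguous range is about 7.5 m. A phase shift of π then corresponds to about 3.75 m.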

While this basic principle of operation is shared by a number of 3D cameras, many technical details determine the quality of the data they produce.

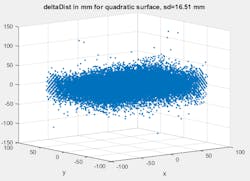

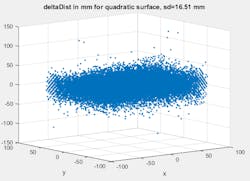

The best known of these 3D cameras is the Microsoft Kinect, which also provides the best distance measurement resolution. According to our measurements, its standard deviation of distance is below 2 mm for both white and relatively dark objects. Most other 3D cameras have higher distance measurement noise. This noise is often unacceptably high even for targets with a relatively high reflectivity of 20 percent. Here we show an example of data obtained with one not-so-good European 3D camera that uses a 2D array of time-of-flight (ToF) pixels sensitive to laser light. We used the camera's default settings to calculate distances to a white target 110 cm from the camera, which is close to the camera's default calibration distance. The point cloud here shows the deviations of the measured distances from a smooth approximating surface in the center of the image:

Here X and Y are distances from the image center measured in pixels.
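The text does not say which smooth surface was used. The following is a minimal sketch of how such deviations can be obtained from a distance image of a flat white target. It assumes a quadratic polynomial in X and Y, fitted by least squares to a square window around the image center. The window size `half_size` is a hypothetical parameter.

```python
import numpy as np

def surface_residuals(dist, half_size=20):
    """Fit a quadratic surface to the central region of a distance image.

    Returns (X, Y, residuals), with X and Y measured in pixels from the image
    center. The quadratic model is an assumption; any smooth low-order
    surface would serve for a flat target.
    """
    dist = np.asarray(dist, dtype=float)
    h, w = dist.shape
    cy, cx = h // 2, w // 2
    patch = dist[cy - half_size:cy + half_size + 1, cx - half_size:cx + half_size + 1]
    Y, X = np.mgrid[-half_size:half_size + 1, -half_size:half_size + 1]
    x, y, z = X.ravel().astype(float), Y.ravel().astype(float), patch.ravel()
    # Design matrix for z ~ a + b*x + c*y + d*x^2 + e*x*y + f*y^2
    A = np.column_stack([np.ones_like(x), x, y, x**2, x * y, y**2])
    coeffs, *_ = np.linalg.lstsq(A, z, rcond=None)
    residuals = (z - A @ coeffs).reshape(patch.shape)
    return X, Y, residuals
```

The standard deviation of `residuals` is then the distance noise figure that can be compared between cameras.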

Here is a histogram of the distance noise:

Both figures show that, in addition to Gaussian-looking noise, some points have very large deviations. These large deviations are caused by strong fixed-pattern noise (differences in sensitivity between pixels).
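One way to quantify the difference between the Gaussian-looking core and the large deviations is to compare two noise estimates. The ordinary standard deviation is inflated by outliers. A robust estimate based on the median absolute deviation (MAD) is not. The sketch below is an illustration, not the procedure used for the figures. The threshold `k` and the bin count are arbitrary assumptions. Fixed-pattern noise is tied to particular pixels, so if several frames of a static scene are available, the same pixels would be expected to be flagged each time.

```python
import numpy as np

def split_gaussian_and_outliers(residuals, k=5.0, bins=50):
    """Separate a Gaussian-looking noise core from large deviations.

    sigma is estimated from the median absolute deviation, scaled by 1.4826
    so it matches the standard deviation for Gaussian data; unlike np.std it
    is barely affected by a small fraction of huge deviations.
    k = 5 is an illustrative threshold, not a standard value.
    """
    r = np.asarray(residuals, dtype=float).ravel()
    med = np.median(r)
    sigma = 1.4826 * np.median(np.abs(r - med))
    outliers = np.abs(r - med) > k * sigma
    counts, edges = np.histogram(r, bins=bins)
    return {
        "std": r.std(),                         # inflated by the outliers
        "robust_sigma": sigma,                  # reflects the Gaussian core
        "outlier_fraction": outliers.mean(),    # share of points beyond k*sigma
        "histogram": (counts, edges),
    }
```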

The noise of this camera is at least 8 times higher than that of the Kinect, but further problems become visible when looking at 2D projections of the 3D point cloud. Projected onto the camera plane, the color-coded distances (in cm) for a simple scanned scene do not look too bad:

Here the X index and Y index are simply the pixel numbers in the x and y directions.

The picture becomes more interesting when looking at the projection of the same point cloud onto the X-Distance plane:

We can clearly see stripes separated by about 4 cm in distance.
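Stripes in the X-Distance projection mean that the measured distances cluster around preferred values. A fine histogram of all distances then looks like a comb, and its period is the stripe spacing. Below is a minimal sketch that estimates this period from the autocorrelation of the histogram. It assumes the scene's true distances are spread fairly evenly over the measured range, as they would be for a tilted wall. A scene with a strongly peaked distance distribution would need the histogram detrended first. The bin width and the search window are hypothetical parameters.

```python
import numpy as np

def estimate_stripe_spacing(distances_mm, bin_mm=2.0, min_mm=10.0, max_mm=100.0):
    """Estimate the spacing (in mm) of spurious stripes in measured distances.

    Builds a fine histogram of all finite distances, removes its mean level,
    and returns the lag (converted to mm) with the strongest autocorrelation
    inside the [min_mm, max_mm] search window.
    """
    d = np.asarray(distances_mm, dtype=float).ravel()
    d = d[np.isfinite(d)]
    edges = np.arange(d.min(), d.max() + bin_mm, bin_mm)
    counts, _ = np.histogram(d, bins=edges)
    c = counts - counts.mean()                          # remove the DC level
    ac = np.correlate(c, c, mode="full")[c.size - 1:]   # autocorrelation at lags 0, 1, 2, ...
    lo = int(round(min_mm / bin_mm))
    hi = min(int(round(max_mm / bin_mm)), ac.size - 1)
    best_lag = lo + int(np.argmax(ac[lo:hi + 1]))
    return best_lag * bin_mm
```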

No such spurious stripes appear in point clouds from good 3D cameras such as the Kinect.

Our 3D image analysis of this European 3D camera therefore leads to the conclusion that it is not competitive with the best available 3D cameras.