Optics replicate human vision in AR/VR display testing

DOUG KREYSAR and ERIC EISENBERG

The augmented reality (AR), virtual reality (VR), and wearable device market is growing rapidly and is expected to reach 81.2 million units by 2021, with a compound annual growth rate (CAGR) of 56.1%.¹ This market growth fuels an increasing need to measure displays for visual quality that is representative of what the wearer sees while the display is being worn, which in turn requires new measurement methods.

As images in near-eye displays (NEDs) are magnified to fill the user’s field of view (FOV), defects in the display are also magnified. These defects not only detract from the user experience, but ultimately can damage a company’s brand image in this increasingly competitive new marketplace. Display testing designed specifically for AR/VR, therefore, is an emerging necessity.

Visual quality tests for traditional flat-panel displays evaluate parameters such as luminance (brightness), color, uniformity, and visual defects. Accomplishing the same measurements for NEDs poses a challenge for manufacturers due to the limitations of traditional measurement systems.

Every AR/VR device manufacturer takes a unique approach to integrating displays within headsets, and technology and hardware configurations vary greatly. Thus, an effective NED test solution must be adaptable to the geometries of each device and the different display specifications.

Near-eye display measurement challenges

For AR/VR devices, NEDs share specific characteristics that present unique visual measurement challenges. Through the head-mounted or goggle-type display, the wearer may be viewing objects either up close or across a wide field of view.

Unfortunately, NED projections magnify potential display defects. Nonuniformity of color and brightness, dead pixels, line defects, and inconsistencies from eye to eye become more apparent to the user when viewed up close. Resolution is also a critical factor for AR/VR displays. To create visually realistic images across the display, NEDs must have more display pixels per eye. These pixel-dense displays require high-resolution measurement devices.

Increasing the FOV of a NED provides a more immersive visual experience for the user, but the wider the FOV of the display, the more challenging it becomes to comprehensively capture and measure the full display using an imaging system. Human binocular vision covers an approximately 114–120° horizontal FOV, while several leading NEDs achieve FOVs between 100° and 120° (see Fig. 1).²

Typically, NEDs are integrated within a heads-up display (HUD) or head-mounted device (HMD) such as a headset or goggles. To measure a display as it would be viewed by a human user wearing the device, the measurement system must be positioned within the headset at the same position as the human eye. The measurement system’s entrance pupil (the lens aperture) must emulate the human pupil position within the headset to capture the full FOV of the display as seen through the viewing aperture of the headset.

Additional measurement criteria

Display testing for AR/VR devices demands unique image characterization data and analyses. For instance, luminance (brightness of the projection) and color uniformity are critical when combining images from eye to eye, or when images are overlaid on top of the surrounding ambient environment (as in AR).

Image sharpness and clarity are important when displays are viewed near to the eye. Characterizing image distortion caused by the viewing goggles or display FOV is also key to improving spatial image accuracy and projection alignment. An AR/VR measurement solution should include analysis functions for these common criteria, along with repeatable image accuracy to ensure consistent quality from device to device.
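As one illustration of what such an analysis function might look like, the sketch below computes a simple min/max luminance uniformity figure from a 2D luminance map. The function names, the 3 x 3 region grid, and the sample values are hypothetical choices made for this example; production software would likely use more elaborate, standards-based metrics.

```python
def region_means(image, rows, cols):
    """Split a 2D luminance map (a list of equal-length rows) into a
    rows x cols grid and return the mean luminance of each region.
    Assumes the map has at least `rows` rows and `cols` columns."""
    height, width = len(image), len(image[0])
    means = []
    for r in range(rows):
        y0, y1 = r * height // rows, (r + 1) * height // rows
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            values = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            means.append(sum(values) / len(values))
    return means


def uniformity_percent(image, rows=3, cols=3):
    """Min/max uniformity: 100 * (dimmest region mean / brightest region mean)."""
    means = region_means(image, rows, cols)
    brightest = max(means)
    if brightest <= 0:
        raise ValueError("luminance map contains no light to evaluate")
    return 100.0 * min(means) / brightest


# Hypothetical 6 x 6 luminance map in cd/m^2: 100 everywhere,
# except a dimmer 2 x 2 patch in the top-left corner.
luminance = [[100.0] * 6 for _ in range(6)]
for y in range(2):
    for x in range(2):
        luminance[y][x] = 80.0

print(f"uniformity: {uniformity_percent(luminance):.1f}%")
```

Running the same function on the left-eye and right-eye images would give a rough basis for the eye-to-eye comparison described above.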

In essence, emerging AR/VR technologies require an innovative approach to display testing, including new methodology, software algorithms, and—of critical importance for in-headset measurement—new optical geometries. Traditional imaging solutions attempt to meet the unique testing criteria for AR/VR devices, but have significant limitations when it comes to comprehensively addressing all of the measurable AR/VR display characteristics. Above all, a measurement solution needs to closely match the visual capabilities of the human eye to evaluate the true user experience.

Replicating the human visual experience

Human visual perception should be the standard for judging the optical performance of NEDs. To objectively assess the human visual experience, there are several key elements that the display test system must provide, including photometric data, full FOV analysis, and high resolution.

An essential element for all displays is the appearance of light and color. Imaging photometers and colorimeters are best suited to evaluate visual display qualities because they are engineered with special filters that mimic the response of the human eye to different wavelengths of light (the photopic response curve).
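For reference, the photopic weighting that such filters approximate is conventionally written as below. The symbols are standard photometry notation rather than anything defined in this article: L_v is luminance, L_{e,λ} is spectral radiance, V(λ) is the CIE photopic luminous efficiency function, and K_m is the maximum luminous efficacy.

```latex
L_v = K_m \int_{380\,\mathrm{nm}}^{780\,\mathrm{nm}} L_{e,\lambda}(\lambda)\, V(\lambda)\, \mathrm{d}\lambda,
\qquad K_m = 683\ \mathrm{lm/W}
```

A filtered imaging photometer effectively performs this weighting optically at every sensor pixel, which is why its output can be read as luminance rather than raw radiometric signal.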

Photometric imaging systems are commonly used for display testing, as they capture a complete display in a single, 2D image to analyze photometric data in a spatial context. This context is critical for evaluations of uniformity, distortion, sharpness, contrast, and image position. A NED measurement system should use photometric technology with photopic or colorimetric filters to evaluate light values as they are received by the human eye.

Within the NED headset, the user is meant to have visual access to the entire FOV projected by the display, and therefore may notice defects at any point on the display. Imaging photometers and colorimeters that rely on image sensors (such as CCDs) need only one image to capture the display in full. Like the human eye, an imaging system can see all details in a single view at once. Paired with wide-FOV optics, an imaging system can capture a wide-FOV display, simulating human binocular vision. An imaging system with wide-FOV optics is recommended for the most accurate and comprehensive NED measurement.

Most AR/VR displays are meant to be viewed extremely close to the eye, which is itself a high-precision imager. Therefore, NEDs are some of the highest-resolution displays, fitting the most pixels in the smallest form factor for a more seamless visual experience. The system used to measure an integrated AR/VR display needs to have sufficient resolution to capture all details that may be visible to the human eye in pixel-dense displays at close range. Using a high-resolution imaging system, each display pixel can be imaged across several image sensor pixels, enabling precise pixel-level defect detection (see Fig. 2).
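To make the sampling requirement concrete, the following sketch estimates how many sensor pixels fall across one display pixel along a single axis. It assumes pixels are spread evenly in angle across both the display FOV and the lens FOV, which real wide-FOV optics only approximate, and the function name and example numbers are hypothetical rather than drawn from any particular product.

```python
def sensor_pixels_per_display_pixel(display_px, display_fov_deg,
                                    sensor_px, lens_fov_deg):
    """Estimate sensor pixels per display pixel along one axis.

    Assumes a uniform angular pixel pitch for both the display and the
    imaging lens (an idealization; distortion changes this locally).
    """
    if min(display_px, display_fov_deg, sensor_px, lens_fov_deg) <= 0:
        raise ValueError("all inputs must be positive")
    display_px_per_deg = display_px / display_fov_deg
    sensor_px_per_deg = sensor_px / lens_fov_deg
    return sensor_px_per_deg / display_px_per_deg


# Hypothetical example: a 1440-pixel-wide display filling a 100 degree FOV,
# imaged by a 6000-pixel-wide sensor behind a 120 degree lens.
ratio = sensor_pixels_per_display_pixel(
    display_px=1440, display_fov_deg=100.0,
    sensor_px=6000, lens_fov_deg=120.0,
)
print(f"{ratio:.2f} sensor pixels per display pixel")
```

A ratio comfortably above one along each axis is what allows an individual display pixel to be resolved; a ratio near or below one would blur neighboring pixels together.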

Along with high resolution, imaging systems used to evaluate NED devices should also offer high dynamic range (the ability to discern a wider range of gray level values) and low image noise to simulate human visual perception with the greatest accuracy.
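The article does not specify how dynamic range should be quantified. One common convention for image sensors, assumed here purely for illustration, is the ratio of full-well capacity to read noise, expressed as a ratio, in decibels, or in stops. The function name and sample values below are hypothetical.

```python
import math


def dynamic_range(full_well_electrons, read_noise_electrons):
    """Return (ratio, decibels, stops) for single-exposure dynamic range,
    using the full-well / read-noise convention (an assumption here)."""
    if full_well_electrons <= 0 or read_noise_electrons <= 0:
        raise ValueError("inputs must be positive")
    ratio = full_well_electrons / read_noise_electrons
    return ratio, 20.0 * math.log10(ratio), math.log2(ratio)


# Hypothetical sensor: 20,000 e- full well, 5 e- read noise.
ratio, decibels, stops = dynamic_range(20000, 5)
print(f"{ratio:.0f}:1, {decibels:.1f} dB, {stops:.1f} stops")
```

Lower noise raises all three figures, which is why low image noise and high dynamic range are named together above.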

Aperture importance

One of the toughest challenges in measuring NEDs within headsets is positioning the measurement device in such a way as to view the entire display FOV through the headset goggle or lens. If the measurement system can obtain an image of the full display FOV as the user sees it, tests can be applied to evaluate any defects that may be visible to the user during operation of the device. The challenge is that the human eye is at a very particular position within AR/VR headsets, which many imaging systems cannot replicate.

Ideally, a NED measurement solution can capture the full display FOV in a single image for the most efficient and consistent measurement of display characteristics and uniformity. Unique optical parameters that enable imaging systems to capture the full visible FOV include the lens aperture position and geometry. In an optical system, such as the lens on a camera, the aperture or “entrance pupil” is the initial plane where light is received into the lens. A similar point exists in the pupil of the human eye.

A display measurement system that replicates the size, position, and FOV of the human pupil within the headset is ideal for capturing an image of the display to allow evaluation of all qualities that the user may see.

Replicating the human entrance pupil size is important because it enables the system to receive the same amount of light as the human eye. Capturing equivalent light means that measurement images capture equivalent detail and clarity as the human eye would see, enabling the measurement system to make relevant determinations of quality.

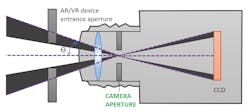

Simulating the human eye position within AR/VR headsets is also critical. A traditional 35 mm lens has an internal aperture that cannot capture the full FOV of the display because it cannot be positioned close enough to the AR/VR device entrance aperture to replicate the position of the human pupil (see Fig. 3).

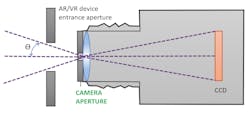

By contrast, optical components designed with the aperture at the front of the lens replicate the position of the human pupil inside the AR/VR headset (see Fig. 4). Combined with wide-FOV optics, this configuration enables the measurement system to capture the full display FOV (the entire image visible to a human user through the headset).

Optimal NED measurement solution criteria

The latest display integration environments, such as AR/VR and other head-mounted devices, require new methods to test the optical quality of displays that are viewed close-up from a fixed position within headset hardware. Standard display measurement equipment lacks the optical specifications to capture displays within headsets to evaluate the complete display FOV as experienced by the human user.

In summary, an optimal AR/VR display test solution must include optical components that replicate the human pupil size and position within AR/VR devices to capture a display FOV across the human binocular FOV of approximately 120° horizontal, along with high-resolution imaging to quickly evaluate all elements of the display FOV with the same precision as the human eye. Finally, optimal solutions must provide an imaging photometer and analysis software for accurate testing of display luminance, chromaticity, contrast, uniformity, blemishes, pixel or line defects, and other indications of visual display quality. Only then can the AR/VR industry ensure that its products are delivering the viewing experience expected and deserved by the wearer.

REFERENCES

1. See http://bit.ly/ARVRRef1.

2. See http://bit.ly/ARVRRef2.

Doug Kreysar is executive vice president and chief solutions officer and Eric Eisenberg is applications engineering manager, both at Radiant Vision Systems, Redmond, WA; www.radiantvisionsystems.com.