During the past several decades, silicon has undeniably been the crown jewel of the semiconductor industry’s transformation. But with the plateauing of Moore’s Law, the increasing complexity of circuits, and the explosive growth of data-intensive applications, companies need even more innovative ways to compute, store, and move data faster. As a result, scale, speed, and power have become underlying forces to handle both advanced intelligence and computing needs.

Silicon photonics has already earned a stronghold for its impressive performance, power efficiency, and reliability compared to conventional electronic integrated circuits. Data rates have now become high enough to play to the technology’s strength: transferring data efficiently, even over ever-shortening distances. Meanwhile, artificial intelligence (AI) is pushing computing to a point where electronic components need to communicate over distance to combine and integrate multiple XPUs (application-specific processing units).

Research and commercialization in silicon photonics have witnessed a parallel surge, with markets such as datacom, telecom, optical computing, and high-performance sensing applications like LiDAR also seeing its advantages come to life. According to research by LightCounting, the market share of silicon photonics-based products is expected to increase from 14% in 2018-2019 to 45% by 2025, indicating an inflection point for the technology’s adoption [1].

This comes as no surprise, as more companies collaborate and invest in silicon photonics to solve current electrical I/O and bandwidth bottlenecks, along with the challenges existing discrete components face in delivering exponential growth and performance.

This market shift and aspiration did not happen overnight.

How we got here: From vacuum tubes to interconnects

From the 1920s to 1950s, all electronic components were separate elements—primarily vacuum tubes that controlled the electric current flow between electrodes to which an electric voltage would be applied. Shortly after, the first transistor was invented, which marked the beginning of the electronics industry’s extraordinary progress. The industry then expanded even further with the inception of integrated circuits—one chip with millions or billions of transistors embedded. The development of the microprocessor soon followed suit, benefiting everything from pocket-sized calculators to home appliances.

Classical microprocessors advanced in speed throughout the 1990s, but since about 2003, mainstream processors have been stuck at the 3 GHz clock wall. Even as the number of transistors kept increasing, processors were overheating, and smaller transistors stopped being more efficient. That meant moving data from a computing chip to either a memory or another computing chip over copper wires was no longer sustainable, no matter how short the distance, and it compounded the difficulty of scaling performance.

The light at the end of the tunnel became silicon photonics.

The industry began seeing the promise of harnessing the power of light and combining semiconductor lasers and integrated circuits. The rich history and evolution in electronics inspired researchers and engineers to find new ways to integrate functions on a chip and use light beams at well-defined wavelengths to move data faster than electrical interconnects can.

Today, a similar physics trajectory is happening with electrical interconnects for chips at 100 Gbit/s per lane (four-level signaling at 50 Gbaud), where a lot of equalization power must be added to push the signal over copper wires. At 200 Gbit/s per lane (four-level signaling at 100 Gbaud), this problem worsens.
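The per-lane figures above follow from simple arithmetic, sketched here under the assumption that "four levels" refers to four-level pulse-amplitude modulation (PAM4), in which each symbol carries two bits. The function names are illustrative and do not come from any standard or library.

```python
def bits_per_symbol(levels: int) -> int:
    """Bits carried by one symbol of an amplitude code with `levels` levels.

    Assumes the level count is a power of two (2 for NRZ, 4 for PAM4).
    """
    if levels < 2 or levels & (levels - 1):
        raise ValueError("levels must be a power of two and at least 2")
    return levels.bit_length() - 1


def lane_bit_rate_gbps(symbol_rate_gbaud: float, levels: int) -> float:
    """Raw lane bit rate in Gbit/s: symbol rate times bits per symbol."""
    return symbol_rate_gbaud * bits_per_symbol(levels)


# Nominal rates only; coding and framing overhead are ignored in this sketch.
for gbaud in (50.0, 100.0):
    rate = lane_bit_rate_gbps(gbaud, levels=4)
    print(f"{gbaud:.0f} Gbaud with 4 levels -> {rate:.0f} Gbit/s per lane")
```

Doubling the lane rate with the same four levels means doubling the symbol rate, which is why the copper channel needs more equalization at 200 Gbit/s.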

Photonic interconnects, on the other hand, don’t suffer from the same problem because fibers can easily carry several terabits of data per second. Simply put, leveraging photonics to transfer information presents substantial enhancements in speed and energy efficiency in comparison to electronic approaches.

The race for power and speed

Every bit of speed-up comes at the cost of more power consumption. As the complexity of circuits and their designs grows (be it high lane counts, dense sensing, or terabit interconnects), teams will inevitably need to pull away from discrete approaches. We are already seeing this transition within the industry, with companies moving from discrete elements to silicon photonics, and eventually to platforms that have on-chip monolithically integrated lasers for added optical gain.

In the interconnect world, there is still a heavy emphasis on the data rate per pin. Today, a 100 Gbit/s interconnect uses four signal levels at 50 Gbaud to push twice the amount of data through what would otherwise be a 50 Gbit/s link. But a 200 Gbit/s interconnect needs still more power to get that signal across an electrical interconnect. Eventually, the power consumed becomes an issue, especially over longer distances. Consequently, teams can’t fit any more data through these electrical interconnects.

This isn’t the case with optical fibers. Think of an optical fiber as an open thousand-lane freeway. The computing box can be designed to be as big as a datacenter without sacrificing interconnect bandwidth. But when using discrete component parts, the size of processors is limited by their interconnect.

Today, some companies are taking a 12-in. wafer and making a single, massive chip out of it, with the interconnects designed to keep all cores functioning at high speeds so the transistors can work together as one. Yet, as modern computing architectures move closer to their theoretical performance limits, those very bandwidth demands rise in complexity and size, making laser integration more costly. With standard silicon photonics, one would need to attach a laser separately, which doesn’t scale well to multiple channels.

Integrated lasers: A match made for next-gen designs

Laser integration has long been a challenge in silicon photonics. The major areas of concern point to the very fundamentals of physics involved at the design level and the rising cost linked with the manufacturing, assembly, addition, and alignment of discrete lasers for the chip. This becomes a greater test when dealing with an increase in the number of laser channels and the overall bandwidth.

To date, silicon photonics has seen several photonic components embedded on a chip, but a key element missing until now is integrated gain. On-chip gain pulls away from standard silicon photonics to achieve a new level of integration and enhances overall computational and processing capabilities. This helps provide high-speed data transfers between and within chips at rates orders of magnitude greater than can be achieved with discrete devices. The technology’s ability to drive higher performance at lower power, or to reduce design cost and simplify manufacturing processes, has helped to drive its adoption.

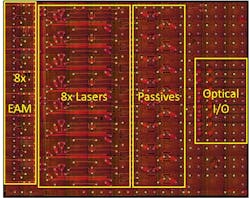

Take ultrasensitive sensing applications such as LiDAR. For coherent LiDAR, the light from the transmitter needs to be mixed with the receiver to back out information, which is why it gets better range information at lower power. With an integrated laser on a single chip, this process becomes easier because you can split off light and put it on a different part of the circuit. If you were to do this with discrete components, it would require a substantial amount of packaging. While the extent of its benefits depends on the complexity of the circuit, this is a core reason why approaches such as coherent frequency-modulated continuous-wave (FMCW) LiDAR really benefit from an integrated approach.Co-packaged optics and system-on-chip (SoC) interfaces.(Courtesy of OpenLight)
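To make the mixing step concrete, here is a minimal sketch of the textbook FMCW range relation. It assumes a linear frequency chirp and a stationary target, so there is no Doppler shift. The chirp bandwidth, chirp duration, and target distance are hypothetical values chosen for illustration, not parameters of any particular device.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def beat_frequency_hz(range_m: float, chirp_bandwidth_hz: float,
                      chirp_duration_s: float) -> float:
    """Beat frequency after mixing the outgoing chirp with its delayed echo."""
    slope_hz_per_s = chirp_bandwidth_hz / chirp_duration_s
    round_trip_s = 2.0 * range_m / C_M_PER_S
    return slope_hz_per_s * round_trip_s


def range_from_beat_m(beat_hz: float, chirp_bandwidth_hz: float,
                      chirp_duration_s: float) -> float:
    """Invert the relation above: recover target range from the beat frequency."""
    slope_hz_per_s = chirp_bandwidth_hz / chirp_duration_s
    return C_M_PER_S * beat_hz / (2.0 * slope_hz_per_s)


# Hypothetical chirp: 1 GHz of optical frequency sweep over 10 microseconds.
BANDWIDTH_HZ = 1.0e9
DURATION_S = 10.0e-6

beat = beat_frequency_hz(150.0, BANDWIDTH_HZ, DURATION_S)
recovered = range_from_beat_m(beat, BANDWIDTH_HZ, DURATION_S)
print(f"beat frequency: {beat / 1e6:.2f} MHz")
print(f"recovered range: {recovered:.2f} m")
```

The range appears as a frequency difference between the local copy of the transmitted light and the returned light, which is why splitting off part of the laser output on the same chip is convenient.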

Will silicon photonics replace electrical interconnects?

Processing materials such as indium phosphide for a semiconductor laser directly within the silicon photonics wafer fabrication process reduces cost, improves power efficiency and on-chip gain, and simplifies packaging. With monolithically integrated lasers, yields remain high, whereas scaling a design with discrete components results in unacceptable yields. At this point, even dozens of components in a circuit are revolutionary.

However, as with adopting any new technology, the ecosystem is going through a learning curve. Most fabrication units are still getting accustomed to bonding materials such as indium phosphide and gallium arsenide (used to make lasers) to silicon. Because of their different physical and thermal properties, there are some barriers to entry, relative to discrete approaches, that need to be overcome. In short, fabs that have spent decades nailing 8- or 10-in. wafers and different material purities now need to learn how to use newer materials and a different design space that makes the process unique.

Silicon photonics with integrated gain

With the pace at which silicon photonics technology is ramping up, companies and foundries are inevitably going to extend collaboration and R&D investments to enable a solid photonics ecosystem for components and integrated solutions. As transceivers scale to eight or 16 lanes, silicon photonics will be the only technology that can deliver the needed performance at lower power and a reasonable cost point.

Some may argue that with the complexity of each application varying and the underlying circuit being at the core, there may still be some unknowns in terms of its potential in areas such as complete autonomy or advanced driver-assistance systems (ADAS), but in no way will its benefits be invisible. At some point, silicon photonics will mature to the point where certain key metrics, including bandwidth, cost, and energy per bit, will be sufficient to replace electronics. Going forward, the main value of shifting to optics will be its reach.

REFERENCE

1. See www.lightwaveonline.com/14177636.

Tom Mader | Chief Operating Officer, OpenLight

Tom Mader is chief operating officer (COO) at OpenLight (Goleta, CA).