The dramatic rise of machine learning (ML) and generative artificial intelligence (AI), particularly large language models (LLMs), is creating explosive growth in data center traffic. Cloud computing and search tools already increased machine-to-machine data management needs by 10x compared to machine-to-user activity, and AI is expected to push machine-to-machine traffic higher still. This stems from the fact that LLMs use billions of parameters to understand data (via ML) and generate answers (through generative AI).

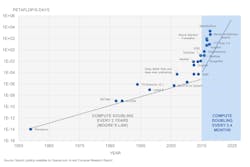

Unfortunately, the ability to meet this increasing bandwidth demand is constrained by the pace of hardware development. The compute demand of AI workloads is significantly outpacing Moore’s Law, with computational requirements now doubling every 3-4 months, compared with the historical average of every two years (see figure). As a result, network infrastructure within data centers must move to much higher data rates, use more wavelengths, and adopt higher-performance components just to keep up. Yet the total cost of ownership must also stay within reach, so that hyperscalers can cost-effectively sustain ongoing data center growth.
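To put the two doubling periods side by side, the short sketch below converts each into a growth factor over a common time horizon. It assumes steady exponential growth with a constant doubling period, which is a simplification, and the function name `growth_factor` is purely illustrative. Only the doubling periods (3-4 months and two years) come from the text above.

```python
def growth_factor(doubling_months: float, horizon_months: float) -> float:
    """Growth multiple over `horizon_months`, assuming demand doubles
    every `doubling_months` at a constant exponential rate (an assumption)."""
    return 2.0 ** (horizon_months / doubling_months)


if __name__ == "__main__":
    horizon = 12  # compare over one year
    # Doubling periods quoted in the article: 3-4 months for AI compute,
    # 24 months for the historical (Moore's Law) pace.
    for label, period in [
        ("AI compute, 3-month doubling", 3),
        ("AI compute, 4-month doubling", 4),
        ("Historical, 24-month doubling", 24),
    ]:
        factor = growth_factor(period, horizon)
        print(f"{label}: {factor:.2f}x per year")
```

Under that assumption, AI compute requirements grow roughly 8x to 16x per year, while the historical two-year doubling corresponds to about 1.4x per year.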

Consequently, optical communications hardware providers are being challenged like never before. Hyperscalers want vendors to optimize costs for the large volume of components they urgently need. Energy efficiency is also becoming a critical priority, due to increased component count and bandwidth requirements. Components must not only consume less power, but also deliver lower latency and higher performance in new, compact form factors. These attributes all translate directly into higher throughput and training capabilities for LLMs.

Key criteria for driving optics into the data center

With new pressures now weighing on the data center ecosystem, it is imperative that hyperscalers and associated hardware providers select the right supply chain partners—not only to support their immediate needs but also to collaborate closely on the purpose-built AI data centers of the future. Key factors to consider include:

Extensive photonics industry experience. While the AI-driven data center market is still new, some industry veterans have decades of experience providing large volumes of highly reliable, telecom-grade, physical layer components for traditional data center and communications networks, and they are ideally suited to support this growth. These battle-tested vendors can apply their experience overcoming previous technology challenges to foresee potential issues and optimize data center requirements for AI.

Current toolkit of enabling technologies. Top vendors have solutions operating within existing data center implementations, supporting live AI traffic today. Their broad product portfolios, breadth of IP, and range of resources can be tapped for new products. Soon, a step change in capabilities will be required to support the massive bandwidth requirements of AI. Having an existing product portfolio in place enables these vendors to increase capacity, reach, reliability, and performance more quickly and easily in future products.

Vertical integration. Internal ownership of product elements provides the ability to scale from the chip to module level, enabling vendors to offer the most competitive costs. Cost is not driven by the discrete hardware components alone; the price of packaging is also a major issue. It’s essential to work with a vendor with capabilities already in place to address ongoing cost pressures at the component, package, and module levels.

Functional integration. A vendor with deep experience in functional integration can customize and optimize new designs, materials, and production processes. This level of customization empowers hyperscaler customers to differentiate their technology in the highly competitive data center interconnect landscape.

Ability to scale. Burgeoning growth in data centers requires a vendor that can quickly and seamlessly scale capacity to meet the expected exponential growth in demand. The right partner will have proven success at high volume manufacturing, with automation capabilities that safeguard production, calibration, and testing to ensure the highest possible product reliability and quality.

Promise of silicon photonics for purpose-built AI infrastructure

With data center power consumption and total cost of ownership both rising as a result of the exponential growth of AI, the need for energy-efficient and cost-optimized solutions is now paramount. Silicon photonics is emerging as a critical platform for new optical products that allow data centers to meet the higher power demands of AI workloads without escalating operating costs.

Linear drive optics (LDO) is an innovative solution to tackle these challenges, because it eliminates the need for digital signal processor (DSP) electronics within the pluggable optics form factor. Co-packaged optics (CPO) is another solution that combines silicon photonics transceivers with electronics in a multichip module, leveraging advanced packaging and interposer technologies developed for the silicon semiconductor industry.

With both approaches, laser sources will need to be redesigned to offer the highest power conversion efficiency in a low-cost package. Silicon photonics can integrate these laser sources into a compact package, either inside LDO pluggables or in front-pluggable external laser sources for CPO transceivers. This silicon photonics-based laser package offers competitive performance and reduces cost through wafer-level packaging, assembly, and test at scale. This solution will be crucial for addressing evolving AI challenges.

Bringing it all together

Today, approximately 20% of data center network costs originate from optics. In addition, the power consumption of data center optics has risen to 10% of total data center power expenditures, up from 3% a decade ago. Lowering the cost, power requirements, and latency of data center optics, while significantly increasing performance and capacity, will be essential for the purpose-built, AI-driven data centers of the future.

Partnering with a vendor that offers proven credibility, a diverse product portfolio, functional and vertical integration, silicon photonics capabilities, and manufacturing at scale will give you a competitive advantage in the rapidly evolving AI data center market.

About the Author

Erman Timurdogan

Director of Silicon Photonics R&D, Lumentum

Erman Timurdogan, Ph.D., is the director of silicon photonics R&D at Lumentum for next-generation integrated laser and silicon photonics platforms. Prior to joining Lumentum, he was the director of photonics high-speed design at Rockley Photonics, and previously served as director of optical communications engineering at Analog Photonics. Timurdogan received his doctorate in Electrical Engineering and Computer Science from the Massachusetts Institute of Technology (MIT).