In-package optical interconnects enable new generative AI architectures

A lot has changed since we wrote about unleashing the full potential of AI with in-package optical I/O in July 2022.1 OpenAI unveiled ChatGPT in November 2022, and generative AI has been the talk of the tech and business realms since then. AI has captivated our collective interest as its potential for organizations to solve challenges and reshape our world becomes clearer.

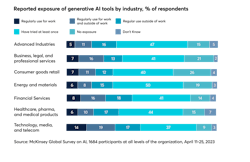

Recent surveys support this trend: a VentureBeat survey from July 2023, for example, found that 55% of organizations are experimenting with generative AI on a small scale to see if it can fit their needs.2 And a McKinsey Global Survey from August 2023 found that 79% of respondents say they have at least some exposure to generative AI—in everyday life or at work—and 22% said they regularly use it at work (see Fig. 1).3

But here’s the catch: With everyone diving into generative AI, training all of these models and processing all of these queries requires high-performance hardware—such as GPUs, TPUs, or ASICs—and large amounts of memory and storage. Moreover, the technology’s insatiable appetite for data processing continues to grow. Maintaining efficient data transmission and resource allocation is the key to quenching AI’s data thirst.

For generative AI, many GPUs must function as one giant GPU because the models are too large to fit in a single GPU’s memory. This places more strain on the interconnects between those GPUs than a traditional network can handle. Imagine these interconnects are highways moving data from one place to another. Traditional networks are like simple surface streets, unable to carry today’s heavy AI traffic loads at speed. The solution? A superhighway of sorts, in-package optical I/O, that can keep that AI traffic moving quickly and efficiently.

These interconnects play a crucial role in determining the performance of generative AI models. They directly impact model speed, efficiency, scalability, and the ability to adapt to changing demands and user needs. Let’s dive into this world of generative AI architectures to better understand the networking challenges they pose, the limitations of traditional interconnects, and how in-package optical I/O can overcome these challenges.

Meeting the scaling challenges of generative AI

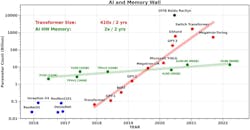

Large language models (LLMs) are deep learning algorithms that, once trained on large datasets, can recognize, summarize, translate, predict, and generate text. LLMs are at the heart of generative AI and continue to grow at a rapid pace. They excel at recognizing language syntax and serve as a foundation for other applications like search and vision technologies (see Fig. 2).

A recent report from DECODER claimed that OpenAI’s latest language model, GPT-4, has 1.76 trillion parameters, reportedly split across eight models of 220 billion parameters each.4 To put this in perspective, GPT-3 had 175 billion parameters in 2020, while GPT-2 had just 1.54 billion in 2019. As parameter counts continue to climb, high throughput becomes increasingly important. Throughput can be raised either by adding nodes or by increasing each node’s speed.
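To see why parameter counts like these force many GPUs to act as one, it helps to estimate how much memory the weights alone occupy. The sketch below is a rough illustration under two assumptions not stated in the article: 2 bytes per parameter (16-bit weights) and a hypothetical 80 GB accelerator. It ignores activations, optimizer state, and caches, all of which push the real GPU count higher.

```python
import math

# Parameter counts cited in the text (the GPT-4 figures are reported, not confirmed).
GPT2_PARAMS = 1.54e9
GPT3_PARAMS = 175e9
GPT4_PARAMS = 8 * 220e9  # eight reported sub-models of 220 billion parameters each

# Assumptions for illustration only: 16-bit weights, hypothetical 80 GB accelerator.
BYTES_PER_PARAM = 2
GPU_MEMORY_BYTES = 80e9


def weight_footprint_bytes(params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory needed just to hold the model weights."""
    return params * bytes_per_param


def min_gpus_for_weights(params: float) -> int:
    """Lower bound on GPUs needed to hold the weights; activations,
    optimizer state, and caches are ignored and would raise this number."""
    return math.ceil(weight_footprint_bytes(params) / GPU_MEMORY_BYTES)


if __name__ == "__main__":
    print(f"GPT-4 total parameters: {GPT4_PARAMS / 1e12:.2f} trillion")
    print(f"GPT-3 vs. GPT-2 growth: {GPT3_PARAMS / GPT2_PARAMS:.0f}x")
    print(f"GPT-4 vs. GPT-3 growth: {GPT4_PARAMS / GPT3_PARAMS:.1f}x")
    for name, p in [("GPT-3", GPT3_PARAMS), ("GPT-4", GPT4_PARAMS)]:
        print(f"{name}: {weight_footprint_bytes(p) / 1e12:.2f} TB of weights, "
              f">= {min_gpus_for_weights(p)} GPUs just to hold them")
```

Under these assumptions, GPT-3’s weights need at least 5 such GPUs and the reported GPT-4 configuration at least 44, before any training state is counted.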

Generative AI models at this scale require massive amounts of resources: Tens of GPUs for inferencing, hundreds for fine-tuning, and thousands for training. With the continued growth in model size and complexity, these numbers will continue to grow exponentially and push the demand for efficiency even higher.

Data center operators must think strategically because of size and power constraints. Take, for example, a 100 MW data center. Assuming roughly one kilowatt per GPU, it can host about 100,000 GPUs, and operational decisions depend on the specific tasks those GPUs need to fulfill. Inference is the most common task, and these constraints would allow for up to 10,000 inference systems of 10 GPUs each, or 1000 systems of 100 GPUs each. Fine-tuning, on the other hand, requires 100 to 1000 GPUs per system, so the same 100,000-GPU footprint holds only 100 to 1000 fine-tuning systems. Generative AI’s compute requirements therefore point to the need for disaggregation, which allows for more flexible allocation of resources to different tasks. In other words: Systems can dynamically allocate GPUs as needed.
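The capacity arithmetic in the 100 MW example can be written out directly. This is a minimal sketch using only the article’s round numbers (100 MW facility, about 1 kW per GPU, 10 to 1000 GPUs per system); the function names are hypothetical.

```python
# Hypothetical capacity-planning sketch using the article's round numbers.
FACILITY_POWER_W = 100e6  # 100 MW data center
POWER_PER_GPU_W = 1_000   # ~1 kW per GPU, as assumed in the text


def gpu_budget(facility_w: float = FACILITY_POWER_W,
               per_gpu_w: float = POWER_PER_GPU_W) -> int:
    """How many GPUs the facility's power envelope can support."""
    return int(facility_w // per_gpu_w)


def systems_that_fit(total_gpus: int, gpus_per_system: int) -> int:
    """Number of whole systems of a given size that fit in the GPU budget."""
    return total_gpus // gpus_per_system


if __name__ == "__main__":
    total = gpu_budget()
    print(f"GPU budget: {total:,}")
    for task, size in [("inference", 10), ("inference", 100),
                       ("fine-tuning", 100), ("fine-tuning", 1000)]:
        print(f"{task:>11} @ {size:>4} GPUs/system -> "
              f"{systems_that_fit(total, size):,} systems")
```

A fixed split like this is exactly what disaggregation avoids: rather than committing the budget to one system size up front, GPUs are drawn from a shared pool as each task needs them.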

For any of this to work, the global communication patterns of generative AI systems demand low-latency, high-bandwidth interconnects between GPUs. These connections must enable fast, efficient data transfer across numerous racks.

While low latency itself is important, uniform latency is just as critical as organizations scale up the number of GPUs in their systems. Many distributed workloads advance in synchronized steps, so each step can finish only when the slowest link has delivered its data. Without uniform latency, scalability is therefore limited: some GPUs suffer significant communication bottlenecks, or even sit idle, while others perform efficiently. Ensuring uniform latency throughout the system is key to both scalability and efficiency, and it ultimately shapes what the software or programming model can achieve.
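A toy model makes the point about uniform latency concrete. It assumes a bulk-synchronous step in which every GPU computes and then waits for communication to finish, with no overlap between the two; all numbers are hypothetical and in arbitrary time units. Both latency profiles below have the same *average* latency, yet the one with a single slow link wastes far more compute.

```python
# Toy model of one bulk-synchronous step: every GPU computes, then waits for
# communication to finish, so the step ends only when the slowest link does.
# Compute/communication overlap is ignored. All numbers are hypothetical.


def step_time(compute: float, link_latencies: list[float]) -> float:
    """A synchronous step lasts as long as compute plus the slowest link."""
    return compute + max(link_latencies)


def utilization(compute: float, link_latencies: list[float]) -> float:
    """Fraction of the step that each GPU spends on useful compute."""
    return compute / step_time(compute, link_latencies)


if __name__ == "__main__":
    compute = 10.0
    uniform = [2.0] * 8          # every link equally fast
    skewed = [1.0] * 7 + [9.0]   # same mean latency, one slow link
    for name, lat in [("uniform", uniform), ("skewed", skewed)]:
        mean = sum(lat) / len(lat)
        print(f"{name:>7}: mean latency {mean:.1f}, "
              f"step {step_time(compute, lat):.1f}, "
              f"utilization {utilization(compute, lat):.0%}")
```

Both cases have a mean latency of 2.0, but utilization drops from about 83% to about 53% when one link is slow, which is why the worst-case link, not the average, sets the pace.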

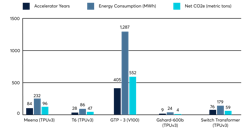

Beneath these performance challenges lies another problem: energy. Training GPT-3 (see Fig. 3), for example, consumed an estimated 1.287 GWh of energy and generated 552 metric tons of CO2-equivalent emissions, at a cost of approximately $193,000.5 So, how can we manage the energy requirements of these LLMs’ accelerated computing architectures?
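The article does not say how the $193,000 figure was derived. One plausible reading, offered here purely as an assumption, is an electricity price of about $0.15/kWh; the sketch below checks that this reproduces the quoted cost and also derives the average carbon intensity implied by the two quoted figures.

```python
TRAINING_ENERGY_GWH = 1.287  # GPT-3 training energy quoted in the text
EMISSIONS_T_CO2E = 552       # metric tons of CO2-equivalent, quoted in the text
ASSUMED_USD_PER_KWH = 0.15   # assumption: the text does not state a price

KWH_PER_GWH = 1_000_000


def electricity_cost_usd(energy_gwh: float, usd_per_kwh: float) -> float:
    """Electricity bill for a given energy use at a flat price."""
    return energy_gwh * KWH_PER_GWH * usd_per_kwh


def carbon_intensity_kg_per_kwh(emissions_t: float, energy_gwh: float) -> float:
    """Average emissions per kWh implied by the two quoted figures."""
    return emissions_t * 1_000 / (energy_gwh * KWH_PER_GWH)


if __name__ == "__main__":
    cost = electricity_cost_usd(TRAINING_ENERGY_GWH, ASSUMED_USD_PER_KWH)
    print(f"~${cost:,.0f} at ${ASSUMED_USD_PER_KWH}/kWh")
    intensity = carbon_intensity_kg_per_kwh(EMISSIONS_T_CO2E, TRAINING_ENERGY_GWH)
    print(f"~{intensity:.3f} kg CO2e per kWh")
```

At the assumed price the result lands within about $50 of the quoted figure; a different price would scale the cost linearly.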

NVIDIA founder and CEO Jensen Huang addressed this challenge during his COMPUTEX 2023 keynote.6 Huang pointed out that $10 million can buy 960 CPU servers that would need 11 GWh to train one LLM. The same $10 million, however, can buy 48 GPU servers that need just 3.2 GWh to train 44 LLMs. Put another way, $400,000 buys two GPU servers that need just 0.13 GWh to train a single LLM. In short, for the same training workload, the GPU approach is 25x more cost-effective ($10 million vs. $400,000) and roughly 85x more energy-efficient (11 GWh vs. 0.13 GWh).
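The 25x and 85x figures follow from normalizing both configurations to a single LLM trained. The sketch below uses only the numbers quoted from the keynote; the class and field names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingSetup:
    """One configuration from the keynote comparison (figures as quoted)."""
    name: str
    cost_usd: float
    energy_gwh: float
    llms_trained: int

    def cost_per_llm(self) -> float:
        return self.cost_usd / self.llms_trained

    def energy_per_llm(self) -> float:
        return self.energy_gwh / self.llms_trained


cpu = TrainingSetup("960 CPU servers", 10e6, 11.0, 1)
gpu = TrainingSetup("2 GPU servers", 400e3, 0.13, 1)

cost_advantage = cpu.cost_per_llm() / gpu.cost_per_llm()        # 25x
energy_advantage = cpu.energy_per_llm() / gpu.energy_per_llm()  # ~85x

if __name__ == "__main__":
    print(f"GPU setup: {cost_advantage:.0f}x cheaper, "
          f"{energy_advantage:.0f}x more energy-efficient per LLM trained")
```

The energy ratio is 11 / 0.13, about 84.6, which rounds to the quoted 85x.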

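Interestingly, Huang noted that the GPU server is no longer the computer; the data center is. Although he was talking about efficiency, this sentiment highlights the need for better interconnects: if the data center is the computer, the links between its GPUs become that computer’s internal wiring.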

Navigating bandwidth density and scalability hurdles for interconnects

Organizations today run generative AI tasks on systems built with traditional interconnects: electrical I/O combined with pluggable optics. However, this approach is getting long in the tooth, because it introduces latency and bandwidth bottlenecks that threaten the next generation of practical, efficient generative AI tasks.

Distance is the Achilles’ heel of electrical interconnects, which suffer signal degradation over longer distances and are confined to a single chassis at today’s 100G (and even upcoming 200G) link rates. As connectivity demands grow beyond the chassis to cross-rack and even multi-rack scale, pluggable optical connections are required. In short, to provide global communication from every GPU to every other device in the system, traditional interconnects combine in-rack electrical links with cross-rack pluggable optics.

But let’s talk about the elephant in the room: As a system scales, we need to string more and more of these higher-bandwidth connections—from any one GPU to another in the system via the system fabric. With traditional interconnects, this is much easier said than done because of the sizable energy and physical footprint of pluggable optics. They don’t scale up efficiently enough for the speed and needs of generative AI.

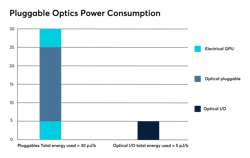

Power consumption is another stumbling block for pluggables: a pluggable-based GPU-to-GPU link consumes 30 picojoules per bit (pJ/b). An in-package optical I/O solution that connects directly to the compute package (more on this shortly), however, uses less than 5 pJ/b. Then there are the financial considerations. Pluggables currently cost around $1–2 per Gbit/s, and that cost would need to fall roughly 10x to make them cost-effective for generative AI (see Fig. 4).
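Energy per bit translates into power once multiplied by the data rate: (J/bit) × (bit/s) = W. The sketch below applies the article’s 30 pJ/b and 5 pJ/b figures and its $1–2 per Gbit/s price to a hypothetical 4 Tbit/s of off-package bandwidth per GPU (the per-chiplet figure the article quotes for optical I/O) across the 100,000 GPUs of the earlier 100 MW example. The per-GPU bandwidth is an assumption chosen for illustration, and 5 pJ/b is treated as an upper bound.

```python
# Rough link-power and link-cost arithmetic using figures quoted in the text.
PJ_TO_J = 1e-12
TBPS_TO_BPS = 1e12


def link_power_w(pj_per_bit: float, tbit_per_s: float) -> float:
    """Power (W) = energy per bit (J/bit) x data rate (bit/s)."""
    return pj_per_bit * PJ_TO_J * tbit_per_s * TBPS_TO_BPS


def link_cost_usd(usd_per_gbit_s: float, tbit_per_s: float) -> float:
    """Cost of link capacity priced per Gbit/s."""
    return usd_per_gbit_s * tbit_per_s * 1_000


# Hypothetical scenario: each GPU drives 4 Tbit/s off-package.
LINK_TBPS = 4.0
NUM_GPUS = 100_000  # the 100 MW example from earlier in the article

if __name__ == "__main__":
    for label, pj in [("pluggable (30 pJ/b)", 30.0), ("in-package (5 pJ/b)", 5.0)]:
        per_gpu = link_power_w(pj, LINK_TBPS)
        print(f"{label}: {per_gpu:.0f} W per GPU, "
              f"{per_gpu * NUM_GPUS / 1e6:.1f} MW across {NUM_GPUS:,} GPUs")
    for usd in (1.0, 2.0):
        print(f"${usd:.0f}/Gbit/s: ${link_cost_usd(usd, LINK_TBPS):,.0f} per GPU today, "
              f"${link_cost_usd(usd / 10, LINK_TBPS):,.0f} at 10x lower")
```

In this scenario, pluggables would draw about 120 W per GPU (12 MW facility-wide, over a tenth of the 100 MW budget) versus at most about 20 W (2 MW) for in-package optical I/O.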

Another drawback: pluggables are bulky modules. Their edge bandwidth density is just one-tenth that of in-package optical I/O, and their area density is 100x lower. This limits the bandwidth available from the GPU to the rest of the system, creating a bottleneck that could render future high-parameter generative AI tasks unworkable.

All told, physical space and power constraints—coupled with less-than-ideal scalability—make implementing pluggable optics an uphill battle, especially when it comes to meeting the needs of generative AI models.

Dealing with the data-intensive aspects of generative AI—large data sets and even more massive parameter sets—requires interconnects up to the task. Optical I/O brings to the table the much-needed bandwidth capacity between GPUs. Ayar Labs’ optical I/O solution, an in-package optical I/O chiplet paired with advanced light source technology, can help meet this demand by providing 4 Tbit/s of bidirectional bandwidth.

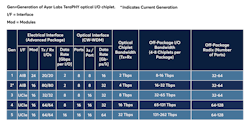

But the benefits of optical I/O go beyond a bandwidth upgrade. It can also improve package-level metrics and allow compute-socket bandwidth to scale. By leveraging architectures based on UCIe (Universal Chiplet Interconnect Express), the CW-WDM MSA (Continuous-Wave Wavelength Division Multiplexing Multi-Source Agreement), and microring-based optical I/O technology, it’s possible to unlock 100+ Tbit/s of off-package I/O bandwidth and support up to 128 ports per package (see table).
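Two rough figures help show why 100+ Tbit/s and 128 ports per package matter. First, *if* bandwidth were split evenly across ports (an assumption; the article does not say how it is allocated), each port would carry about 781 Gbit/s. Second, the Moore bound, a standard graph-theory upper bound, caps how many packages can be reached within a given number of hops in a direct-connect network of a given port count (radix). Real fabrics fall short of this bound, and the radices 8 and 32 below are hypothetical comparison points.

```python
def per_port_gbit_s(total_tbit_s: float, ports: int) -> float:
    """Bandwidth per port if the total is split evenly (an assumption)."""
    return total_tbit_s * 1_000 / ports


def moore_bound(degree: int, hops: int) -> int:
    """Upper bound on packages (including the source) reachable within
    `hops` hops when every package has `degree` direct links."""
    reachable = 1        # the source package itself
    new_at_hop = degree  # at most `degree` neighbors one hop away
    for _ in range(hops):
        reachable += new_at_hop
        new_at_hop *= degree - 1  # each new package adds at most degree-1 more
    return reachable


if __name__ == "__main__":
    print(f"{per_port_gbit_s(100, 128):.2f} Gbit/s per port")
    for radix in (8, 32, 128):
        print(f"radix {radix:>3}: <= {moore_bound(radix, 1):,} packages in 1 hop, "
              f"<= {moore_bound(radix, 2):,} in 2 hops")
```

With radix 128, up to 16,385 packages could in principle sit within two hops of each other, versus at most 65 at radix 8. Fewer hops means lower and more uniform latency across the fabric.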

This combination of high radix and high bandwidth per port, coupled with low optical I/O link latency (<10 ns plus time of flight, or ToF), provides unprecedented flexibility in designing all-optical fabric connectivity solutions. That flexibility is crucial for the large-scale distributed compute system fabrics needed to fuel the future of generative AI: it allows designers to build fabrics with low, uniform latency and high throughput that keep the compute nodes fully utilized.
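Reading the quoted link latency as under 10 ns of fixed latency plus time of flight, the ToF term quickly dominates with distance. The sketch assumes a typical silica-fiber group index of about 1.47 (roughly 4.9 ns per meter); the distances are hypothetical in-rack to cross-row lengths.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
FIBER_GROUP_INDEX = 1.47  # assumed typical value for silica fiber


def time_of_flight_ns(length_m: float, group_index: float = FIBER_GROUP_INDEX) -> float:
    """Propagation delay through fiber of the given length."""
    return length_m * group_index / SPEED_OF_LIGHT_M_PER_S * 1e9


def link_latency_ns(length_m: float, fixed_ns: float = 10.0) -> float:
    """Upper-bound estimate: fixed link latency (quoted as under 10 ns) plus ToF."""
    return fixed_ns + time_of_flight_ns(length_m)


if __name__ == "__main__":
    for length in (1, 5, 30, 100):  # hypothetical distances in meters
        print(f"{length:>4} m: ToF {time_of_flight_ns(length):6.1f} ns, "
              f"total <= {link_latency_ns(length):6.1f} ns")
```

Beyond a couple of meters, propagation delay, which is fixed by physics, outweighs the link’s own contribution, so a small fixed latency helps keep end-to-end latency predictable across a large fabric.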

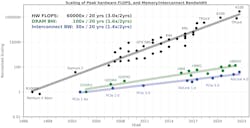

It’s worth noting the transformative impact that an optical I/O interconnect’s higher bandwidth can have on distributed systems. Over the past two decades, peak FLOPS have skyrocketed by 60,000x, while off-package interconnect bandwidth has increased by just 30x. To build truly scalable distributed systems, which are essential for unleashing the power of generative AI, we need to address this interconnect bandwidth bottleneck (see Fig. 5).
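The gap between 60,000x and 30x is easier to grasp as annual growth rates. This sketch takes "two decades" as exactly 20 years (an assumption) and computes the compound annual growth implied by each figure, plus how much off-package bandwidth per FLOP has shrunk.

```python
YEARS = 20             # "the past two decades", taken as exactly 20 years
FLOPS_GROWTH = 60_000  # peak FLOPS growth quoted in the text
IO_GROWTH = 30         # off-package interconnect bandwidth growth quoted in the text


def annual_growth_rate(total_growth: float, years: int) -> float:
    """Compound annual growth rate implied by a total growth factor."""
    return total_growth ** (1 / years) - 1


# How much the off-package bandwidth available per FLOP has shrunk.
bandwidth_per_flop_shrink = FLOPS_GROWTH / IO_GROWTH  # 2000x

if __name__ == "__main__":
    print(f"Peak FLOPS: ~{annual_growth_rate(FLOPS_GROWTH, YEARS):.0%} per year")
    print(f"I/O bandwidth: ~{annual_growth_rate(IO_GROWTH, YEARS):.0%} per year")
    print(f"I/O bandwidth per FLOP fell by {bandwidth_per_flop_shrink:,.0f}x")
```

Roughly 73% per year for compute versus roughly 19% per year for off-package bandwidth compounds into a 2000x drop in bandwidth per FLOP over the period.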

Generative AI has emerged as one of the most disruptive technological developments in recent years, and for good reason. In many ways, its usability and versatility rival the introduction of the internet. And for many organizations today, their future depends on integrating generative AI in bold and creative new ways to remain on the cutting edge.

Unfortunately, traditional interconnects fall short of the demands of generative AI architectures. In-package optical I/O, however, is a powerhouse technology that meets the urgent need for high bandwidth, low latency, high throughput, and energy efficiency. Most importantly, optical I/O allows generative AI to scale, opening the door to new possibilities for revolutionizing industries on an unprecedented scale.

REFERENCES

1. See www.laserfocusworld.com/14278346.

2. See http://tinyurl.com/yc3vt76h.

3. See http://tinyurl.com/2w6wbxez.

4. See http://tinyurl.com/4a8wjs2z.

5. D. Patterson et al., arXiv:2104.10350v3 [cs.LG] (Apr. 23, 2021); https://doi.org/10.48550/arXiv.2104.10350.

6. See https://youtu.be/i-wpzS9ZsCs.

Vladimir Stojanovic

Vladimir Stojanovic is chief technology officer (CTO) of Ayar Labs (San Jose, CA). Prior to founding Ayar Labs, he led the team that designed the world’s first processor to communicate using light. Vladimir is also a Professor of EECS at UC Berkeley, and was one of the key developers of the Rambus high-speed link technology. He holds a PhD from Stanford University.