Neurophotonics: Photonics demonstrate brainlike behavior useful in machine learning

Photonics may be set to push the emerging field of neuromorphic engineering—the building of systems with brain-like properties and architectures—to new levels of computational and power efficiency over the next decade or two. Recent papers from researchers at the University of Strathclyde (Glasgow, Scotland) and Princeton University (Princeton, NJ) not only show the theoretical advantages of using light instead of electricity to communicate neural activity—namely a speed-up of up to nine orders of magnitude—but include the clearest experimental evidence yet that such systems could be used in practice.

An enabling technology for power-efficient deep learning and machine intelligence, neuromorphic systems (in theory) have two properties: analog operation and massive parallelism. IBM's TrueNorth chip, for example, processes information in the analog domain, making it massively more power-efficient than comparable digital processors.

However, it is difficult to directly connect one neural circuit to tens of others, never mind the hundreds or thousands that would replicate the architecture of a biological brain. This is because of packaging (routing the hundreds of wires), power loss (the smaller the wire, the more heat produced), and crosstalk (because signals from nearby wires will mix). On top of that, electrical communication is slow. Though there are ways of getting around some of these issues electronically (such as time-multiplexing of signals), this connectivity bottleneck represents an opportunity for photonics.

In an important review paper on the subject, Thomas Ferreira de Lima and his colleagues at Princeton show how some of the photonics developed for the telecommunications industry, particularly wavelength-division-multiplexing (WDM) devices, may be ideal for distributing multiple distinguishable signals around an artificial brain at speed [1]. The paper provides a detailed analysis of the different ways that neurons can be implemented, including both all-optical and optical/electronic/optical configurations, and explains the kind of functionality required.

First, the synapses should work with pulses (or spikes) that carry information in their timing. Second, the incoming optical signals need to be weighted and summed (the learning is stored in these weights), and the resulting signal must be nonlinearly thresholded to "decide" whether the neuron should fire (the neural response).
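
The weight, sum, and threshold steps can be illustrated with a minimal sketch. It assumes discrete time steps, binary spikes, and a hard threshold; the function names and values are hypothetical and chosen only for illustration, not taken from either paper.

```python
def weighted_sum(inputs, weights):
    """Sum the inputs after scaling each one by its weight."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(w * x for w, x in zip(weights, inputs))


def fires(inputs, weights, threshold):
    """Hard threshold: the neuron fires if the weighted sum reaches the threshold."""
    return weighted_sum(inputs, weights) >= threshold


def output_spike_times(spike_trains, weights, threshold):
    """Return the time steps at which the neuron fires.

    spike_trains holds one list per input, with 1 for a spike and 0 for
    no spike at each time step (an assumed, simplified encoding).
    """
    n_steps = len(spike_trains[0])
    times = []
    for t in range(n_steps):
        inputs_at_t = [train[t] for train in spike_trains]
        if fires(inputs_at_t, weights, threshold):
            times.append(t)
    return times


# Illustrative values only: three inputs, the third with a negative weight.
example_trains = [
    [1, 0, 1, 0],
    [0, 0, 1, 1],
    [1, 0, 0, 1],
]
example_weights = [0.5, 0.4, -0.3]
example_times = output_spike_times(example_trains, example_weights, threshold=0.6)
```

In this toy version the information is carried by which time steps produce an output spike, and a negative weight pulls the sum away from the threshold.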

The Princeton solution to the problem uses microring resonators to read the different components of a WDM signal, turning them into electrical outputs that are weighted and then summed. Thresholding happens electronically, too, with the neural output controlling a Mach-Zehnder interferometer. This, in turn, modulates a laser beam at a signature wavelength that can then be multiplexed with the output of other neurons and fed into a new synapse. The team has recently built these neurons into a demonstrator with 24 nodes that shows a speedup of almost 300 times over conventional networks [2].
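
A rough numerical sketch of this signal chain follows, assuming idealized components. The wavelengths, weights, threshold level, and function names are illustrative assumptions rather than values from the Princeton work, and the modulator is modeled with the textbook sin² intensity transfer function of an ideal Mach-Zehnder interferometer.

```python
import math


def weighted_channel_sum(channel_powers, weights):
    """Weight each wavelength channel and sum the results.

    channel_powers and weights are dicts keyed by wavelength in nm
    (hypothetical values), standing in for one microring per channel.
    """
    return sum(weights[wl] * power for wl, power in channel_powers.items())


def electronic_threshold(signal, level):
    """Hard electronic threshold: 1.0 if the summed signal reaches the level."""
    return 1.0 if signal >= level else 0.0


def mzi_transmission(drive, v_pi=1.0):
    """Ideal Mach-Zehnder intensity transmission, sin^2(pi * V / (2 * V_pi))."""
    return math.sin(math.pi * drive / (2.0 * v_pi)) ** 2


def neuron_output(channel_powers, weights, level, laser_power=1.0):
    """Optical output power at the neuron's own signature wavelength."""
    summed = weighted_channel_sum(channel_powers, weights)
    drive = electronic_threshold(summed, level)
    return laser_power * mzi_transmission(drive)


# Illustrative input: three WDM channels, one with a negative weight.
channels = {1550.0: 0.8, 1550.8: 0.5, 1551.6: 0.2}
channel_weights = {1550.0: 0.5, 1550.8: 1.0, 1551.6: -1.0}
out_on = neuron_output(channels, channel_weights, level=0.6)
out_off = neuron_output(channels, channel_weights, level=0.8)
```

Because each neuron writes its output onto its own wavelength, the outputs of many such neurons could in principle share one waveguide and be separated again at the next stage, which is the multiplexing step the text describes.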

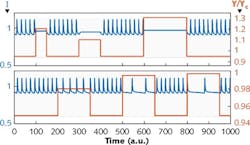

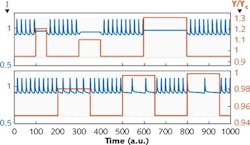

The Strathclyde team, led by Antonio Hurtado, has taken an all-optical tack and, for the first time, demonstrated inhibition in a photonic neuron. The team uses a polarized beam from a tunable laser to supply an incoming signal, injecting it into a vertical-cavity surface-emitting laser (VCSEL). Here, the neural behavior is produced within the laser itself. Essentially, the injected signal modulates the laser output based on complex dynamics. This can lead to a steady set of normal output pulses (at low amplitude) or a period of weak continuous output (inhibited output, which occurs with high amplitude). More interestingly, if operated in the right regime, the incoming signal can be used to modulate the frequency of the output (see figure).

Hurtado says this is the demonstration of a "versatile photonic neuronal model enabling the use of a single VCSEL for the emulation of excitatory and inhibitory neuronal dynamics at subnanosecond speeds... and also has provided proof of the controllable interconnectivity between different VCSEL-neuronal models."

However, there is much work to be done. "Researchers in the field still need to create a proof-of-concept demonstration of a full network of dozens or hundreds of spiking lasers," Ferreira de Lima says. "Second, we need to make sure that this device connects well with existing computers and/or network cards. Third, we must show how to basically configure the neural network to do what we want." Finally, he points to an area that he says is often overlooked. "There needs to be a large collaboration between hardware and software engineers to make this technology accessible to developers," he notes.

REFERENCES

1. T. Ferreira de Lima et al., Nanophotonics, 6, 3, 557–599 (2017).

2. J. Robertson et al., Opt. Lett., 42, 8 (Apr. 15, 2017).

About the Author

Sunny Bains

Contributing Editor

Sunny Bains is a contributing editor for Laser Focus World and a technical journalist based in London, England.