AR/VR Displays: Engineering the ultimate augmented reality display: Paths towards a digital window into the world

JANNICK P. ROLLAND

The ultimate augmented reality (AR) display can be conceived as a transparent interface between the user and the environment—a personal and mobile window that fully integrates real and virtual information such that the virtual world is spatially superimposed on the real world. An AR display tailors light by optical means to present a user with visual (symbolic or pictorial) information superimposed on spaces, buildings, objects, and people.

These displays are powerful and promising because the augmentation of the real world by visual information can take on so many forms. For example, augmentation can add identifying and highlighting features on moving targets in the environment, zoom in on critical information to aid decision-making, or provide hidden information not visible to the human eye, but instead available from text, data, or model databases.

Data may be acquired via a wide range of technology, mimicking super-human characteristics, such as polarization sensing or infrared and terahertz imaging. By allowing us to paint anything we know on the spaces, objects, and people in the world, these displays extend our capability to see beyond our natural senses and bring any information to bear on a user’s context and actions.

Unfortunately, optical and optoelectronic engineers differ in their approaches to developing the ultimate AR display that is physically comfortable, prevents eye fatigue, presents the desired augmented information without obscuring reality, and does it all at a price point bearable to the average consumer or to the industrial user as dictated by the application. Fortunately, designers are meeting these challenges in step with new optics and photonics advances that are enabling AR displays to mature and thrive.

Applications

Consider several emerging applications of AR. Boeing (among others) has been building a better future for their technicians—setting up a roadmap since the 1990s—by providing them smart AR glasses while installing electrical wiring in aircraft. Superimposing text of the internal schematics of the plane on their AR displays during repair and maintenance boosts both worker productivity and the likelihood of error-free work.1, 2

While the right to privacy of information is making news headlines, consider superimposition of personal information made voluntarily public in the cloud. As you enter a space, your display interface may communicate high-level visual information about the people around you. This information could potentially facilitate stimulating personal discussions and interactions.

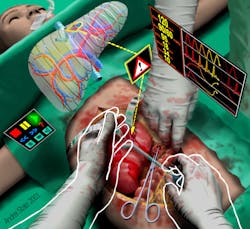

Another compelling example of augmentation is in the medical domain. Practitioners can see the superimposition of data or models from databases revealing the internal anatomy of patients acquired via medical devices (see Fig. 1). Using tightly superimposed spatial information about the human tissues of interest, surgeons of the future have the potential to reduce the length of surgeries and critically improve outcomes. By guiding the scalpel with data, the augmentation may lower the risk of malpractice associated with cutting unwanted structures. Consider brain surgery, where a small mistake may permanently damage the facial nerves—a tragic spatial error that AR displays could one day eradicate.3

The road to the ultimate display

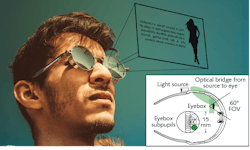

It was back in the 1960s that Ivan Sutherland conceived and prototyped what he called the ultimate display: the first AR stereoscopic head-worn display (HWD), which was tethered and mechanically tracked (see Fig. 2). The next generation of developments focused on military helmet-mounted displays (HMDs), whose increasingly higher levels of sophistication continue to the present. In parallel, new applications called for the birth of fully mobile HWDs that were first targeted in the 1990s for maintenance and medical augmentation and continue to develop and advance to this date.

Now, AR is coming to the individual user, spurred by the billion-dollar market potential of applications supporting a wide range of consumers. The race is on to create a robust, untethered, and aesthetic AR display that acts as a window into the world. The ultimate goal is to make that mobile window larger by achieving a wide field of view (FOV) of 60° or larger, with full color, perfectly embedded in a trendy eyeglass or sunglass format.

Challenges for optical designers

Optical designers of wearable displays understand the daunting challenge of meeting the demanding specifications of a large FOV together with a large eyebox, while at the same time satisfying the constraints for robustness, packaging, low cost, and the high-yield manufacturing processes that are essential for the viability of a consumer product. In addition, the foregoing features must be power-efficient to maximize the use time from the AR display battery.

The optical concept of the Lagrange invariant (or etendue, in the case of the generalization to three dimensions) establishes that the mathematical product of the FOV angle and eyebox size will dictate the complexity of the optical system. Furthermore, the size of the first optical element with respect to the eyes is set by the distance between that element and the eye multiplied by the FOV angle.

The eyeglass or sunglass format sets a range of acceptable sizes for that component. Then, a range of FOVs and distances can help determine a range of solutions. If the FOV is non-negotiable in terms of achieving ≥60°, then achieving the eyebox requirements will be the challenge. Optical designers should carefully consider their knowledge base and assumptions before setting off on a particular design path.
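The sizing trade-off described above can be sketched numerically. The short Python example below is an illustration under stated assumptions, not a design tool: it uses simple flat-geometry ray tracing, in which the width of the first element is the eyebox width plus twice the eye-to-element distance times the tangent of the half-FOV. The article's "distance multiplied by the FOV angle" is the small-angle form of the same relationship with the eyebox ignored. The function names and the numerical values (18 mm eye relief, 10 mm eyebox, 40 mm lens width) are hypothetical choices for illustration only.

```python
import math


def first_element_width(eye_relief_mm, fov_deg, eyebox_mm=0.0):
    """Width of the first optical element needed to pass the full FOV
    from every point of the eyebox (flat-geometry assumption)."""
    half_fov = math.radians(fov_deg) / 2.0
    return eyebox_mm + 2.0 * eye_relief_mm * math.tan(half_fov)


def small_angle_width(eye_relief_mm, fov_deg):
    """Small-angle estimate: distance multiplied by the FOV angle in radians
    (eyebox ignored)."""
    return eye_relief_mm * math.radians(fov_deg)


def max_eye_relief(element_width_mm, fov_deg, eyebox_mm=0.0):
    """Largest eye-to-element distance that still fits a given element width."""
    half_fov = math.radians(fov_deg) / 2.0
    return (element_width_mm - eyebox_mm) / (2.0 * math.tan(half_fov))


if __name__ == "__main__":
    eye_relief = 18.0  # mm, hypothetical
    eyebox = 10.0      # mm, hypothetical
    for fov in (30, 45, 60):
        exact = first_element_width(eye_relief, fov, eyebox)
        approx = small_angle_width(eye_relief, fov)
        print(f"FOV {fov:2d} deg: element width {exact:5.1f} mm "
              f"(small-angle, no eyebox: {approx:5.1f} mm)")

    # Inverse question: with a 40 mm wide lens, how far can it sit from the eye?
    print(f"Max eye relief at 60 deg: {max_eye_relief(40.0, 60, eyebox):.1f} mm")
```

Under these assumptions, holding the element width to an eyeglass-like size while demanding a 60° FOV leaves little room for either eye relief or eyebox, which is the tension the paragraph above describes.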

Approaches to expanding the eyebox include eyebox multiplication or replication techniques. The designer might also leverage the integration of eye-tracking methods to dynamically adjust the location of the display’s eyebox in real time to the current position of the user’s iris as the eyes gaze around the merged virtual and physical world. The latter approach must be accurate and robust to accommodate a wide diversity of users. These approaches to creating an effective larger eyebox can add significant complexity to the engineering challenge of seamlessly tailoring light in an AR display.

The intersection of AR displays and human factors

The ultimate AR display is part of the user’s body. The interface is a window to interacting not only with objects and things, but also with other people. While knowledge of human factors is central to creating a visual experience that is as natural and intuitive as possible, a wearable display will only gain social acceptance if it encompasses both aesthetics and usability to support social interaction.4 In 2016, R. E. Patterson and I discussed the intention behind the use of information displays as it relates to cognitive engineering, which involves the meaning of display content.5

A ubiquitous AR system design for cognitive augmentation raises cognitive and socio-technical considerations. In our paper, we describe one identified shortfall on the path to developing these AR displays: the lack of collaboration between engineers and cognitive scientists. This trend has started to change, and collaboration will no doubt increase in the future. Cognitive engineering will help guide the required interaction between the optical and human-factors specifications for any wearable display product.

Questions that guide display development

Given an optical specification in which certain parameters and performance metrics are fixed targets, other specifications may be treated as goals. For example, what type of light source will be adopted? Will an extended microdisplay (with or without illumination optics) be used with custom optics to image the full-color image onto the retina in one shot, or will a point-like light source coupled to a scanning device, such as a microelectromechanical systems (MEMS)-based scanner or a scanning fiber tip, be used to draw the image sequentially, point by point, at high speed on the retina in multiple colors? For the auxiliary optics, will thin waveguides be stacked to confine light in a thin sheet in front of the eyes, and if so, are there approaches to curved waveguides that improve the aesthetic form factor? How will the light from each color be coupled into the waveguide and output to the eyes of the user, and will the system suffer from crosstalk or stray light that may add unacceptable fog to an otherwise-sharp image across the full FOV?6 Can the waveguide be manufactured at high yield, which has been a common problem with the approaches adopted to date? How authentic will the view of the real world be through the waveguide? Or will the approach leverage the sunglass interface with a conventional or holographic-like combiner as an optical element that combines the real and digital world?

Ultimately, the answers to these questions sketch a view of the holy grail of the ultimate AR display. A challenge is to establish a seamless geometry of all optical components involved to yield the desirable image quality within the targeted 60° FOV in an eyeglass or sunglass format that a large pool of users will absolutely want to wear.

Enter freeform optics

This is where the emerging technology of freeform optics is expected to revolutionize the AR solution space. The phrase ‘freeform surface’ has been used in illumination optics for some time, where complex, continuous, or segmented surfaces have been designed to tailor light. In the 1970s, Polaroid Corporation was the first to adopt a freeform prism in the viewfinder of its SX-70 Single Lens Reflex camera. The freeform prism was then further developed in the 1990s for virtual reality (VR) headsets, but unfortunately these prisms were unable to create wide FOVs for immersion.

In AR for the consumer market, however, one must truly assess whether looking at the real world through a thick piece of plastic (a low-cost and lightweight molded prism) will ever be conducive to consumer adoption. It is also worth noting that the most common thinner geometry is in fact not compliant with optical aberration correction over moderate to large FOVs. This issue was recently discovered when applying advanced lens design methods for freeform optics.7 At a time when users seek high-definition image quality with a large FOV in an aesthetic display format, a different pathway is sought.

Freeform optics expands well beyond the freeform prism since freeform surfaces may be combined in multiple geometries, providing the ability to fold optical systems in three dimensions while simultaneously providing for a finite range of viable folding geometries together with the ability to correct for both blurring and warping optical aberrations.7 On a different scale when engineering complex surfaces, sub-wavelength periodic nanostructures provide another pathway to creating optical components that have been shown to provide unique means to shape the light field.8

While warping aberrations may be mitigated in software, iris location behind the optics may cause space distortions if not accounted for. Naturally, eye-movement recording within an AR display tracks the convergence of the eyes to provide in-focus data at the gaze point together with appropriately blurred contextual information that more naturally combines with real-world vision.9-10 Beyond this function, eye tracking can also extract more information about the user, such as understanding what a user looks at while providing a means to measure other user states such as awareness and fatigue.

The ultimate AR display seeks to be seamless, always accompanying the user, merging virtual and physical spaces, and becoming as common, necessary, and ubiquitous as smartphones. As we learn more about human vision and cognitive processes associated with a wide range of applications, it is certain that optical technologies will continue to emerge and combine in unique ways to seamlessly guide the light while widening the window of the ultimate AR display of the future.

REFERENCES

1. A. Tang et al., “Comparative effectiveness of augmented reality in object assembly,” Proc. CHI, 73–80, ACM Press, New York, NY, (2003).

2. C. M. Elvezio et al., “Remote collaboration in AR and VR using virtual replicas,” Proc. SIGGRAPH ’17, 13, New York, NY (2017).

3. T. Sielhorst et al., J. Display Technol., 4, 4, 451–467 (Dec. 2008).

4. R. E. Patterson, “Human interface factors associated with HWDs,” Handbook of Visual Display Technology, Springer, 4, 10, 2171–2181 (2012); second ed. 2016.

5. R. E. Patterson and J. P. Rolland, “Cognitive engineering and information displays,” Handbook of Visual Display Technology, Springer, 4, 10, 2259–2274 (2012); second ed. 2016.

6. D. Cheng et al., Opt. Express, 22, 17, 20705–20719 (2014).

7. A. Bauer, E. M. Schiesser, and J. P. Rolland, Nat. Commun., 9, 1756 (2018).

8. N. Yu et al., Science, 334, 6054, 333–337 (2011).

9. H. Hua, “Enabling focus cues in head-mounted displays,” Proc. IEEE, 105, 5, 805–824 (2017).

10. G. Koulieris et al., “Accommodation and comfort in head-mounted displays,” Proc. SIGGRAPH ’17, 36, 11–21 (2017).

Jannick P. Rolland is the Brian J. Thompson Professor of Optical Engineering in the Institute of Optics at the University of Rochester, Rochester, NY; www.optics.rochester.edu.