Using separate detectors for each color, three-chip cameras offer better images and lower system-development and maintenance costs than alternative systems.

Douglas R. Dykaar, Graham Luckhurst, and Neil J. Humphrey

At first glance, three-chip common-optical-axis "beamsplitter" color cameras may seem overly complex, but they provide image acquisition that is simultaneous in time and space, perfectly registered, and covers 100% of each of three color bands. Alternative schemes, such as mosaic filters or single-chip trilinear sensors, cannot match this feat, nor can they match a good beamsplitter's image fidelity or ease of system integration. Three-chip cameras therefore offer several advantages over the alternatives: better images, reduced system-development and maintenance costs, and greater ease of use.

Color cameras

Color imaging can be accomplished in several ways, but in all cases the goal is to focus polychromatic light onto pixels to obtain a wavelength-dependent photoresponse. Conventional silicon-based photodetectors cannot distinguish different colors within a single pixel without color filters, so color imagers must offset or interleave pixels to capture separate color bands (see Fig. 1). Each of these separation approaches creates the opportunity for misalignment and misregistration, which must then be corrected when the color channels are recombined. In addition, patterned color filters, common in traditional area arrays, cause a loss of resolution that is impractical for linear arrays. And in some applications, spatial or temporal separation of the image acquisition cannot be tolerated.

FIGURE 1. Color images captured with interdigitated or mosaic filters (left) are "non-isotropic": at any one point, they capture information for only one color band. Trilinear sensors (middle) require ideal object motion; if the object shifts horizontally or vertically during imaging, the images won't line up because the imagers are spatially separated. Only the common-optical-axis approach (right) offers 100% coverage for each color channel from a single object point, resulting in perfect registration.

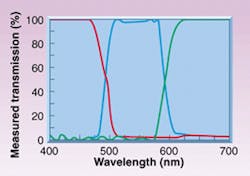

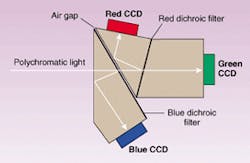

A common optical axis or "beamsplitter" camera offers a way around these problems. Its prism assembly uses wavelength-selective filters and total internal reflection (TIR) to separate the red, green, and blue components of polychromatic light and direct them to separate charge-coupled-device (CCD) detectors (see Fig. 2).
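
TIR occurs only when light inside the glass meets a glass-air boundary at more than the critical angle, θc = arcsin(n_air/n_glass). The minimal sketch below computes that angle; the index of 1.517 is an assumed value typical of a BK7-type crown glass, not a published figure for the Trillium prism.

```python
import math


def critical_angle_deg(n_glass: float, n_outside: float = 1.0) -> float:
    """Return the critical angle (degrees from the surface normal) for light
    travelling inside glass toward a lower-index medium such as air."""
    if n_outside >= n_glass:
        # TIR is only possible when going from a higher to a lower index.
        raise ValueError("TIR requires n_glass > n_outside")
    return math.degrees(math.asin(n_outside / n_glass))


# Assumed index for a BK7-type crown glass (illustrative only).
theta_c = critical_angle_deg(1.517)
print(f"critical angle: {theta_c:.1f} deg")
```

Rays that strike the glass-air face at angles larger than this are reflected completely, which is why the prism needs a glass-air interface wherever it relies on TIR.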

The impact of placing a long path length of glass between a lens and sensor can be significant, and most systems have not been designed to account for this altered path. To use a single lens, the path length must be the same for all three colors, and the system should be optimized to compensate for the chromatic aberrations introduced by its various optical elements.
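
To see why the extra glass matters, one can treat the unfolded prism paraxially as a plane-parallel block of thickness t and index n, which moves the focus of a converging beam back by roughly t(1 − 1/n). Because n depends on wavelength, the shift differs between colors. The sketch assumes a hypothetical 40-mm glass path and approximate N-BK7 catalog indices (about 1.5224 at 486 nm and 1.5143 at 656 nm); real prism paths and glasses will differ.

```python
def plate_focal_shift_mm(thickness_mm: float, n: float) -> float:
    """Paraxial longitudinal focus shift introduced by a plane-parallel plate."""
    return thickness_mm * (1.0 - 1.0 / n)


# Assumed glass path and approximate N-BK7 indices (illustrative values only).
THICKNESS_MM = 40.0
N_BLUE = 1.5224  # ~486 nm
N_RED = 1.5143   # ~656 nm

shift_blue = plate_focal_shift_mm(THICKNESS_MM, N_BLUE)
shift_red = plate_focal_shift_mm(THICKNESS_MM, N_RED)
print(f"blue focus moves {shift_blue:.3f} mm, red focus moves {shift_red:.3f} mm")
print(f"axial color added by the glass: {shift_blue - shift_red:.3f} mm")
```

With these assumed numbers the blue and red foci separate by roughly a tenth of a millimetre, an error a lens designed for air knows nothing about.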

Chromatic aberration

When a ray of light crosses a boundary between media of differing refractive indices (n1, n2), it is refracted at the boundary and bends toward the surface normal on the higher-index side, as described by Snell's law:

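$$n_1 \sin\theta_1 = n_2 \sin\theta_2 \qquad (1)$$

where θ1 and θ2 are the angles the ray makes with the surface normal in the first and second media.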

This bending occurs because light travels more slowly in the higher-index material. In addition, the index n2 in Eq. 1 is larger for blue light than for red light (that is, red light travels faster than blue light in glass), so an incoming blue ray is bent closer to the surface normal than a red one.
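
As a small numerical illustration of Eq. 1 and of dispersion, the sketch below refracts a ray entering glass from air at 30° and compares the refraction angle for blue and red light. The indices are approximate N-BK7 catalog values, used here as assumptions.

```python
import math


def refraction_angle_deg(theta_in_deg: float, n1: float, n2: float) -> float:
    """Solve Snell's law n1*sin(theta1) = n2*sin(theta2) for theta2 (degrees)."""
    s = n1 * math.sin(math.radians(theta_in_deg)) / n2
    if abs(s) > 1.0:
        raise ValueError("no refracted ray: total internal reflection")
    return math.degrees(math.asin(s))


N_AIR = 1.0
N_BLUE = 1.5224  # ~486 nm, approximate N-BK7 value (assumed)
N_RED = 1.5143   # ~656 nm, approximate N-BK7 value (assumed)

blue = refraction_angle_deg(30.0, N_AIR, N_BLUE)
red = refraction_angle_deg(30.0, N_AIR, N_RED)
print(f"blue: {blue:.2f} deg, red: {red:.2f} deg from the normal")
```

The blue ray ends up slightly closer to the normal than the red ray. The difference per surface is small, but it accumulates through a lens and a thick prism.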

The situation is further complicated by a curved lens surface: refraction at the two surfaces of the lens causes the rays to focus. However, the wavelength dependence of the index of refraction results in a wavelength-dependent focal length (axial chromatic aberration; see Fig. 3). A lens in which the shorter wavelengths have the shorter focal length is called undercorrected.
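
The thin-lens lensmaker's equation, 1/f = (n − 1)(1/R1 − 1/R2), makes axial chromatic aberration concrete. The sketch below uses a hypothetical symmetric biconvex singlet (R1 = +100 mm, R2 = −100 mm) and approximate N-BK7 indices as assumptions; the blue focal length comes out shorter, which is the undercorrected case.

```python
def thin_lens_focal_length_mm(n: float, r1_mm: float, r2_mm: float) -> float:
    """Lensmaker's equation for a thin lens in air (R > 0 when the centre of
    curvature lies to the right of the surface)."""
    power = (n - 1.0) * (1.0 / r1_mm - 1.0 / r2_mm)
    return 1.0 / power


R1, R2 = 100.0, -100.0  # hypothetical biconvex singlet
f_blue = thin_lens_focal_length_mm(1.5224, R1, R2)  # ~486 nm (assumed index)
f_red = thin_lens_focal_length_mm(1.5143, R1, R2)   # ~656 nm (assumed index)
print(f"f(blue) = {f_blue:.2f} mm, f(red) = {f_red:.2f} mm")
print("undercorrected" if f_blue < f_red else "not undercorrected")
```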

With such a lens, different wavelengths also form images of different sizes (lateral chromatic aberration), and the farther an image point is from the optical axis, the larger this color error becomes.
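
A paraxial thin-lens calculation shows why the color error grows away from the axis. Taking assumed focal lengths of 95.7 mm (blue) and 97.2 mm (red) for an undercorrected lens and an object 1 m away, each color's image has magnification m = −f/(s_o − f), so the blue and red image heights differ by an amount proportional to the object height. This simplified model evaluates each color at its own image plane and ignores stop position and higher-order terms.

```python
def image_height_mm(f_mm: float, object_dist_mm: float, object_height_mm: float) -> float:
    """Paraxial image height for a thin lens: h_i = m * h_o with m = -f / (s_o - f)."""
    magnification = -f_mm / (object_dist_mm - f_mm)
    return magnification * object_height_mm


F_BLUE, F_RED = 95.7, 97.2  # assumed focal lengths of an undercorrected lens
S_O = 1000.0                # assumed object distance in mm

for h_o in (0.0, 10.0, 25.0, 50.0):
    dh = abs(image_height_mm(F_BLUE, S_O, h_o) - image_height_mm(F_RED, S_O, h_o))
    print(f"object height {h_o:5.1f} mm -> blue/red size difference {dh * 1000:.1f} um")
```

An on-axis point shows no size difference, and the blue/red mismatch grows linearly toward the edge of the field.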

A lens designed to focus through air, a medium whose index is essentially constant with wavelength, is likely to have problems focusing through a large block of glass with a wavelength-dependent index of refraction. Lens design is always a matter of minimizing the combined impact of all aberrations, chromatic and otherwise. Producing a lens for color imaging requires careful choice of materials and greater emphasis on minimizing chromatic aberration. Matched lenses, such as those available with Dalsa Trillium cameras, are designed specifically for a given prism and provide superior imaging performance.

Measuring color-imaging performance

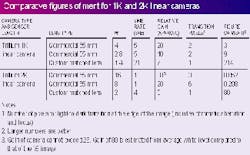

The goal of a three-chip camera is high-speed, high-quality color imaging. Using a Trillium camera with both a commercial 55-mm lens and a custom matched lens, we evaluated how lens choice affects performance when imaging a black-and-white target under a variety of test conditions (see table). The black-and-white target represents the worst case: white is made up of signals from all three sensors, while black is made up of null signals from all three. Errors in either sensor alignment or optical-system performance smear the image, which is particularly noticeable at the black-to-white transition. To compare performance, we defined a figure of merit (FoM) as

null

where P = pixel count in thousands of pixels, L = line rate in kHz, G = electronic gain, f/# = f-number, and T = width of the black-to-white transition, in pixels.
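
The article does not spell out how T was measured. One common approach, assumed here, is the 10%-to-90% rise distance across the edge in a single line profile, with linear interpolation between pixels. The function below is a hypothetical helper illustrating that measurement; it expects a monotonically rising black-to-white profile.

```python
from typing import Sequence


def transition_width(profile: Sequence[float], lo_frac: float = 0.1,
                     hi_frac: float = 0.9) -> float:
    """Width in pixels of a rising black-to-white edge, measured between the
    lo_frac and hi_frac levels of the black-to-white span (linear interpolation)."""
    black, white = min(profile), max(profile)
    span = white - black
    if span <= 0:
        raise ValueError("profile contains no edge")

    def crossing(level: float) -> float:
        # Fractional pixel position where the profile first rises through `level`.
        for i in range(1, len(profile)):
            a, b = profile[i - 1], profile[i]
            if a < level <= b:
                return (i - 1) + (level - a) / (b - a)
        raise ValueError("profile never crosses the requested level")

    return crossing(black + hi_frac * span) - crossing(black + lo_frac * span)


sharp = [0, 0, 0, 255, 255]
smeared = [0, 0, 64, 128, 192, 255, 255]
print(transition_width(sharp), transition_width(smeared))
```

With this definition a perfectly sharp single-pixel step measures 0.8 pixels, and a smeared edge measures proportionally wider.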

FIGURE 2. Prism-based color beamsplitters separate the red, green, and blue components of polychromatic light. Although the beamsplitter is a prism, the colors are separated not by refraction but by dichroic coatings, which offer high in-band and low out-of-band transmission with sharp transitions (see inset). The green channel is obtained by subtraction, so the blue/green and green/red transitions are ideal. At steep angles of incidence (>20°), dichroic transmission becomes sensitive to polarization, so the prism is designed to use total internal reflection (TIR) to direct the light out of the glass assembly and onto the CCD sensors. For the blue light, the glass-air interface at the front of the prism produces the TIR bounce. The prism requires an internal air gap at the second (red) dichroic to produce TIR.

Even with the noncustom lens, the results are better than one might expect because the Trillium camera uses a low-chromatic-dispersion prism assembly. The effects of chromatic aberration can be reduced by restricting the aperture (higher f-number), but this reduces the available light, and the resulting decrease in camera speed and increase in gain would be reflected in Eq. 2. The sensitivity of Eq. 2 to sensor length is also apparent: lens limitations such as vignetting or intensity roll-off are more pronounced for pixels farther from the optical axis. Compared with the commercial lens, the custom lens produced less distortion with a 2048-pixel linear sensor, even at a lower f-number (f/1.4 vs. f/2.8), and the matched beamsplitter has a larger aperture to work with low-f-number lenses. The benefit of a custom lens matched to the beamsplitter is clear: even at four times the line rate and with lower illumination (hence lower system cost), a matched lens gives better image quality.
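
The tradeoff between f-number and line rate can be quantified to first order. Image-plane irradiance scales roughly as 1/N² for f-number N, and the exposure per line scales as irradiance divided by line rate. The sketch below assumes equal lens transmission, ignores vignetting, and assumes the integration time equals the line period; under those assumptions, going from f/2.8 to f/1.4 collects four times the light, enough to run four times the line rate at the same exposure.

```python
def relative_irradiance(f_number: float, reference_f_number: float) -> float:
    """Image-plane irradiance at f_number relative to reference_f_number (~1/N^2)."""
    return (reference_f_number / f_number) ** 2


def relative_exposure(f_number: float, line_rate: float,
                      reference_f_number: float, reference_line_rate: float) -> float:
    """Exposure per line relative to a reference setup, assuming the
    integration time equals the line period (1 / line rate)."""
    irradiance = relative_irradiance(f_number, reference_f_number)
    return irradiance * (reference_line_rate / line_rate)


light_gain = relative_irradiance(1.4, 2.8)
exposure = relative_exposure(1.4, 4.0, 2.8, 1.0)  # 4x line rate, relative units
print(f"f/1.4 vs f/2.8: {light_gain:.1f}x irradiance, "
      f"{exposure:.2f}x exposure per line at 4x line rate")
```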

FIGURE 3. In an "undercorrected" lens, blue rays are refracted more than red rays, causing axial and lateral chromatic aberration.

The table also highlights the relative unimportance of sensor alignment. The data show that the best an image can achieve is a black-to-white transition over a single pixel, regardless of how accurately the sensors are registered. In fact, poorly matched optics have a much larger impact on image quality than extreme sensor-alignment accuracy does: sensor misalignments well below half a pixel have a negligible effect on image quality compared with the chromatic aberrations in the optical path.

Calibration and color-balancing issues

While there is no question that superior optics make better images possible, the final image quality is influenced by a range of factors, including illumination intensity variations across the object plane, illumination color temperature variations, lens vignetting effects, photo response non-uniformity (PRNU) of each color channel from system to system and pixel to pixel, offset variations between systems, and pixel-to-pixel fixed-pattern noise (FPN) of each color channel. Lens and illumination effects generally account for more than 90% of total response variation.

FIGURE 4. Comparison of uncorrected (top) and corrected (bottom) color images shows the advantages of image correction performed by in-camera image-processing algorithms.

To produce valid data, a camera must have consistency over time and between installations; it must maintain resolution, brightness, and color content for similar objects. If the factors listed above are large enough to degrade consistent performance, the systems engineer will need to consider image correction prior to executing the core image-processing algorithms. This can be achieved through a calibration process using black and white references that determine correction coefficients for pixel-by-pixel gain and offset adjustment.

Two-point correction process

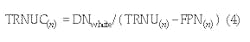

If the imaging system's per-pixel responsivity is linear, a two-point algorithm can correct for variations from many sources, including lighting, lens effects, and camera PRNU and FPN. Figure 4 compares uncorrected and corrected color images. The corrected response of each pixel is

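$$V_{\mathrm{act}}(n) = \mathrm{DN}_{\mathrm{white}}\,\frac{V_{\mathrm{os}}(n) - \mathrm{FPN}(n)}{\mathrm{TRNU}(n) - \mathrm{FPN}(n)} \qquad (3)$$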

where n = pixel number; Vos(n) = measured image-system output for pixel n; Vact(n) = calculated (corrected) response for pixel n; FPN(n) = Vos(n) measured under dark conditions, that is, the fixed-pattern noise of each pixel; TRNU(n) = Vos(n) measured under flat white-light illumination, which includes the total response non-uniformity of each pixel; and DNwhite = the desired color-channel value for a white object.
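
Eq. 3 is a per-pixel linear map that sends the dark level to 0 and the flat-white level to DNwhite. A minimal pure-Python sketch for one line of one color channel follows; the function name and list-based data layout are illustrative assumptions, not the Trillium firmware.

```python
from typing import Sequence


def two_point_correct(vos: Sequence[float], fpn: Sequence[float],
                      trnu: Sequence[float], dn_white: float) -> list[float]:
    """Apply Vact(n) = DNwhite * (Vos(n) - FPN(n)) / (TRNU(n) - FPN(n))."""
    if not (len(vos) == len(fpn) == len(trnu)):
        raise ValueError("all inputs must have one value per pixel")
    corrected = []
    for v, dark, white in zip(vos, fpn, trnu):
        span = white - dark  # per-pixel response between dark and flat white
        if span <= 0:
            raise ValueError("white reference must exceed dark reference")
        corrected.append(dn_white * (v - dark) / span)
    return corrected


# Three pixels with equal dark levels but different white responses.
print(two_point_correct([10, 110, 60], [10, 10, 10], [210, 110, 110], 200))
```

A pixel reading its own dark level maps to 0, a pixel reading its own white level maps to DNwhite, and values in between scale linearly.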

In the Trillium camera, the correction process described by Eq. 3 is executed in real time, pixel by pixel, for each color channel. Each pixel location has an associated correction value, which is predetermined during the calibration process, as follows:

- Under dark conditions, the Vos(n) values that represent FPN(n) for each pixel are captured, averaged and stored. Thus, under dark conditions each pixel has a corrected output of zero.

- With the camera exposed to a flat field of white light, Vos(n) data are captured and averaged, and the TRNUC(n) correction values are calculated and stored. The relationship is as follows:

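$$\mathrm{TRNUC}(n) = \frac{\mathrm{DN}_{\mathrm{white}}}{\mathrm{TRNU}(n) - \mathrm{FPN}(n)} \qquad (4)$$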

The values for FPN(n) and TRNUC(n) are determined for each color channel.
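
The two calibration steps above can be sketched as follows: average several dark lines to get FPN(n), average several flat-white lines, and compute the per-pixel correction factor, here assuming TRNUC(n) = DNwhite / (TRNU(n) − FPN(n)); live data are then corrected as (Vos(n) − FPN(n)) × TRNUC(n). The function names and the list-of-lines layout are hypothetical.

```python
from typing import Sequence


def average_lines(lines: Sequence[Sequence[float]]) -> list[float]:
    """Pixel-by-pixel mean of several captured lines (reduces temporal noise)."""
    if not lines:
        raise ValueError("need at least one line")
    return [sum(column) / len(lines) for column in zip(*lines)]


def calibrate(dark_lines: Sequence[Sequence[float]],
              white_lines: Sequence[Sequence[float]],
              dn_white: float) -> tuple[list[float], list[float]]:
    """Return (FPN, TRNUC) per pixel for one color channel."""
    fpn = average_lines(dark_lines)
    white = average_lines(white_lines)
    trnuc = []
    for w, d in zip(white, fpn):
        if w <= d:
            raise ValueError("white reference must exceed dark reference")
        trnuc.append(dn_white / (w - d))
    return fpn, trnuc


def apply_correction(vos: Sequence[float], fpn: Sequence[float],
                     trnuc: Sequence[float]) -> list[float]:
    """Corrected output: (Vos(n) - FPN(n)) * TRNUC(n)."""
    return [(v - d) * c for v, d, c in zip(vos, fpn, trnuc)]


fpn, trnuc = calibrate([[4, 6], [6, 4]], [[105, 55], [105, 55]], dn_white=200)
print(fpn, trnuc, apply_correction([105, 55], fpn, trnuc))
```

Storing one multiplier per pixel keeps the real-time step to a subtraction and a multiplication, which fits the pixel-by-pixel, per-channel processing described above.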

In industrial settings, it is not always practical to calibrate regularly with a black reference (or with the illumination off) as well as a white reference. To address this issue, a camera such as Trillium can extrapolate a second point for the algorithm from a reduced light level sampled using iris or exposure control.
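
The article does not describe how Trillium performs this extrapolation. One plausible approach, assumed here, relies on the linear response the two-point method already requires: if a flat-white field is captured once at full exposure and once at a known fraction k of it (for example, by halving the exposure time), each pixel obeys V = offset + k·signal, so the dark offset can be solved without turning the light off. The helper names are hypothetical, and the result is only as good as the sensor's linearity and the accuracy of k.

```python
from typing import Sequence


def extrapolate_dark(v_full: Sequence[float], v_reduced: Sequence[float],
                     k: float) -> list[float]:
    """Estimate each pixel's dark offset from two flat-field captures.

    Assumed linear model: v_full = o + s and v_reduced = o + k * s,
    which gives o = (v_reduced - k * v_full) / (1 - k).
    """
    if not 0.0 < k < 1.0:
        raise ValueError("k must be a known fraction between 0 and 1")
    return [(r - k * f) / (1.0 - k) for f, r in zip(v_full, v_reduced)]


def trnuc_from_two_levels(v_full: Sequence[float], v_reduced: Sequence[float],
                          k: float, dn_white: float) -> list[float]:
    """TRNUC(n) = DNwhite / (v_full(n) - offset(n)) using the extrapolated offset."""
    offsets = extrapolate_dark(v_full, v_reduced, k)
    return [dn_white / (f - o) for f, o in zip(v_full, offsets)]


# Two pixels: offsets 5 and 8, white signals 100 and 200, captured at k = 0.5.
print(extrapolate_dark([105, 208], [55, 108], 0.5))
print(trnuc_from_two_levels([105, 208], [55, 108], 0.5, dn_white=200))
```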

In summary, overall image quality in a color imaging system is limited by both the ability of the optical subsystem to form an accurate image on the sensors and the ability of the electronic subsystem to control the color balance. A properly designed common-optical-axis camera can provide better images at lower illumination cost. A matched lens-prism system with dichroic color filters and electronic color balancing provides measurably superior images with less light at much higher line rates.

Lighting can be one of the largest maintenance costs of a system. With a larger-aperture matched lens and electronic color calibration, a beamsplitter camera needs lighting that is less intense and need not be as uniform. Even ignoring the higher image quality and increased speed, the savings from lower lighting requirements over the lifetime of an application can far exceed the initial cost of the matched lens.

An image-capture subsystem that includes correction algorithms reduces or eliminates the need for a dedicated DSP-based frame grabber or hardware processor. Preprocessing frees the main system from image-capture issues and can significantly reduce system cost, complexity, and development time. Three-chip beamsplitter cameras such as the Trillium provide features and benefits not possible with other imaging schemes, opening new possibilities for high-quality color imaging. Applications that were previously considered prohibitively complex, unreliable, or expensive (such as 100% color inspection for printing presses) can now become affordable and even commonplace.

DOUGLAS R. DYKAAR, GRAHAM LUCKHURST, AND NEIL J. HUMPHREY are in the Research, Camera Engineering, and Marketing departments, respectively, at Dalsa, 605 McMurray Rd., Waterloo, Ontario, Canada N2V 2E9.