Augmented reality (AR) glasses are continuously evolving into powerful tools with real-world applications. Recent releases of mixed-reality headsets like Apple’s Vision Pro and the Quest series from Meta showcase the potential of AR to overlay digital information on the real world—and enhance everything from gaming and entertainment to work tasks and education.

If we look back at the past decade, AR glasses have come a long way. Google released “Glass” in 2013, a wearable, interactive monocular display that set the tone for future augmented and virtual reality (VR) devices, both in terms of required features and how to make such devices socially acceptable.

Many early-generation AR and VR devices relied on a tether to a personal computer (PC) for content and graphics processing, or on an inserted phone (think Samsung’s Gear VR or Google’s Daydream) to provide everything, including the display itself.

Today's AR/VR glasses are much sleeker and more powerful. They often have their own built-in displays and processors, which frees users from tethers. The notion of wearing glasses that display directions as you walk, translate languages in real time, or even help you diagnose a car problem doesn’t sound like science fiction anymore—it’s a near-term reality.

Technical hurdles

To achieve mass adoption of AR glasses and unlock their full potential, device makers still need to overcome several technical hurdles. These include limitations in display technology, battery life, size and comfort, user interface and interaction, privacy and security, and price. Compromises must often be made between performance and user comfort. For example, a device could be made lighter by using a smaller battery, but a smaller battery shortens use time and increases charging frequency. Likewise, form factor and materials must be chosen to balance quality and longevity against cost and ease of manufacture.
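To make the battery trade-off concrete, here is a back-of-the-envelope sketch: runtime is roughly stored energy divided by average power draw. The capacities and the 1.5 W draw are hypothetical figures chosen for illustration, not specifications of any real device, and `runtime_hours` is an illustrative helper.

```python
def runtime_hours(capacity_wh: float, average_power_w: float) -> float:
    """Estimated runtime: stored energy (Wh) divided by average power draw (W)."""
    if average_power_w <= 0:
        raise ValueError("average power draw must be positive")
    return capacity_wh / average_power_w


# Hypothetical average power draw for a pair of AR glasses; not a measured figure.
AVERAGE_POWER_W = 1.5

# A larger battery versus a smaller, lighter one (both capacities are hypothetical).
for capacity_wh in (3.0, 2.0):
    hours = runtime_hours(capacity_wh, AVERAGE_POWER_W)
    print(f"{capacity_wh:.1f} Wh battery -> about {hours:.1f} h of use")
```

Cutting the capacity by a third (and saving that share of battery weight) cuts the runtime by a third at the same power draw.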

The ultimate goal is for AR devices to look and feel just like normal eyewear: lightweight and truly see-through, rather than relying on cameras and integrated displays to relay a view of the surroundings. Unlike typical VR headsets that block out the real world, see-through AR glasses let you directly see and interact with the world around you while enhancing it with overlaid digital content. Therefore, it is important to develop smaller, lighter, and more advanced display and optical components, along with efficient projection methods.

Fixed focal length and vergence-accommodation conflict

Most AR devices work on the same principle. A light engine or display (essentially a tiny projector) emits light that is guided and focused into the user’s field of view. Commonly, this is achieved via an optical waveguide, a see-through structure that guides light from the display into your visual field so that the image is overlaid on the real-world view. This method reduces weight and allows for a more glasses-like form factor.
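Waveguides of this kind typically keep light confined by total internal reflection (TIR): rays that strike the surface at more than the critical angle from the normal are reflected back inside. This sketch computes that angle from Snell’s law; the refractive indices are example values, and `critical_angle_deg` is a hypothetical helper, not part of any vendor’s toolkit.

```python
import math


def critical_angle_deg(n_core: float, n_outside: float = 1.0) -> float:
    """Critical angle (measured from the surface normal) for total internal reflection."""
    if n_core <= n_outside:
        raise ValueError("TIR requires the core index to exceed the outside index")
    return math.degrees(math.asin(n_outside / n_core))


# Example substrate indices (assumed): ordinary glass and a higher-index glass, in air.
for n in (1.5, 1.8):
    print(f"n = {n}: rays beyond {critical_angle_deg(n):.1f} deg from the normal stay trapped")
```

A higher-index substrate has a smaller critical angle, so it can trap a wider range of ray angles.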

One major challenge is achieving focus consistency between the physical world and the virtual image overlay. Most devices today render virtual objects at a fixed focal distance, typically between 1 and 2 meters from the user. This defines a “zone of comfort” in which the user can naturally focus on both virtual objects and the real world without significant discomfort.
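Focus demand is measured in diopters (the reciprocal of distance in meters), which helps explain why a fixed focal plane tolerates far objects better than near ones. This minimal sketch assumes a 2 m focal plane; `diopters` and `focus_mismatch_d` are illustrative names.

```python
def diopters(distance_m: float) -> float:
    """Focus demand in diopters: reciprocal of distance in meters (0 at infinity)."""
    return 0.0 if distance_m == float("inf") else 1.0 / distance_m


def focus_mismatch_d(object_distance_m: float, focal_plane_m: float) -> float:
    """Diopter gap between an object's apparent distance and the display's focal plane."""
    return abs(diopters(object_distance_m) - diopters(focal_plane_m))


FOCAL_PLANE_M = 2.0  # assumed fixed focal distance, within the 1-2 m range above

for d in (0.3, 0.5, 1.0, 2.0, 10.0, float("inf")):
    print(f"object at {d} m -> mismatch {focus_mismatch_d(d, FOCAL_PLANE_M):.2f} D")
```

Under these assumptions, an object at infinity differs from the 2 m focal plane by only 0.5 D, whereas an object at 0.3 m differs by about 2.8 D.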

The trade-off here is that fixed focus is simpler to achieve, whereas adding more focal levels increases the complexity of the device optics (and likely adds cost, size, and weight). But without dynamic focus control of the virtual object, the ways the device can be used are limited, especially over extended periods. For example, a headset with fixed focus may be fine if it is intended as a virtual monitor. However, for interaction with virtual objects that need to appear both in the far distance and within touching distance, the lack of dynamic focus will cause user discomfort.

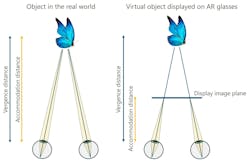

With a fixed focal length, users can experience vergence-accommodation conflict (VAC) when trying to focus on close-up or moving objects. “Vergence” is the inward or outward rotation of the eyes so that both lines of sight meet on an object at a certain distance, while “accommodation” is the adjustment of the eye’s lens to produce a sharp image on the retina. These two mechanisms instinctively work in tandem to create a clear and coherent image of the real world. With virtual objects that originate from a display, a mismatch between them can affect the user’s ability to focus correctly and cause visual fatigue, eye strain, or even headaches. Without dynamic focus, such a mismatch arises in AR because the virtual image is displayed on a fixed two-dimensional (2D) plane at a fixed focal distance, while the size and rendered position of the virtual object imply a different depth (see Fig. 1).
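The two cues in VAC can be compared numerically. This sketch assumes an interpupillary distance (IPD) of 63 mm (a common adult value), symmetric fixation straight ahead, and the same hypothetical 2 m focal plane; the function name is illustrative.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two lines of sight when both eyes fixate a point straight ahead."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


FOCAL_PLANE_M = 2.0  # assumed fixed focal distance of the display optics
rendered_m = 0.4     # virtual object rendered (via stereo disparity) within arm's reach

print(f"vergence demand:      {vergence_angle_deg(rendered_m):.2f} deg (cue says {rendered_m} m)")
print(f"accommodation demand: {1 / FOCAL_PLANE_M:.2f} D (optics say {FOCAL_PLANE_M} m)")
print(f"conflict:             {abs(1 / rendered_m - 1 / FOCAL_PLANE_M):.2f} D")
```

For an object rendered at 0.4 m, the eyes converge by about 9 degrees, consistent with 0.4 m, while the optics still demand focus at 2 m, a conflict of 2.0 D.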

Methods to reduce vergence-accommodation conflict

Currently, few approaches exist to reduce VAC in fixed-focal-length devices. Instead, many AR device manufacturers provide extensive guidelines for application developers, which often include advice on object placement and focal zones to ensure visual comfort in a fixed-focus system. Typically, there is an area to avoid (usually closer than 1 m) and a “comfort” area (usually 1 m to infinity). For example, Microsoft’s guidelines for the HoloLens 2 suggest a distance of 1.25 to 5 m as ideal (see Fig. 2).
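A developer following such guidance might check placements in code. The helper names here are hypothetical, and the thresholds are simply the ones quoted above (avoid closer than about 1 m; 1.25 to 5 m ideal per the HoloLens 2 guidance), not an official API.

```python
def placement_zone(distance_m: float) -> str:
    """Classify a hologram distance using the thresholds quoted in the text (assumed)."""
    if distance_m < 1.0:
        return "avoid"        # closer than ~1 m: likely uncomfortable on a fixed-focus device
    if 1.25 <= distance_m <= 5.0:
        return "ideal"        # the 1.25-5 m band cited for HoloLens 2
    return "comfortable"      # elsewhere in the 1 m-to-infinity comfort area


def clamp_to_ideal(distance_m: float, lo: float = 1.25, hi: float = 5.0) -> float:
    """Pull a requested placement distance into the ideal band."""
    return min(max(distance_m, lo), hi)


for d in (0.6, 1.1, 2.0, 12.0):
    print(d, placement_zone(d), clamp_to_ideal(d))
```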

Adjustable focus

In 2023, Meta’s Reality Labs research division presented the Butterscotch Varifocal system, mounted inside a prototype VR device. It used a mechanical system coupled with eye tracking to move the entire display screen closer to or farther from the user to control the focal distance of the image, but the mechanism added unwanted weight and volume to the headset.
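Why moving the display changes focus follows from the thin-lens equation, 1/f = 1/d_o + 1/d_i. For a virtual image the image distance is negative, so a display placed exactly at the focal length f produces an image at infinity, and moving it closer to the lens brings the image in. This sketch assumes an idealized thin eyepiece with a hypothetical 40 mm focal length; it is not a model of Butterscotch’s actual optics.

```python
def display_distance_m(lens_focal_m: float, image_distance_m: float) -> float:
    """Display-to-lens distance that places a virtual image at image_distance_m.

    Thin-lens model 1/f = 1/d_o + 1/d_i with a virtual image (d_i = -image_distance_m),
    so 1/d_o = 1/f + 1/image_distance_m. An infinite image distance puts the display at f.
    """
    extra = 0.0 if image_distance_m == float("inf") else 1.0 / image_distance_m
    return 1.0 / (1.0 / lens_focal_m + extra)


F = 0.040  # hypothetical 40 mm focal-length eyepiece

far = display_distance_m(F, float("inf"))
for target in (2.0, 1.0, 0.5, 0.25):
    shift_mm = (far - display_distance_m(F, target)) * 1000
    print(f"image at {target} m: move display {shift_mm:.2f} mm toward the lens")
```

Under these assumptions, bringing the image from infinity to 0.5 m means shifting the display by roughly 3 mm: a small travel, but one that still requires a mechanical actuator.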

For VR, other kinds of tunable optics, such as polarization-based optics, can be used because the entire view is generated by the display, and display light is usually polarized. This allows other types of lenses to be used, such as Pancharatnam–Berry (PB) phase lenses, which can be stacked together to create a set of discrete focal lengths. Meta has also demonstrated this approach in its Half Dome VR prototypes, but it is less suited to AR because these optics only work with polarized light, whereas light from the real world is largely unpolarized.
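Stacking switchable lenses gives discrete focal states because the powers of thin lenses in contact add. This sketch abstracts each element as switching between +P and −P diopters (as a PB lens does for the two circular polarization handednesses) and ignores the polarization bookkeeping a real stack needs; the element powers are hypothetical.

```python
from itertools import product


def stack_powers(element_powers_d):
    """All distinct net powers of a stack whose elements each switch between +P and -P.

    Assumes thin elements in contact (powers simply add) that can be switched
    independently; this is an illustrative model, not a description of Half Dome.
    """
    return sorted({sum(sign * p for sign, p in zip(signs, element_powers_d))
                   for signs in product((1, -1), repeat=len(element_powers_d))})


# Hypothetical binary-weighted stack of three elements (diopters).
print(stack_powers([0.25, 0.5, 1.0]))
```

With binary-weighted powers, N elements give 2^N evenly spaced states; here three elements yield eight states from −1.75 to +1.75 D in 0.5 D steps.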

Other architectures are being developed by companies such as Creal and PetaRay, which use light fields to present images at multiple depths to the eye simultaneously (or nearly simultaneously). These approaches either trade off resolution or frame rate, or increase image-processing requirements.
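The trade-off can be illustrated with simple arithmetic. If depth planes or views are shown one after another, each gets a fraction of the panel’s refresh rate; if they share the panel at the same time, each gets a fraction of its pixels. The panel figures and function names are hypothetical and do not describe Creal’s or PetaRay’s systems.

```python
def time_multiplexed_rate_hz(panel_rate_hz: float, depth_planes: int) -> float:
    """Refresh rate each depth plane gets when planes are shown one after another."""
    return panel_rate_hz / depth_planes


def spatially_multiplexed_pixels(panel_pixels: int, views: int) -> int:
    """Pixels left per view when the panel is divided among simultaneous views."""
    return panel_pixels // views


# Hypothetical panel: 1920 x 1080 pixels at 240 Hz, split four ways.
print(time_multiplexed_rate_hz(240, 4))              # 60.0 Hz per plane
print(spatially_multiplexed_pixels(1920 * 1080, 4))  # 518400 pixels per view
```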

Liquid crystal lenses

Meta’s Butterscotch Varifocal project clearly shows how adjustable focus reduces the effects of VAC, albeit with a mechanical system. It is possible to replicate this adjustable focus function without any moving parts using tunable liquid crystal (LC) lenses (see Fig. 3).

In conventional fixed optics, a simple lens focuses light because its physical curvature changes the optical path length for different rays so that they all converge on a point. At any point on the lens, the optical path length (OPL) through the lens is the product of the refractive index (n) of the material and the thickness (d) of the lens at that point; the variation of this quantity across the lens is the optical path difference (OPD). In a conventional lens, n is usually the same everywhere and d varies as a function of radius, giving the lens its curved shape.

In liquid crystal optics, it is possible to vary the refractive index, n, instead of the thickness, d, of the lens. The optical path difference is therefore d·Δn instead of Δd·n. The net effect is the same, but it is now dynamically controllable. This mechanism relies on the anisotropic properties of liquid crystals: when a voltage is applied across a layer of liquid crystal, the LC molecules rotate, which changes the refractive index seen by light passing through the layer. With careful design of the electrode pattern, a tunable lens can be formed.
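The paraxial lens condition makes the d·Δn relationship concrete: a lens of power P needs an optical path difference of P·r²/2 at radius r, so with a fixed LC layer thickness d the index must change as Δn(r) = P·r²/(2d) relative to the center. Because Δn cannot exceed the LC’s birefringence, this also caps the power a continuous profile can reach for a given aperture. The birefringence (0.2), layer thickness (20 µm), and aperture radius (2 mm) are assumed values, and the function names are illustrative.

```python
def delta_n_at_radius(power_d: float, lc_thickness_m: float, radius_m: float) -> float:
    """Index change (relative to the lens center) needed at radius r for lens power P.

    Paraxial model: required OPD(r) = P * r**2 / 2. With fixed thickness d, the OPD
    must come from the index alone, so delta_n(r) = P * r**2 / (2 * d).
    """
    return power_d * radius_m ** 2 / (2.0 * lc_thickness_m)


def max_power_d(birefringence: float, lc_thickness_m: float, aperture_radius_m: float) -> float:
    """Largest power a continuous profile reaches before delta_n hits the birefringence."""
    return 2.0 * lc_thickness_m * birefringence / aperture_radius_m ** 2


BIREFRINGENCE = 0.2      # assumed LC birefringence
LC_THICKNESS_M = 20e-6   # assumed LC layer thickness
APERTURE_RADIUS_M = 2e-3 # assumed aperture radius

p_max = max_power_d(BIREFRINGENCE, LC_THICKNESS_M, APERTURE_RADIUS_M)
print(f"max power about {p_max:.2f} D")
for r_mm in (0.0, 0.5, 1.0, 1.5, 2.0):
    dn = delta_n_at_radius(p_max, LC_THICKNESS_M, r_mm * 1e-3)
    print(f"r = {r_mm} mm: index change {dn:.4f}")
```

Note that the achievable power falls with the square of the aperture radius for a given layer thickness and birefringence.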

Tunable push-pull lens solution

In AR devices, both the real world and the virtual image must be in correct focus, so any lens that controls the focal distance of the virtual object must do so without disturbing the view of the real world. To solve this, two lenses are required: one sits on the “eye side” of the waveguide (or equivalent combiner), while the other sits on the “world side” (see Fig. 4).

The eye-side or “pull” lens is responsible for focusing the virtual object by applying a lens power of N diopters. For a waveguide that outputs collimated light (an image at infinity), this power is negative: a diverging lens of −N diopters makes the virtual image appear 1/N meters away. Since this also unavoidably changes the focus of the real world, a second lens on the world side of the waveguide combiner must be included. This “push” lens is driven to an equal and opposite power (+N diopters) to return the real world to its original focus. The net effect of the two lenses operating in unison at equal and opposite powers is to adjust only the focal distance of the virtual object. Combined with eye tracking, for example, this offers an efficient and comfortable way to adjust focus and reduce VAC.
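One possible way to tie eye tracking to the push-pull pair is sketched here. It assumes the waveguide outputs collimated light, so an eye-side power of −1/d diopters places the virtual image d meters away, and it treats both lenses as thin and in contact so that their powers simply add for real-world light. The eye tracker is reduced to a single vergence angle with an assumed 63 mm IPD; `LensPair`, `fixation_distance_m`, and `drive_lenses` are hypothetical names, not FlexEnable’s control scheme.

```python
import math
from dataclasses import dataclass


@dataclass
class LensPair:
    eye_side_d: float    # power of the eye-side ("pull") lens, diopters
    world_side_d: float  # power of the world-side ("push") lens, diopters

    @property
    def net_world_d(self) -> float:
        # Real-world light passes through both lenses (thin, in-contact approximation).
        return self.eye_side_d + self.world_side_d


def fixation_distance_m(vergence_deg: float, ipd_m: float = 0.063) -> float:
    """Estimate fixation distance from a measured vergence angle (symmetric fixation)."""
    return ipd_m / (2.0 * math.tan(math.radians(vergence_deg) / 2.0))


def drive_lenses(target_distance_m: float) -> LensPair:
    """Place the virtual image at target_distance_m, assuming collimated waveguide output.

    A diverging eye-side power of -1/d makes collimated light appear to come from d meters;
    the world-side lens gets the equal and opposite power so the real world is unchanged.
    """
    p = 1.0 / target_distance_m
    return LensPair(eye_side_d=-p, world_side_d=+p)


pair = drive_lenses(fixation_distance_m(9.0))  # ~9 deg vergence -> roughly 0.4 m
print(pair, pair.net_world_d)
```

Keeping the two powers exactly opposite in software is what leaves the real-world view at zero net power in this idealized model.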

FlexEnable’s manufacturing processes and architectures allow for the creation of tunable-focus LC lenses on optically clear ultrathin plastic. This means that unlike glass LC cells, they are extremely thin (each cell is under 100 µm), lightweight, and can be biaxially curved to fit complex AR optics.

The road ahead

Increasing visual comfort is as important as increasing physical comfort, and improvements in one must not come at the expense of the other. For AR in particular, better visual performance depends on continued advances in every part of the optical system, especially the light engine, the waveguide, and the associated optics. Improving visual performance while shrinking headsets until they resemble a pair of regular glasses will be essential for comfortable, sustained use, and will unlock many use cases yet to be explored.