At Global Quantum Intelligence (GQI), we see a lot of roadmaps from quantum providers and fully expect continuing advances in the capabilities of both quantum hardware and quantum software during the next several years. In fact, we believe we’ll start seeing organizations using quantum technology for production purposes within the next few years. Some call it Quantum Advantage while others call it Quantum Utility, but in this article, I’ll call it Quantum Production to distinguish use cases that run on a repeated, regular basis from one-off proof-of-concept experiments.

Although we initially did not expect to see fault-tolerant quantum computers (FTQC) until the 2030s, recent advances lead us to believe that we’ll start seeing what we call early FTQC processors available during the second half of this decade. One way we measure the capability of a quantum computer is a metric we call Quops, which stands for successful quantum operations. We classify quantum evolution into the following eras: Intermediate, Early, Large Scale, and Mature, based upon how many Quops machines of that generation can process. Quops is a function of both the number of qubits available in a quantum processor and the logical error rate (LER) for these qubits.

For noisy intermediate-scale quantum (NISQ) processors, which don’t have any error correction, the logical error rate will be the same as the physical error rate (PER). But in FTQC machines, which implement error correction codes that group together physical qubits to create a logical qubit, the LER will be much better than the PER. This is the purpose of error correction technology.
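To make the link between error rate and Quops more concrete, here is a minimal Python sketch. It assumes a rule of thumb that a machine can run roughly 1/LER operations before an error becomes likely, and that a circuit consumes about (logical qubits × depth) operations. Both are simplifying assumptions for illustration, not GQI’s formal definition of Quops, and the function names are hypothetical. The regime thresholds are simply the SI prefixes (mega = 10^6, giga = 10^9, tera = 10^12).

```python
def approx_quops(logical_error_rate: float) -> float:
    """Rough operation budget, ASSUMING about 1/LER operations succeed on average."""
    if not 0.0 < logical_error_rate < 1.0:
        raise ValueError("logical_error_rate must be between 0 and 1")
    return 1.0 / logical_error_rate


def quops_regime(quops: float) -> str:
    """Map an operation budget onto the regimes named in the article (SI prefixes)."""
    if quops >= 1e12:
        return "TeraQuops"
    if quops >= 1e9:
        return "GigaQuops"
    if quops >= 1e6:
        return "MegaQuops"
    return "below MegaQuops"


def max_circuit_depth(quops: float, logical_qubits: int) -> float:
    """Depth affordable if every qubit is acted on at every layer (an assumption)."""
    if logical_qubits <= 0:
        raise ValueError("logical_qubits must be positive")
    return quops / logical_qubits


if __name__ == "__main__":
    # Illustrative error rates only; for a NISQ machine, pass the PER as the LER.
    for ler in (2e-4, 2e-7, 2e-10, 2e-13):
        budget = approx_quops(ler)
        print(f"LER={ler:.0e}: {quops_regime(budget)}, "
              f"depth on 100 qubits ~ {max_circuit_depth(budget, 100):.3g}")
```

Under these assumptions, the qubit count determines how the operation budget is spent (wide and shallow versus narrow and deep), while the LER sets the size of the budget itself.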

FTQC: Fault-tolerant quantum computers

We expect to see Early FTQC machines available within the next five years that can achieve capabilities within the MegaQuops or GigaQuops regimes. These initial machines may contain a few thousand physical qubits, which will translate to roughly a few hundred or so logical qubits. This should be enough to run a few useful applications, but still won’t be powerful enough to run intensive quantum applications like Shor’s algorithm, which will require machines with TeraQuops capabilities. We don’t expect those Large Scale FTQC machines to be available until the 2030s, and they will provide thousands of logical qubits for calculations with potentially millions of physical qubits.

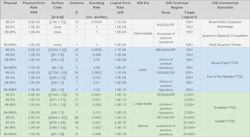

The chart below demonstrates how error correction can improve the LER using one specific error correction code called the surface code as a function of the initial PER. As you might anticipate, the better the PER that the code starts with, the better the resulting LER will be. But another factor also matters: the number of physical qubits grouped together to create each logical qubit, which we call the physical-to-logical ratio. Codes that have a larger physical-to-logical ratio will provide better error resistance. In the chart below, the third column describes the code being implemented with the notation [[n,k,d]]. The “n” indicates the number of physical qubits used within a group, the “k” represents the number of logical qubits that it creates, and the “d” stands for the distance between codewords within the encoding. The larger the distance, the more errors the code is able to detect and correct. Beyond the surface code used within this chart example, many other codes are being researched that may be more efficient, depending upon the code and the specific quantum processor it’s being implemented on.
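The relationship between PER, code distance, and LER can be sketched with a widely used rule of thumb for the surface code, LER ≈ A · (PER / p_th)^((d+1)/2). The prefactor A = 0.1 and threshold p_th = 1% used below are assumed, illustrative values, not the figures behind the chart, and the function names are hypothetical. The sketch also assumes the rotated surface code layout, which encodes one logical qubit in d² data qubits (ancilla qubits for measurement are not counted).

```python
from typing import Tuple


def rotated_surface_code(d: int) -> Tuple[int, int, int]:
    """Return [[n, k, d]] for a rotated surface code: n = d*d data qubits, k = 1."""
    if d < 3 or d % 2 == 0:
        raise ValueError("distance d is assumed to be an odd integer >= 3")
    return (d * d, 1, d)


def physical_to_logical_ratio(n: int, k: int) -> float:
    """Physical qubits spent per logical qubit."""
    return n / k


def estimated_ler(per: float, d: int,
                  threshold: float = 1e-2, prefactor: float = 0.1) -> float:
    """Heuristic LER ~ A * (PER / p_th)^((d + 1) / 2); A and p_th are assumptions."""
    if not 0.0 < per < threshold:
        raise ValueError("the heuristic only applies for PER below the threshold")
    return prefactor * (per / threshold) ** ((d + 1) / 2)


if __name__ == "__main__":
    for per in (1e-3, 1e-4):
        for d in (3, 7, 11):
            n, k, _ = rotated_surface_code(d)
            print(f"PER={per:.0e}  [[{n},{k},{d}]]  "
                  f"ratio={physical_to_logical_ratio(n, k):.0f}  "
                  f"LER~{estimated_ler(per, d):.1e}")
```

Under this model both levers from the paragraph above show up directly: a lower starting PER and a larger distance (and therefore a larger physical-to-logical ratio) each push the estimated LER down.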

NISQ: Noisy intermediate-scale quantum

On the other hand, we also see advances occurring in more capable NISQ processors and associated algorithms that may also be able to run useful applications within the 2025 to 2029 timeframe. We’ve already seen a few companies demonstrate two-qubit fidelities of greater than 99.9% for their physical gates. And we’ve also seen advances in algorithms to get the most out of these physical gates. This software includes hybrid classical/quantum architectures, variational quantum algorithms, error mitigation and suppression techniques, circuit knitting, zero-noise extrapolation, probabilistic error cancellation, and other classical post-processing to improve quantum results. Moreover, the roadmaps we’ve seen indicate that NISQ processors with around 10,000 physical qubits may be available during the second half of this decade.

End users may have an interesting choice soon, within the next five years. Do they want to use an Early FTQC machine that provides about 100 logical qubits with two-qubit gate fidelities of greater than 99.9999%, or do they want to use a NISQ machine that contains around 10,000 physical qubits with two-qubit gate fidelities of 99.9% or perhaps 99.99%?
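One rough way to compare these options is to ask how many two-qubit gates a circuit can contain before its overall success probability falls below some target. The Python sketch below assumes that gate errors are independent, so a circuit of G gates succeeds with probability fidelity^G, and it ignores measurement errors, idle errors, and any error mitigation. The function names and the 50% target are illustrative assumptions.

```python
import math


def circuit_success_probability(gate_fidelity: float, num_gates: int) -> float:
    """Probability that all gates succeed, ASSUMING independent gate errors."""
    if not 0.0 < gate_fidelity <= 1.0:
        raise ValueError("gate_fidelity must be in (0, 1]")
    if num_gates < 0:
        raise ValueError("num_gates must be non-negative")
    return gate_fidelity ** num_gates


def gates_for_target_success(gate_fidelity: float, target: float = 0.5) -> int:
    """Largest gate count G such that gate_fidelity**G is still >= target."""
    if not 0.0 < gate_fidelity < 1.0:
        raise ValueError("gate_fidelity must be strictly between 0 and 1")
    if not 0.0 < target < 1.0:
        raise ValueError("target must be strictly between 0 and 1")
    return math.floor(math.log(target) / math.log(gate_fidelity))


if __name__ == "__main__":
    # Fidelities quoted in the text: two NISQ cases and the Early FTQC case.
    for label, fidelity in (("NISQ 99.9%", 0.999),
                            ("NISQ 99.99%", 0.9999),
                            ("Early FTQC 99.9999%", 0.999999)):
        print(f"{label}: about {gates_for_target_success(fidelity)} gates "
              f"at >= 50% success")
```

Under these assumptions the gate budgets come out at roughly 700, 7,000, and 700,000 two-qubit gates respectively, so the trade-off is between many qubits with shallow circuits and fewer qubits with far deeper ones.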

Many quantum researchers are skeptical anyone will ever run useful applications on a NISQ quantum computer. Beyond the fact that these machines still have noise issues, another reason is that many of these applications would rely on heuristic algorithms such as QAOA or VQE, which no one can theoretically prove will work. People will need to try them out to see if they work or not. On the other hand, theoretical proof exists that certain algorithms, such as Shor’s algorithm, can run on an FTQC and provide an accurate answer. But we would remind our readers that many of the classical artificial intelligence (AI) algorithms that have become popular in recent years are also heuristic, and computer scientists do not yet have a theoretical proof that they should work. Yet, of course, these AI algorithms do work.

A particularly interesting paper we recently saw posted on arXiv is titled “A typology of quantum algorithms,” authored by researchers at the Université Paris-Saclay and Quantinuum. In the paper, they classified 133 different quantum algorithms by a number of different factors, including whether the algorithm could be implemented on a NISQ processor or required a large-scale quantum (LSQ) processor. Of the 133 algorithms shown in the summary Classification Table at the end of the paper, a total of 50 were classified as potential candidates for using a NISQ processor, while the remaining candidates require an LSQ machine. It’s possible that one of these NISQ algorithms can indeed deliver commercially useful quantum production before FTQC machines are available.

We aren’t at the point yet where we can definitively say which quantum applications will be able to provide commercially useful results on which machines. But the one thing that makes us optimistic is the diversity of innovative approaches and rapid advances organizations are making in both hardware and software to get us to the point where the systems can be used for quantum production for useful applications. Although some of these innovative approaches will fail, we fully believe that others will work and start delivering within the next few years on the promise of a quantum computer. The applications in production may only be a handful for the next few years, but this initial small number will grow substantially in the 2030s as more TeraQuops large-scale FTQC systems become available, which will enable many more algorithms to be run successfully.

While some may expect to see a hard demarcation between the end of the NISQ era and beginning of the FTQC era, in reality these eras will overlap, and we’ll see a gradual transition.

About the Author

Doug Finke

Doug Finke is chief content officer at Global Quantum Intelligence (New York, NY), a business intelligence firm for quantum technology. He is also the managing editor of the Quantum Computing Report by GQI, an industry website he founded in 2015.