In modern lidar systems, software plays a starring role

Lidar systems have become a standard method for efficient collection of high-density topographic data for a wide range of applications. Lidar systems, whether on terrestrial or airborne/unmanned aerial vehicle (UAV) platforms, typically include imaging sensors (lidar and optional cameras) along with a position and orientation system, including a global navigation satellite system (GNSS) receiver and an inertial navigation system (INS), to provide georeferencing information.

Lidar technology has undergone significant progress since its introduction. One of the most prominent advances is the increase in pulse repetition frequency (PRF). This advance has significantly increased the achievable data density without sacrificing project productivity and has therefore expanded the range of applications for lidar technology.
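As a rough illustration of why PRF drives density, the mean point density of a single pass can be approximated as the PRF divided by the ground area swept per second (ground speed times swath width). The sketch below assumes one recorded return per pulse, points spread uniformly across the swath, and no overlap between passes; the 60 m/s ground speed and 1000 m swath are arbitrary illustrative values, not figures for any particular system.

```python
def mean_point_density(prf_hz, ground_speed_m_s, swath_width_m):
    """Approximate mean point density (points per square meter) of one pass.

    Simplifying assumptions: one recorded return per pulse, points spread
    uniformly across the swath, no overlap with neighboring passes.
    """
    area_rate_m2_s = ground_speed_m_s * swath_width_m  # ground area swept per second
    return prf_hz / area_rate_m2_s


# Illustrative values only: 60 m/s ground speed, 1000 m swath.
for prf in (100e3, 500e3, 1e6):
    print(f"{prf / 1e3:.0f} kHz -> {mean_point_density(prf, 60.0, 1000.0):.1f} pts/m^2")
```

At fixed speed and swath width, density scales linearly with PRF. Equivalently, a higher PRF lets the same density be reached with a wider swath (higher altitude) or faster flight, which is the productivity gain referred to above.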

The limiting factor that kept traditional lidar systems from achieving high PRFs was the flying-height restriction imposed by the laser pulse travel time. Traditional lidar systems were based on “single-pulse” technology; that is, they could have only one pulse in the air at a time. For instance, at a flying height of 1500 m, the laser pulse travel time (to the ground and back) is approximately 10 µs, so the PRF would have to be less than 100 kHz to ensure a single pulse in the air. For single-pulse systems, therefore, a higher PRF forces a lower operational altitude. This is not a desirable scenario, because it works against the main purpose of higher PRFs: gains in efficiency.
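The arithmetic above can be checked with a few lines of Python. This is a minimal sketch that assumes nadir viewing (range equal to flying height), propagation at the vacuum speed of light, and no detector or processing dead time; off-nadir scan angles lengthen the slant range and tighten the limit further.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s (atmospheric refraction ignored)


def round_trip_time_s(range_m):
    """Two-way travel time of a pulse to a target at range_m and back."""
    return 2.0 * range_m / C


def max_single_pulse_prf_hz(range_m):
    """Highest PRF that keeps only one pulse in the air for a given range."""
    return 1.0 / round_trip_time_s(range_m)


def max_single_pulse_range_m(prf_hz):
    """Longest range that keeps only one pulse in the air at a given PRF."""
    return C / (2.0 * prf_hz)


print(f"{round_trip_time_s(1500.0) * 1e6:.2f} us")        # about 10 us
print(f"{max_single_pulse_prf_hz(1500.0) / 1e3:.1f} kHz")  # just under 100 kHz
print(f"{max_single_pulse_range_m(500e3):.0f} m")          # 500 kHz allows only about 300 m
```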

Increasing pulse repetition frequency

The increased PRF in modern commercial systems has been achieved through two main solutions, used alone or in combination. The first solution uses multiple laser sensors or channels, that is, multisensor or multichannel systems; the resulting PRF of these systems is the combined PRF of the individual channels or sensors. The second solution, denoted as multiple pulses in air (MPiA) and also known as “multipulse” technology, consists of emitting the next laser pulse without waiting for the return of the previous one, so that several pulses travel simultaneously from the sensor to the mapped object and back.
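A sketch of the bookkeeping behind both solutions, under simplifying assumptions (nadir viewing, vacuum speed of light): a multichannel system's effective PRF is the sum of its channel PRFs, while the maximum number of pulses a channel has in flight at once is the round-trip time multiplied by that channel's PRF, rounded up. The 500 kHz and 1 MHz rates below are illustrative, not specifications of any Optech sensor.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def pulses_in_air(prf_hz, range_m):
    """Maximum number of one channel's pulses simultaneously in flight
    for a target at range_m (nadir viewing assumed)."""
    round_trip_s = 2.0 * range_m / C
    return math.ceil(round_trip_s * prf_hz)


def combined_prf_hz(channel_prfs_hz):
    """Effective PRF of a multisensor/multichannel system: sum of channel PRFs."""
    return sum(channel_prfs_hz)


# One channel at 1 MHz versus two channels at 500 kHz each, both from 1500 m.
print(combined_prf_hz([1e6]), pulses_in_air(1e6, 1500.0))
print(combined_prf_hz([500e3, 500e3]), pulses_in_air(500e3, 1500.0))
```

Both configurations deliver the same total PRF, but splitting it across channels roughly halves the number of pulses each channel must keep track of, which is one way to see why the two solutions are complementary.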

The multipulse approach poses several challenges for the lidar designer. First, the lidar needs to resolve the ambiguity of the measured ranges, correctly associating each incoming pulse with an earlier outgoing pulse. Second, the multipulse scenario must deal with what is called “blind zones,” or the inability to emit and receive lidar pulses simultaneously. Each time a laser pulse is fired, the lidar receiver is momentarily blinded by the scattering of the outgoing pulse as it is directed out of the sensor and into the atmosphere. This makes it a challenge to record an incoming pulse that arrives during this period.
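To make the two challenges concrete, here is a deliberately simple sketch. It assumes perfectly periodic firing and resolves the ambiguity by accepting only the candidate range that falls inside an expected range window (for example, one derived from flying height and a coarse terrain model); the window must be narrower than c/(2 PRF) for the choice to be unique. The blind-zone test simply flags detections that arrive within a fixed interval after any firing, and the 20 ns blind interval is a hypothetical value. This is not a description of how PulseTRAK or any other commercial system works.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def candidate_ranges(t_detect_s, prf_hz, max_pulses_back=20):
    """(pulse index, range) for each recent pulse the detection could belong to.
    Pulses are assumed to be fired at t = n / prf_hz for n = 0, 1, 2, ..."""
    period = 1.0 / prf_hz
    last_pulse = math.floor(t_detect_s / period)  # most recent firing before detection
    out = []
    for n_back in range(max_pulses_back):
        idx = last_pulse - n_back
        if idx < 0:
            break
        out.append((idx, C * (t_detect_s - idx * period) / 2.0))
    return out


def resolve_range(t_detect_s, prf_hz, window_m):
    """Return the single candidate inside the expected range window,
    or None if there is no candidate or more than one (still ambiguous)."""
    lo, hi = window_m
    hits = [(i, r) for i, r in candidate_ranges(t_detect_s, prf_hz) if lo <= r <= hi]
    return hits[0] if len(hits) == 1 else None


def in_blind_zone(t_detect_s, prf_hz, blind_s):
    """True if the detection arrives within blind_s after some firing."""
    return (t_detect_s % (1.0 / prf_hz)) < blind_s


prf = 1e6                   # illustrative 1 MHz PRF
window = (1410.0, 1550.0)   # hypothetical window, narrower than c/(2 PRF), about 150 m
t_fire = 100 / prf          # firing time of pulse #100
for true_range in (1420.0, 1500.0):
    t_det = t_fire + 2.0 * true_range / C
    print(true_range, resolve_range(t_det, prf, window), in_blind_zone(t_det, prf, 20e-9))
```

In this example both returns are attributed to the correct pulse, but the 1500 m return arrives roughly 7 ns after a later firing, inside the hypothetical blind interval, whereas the 1420 m return does not. Widening the window beyond about 150 m makes the 1500 m case ambiguous again.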

Teledyne Optech (Vaughan, ON, Canada) has put considerable effort into solving the problems created by a high PRF. One result is the Optech Galaxy’s PulseTRAK technology, which has taken the multipulse route and overcome the associated challenges (ambiguity and blind zones). Another Teledyne Optech approach is the Optech G2 sensor system, which consists of dual Galaxy sensors working in tandem and is an example of a system that utilizes both the multisensor and multipulse approaches to increasing the system PRF.

Self-calibration software capability

Both solutions for increasing lidar system PRF should be accompanied by robust, efficient software. When dealing with multisensor or multichannel systems, it is critical to have robust software that accurately co-registers the data collected by the different sensors or channels to ensure a homogeneous dataset. This requires not only accurate system calibration, that is, determining the geometric relationship between all system sensors (mounting-parameter calibration), but also accurate calibration of the individual sensors and channels.

Teledyne Optech addresses this challenge with its LMS (Lidar Mapping Suite), data processing software that lets the user further refine the data and provides tools to check and verify data quality. LMS has a self-calibration engine based on a rigorous lidar geometric model, which allows it to estimate corrections to the sensor and channel calibration parameters, the system mounting parameters, and the trajectory from the GNSS/INS system.
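For readers unfamiliar with how mounting parameters enter a lidar geometric model, the sketch below implements the generic direct-georeferencing equation found in the lidar literature: ground point = GNSS/INS position + attitude rotation × (boresight rotation × laser vector + lever arm). It is not the LMS model, which additionally includes per-channel sensor parameters and trajectory corrections. The axis conventions (scanner sweeping across-track about x and pointing down along -z at zero scan angle), the function names, and the numeric values are assumptions chosen for illustration.

```python
import math


def rot_xyz(roll, pitch, yaw):
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll); angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def matvec(m, v):
    """3x3 matrix times 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def georeference(pos_m, attitude_rad, boresight_rad, lever_arm_m, range_m, scan_angle_rad):
    """Ground point = position + R_attitude (R_boresight @ laser_vector + lever_arm)."""
    # Assumed scanner frame: sweep across-track, pointing straight down at zero angle.
    rho = [0.0, range_m * math.sin(scan_angle_rad), -range_m * math.cos(scan_angle_rad)]
    in_body = matvec(rot_xyz(*boresight_rad), rho)
    in_body = [in_body[i] + lever_arm_m[i] for i in range(3)]
    offset = matvec(rot_xyz(*attitude_rad), in_body)
    return [pos_m[i] + offset[i] for i in range(3)]


pos = [0.0, 0.0, 1500.0]   # hypothetical sensor position, local level frame (m)
att = (0.0, 0.0, 0.0)      # level flight
lever = [0.1, 0.0, -0.3]   # hypothetical lever arm, body frame (m)
good = georeference(pos, att, (0.0, 0.0, 0.0), lever, 1500.0, 0.0)
bad = georeference(pos, att, (math.radians(0.1), 0.0, 0.0), lever, 1500.0, 0.0)
print(good, bad, math.dist(good, bad))
```

Under these assumptions, an uncorrected boresight roll error of only 0.1° shifts a point at 1500 m range by about 2.6 m (1500 m × sin 0.1°), which is why mounting-parameter calibration matters so much at operational altitudes.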

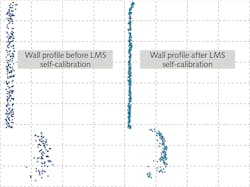

The self-calibration process consists of a block adjustment using overlapping lidar data collected by different passes, sensors, or channels, together with control information where available. Correspondence among overlapping passes is established using tie planes. Figure 1 shows the profile of a point cloud collected by a mobile system that integrates a 32-channel lidar. Because the resulting point cloud combines the data from all the channels, its noise level (precision) depends on the quality of the calibration of the individual channels. The image on the right shows the improvement in the point cloud’s precision when using Optech LMS, which allows further refinement of the calibration of the individual channels.
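The principle behind tie-plane self-calibration can be illustrated with a toy model. The sketch below assumes a single horizontal tie plane and a hypothetical constant vertical bias per channel of a 32-channel sensor; for that model, the least-squares estimate of each channel's offset relative to channel 0 is simply the difference between the channels' mean heights on the plane. A rigorous block adjustment such as the one in LMS instead estimates physical calibration, mounting, and trajectory parameters from many arbitrarily oriented planes; the bias range and 1 cm noise level here are invented for illustration.

```python
import math
import random
import statistics


def simulate(num_channels=32, points_per_channel=200, noise_m=0.01, seed=1):
    """Heights of points on a horizontal tie plane (true z = 0) seen by each
    channel, with a hypothetical constant bias per channel plus Gaussian noise."""
    rng = random.Random(seed)
    biases = [rng.uniform(-0.05, 0.05) for _ in range(num_channels)]
    points = [(ch, b + rng.gauss(0.0, noise_m))
              for ch, b in enumerate(biases)
              for _ in range(points_per_channel)]
    return points, biases


def estimate_offsets(points, num_channels):
    """Least-squares offsets for z = h + b_ch with b_0 fixed at 0: each offset
    is that channel's mean height minus channel 0's mean height."""
    per_channel = [[] for _ in range(num_channels)]
    for ch, z in points:
        per_channel[ch].append(z)
    means = [statistics.fmean(zs) for zs in per_channel]
    return [m - means[0] for m in means]


def rms_about_plane(points, offsets):
    """RMS of height residuals about the best-fit horizontal plane after
    subtracting each channel's offset (a simple precision measure)."""
    z = [zi - offsets[ch] for ch, zi in points]
    mean_z = statistics.fmean(z)
    return math.sqrt(statistics.fmean([(zi - mean_z) ** 2 for zi in z]))


points, biases = simulate()
offsets = estimate_offsets(points, len(biases))
print(f"RMS before: {rms_about_plane(points, [0.0] * len(biases)) * 100:.2f} cm")
print(f"RMS after:  {rms_about_plane(points, offsets) * 100:.2f} cm")
```

Removing the estimated offsets brings the RMS about the plane down close to the simulated random noise, the same qualitative effect that Figure 1 illustrates for a real 32-channel sensor.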

Postprocessing software capability

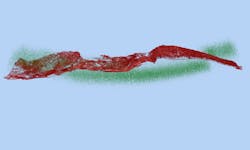

Robust and efficient postprocessing software is also key when dealing with multipulse technology. Although modern multipulse technology ensures large, continuous operational envelopes, one shortcoming is that atmospheric returns may be projected close to ground returns or even mixed with the objects of interest (see Fig. 2). This happens when the aircraft altitude is such that the ground returns from an earlier laser pulse arrive at the lidar receiver at approximately the same time as the atmospheric returns from the current laser pulse. As one would expect, the problem becomes more pronounced as PRF and flying height increase, because there are more pulses in the air (PIA) and therefore shorter and more numerous PIA zones.

Noise-filtering routines are required to classify and remove these atmospheric returns. Traditional filtering routines were based on heuristic approaches, which required the data processor to tune parameters for each sensor configuration and environment, or even to remove the noise manually, making the process very time-consuming and labor-intensive.
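The geometry of this effect can be sketched for the simplest case: nadir viewing over flat terrain, a fixed PRF, and an ambiguity resolver that assigns every detection to the pulse fired a fixed number of periods earlier (the number that places ground returns correctly). Under those assumptions, an atmospheric return at range r from the current pulse is reported at r + n × c/(2 PRF), where n is that number of periods. The function names and the 1 MHz, 1550 m, and 50 m values are hypothetical and chosen only for illustration.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def pia_zone_length_m(prf_hz):
    """Range spacing between consecutive pulses in the air: c / (2 * PRF)."""
    return C / (2.0 * prf_hz)


def pulses_back(range_m, prf_hz):
    """Number of later firings that occur before a return from range_m arrives."""
    return int(range_m // pia_zone_length_m(prf_hz))


def projected_range_m(atmos_range_m, n_back, prf_hz):
    """Apparent range of an atmospheric return from the current pulse when it is
    associated with the pulse fired n_back periods earlier."""
    return atmos_range_m + n_back * pia_zone_length_m(prf_hz)


prf, height = 1e6, 1550.0                    # illustrative PRF and nadir flying height
n = pulses_back(height, prf)                 # assignment that is correct for ground returns
apparent = projected_range_m(50.0, n, prf)   # atmospheric scatter 50 m below the sensor
print(f"zone length {pia_zone_length_m(prf):.1f} m, n = {n}, "
      f"projected {height - apparent:.2f} m above the ground")
for p in (500e3, 1e6, 2e6):
    print(f"{p / 1e3:.0f} kHz: zones {pia_zone_length_m(p):.1f} m long")
```

Doubling the PRF halves the zone length, so at a given flying height there are more zones and more opportunities for near-sensor atmospheric returns to be folded into the ground window, consistent with the trend described above.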

Teledyne Optech uses artificial intelligence (AI) to resolve this challenge, specifically a deep-learning approach that classifies atmospheric returns without user intervention or expertise. The approach adapts to varying environments and sensor parameters, and it scales (via GPU or cloud processing) to reduce processing time for large projects.

Figure 3 shows classification results using Teledyne Optech’s deep-learning AI: classified atmospheric returns (noise) are shown in green, while ground returns are shown in red. A confidence level is assigned to every classified point, which makes inspection of the classification results easier because users can focus on the points with a low confidence level. Time savings of 50% to 80% can be observed with this approach.

In addition to the capabilities outlined in this article, software will continue to play a starring role in further automating the extraction of features, detection of objects, and generation of final deliverables. This will be critical for increasing project productivity and expanding the application of lidar technology into even more areas.

Ana Kersting | Product Manager, Teledyne Optech

Ana Kersting is product manager at Teledyne Optech (Vaughan, ON, Canada).