Quantum-safe cryptography on the horizon

When you transfer money with your smartphone, you assume the transaction is secure. The same holds for a hospital sending medical records to another institution and for government agencies transferring secret or classified information. Even when using an instant messaging service on a smartphone, the expectation is that messages cannot easily be intercepted.

The technology that safeguards all these data transactions is encryption: the more valuable the data, the stronger the encryption. Since the days of Alan Turing, computers have been used to break codes, and ever since, encryption strength has evolved with computing power. Now, quantum technology promises a new generation of computers with unprecedented capabilities that threaten many of the encryption methods currently in use. So, how do you protect valuable data in a post-quantum era?

Where encryption is executed

To better understand quantum-safe cryptography, we will first introduce some encryption basics, starting with the question of where and how encryption is executed. Encryption can be applied at different points along the data transmission path. Ideally, it is done between the end points, which are usually within the application on the computer where the data is generated, for example, between a web browser and a web server. Further down the line, it can be applied at the router connecting the computer to the local area network. Next could be a switch, where data from several routers is collected and sent through a network (e.g., fiber).

End-to-end encryption is the most secure option, since the actual message or data cannot be intercepted in clear anywhere along the line. However, the further down the transmission chain encryption is applied, the lower the effort per bit becomes, because traffic from many applications is aggregated and encrypted in one place. Balancing effort and security is therefore crucial. For the user of an instant messaging service, end-to-end encryption adds little effort. The situation is quite different in a large datacenter: if every application on every server contained its own encryption unit, end-to-end encryption would require a high technical effort, generate a lot of overhead, and degrade performance. For data leaving that well-protected building, security would not benefit much compared to encryption at the switch level.

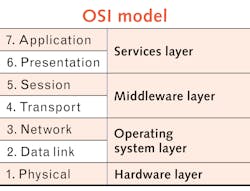

Because data transport is an essential process in the digital economy, it is well specified by various standards. For technical communication on different levels, the Open Systems Interconnection (OSI) model was developed (see Fig. 1). It characterizes and standardizes the communication functions of telecommunication and computing systems without regard to their underlying internal structure and technology. It defines seven layers, starting with the application layer, closest to the user, as layer 7. The physical layer, where the final device converts data into electrical or optical signals for transmission, is layer 1. Data can be encrypted on every layer. On layer 7, this might cover very specific data formats such as text, audio, or video. On its way down the stack, unencrypted information such as IP addresses is attached to the data encrypted on layer 7. Additional encryption on lower layers can hide such information, too.

This is often referred to as the multilayer Swiss cheese model: one layer of security may fail, but it is unlikely that all of them fail at once. Nothing is attached after layer 1 encryption; it is just bits and bytes, or data packets. This is also where bytes are converted to optical signals for fiber transmission. Anyone intercepting the transmission after layer 1 encryption obtains nothing but so-called ciphertext: completely encrypted data, including all higher layers and their metadata.
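To make the layering concrete, here is a minimal Python sketch. It uses a toy keystream cipher built from SHA-256 in counter mode as a stand-in for a real cipher such as AES; this substitution is an assumption for illustration only and must not be used to protect real data. The keys, the payload, and the header string are hypothetical. The sketch shows that with layer-7 encryption alone an eavesdropper can still read the attached addresses, whereas after additional layer-1 encryption the line carries only ciphertext.

```python
import hashlib
import os


def toy_stream_cipher(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """XOR data with a SHA-256 counter-mode keystream (toy, NOT for real use).

    Applying it twice with the same key and nonce restores the input,
    so the same function encrypts and decrypts (a symmetric cipher).
    """
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        pad = hashlib.sha256(key + nonce + counter).digest()
        out.extend(b ^ p for b, p in zip(data[offset:offset + 32], pad))
    return bytes(out)


# Hypothetical keys for the application (layer 7) and the link (layer 1).
app_key, app_nonce = os.urandom(32), os.urandom(12)
link_key, link_nonce = os.urandom(32), os.urandom(12)

payload = b"Transfer 100 EUR to account 42"

# Layer 7: only the payload is encrypted; the addressing travels in clear.
app_ciphertext = toy_stream_cipher(app_key, app_nonce, payload)
frame = b"SRC=10.0.0.1;DST=10.0.0.2|" + app_ciphertext

# Layer 1: the whole frame, header included, is encrypted on the link.
on_the_wire = toy_stream_cipher(link_key, link_nonce, frame)

print(b"10.0.0.1" in frame)        # True: metadata readable with layer-7 encryption only
print(b"10.0.0.1" in on_the_wire)  # False, except with negligible probability

# The receiver peels the layers off in reverse order.
received_frame = toy_stream_cipher(link_key, link_nonce, on_the_wire)
header, _, inner = received_frame.partition(b"|")
assert toy_stream_cipher(app_key, app_nonce, inner) == payload
```

Because the header contains no `|` before the separator, `partition` splits exactly where the layer-7 ciphertext begins.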

The story of private and public keys

A basic distinction in encryption is between symmetric and asymmetric cryptography. The main principle of symmetric encryption, such as the Advanced Encryption Standard (AES), is quite simple: the same key is used to encrypt data on one end and decrypt it on the other end. This method can encrypt data streams with almost no delay and scales very well. However, both sides must first share that key, and asymmetric cryptography is needed to establish it automatically over an unencrypted link.
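Establishing a common key over an open link can be illustrated with a Diffie-Hellman exchange, the classic asymmetric method also mentioned later in this article. The sketch below is a minimal Python illustration under stated assumptions: the modulus 2^127 - 1 and the base 3 are toy choices, far too small and not a vetted group, and hashing the shared secret with SHA-256 is a simplification of a proper key-derivation function. Only the public values cross the unencrypted link, yet both sides end up with the same 256-bit symmetric key.

```python
import hashlib
import secrets

# Toy parameters (assumption): a Mersenne prime modulus and a small base.
# Far too small for real use; real deployments use standardized groups or curves.
P = 2**127 - 1
G = 3


def make_keypair() -> tuple[int, int]:
    """Return (private exponent, public value G**x mod P)."""
    private = secrets.randbelow(P - 3) + 2  # random exponent in [2, P - 2]
    return private, pow(G, private, P)


def derive_symmetric_key(own_private: int, peer_public: int) -> bytes:
    """Compute the shared secret and hash it into a 256-bit symmetric key."""
    shared = pow(peer_public, own_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


alice_private, alice_public = make_keypair()
bob_private, bob_public = make_keypair()

# Only alice_public and bob_public are sent over the unencrypted link.
key_alice = derive_symmetric_key(alice_private, bob_public)
key_bob = derive_symmetric_key(bob_private, alice_public)
print(key_alice == key_bob, len(key_alice) * 8)  # True 256
```

The resulting key could then feed a symmetric cipher such as AES-256 for the bulk data.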

Asymmetric cryptography relies on mathematical problems that are easy to compute in one direction but difficult to reverse without secret knowledge (a one-way function). Think of a house door: it is easy to open with a key but hard to get in without it. Beyond that idea, a few basic principles underlie modern encryption technologies.

In an asymmetric-key procedure, the key for encryption differs from the key for decryption. Just imagine a treasure chest with a two-part key. One part of the key, the public key, can close the box, and the other part, the private key, can open it. For instance, Alice can send open boxes together with the public key to Bob. Bob puts in a message and uses the public key to close the box. Once it is locked, nobody but Alice, who holds the private key, can open the box.

Now, imagine a similar trick in the digital world using a two-part key or a “public-private key pair.” If Alice wants to receive an encrypted message from Bob, she sends her public key to Bob who can use it to encrypt a message to Alice. Alice can then decrypt it using her private key.

The public key can be made available to everyone and sent via public, unencrypted networks; everybody who wants to send a message to Alice can use it. The private key is required for decryption but is never shared, ensuring that Alice is the only person who can decrypt the message.
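The roles of the two keys can be sketched with textbook RSA, the algorithm discussed below. The numbers are the well-known toy example with the primes 61 and 53; they are far too small for real use, and the sketch omits the padding that real RSA encryption requires, so treat it only as an illustration of who holds which key.

```python
# Textbook RSA with the classic toy primes: illustration only
# (tiny key, no padding; real RSA uses 2,048+ bit keys and OAEP padding).
p, q = 61, 53
n = p * q                # public modulus: 3233
phi = (p - 1) * (q - 1)  # 3120, kept secret
e = 17                   # public exponent, coprime to phi
d = pow(e, -1, phi)      # private exponent via modular inverse (Python 3.8+): 2753

public_key = (n, e)      # Alice may publish this
private_key = (n, d)     # Alice never shares this


def apply_key(value: int, key: tuple[int, int]) -> int:
    """Textbook RSA operation: value**exponent mod modulus (encrypt or decrypt)."""
    modulus, exponent = key
    return pow(value, exponent, modulus)


message = 65
ciphertext = apply_key(message, public_key)     # Bob encrypts with Alice's public key
recovered = apply_key(ciphertext, private_key)  # only Alice's private key reverses it
print(ciphertext, recovered)  # 2790 65
```

Anyone who could factor `n` back into 61 and 53 could recompute `d`, which is exactly why the difficulty of factorization matters.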

The best of both worlds

Encryption procedures for terabytes of data have to be as fast as possible; therefore, common encryption technology combines secure key establishment via asymmetric methods with subsequent symmetric encryption. A typical symmetric method is AES, which always uses 128-bit blocks and nowadays typically 256-bit keys. AES has been approved by U.S. authorities for top-secret information. At present, there is no known practical attack that would allow someone without knowledge of the key to read data encrypted by a correctly implemented AES.
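Why a 256-bit key puts brute force out of reach can be checked with a short calculation. The attack rate of 10^18 keys per second is a hypothetical, deliberately generous assumption, not a measured figure; on average, an exhaustive search succeeds after trying half of the key space.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
KEYS_PER_SECOND = 1e18  # hypothetical attacker speed (assumption)


def expected_brute_force_years(key_bits: int) -> float:
    """Average years to find a key by exhaustive search (half the key space)."""
    return 2 ** (key_bits - 1) / KEYS_PER_SECOND / SECONDS_PER_YEAR


for bits in (128, 256):
    print(f"AES-{bits}: about {expected_brute_force_years(bits):.1e} years")
# AES-128: about 5.4e+12 years
# AES-256: about 1.8e+51 years
```

Even under this generous assumption, every additional key bit doubles the search time, which is why attacks focus on the key exchange rather than on AES itself.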

A typical standard for asymmetric encryption is RSA, named after its inventors Rivest, Shamir, and Adleman. The algorithm was published in 1977 and relies on the mathematical problem of factoring large numbers into primes. While it is easy to calculate the product of two large primes, it is difficult to derive the factors from the product. Currently, typical RSA key sizes are between 2,048 and 4,096 bits. The full RSA algorithm is more complex and is combined with other methods, but this introduction shows why factorization is such an important problem.
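The asymmetry between multiplying and factoring is visible even with small numbers. The sketch multiplies two primes in a single operation but has to search for the factors by trial division, whose work grows with the square root of the product. Trial division is the simplest possible method and is used here only for illustration; real attacks use far better algorithms such as the general number field sieve, which are still infeasible against 2,048-bit keys on classical computers.

```python
import math


def trial_division(n: int) -> tuple[int, int, int]:
    """Return (smallest factor, cofactor, number of trial divisions) for composite n."""
    steps = 0
    for candidate in range(2, math.isqrt(n) + 1):
        steps += 1
        if n % candidate == 0:
            return candidate, n // candidate, steps
    raise ValueError("n is prime")


p, q = 1_000_003, 1_000_033  # two primes just above one million
n = p * q                    # multiplying: a single operation
factors = trial_division(n)  # factoring: about a million divisions
print(n, factors)
```

Each additional decimal digit in the factors multiplies the trial-division work by about ten, while the multiplication stays essentially instantaneous.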

Security strength is a typical parameter for comparing different methods. According to the National Institute of Standards and Technology (NIST), 2048-bit RSA offers a security strength of about 112 bits, whereas AES-256 offers 256 bits. Increasing the RSA key size does not help much, as 3072-bit RSA still offers only 128 bits. In such combinations, the asymmetric part is therefore the weakest link.
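The weakest-link argument can be written as a simple lookup. The table holds the comparable-strength values from NIST SP 800-57 Part 1 for the key sizes discussed here; the function name and table layout are illustrative. A combined system is only as strong as its weakest component, so the function returns the minimum.

```python
# Security strength in bits (NIST SP 800-57 Part 1 comparable strengths).
SECURITY_BITS = {
    "AES-128": 128,
    "AES-256": 256,
    "RSA-2048": 112,
    "RSA-3072": 128,
    "RSA-7680": 192,
    "RSA-15360": 256,
}


def system_strength(*components: str) -> tuple[int, str]:
    """Return (strength, weakest component) of a combined system."""
    weakest = min(components, key=SECURITY_BITS.__getitem__)
    return SECURITY_BITS[weakest], weakest


print(system_strength("AES-256", "RSA-2048"))  # (112, 'RSA-2048')
print(system_strength("AES-256", "RSA-3072"))  # (128, 'RSA-3072')
```

Matching AES-256 would require 15,360-bit RSA keys, which illustrates why simply growing RSA keys is not an attractive option.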

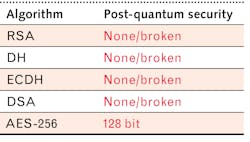

What a big-scale quantum computer changes

So far, encryption safety relies on the assumption that finding a key through brute-force attempts takes too much time. With a sufficiently large quantum computer running dedicated algorithms, the security strength of cryptographic algorithms can be reduced. Lov Grover and Peter Shor published algorithms that could weaken various cryptosystems once such a quantum computer is available. Symmetric encryption is only partially affected: Grover's search algorithm roughly halves the effective key length, so AES-256 would still offer about 128 bits of security. Asymmetric encryption as used today, like RSA or Diffie-Hellman, would be broken by Shor's algorithm (see Fig. 2).

‘Harvest now and decrypt later’

Since affordable data storage capacity is readily available these days, well-resourced agencies can pursue a policy of “harvest now and decrypt later,” storing large amounts of encrypted data today in the hope of decrypting it in the future. Quantum computers are expected to become game-changers with their superior power to solve particular mathematical problems. A scientific paper published in 2019 described how 2048-bit RSA could be broken in about 8 hours, assuming a quantum computer with 20 million qubits.
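Why harvested traffic is at risk can be estimated by adjusting the classical strengths for the two quantum algorithms named above. The sketch uses a common rule of thumb, an assumption rather than a precise cost model: Grover's algorithm roughly halves the bit strength of a symmetric key, while Shor's algorithm breaks RSA and Diffie-Hellman outright (shown as 0 bits). It ignores error-correction overhead and other real-world costs.

```python
# Classical security strength in bits (NIST SP 800-57 Part 1).
CLASSICAL_BITS = {"AES-128": 128, "AES-256": 256, "RSA-2048": 112, "RSA-3072": 128}


def quantum_strength(algorithm: str) -> int:
    """Rough post-quantum strength under a simplified model (assumption)."""
    if algorithm.startswith("AES"):
        return CLASSICAL_BITS[algorithm] // 2  # Grover: square-root speed-up of key search
    if algorithm.startswith(("RSA", "DH")):
        return 0  # Shor: factoring and discrete logarithms in polynomial time
    raise ValueError(f"unknown algorithm: {algorithm}")


# A recorded session: RSA-2048 key establishment protecting AES-256 data.
session = ("AES-256", "RSA-2048")
print({alg: quantum_strength(alg) for alg in session})  # {'AES-256': 128, 'RSA-2048': 0}
print(min(quantum_strength(alg) for alg in session))    # 0
```

The bulk data would remain well protected by AES-256, but an attacker who breaks the recorded RSA key exchange recovers the AES key and, with it, the data.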

Cryptography vs. quantum computer development

For quantum-safe cryptography, two approaches are possible: 1) physics-based quantum key distribution (QKD) and 2) stronger, quantum-resistant asymmetric encryption.

For the first approach, a number of suppliers have developed QKD devices. The main principle is to transmit information with very weak light pulses, ideally single photons. Well-known protocols such as BB84 can be used to establish common secrets on both sides of the link. Tapping or altering those signals disturbs the quantum states and destroys the information they carry, and the system can detect possible attacks from the resulting error statistics. However, these systems are complex to operate and costly, and they are usually restricted to unamplified optical transmission, which limits the transmission distance to some tens of kilometers. QKD systems also require a separate, dedicated fiber for maximum performance.
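The statistical attack detection can be illustrated with a classical simulation of BB84 sifting. This is a simplified Python sketch, assuming ideal single photons, a noiseless channel, and an intercept-resend eavesdropper who measures every photon in a randomly chosen basis; it does not model real optics or the later error-correction and privacy-amplification steps. Without an eavesdropper, the sifted bits agree perfectly; with one, roughly a quarter of them disagree, which Alice and Bob notice by comparing a sample.

```python
import random


def measure(bit: int, prep_basis: int, meas_basis: int, rng: random.Random) -> int:
    """Ideal measurement: the same basis returns the bit, the other basis a random result."""
    return bit if prep_basis == meas_basis else rng.randint(0, 1)


def bb84_error_rate(n_photons: int, eavesdrop: bool, seed: int = 1) -> float:
    """Fraction of sifted bits where Bob's result differs from Alice's bit."""
    rng = random.Random(seed)
    errors = sifted = 0
    for _ in range(n_photons):
        bit, alice_basis = rng.randint(0, 1), rng.randint(0, 1)
        photon_bit, photon_basis = bit, alice_basis
        if eavesdrop:
            # Eve measures in a random basis and re-sends what she observed.
            eve_basis = rng.randint(0, 1)
            photon_bit = measure(photon_bit, photon_basis, eve_basis, rng)
            photon_basis = eve_basis
        bob_basis = rng.randint(0, 1)
        bob_bit = measure(photon_bit, photon_basis, bob_basis, rng)
        if bob_basis == alice_basis:  # sifting: keep only positions with matching bases
            sifted += 1
            errors += bob_bit != bit
    return errors / sifted


print(bb84_error_rate(20_000, eavesdrop=False))  # 0.0
print(bb84_error_rate(20_000, eavesdrop=True))   # close to 0.25
```

The 25 % figure follows from Eve choosing the wrong basis half of the time and Bob then getting a random result half of the time.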

For stronger asymmetric encryption, “Post-Quantum Cryptography” (PQC) algorithms such as the McEliece algorithm have been proposed. McEliece uses a public key matrix that is about 1,000 times larger than a regular RSA key. So far, no efficient quantum attack on McEliece is known, so it is considered secure against quantum computers.
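The size ratio can be checked from the parameters of Classic McEliece, the McEliece variant submitted to NIST's standardization process. In that scheme, the public key is the non-identity part of a systematic binary matrix with m·t rows and n − m·t columns. The sketch assumes the parameter sets mceliece348864 (n = 3488, m = 12, t = 64) and mceliece8192128 (n = 8192, m = 13, t = 128) and compares them with the 256-byte modulus of an RSA-2048 public key, ignoring the small public exponent.

```python
def mceliece_public_key_bytes(n: int, m: int, t: int) -> int:
    """Public key size of Classic McEliece: an (m*t) x (n - m*t) binary matrix."""
    rows = m * t
    cols = n - rows
    return rows * cols // 8


RSA_2048_MODULUS_BYTES = 2048 // 8  # 256 bytes

for name, params in {"mceliece348864": (3488, 12, 64),
                     "mceliece8192128": (8192, 13, 128)}.items():
    size = mceliece_public_key_bytes(*params)
    print(f"{name}: {size:,} bytes, ~{size / RSA_2048_MODULUS_BYTES:,.0f}x RSA-2048")
# mceliece348864: 261,120 bytes, ~1,020x RSA-2048
# mceliece8192128: 1,357,824 bytes, ~5,304x RSA-2048
```

The smallest parameter set lands at roughly 1,000 times the RSA-2048 modulus; sets targeting higher security levels are larger still.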

The quantum threat to cryptography has led to major efforts to prepare standards for PQC. In the U.S., encryption standards are provided by NIST, most notably the Federal Information Processing Standards (FIPS), which specify requirements for cryptographic modules. Typical standards belong to the FIPS 140 series, where FIPS 140-3 represents the latest security requirements for cryptographic modules. Details of the AES procedure are specified in FIPS 197.

In October 2021, NIST added a schedule to the post-quantum roadmap of the U.S. Department of Homeland Security (DHS). According to this schedule, NIST will publish post-quantum cryptography standards by 2024 at the latest. Until then, the roadmap foresees a review of existing cryptographic technologies and of the data to be secured. It expects a quantum computer capable of cracking existing keys to be developed by 2030.
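The urgency behind such a schedule can be expressed with a simple inequality often attributed to Michele Mosca: data recorded today is at risk if the number of years it must stay confidential plus the years needed to migrate to quantum-safe encryption exceeds the years until a code-cracking quantum computer exists. The sketch plugs in the 2030 estimate quoted above and assumes 2022 as the current year; the shelf-life and migration figures are hypothetical examples.

```python
CURRENT_YEAR = 2022  # assumption: the article's vantage point
QUANTUM_YEAR = 2030  # roadmap estimate for a code-cracking quantum computer


def harvest_risk(shelf_life_years: int, migration_years: int) -> bool:
    """Mosca-style check: True if harvested data may be decrypted while still sensitive."""
    years_to_quantum = QUANTUM_YEAR - CURRENT_YEAR
    return shelf_life_years + migration_years > years_to_quantum


examples = {
    "medical records": (25, 3),       # hypothetical shelf life and migration time
    "one-time session data": (1, 3),
}
for label, (shelf, migrate) in examples.items():
    print(label, harvest_risk(shelf, migrate))
# medical records True
# one-time session data False
```

Long-lived data is thus already exposed to harvesting today, well before a quantum computer exists.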

The researchers of IBM’s quantum computing division published their quantum computing roadmap in September 2020. They planned to build a first quantum computer with more than 1,000 qubits as early as 2023. In November 2021, they broke the 100-qubit barrier with their 127-qubit “Eagle” processor. They confirmed the goal of 1,000 qubits by the end of 2023 and say they are on track with their plans for ‘frictionless’ quantum computing in 2025. Assuming a tenfold increase in qubit numbers every two years, it would take about eight more years after 2025 to reach a code-cracking quantum computer.
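The extrapolation can be reproduced in a few lines. The sketch assumes, as the text does, a tenfold increase every two years, starts from 1,000 qubits, and takes the 20 million qubits of the 2019 estimate as the target. Real progress will also depend on qubit quality and error correction, not just on qubit counts.

```python
import math


def years_to_reach(start_qubits: float, target_qubits: float,
                   growth_factor: float = 10.0, period_years: float = 2.0) -> float:
    """Years until start * growth_factor**(t / period_years) reaches the target."""
    return period_years * math.log(target_qubits / start_qubits, growth_factor)


years = years_to_reach(1_000, 20_000_000)
print(f"{years:.1f} years")  # 8.6 years
```

Roughly eight to nine years of growth from the 1,000-qubit level points to the early 2030s.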

Post-quantum cryptography (PQC)

Building modern and secure data transmission encryption systems is one thing, but there is more to be taken into account. The following principles summarize what it takes to help governments and industry build trustworthy and easy-to-operate networks:

Trust. Both the technology and the manufacturer must be reliable. Third-party evaluation of hardware and software provides evidence of sound design and implementation.

Automation. Provisioning encrypted data transmission services should be intuitive and as easy as deploying non-encrypted services.

Balance. There must be a balance between security and operational aspects (costs).

Agility. Hardware and software must be upgradeable to counter future threats.

European telecommunications vendor ADVA has been developing encryption technology for about 15 years. It started with encryption transponders for banks and governmental institutions in the early 2010s; later, cloud providers adopted ADVA products as well. In 2014, ADVA presented its first 100G encryption unit. Encryption was then applied to ever faster systems (2017: 200G; 2021: 400G), and the systems were certified according to standards of the German Federal Office for Information Security (BSI) and according to FIPS 140-2.

In December 2021, ADVA received approval from the German BSI for classified data transport for the first optical transport module with Post-Quantum Cryptography (PQC; see Fig. 3). A typical BSI approval process goes beyond the purely technical specifications defined by FIPS. The BSI demands security checks of people and processes in the development and production of cryptographic technology to ensure that no backdoors are introduced into an otherwise secure technology.

ADVA has developed a solution with built-in PQC. The communication systems (FSP 3000) on both sides encrypt the data on OSI layer 1 and transmit nothing but ciphertext, a stream of completely encrypted bits and bytes. The systems have built-in support for symmetric AES-256 encryption and use the quantum-safe McEliece algorithm together with traditional Diffie-Hellman for key exchange; alternatively, they can use key material from an external QKD device. All algorithms can be changed without replacing the hardware, so the systems are prepared to counter future threats.
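How several key sources can be combined is not described here, so the following is an assumption rather than ADVA's implementation. A common hybrid construction feeds every available shared secret into a key-derivation function, so that, under standard assumptions about the KDF, the resulting AES-256 key stays secret as long as at least one input does. The sketch implements HKDF (RFC 5869) with Python's `hmac` module; the secrets are random placeholders standing in for the Diffie-Hellman, McEliece, and optional QKD outputs, and `combine_secrets` and the configuration flag are hypothetical.

```python
import hashlib
import hmac
import os


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF with SHA-256 as in RFC 5869: extract a PRK, then expand it."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()
    okm, block = b"", b""
    for counter in range(1, -(-length // 32) + 1):  # ceil(length / 32) blocks
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
    return okm[:length]


def combine_secrets(secrets_by_source: dict[str, bytes], salt: bytes) -> bytes:
    """Hypothetical combiner: derive one AES-256 key from every available secret."""
    names = sorted(secrets_by_source)  # both ends must use the same order
    # Length-prefix each secret so the concatenation is unambiguous.
    material = b"".join(len(secrets_by_source[k]).to_bytes(2, "big") + secrets_by_source[k]
                        for k in names)
    info = b"link-key|" + b"|".join(k.encode() for k in names)
    return hkdf_sha256(material, salt, info)


# Random placeholders for the outputs of the individual key-establishment steps.
sources = {"dh": os.urandom(32), "mceliece": os.urandom(32)}
qkd_device_attached = True  # hypothetical configuration flag
if qkd_device_attached:
    sources["qkd"] = os.urandom(32)

salt = os.urandom(16)  # may be exchanged in clear
link_key = combine_secrets(sources, salt)
print(len(link_key) * 8)  # 256
```

Because the combiner only sees byte strings, replacing McEliece or adding another source changes the inputs but not the structure, which is one way to realize the algorithm agility described above.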

The BSI approved technology will be applied in a range of ADVA products that will enter the market in the first months of 2022. Further developments will be driven by ADVA’s brand-new security unit, ANS (ADVA Network Security), based in Berlin, Germany.

Road ahead

According to IBM’s roadmap for the development of quantum computers, operational quantum computers with more than 1,000 qubits should arrive by 2025. In the early 2030s, they could be able to break conventional asymmetric encryption. This puts severe pressure on the development of post-quantum encryption technology for professional applications and large data volumes. ADVA has developed a first unit that supports both QKD-supplied keys and post-quantum asymmetric key exchange. Given the agility of the encryption design, it can be upgraded when needed. It remains to be seen how fast quantum computers will catch up in the race between encryption strength and computing power.

Uli Schlegel | Senior Director, Product Management Network Security at ADVA

Uli Schlegel is ADVA’s technical expert for network security and encryption. He has over 18 years of experience in wavelength-division multiplexing (WDM) technology and optical networking systems. His work has mainly focused on enterprise networks, solutions for storage, and server connectivity. Currently, he is driving product development for storage networking, on-the-fly encryption, and various security topics spanning the company’s product lines.

Andreas Thoss | Contributing Editor, Germany

Andreas Thoss is the Managing Director of THOSS Media (Berlin) and has many years of experience in photonics-related research, publishing, marketing, and public relations. He worked with John Wiley & Sons until 2010, when he founded THOSS Media. In 2012, he founded the scientific journal Advanced Optical Technologies. His university research focused on ultrashort and ultra-intense laser pulses, and he holds several patents.