Software turns spotlight on illumination design

While both imaging and illumination design problems depend upon the same basic principles of optical physics, the overall nature of illumination problems tends to make them much more calculation-intensive. With computer speeds having increased by about four orders of magnitude during the past four decades, software developers are finally entering an era in which capabilities similar to those that automate the number-crunching aspects of imaging design are becoming available for a burgeoning array of illumination design applications.

Both imaging and illumination systems are often analyzed using ray-tracing software, according to Bill Cassarly, a principal of Optical Research Associates (Pasadena, CA). But a primary difference between the two is that imaging systems generally map a defined source image onto a target, while illumination systems generally seek to create light distributions that may have no direct relationship to the light source (see Fig. 1).

"Basically we're looking at point images and at various ways of assessing the quality of our point images, and in an ideal world of course we take a point image into a point," said Richard Pfisterer, president of Photon Engineering (Tucson, AZ) in describing the general imaging design problem. "And our image would be the collection of all of those points that emerge together. In an illumination system we are not generally interested in a point— in fact it is quite the opposite."

Illumination problems generally involve uniformity of rays or intensity, he said. "If we're looking at a spotlight we might be interested in having even intensity over the entire area. Or in the case of irradiance, we might be looking at optimizing power at some locations. So it's very much apples and oranges. We are asking for different figures of merit. We are asking for different criteria that we're going to use to say whether this illumination system is good or bad."
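To make the idea of "different figures of merit" concrete, the following is a minimal Python sketch of how uniformity might be scored on a sampled irradiance map. The function names, the 3 x 3 detector grid, and the specific metrics (minimum-to-average ratio, minimum-to-maximum ratio, RMS nonuniformity, and a squared-error merit against a flat target) are illustrative assumptions, not the criteria used by any particular illumination program.

```python
from statistics import mean, pstdev


def uniformity_metrics(irradiance):
    """Return simple uniformity figures for a 2D grid of irradiance samples."""
    samples = [value for row in irradiance for value in row]
    avg = mean(samples)
    return {
        "min_over_avg": min(samples) / avg,
        "min_over_max": min(samples) / max(samples),
        # Standard deviation relative to the mean; 0 means perfectly flat.
        "rms_nonuniformity": pstdev(samples) / avg,
    }


def uniformity_merit(irradiance, target):
    """Sum of squared deviations from a flat target level; lower is better."""
    return sum((value - target) ** 2 for row in irradiance for value in row)


# Hypothetical detector grid in arbitrary units: bright center, dim corners.
grid = [
    [0.8, 1.0, 0.8],
    [1.0, 1.2, 1.0],
    [0.8, 1.0, 0.8],
]
metrics = uniformity_metrics(grid)
merit = uniformity_merit(grid, target=1.0)
```

An imaging merit function would instead penalize the spread of rays around each ideal image point, which is why the two kinds of problems are hard to compare directly.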

Another common design wrinkle in illumination problems is that the detection system in many cases is the human visual system, which often introduces the subjective criterion of whether or not an illumination pattern is pleasing to the eye. "Imaging usually is driven by technical aspects, but illumination, while driven by technical aspects, a lot of times is also driven by marketing aspects like, What do those taillights on your car look like? What do those headlights look like?" said John Koshel, manager of the optical design group at Breault Research Organization (Tucson, AZ).

"When you're designing a system you have to understand what the detector is," Koshel said. "With illumination systems it's typically the human eye. However the eye just detects the radiation and the brain processes it. So you have to worry about how people perceive illumination."

For example, given both incandescent and fluorescent sources providing similar illumination, people tend to prefer the "warm" lighting and improved color rendering of incandescence. The limitations of the human visual system can also simplify design problems. "We do not respond to very slow variations in illumination so there is no reason to overspecify the uniformity of illumination," Koshel said. "If light broadcast on a wall is varying over the whole extent by 50% the human observer will barely see it."

These types of issues are growing in importance as interest increases in nonimaging optical design applications that range from bar-code scanners to theatrical lighting and solar simulators, according to Pfisterer. "These aren't classic lens design problems," Pfisterer said. "So we have to do different things now. That is why you're seeing tools like LightTools (Optical Research Associates), TracePro (Lambda Research; Littleton, MA), ASAP (Breault Research Organization; Tucson, AZ), or our program FRED."

Optimizing illumination design

"What's happening in the industry is that some companies, like Optical Research Associates, are doing some illumination optimization, and we're all very interested to see how successful this is," Pfisterer said. "We don't have any experience with it yet because it's a brand-new tool. But people who don't have that tool are looking for other ways of doing it."

Optimization for imaging design became a standard in the late 1960s, Cassarly said. "You get optimization as part of the imaging design process," he said. "But illumination design has been lagging for many years, primarily because the default method of analyzing illumination systems uses Monte Carlo simulations." The more complex illumination geometries are likely to be specified by a 10-Mbyte CAD file rather than the 15-line lens deck that might specify an imaging system.
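The cost of the Monte Carlo approach can be illustrated with a toy example. The sketch below is an assumption-laden Python illustration, not the method of any commercial package: it launches random rays from a small Lambertian emitter at the origin, bins their landing points on a square target plane, and reports the statistical noise in a bin. The function names, geometry, bin count, and ray count are all hypothetical.

```python
import math
import random


def trace_lambertian(n_rays, distance=1.0, half_width=1.0, bins=5, seed=0):
    """Monte Carlo hit counts on a bins x bins grid at z = distance."""
    rng = random.Random(seed)
    counts = [[0] * bins for _ in range(bins)]
    for _ in range(n_rays):
        # Cosine-weighted (Lambertian) sampling: sin^2(theta) is uniform.
        u = rng.random()
        sin_t = math.sqrt(u)
        cos_t = math.sqrt(1.0 - u)  # strictly positive because u < 1
        phi = 2.0 * math.pi * rng.random()
        # Intersect the ray with the target plane.
        r = distance * sin_t / cos_t
        x, y = r * math.cos(phi), r * math.sin(phi)
        if abs(x) >= half_width or abs(y) >= half_width:
            continue  # ray misses the target
        i = min(bins - 1, int((x + half_width) / (2.0 * half_width) * bins))
        j = min(bins - 1, int((y + half_width) / (2.0 * half_width) * bins))
        counts[j][i] += 1
    return counts


def relative_noise(count):
    """Approximate relative statistical error of a bin holding `count` rays."""
    return 1.0 / math.sqrt(count) if count > 0 else float("inf")


counts = trace_lambertian(20000)
center_noise = relative_noise(counts[2][2])
corner_noise = relative_noise(counts[0][0])
```

Because the relative noise in a bin falls only as one over the square root of the number of rays it collects, halving the noise takes roughly four times as many rays. An optimizer that must compare many candidate designs pays that price on every evaluation.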

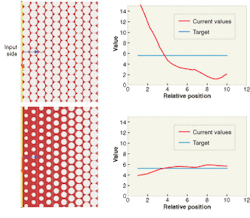

Improvements in algorithms and design subtleties are helping to make optimization for illumination design a reality, Cassarly said. For instance, parameterization strategies have been developed to reduce the number of variables needed to describe complex shapes from, say, 10,000 points within a CAD file to a relatively small number of variables, say between 10 and 100. Another strategy for reducing variables involves taking advantage of periodic or chirped patterns in lens arrays or backlit displays (see Fig. 2).

"But to be honest, not to take too much from illumination software developers, the primary thing that has changed is that the software community is benefiting from faster computers," Cassarly said. "You can sit there and do parametric studies, and you can vary one parameter from 0 to 1 by steps of 0.1, and you can do that for two or three or four variables," he said. "But at some point you just get buried in the details of looking at all of the different cases.

"So as someone who has done illumination design for many years, it's absolutely wonderful to finally be at a time frame when you don't have to sit there and do all of this tedious little work. You can actually let the computer crunch it away for you."

Despite the impressive progress that is taking place, illumination design remains in its very early stages, Pfisterer said. "There's nothing elegant with what we're doing right now. Eventually there will be. Eventually we are going to develop all of these new techniques. There will be a combination of faster computers and techniques for very quickly analyzing how successful a reflector is going to be or how successful a lenslet array is going to be."
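The brute-force parametric study Cassarly describes, stepping each parameter from 0 to 1 in increments of 0.1, shows why manual exploration stalls after a handful of variables. The short Python sketch below simply enumerates that grid; the function names are hypothetical, and the code illustrates the arithmetic rather than any vendor's optimizer.

```python
from itertools import product


def parameter_grid(n_variables, steps=10):
    """Yield every combination of n_variables values stepped from 0 to 1."""
    values = [k / steps for k in range(steps + 1)]  # 0.0, 0.1, ..., 1.0
    return product(values, repeat=n_variables)


def count_cases(n_variables, steps=10):
    """Number of designs in the full grid: (steps + 1) ** n_variables."""
    return (steps + 1) ** n_variables


# Cases to evaluate for one to four variables.
case_counts = {n: count_cases(n) for n in (1, 2, 3, 4)}
# case_counts == {1: 11, 2: 121, 3: 1331, 4: 14641}
```

Each of those cases needs its own ray trace, so four variables already mean more than 14,000 simulations, and a 10-variable parameterization stepped the same way would require 11 to the 10th power, or roughly 26 billion.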

One thing that won't change even with improvements in number-crunching capabilities, however, will be the need for the designer of imaging and nonimaging systems to adapt the technical solutions to the overall nontechnical requirements.

When George Eastman was designing the imaging system for what turned out to be a very popular consumer camera with a singlet lens, he knew that the best technical design would place the aperture out in front of the singlet, "but he realized that there was a marketing issue," Koshel said. "People would not buy a camera where they could not see the lens readily. With that little aperture in front, they might have thought at that time that they were buying a pinhole camera. So his marketing ploy was to put the lens out in front. It wasn't going to work as well as it might have, but it sold a lot more."

Hassaun A. Jones-Bey | Senior Editor and Freelance Writer

Hassaun A. Jones-Bey was a senior editor and then freelance writer for Laser Focus World.