Efficient design of image pixel sensors improves performance

RICARDO BORGES and RYAN SCHNEIDER

Click, snap, hum. You just took a picture of that mountain vista or a digital video of baby’s first steps. In a symbiotic twist of engineering, the graphics processing unit (GPU) technology—which consumers continue to drive to new heights for “visualization”—is now being applied to the image pixel-sensor technology that captures most of those visuals. The result is a 20× increase of the finite-difference time-domain (FDTD) solver performance, allowing more efficient design and manufacturing of higher-resolution devices and faster time-to-market for image-sensor products. In the very near future, when your digital camera’s megapixels increase, as they’re bound to do, modeling software and computers employing massively parallel processors (including GPUs) may have had something to do with it.

Over the past two decades, a quiet revolution in solid-state imaging has occurred: image sensors built on complementary-metal-oxide-semiconductor (CMOS) technology have permeated mainstream consumer products such as mobile phones and PC cameras. By leveraging the economies of scale of CMOS technology, along with the integration of signal amplification and processing circuitry on the same chip, CMOS image sensors (CISs) now represent a growing, $4 billion annual market. As in digital CMOS, CISs have benefited from the continual scaling of device feature sizes yielding higher pixel count with each new generation.

Yet shrinking dimensions have brought significant technical challenges, prompting designers to rely increasingly on simulation to understand and overcome them. From an optics perspective, modern pixel dimensions, hovering around 2 to 3 µm on a side, are now diffraction-limited and therefore require rigorous electromagnetic simulations. Furthermore, as pixel sizes shrink, photogenerated carriers can wander into nearby pixels, leading to crosstalk. The impact of manufacturing variations on performance is also becoming critical as pixel dimensions decrease: the position of the microlens, which is susceptible to manufacturing variations, needs to be assessed, as does sensor performance over a range of light incidence angles.

These design and manufacturing considerations have motivated the increased use of Technology Computer-Aided Design (TCAD) in CIS design, encompassing the propagation of light through the microlens, color filter, and dielectric tunnel; light absorption in the photodiode; and the subsequent transient buildup of the photodiode charge and its conversion to output voltage. The optical analyses typically require full-wave solutions of Maxwell’s equations on state-of-the-art computers to correctly model diffraction and interference effects. These analyses, on single- and multipixel designs, are supported by the TCAD Sentaurus software tools, including the Sentaurus Device Electromagnetic Wave (EMW) solver, which uses the FDTD method. The physical insight, design optimization, and manufacturability assessment enabled by TCAD provide designers with an effective tool for reducing development time and producing higher-performance products. The value of simulation, however, is inextricably linked to how quickly complex cases can be analyzed.

Too-long runtimes

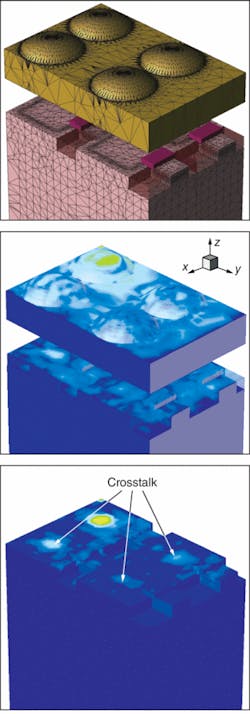

Rigorous electromagnetic analyses can easily take hours or even days on today’s top-end, multicore workstations and servers. A single pixel is decomposed into millions of smaller cubes, which represent the behavior of the light in that three-dimensional region. Each cube solves the local physical equations, performing numerous mathematical calculations, and all cubes are updated for a given instant in time. This whole process is repeated to model the behavior as the light propagates through the pixel over time.
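The update cycle described above can be illustrated with a minimal one-dimensional sketch. It is not the Sentaurus solver: the function name `fdtd_1d`, the normalized units, the Courant number of 0.5, the grid size, and the Gaussian source are all assumptions chosen for illustration. A real pixel simulation is three-dimensional, includes material properties, and uses absorbing boundaries.

```python
import math

def fdtd_1d(num_cells=200, num_steps=150, source_cell=50):
    """Leapfrog update of a 1D grid in normalized units (hypothetical setup)."""
    ez = [0.0] * num_cells   # electric field, one value per cell
    hy = [0.0] * num_cells   # magnetic field, offset half a cell from ez
    courant = 0.5            # assumed ratio of time step to cell size
    for step in range(num_steps):
        # Every magnetic-field cell is updated from its two electric neighbors.
        for k in range(num_cells - 1):
            hy[k] += courant * (ez[k + 1] - ez[k])
        # Every electric-field cell is updated from its two magnetic neighbors.
        # ez[0] is never touched, so it acts as a reflecting wall.
        for k in range(1, num_cells):
            ez[k] += courant * (hy[k] - hy[k - 1])
        # Inject a Gaussian pulse of light at one cell (additive source).
        ez[source_cell] += math.exp(-((step - 30) / 10.0) ** 2)
    return ez

if __name__ == "__main__":
    field = fdtd_1d()
    peak = max(range(60, len(field)), key=lambda k: abs(field[k]))
    print("right-going pulse is near cell", peak)
```

Each pass through the outer loop is one instant in time; the inner loops touch every cell once. In three dimensions the same pattern applies to six field components over millions of cells, which is where the runtime goes.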

To investigate and optimize for crosstalk and pixel sensitivity, designers need to simulate more than a single pixel—typically, simulation is done on a 2 × 2 or a 3 × 3 array (four or nine times the size of a single pixel, respectively; see figure). This increase in simulation size compounds the runtime even further. For a higher-performance, more-efficient pixel design, designers will also consider running multiple scenarios (incidence angles, microlens placement, and so on), as well as computer-aided optimization. Hundreds to thousands of runs may be required.
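A rough way to see how these factors multiply is the back-of-the-envelope sketch below. The function `workload` and every number passed to it (cells per pixel, time steps, number of runs) are hypothetical placeholders, not figures from any real design.

```python
def workload(cells_per_pixel, array_side, time_steps, runs):
    """Return (grid cells, total cell updates) for an array_side x array_side pixel array."""
    cells = cells_per_pixel * array_side ** 2   # 2 x 2 -> 4x, 3 x 3 -> 9x the cells
    updates = cells * time_steps * runs         # every cell is updated at every time step
    return cells, updates

if __name__ == "__main__":
    # Assumed values for illustration only.
    for side in (1, 2, 3):
        cells, updates = workload(2_000_000, side, 10_000, 500)
        print(f"{side} x {side}: {cells:,} cells, {updates:.1e} cell updates")
```

Under these assumed numbers, moving from one pixel to a 3 × 3 array with a 500-run sweep raises the work from 2 × 10^10 cell updates for a single run to 9 × 10^13 in total.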

The final challenge is that the CIS sector is highly competitive—companies that are third or fourth to market will likely lose money on their product. Given the simulation constraints described above, designers are forced to compromise and do the best they can within the schedules they have.

Parallel computing

Finite-difference time-domain electromagnetic-wave software should enable CIS designers to express their design sweep parameters and model the impact of manufacturing tolerances. For the numerical solution of a given pixel simulation, a computational solver can, and should, deliver 20× performance gains over traditional high-end computers.

These large performance gains are achieved in two ways: through parallel computing and through the use of high-performance GPUs, which are especially suited to computationally intensive applications. In parallel computing, the application is broken up into many tasks or streams, which are solved in parallel with each other before the results are collated. Not only do the tasks run in parallel, they also run on different processors, each on the type to which it is best suited. Today, GPUs from NVIDIA (Santa Clara, CA) offer both higher memory bandwidth and more floating-point-capable “cores” than numerous alternatives; coupled with economies of scale, this makes them the best price-performance option for numerically and computationally intensive algorithms such as image-pixel-sensor physics.
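The split, solve, and collate pattern can be sketched in a few lines using Python's standard `concurrent.futures` module. The helper names `split`, `solve_chunk`, and `solve_in_parallel` are hypothetical, and squaring each value is only a stand-in for the real numerical work.

```python
from concurrent.futures import ThreadPoolExecutor

def split(values, num_tasks):
    """Break the input into contiguous chunks, at most one per task."""
    size = (len(values) + num_tasks - 1) // num_tasks
    return [values[i:i + size] for i in range(0, len(values), size)]

def solve_chunk(chunk):
    """Placeholder for the numerical work done on one chunk."""
    return [x * x for x in chunk]

def solve_in_parallel(values, num_tasks=4):
    chunks = split(values, num_tasks)
    with ThreadPoolExecutor(max_workers=num_tasks) as pool:
        # map() hands one chunk to each worker and returns results in input order.
        partial = list(pool.map(solve_chunk, chunks))
    # Collate the per-task results back into one list.
    return [y for part in partial for y in part]

if __name__ == "__main__":
    print(solve_in_parallel(list(range(10))))
```

This shows only the structure. Python threads do not speed up CPU-bound arithmetic; an accelerated solver would dispatch each chunk to a CPU core or GPU instead.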

One solution to the long-runtime problem is the ClusterInABox Q30, a turnkey, accelerator-equipped workstation configuration that contains proprietary software libraries, four Intel/AMD cores, and four NVIDIA GPUs, all attached to one workstation. The libraries of the ClusterInABox Q30 first divide the problem into eight cooperating tasks. At the next layer, four of those tasks are further divided and optimized to run on the 128 cores of the NVIDIA (G8x series) GPUs. Once a set of results is computed, the various tasks exchange information with each other because each needs boundary information from its neighbors. This process is repeated as the simulation timeline progresses and the light propagates within the pixel structure.
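The boundary exchange between neighboring tasks can be sketched on a one-dimensional grid. This is an illustrative sketch, not the ClusterInABox libraries: the function names, the normalized units, and the Gaussian source are assumptions, and the tasks are stepped one after another here rather than on separate processors. Within each phase the per-task updates are independent, which is what allows them to run concurrently.

```python
import math

def step_h(ez, hy, ez_from_right, courant):
    """Update H in one subdomain; the last cell needs E from the right neighbor."""
    n = len(ez)
    for k in range(n - 1):
        hy[k] += courant * (ez[k + 1] - ez[k])
    if ez_from_right is not None:
        hy[n - 1] += courant * (ez_from_right - ez[n - 1])

def step_e(ez, hy, hy_from_left, courant):
    """Update E in one subdomain; the first cell needs H from the left neighbor."""
    for k in range(1, len(ez)):
        ez[k] += courant * (hy[k] - hy[k - 1])
    if hy_from_left is not None:
        ez[0] += courant * (hy[0] - hy_from_left)

def run_decomposed(num_cells, num_steps, num_tasks, source_cell, courant=0.5):
    size = num_cells // num_tasks          # assumes num_cells divides evenly
    ez = [[0.0] * size for _ in range(num_tasks)]
    hy = [[0.0] * size for _ in range(num_tasks)]
    owner, local = divmod(source_cell, size)
    for step in range(num_steps):
        # Exchange 1: each task receives the first E value of its right neighbor.
        e_halo = [ez[t + 1][0] if t + 1 < num_tasks else None for t in range(num_tasks)]
        for t in range(num_tasks):
            step_h(ez[t], hy[t], e_halo[t], courant)
        # Exchange 2: each task receives the last H value of its left neighbor.
        h_halo = [hy[t - 1][-1] if t > 0 else None for t in range(num_tasks)]
        for t in range(num_tasks):
            step_e(ez[t], hy[t], h_halo[t], courant)
        # Additive Gaussian source, applied by the task that owns the source cell.
        ez[owner][local] += math.exp(-((step - 30) / 10.0) ** 2)
    return [v for block in ez for v in block]

if __name__ == "__main__":
    whole = run_decomposed(200, 150, 1, 50)
    parts = run_decomposed(200, 150, 8, 50)
    print("max difference:", max(abs(a - b) for a, b in zip(whole, parts)))
```

Running the same problem as one task and as eight tasks gives the same field, which is the point of the exchange: each task only ever needs one layer of values from each neighbor per update.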

The impact of the new hardware-accelerated solution is gauged by the “speedup factor” over a software-only simulation. A realistic, modern, one-pixel design for crosstalk assessment fits entirely in the memory of the GPUs and exhibits a speedup factor of 37 times (see table). The larger, four-pixel design has a memory footprint larger than the ClusterInABox Q30 can accommodate at full acceleration: the GPUs effectively must share memory with the main CPU, reducing the speedup factor. Nevertheless, significant speedup factors are still obtained relative to software-only simulations.

The TCAD software and GPU processing solution for accelerating FDTD simulations offers the benefits of parallel computing on a single machine, significantly speeding up electromagnetic analysis of image sensors. Addressing this computational bottleneck enables process and device engineers to expand the use of TCAD in image-sensor design and optimization through virtual experimentation, resulting in shorter design cycles and more robust designs with higher fabrication yields.

Ricardo Borges is senior manager, product marketing, Silicon Engineering Group, Synopsys, 700 East Middlefield Road, Mountain View, CA 94043; and Ryan Schneider is chief technology officer and cofounder of Acceleware, 1600 37th Street SW, Calgary, Alberta, Canada T3C 3P1; www.acceleware.com and www.synopsys.com.