Photonics Applied: Transportation: Datasets accelerate integration of thermal imaging systems into autonomous vehicles

Advanced driver-assistance systems (ADAS) increase vehicle safety through features such as adaptive cruise control, lane departure warning, automatic parking, and collision warning and avoidance (see Fig. 1). Automobile manufacturers rely on advances in ADAS to reach Society of Automotive Engineers (SAE) automation levels 4 (high automation) and 5 (full automation). However, the typical ADAS sensor suite of visible-light cameras, light detection and ranging (lidar) sensors, and radar has shortcomings in darkness, fog, dust, and sun glare. These shortcomings are slowing the development of SAE level 5, fully autonomous vehicles (AVs).

Recent accidents involving ADAS and AV systems highlight the need for improved performance and redundancy of the current sensor suite as well as the convolutional neural networks that perform image-recognition-based data processing. By integrating affordable thermal sensors that are already deployed in more than 500,000 vehicles for nighttime driver warning systems, manufacturers can improve vehicle safety.

Thermal, or longwave-infrared (LWIR), cameras and object detectors can detect and classify pedestrians, vehicles, animals, and cyclists in darkness and through most fog conditions, and they are unaffected by sun glare. Integrating thermal cameras into the ADAS sensor suite improves situational awareness, resulting in a more robust, reliable, and safe ADAS or AV system. However, the additional sensors produce more data, presenting a frustrating processing problem for ADAS and AV developers.

ADAS sensor suite overview

For ADAS to work reliably at SAE levels 4 and 5, three critical technologies are required: 1) the sensors themselves, 2) computing power, and 3) algorithms that deliver intelligence. A suite of sensors (including cameras, lidar, and radar) delivering dynamic data about a vehicle’s surroundings produces a vast amount of data, which requires adequate computing power for high-performance image processing. With graphics processing units (GPUs) performing most of the computations, robust software algorithms are critical to delivering the processed data used for decision making. Among the most popular deep-learning frameworks are TensorFlow and Caffe, and among the most popular convolutional neural networks (CNNs) are Inception, MobileNet, VGG, and ResNet.

As ADAS and AV prototypes hit the road and accumulate miles, the limits of current sensor technologies have become more apparent, sometimes with tragic results. On March 18, 2018, an Uber autonomous development vehicle struck and killed a pedestrian at night. In another incident, a Tesla Model S running its Autopilot system crashed into a truck after failing to distinguish a white tractor-trailer crossing the highway against a bright sky.

These are not corner cases, and thermal imaging is increasingly viewed as an important sensor solution for improving situational awareness in challenging lighting, at night, and in a range of poor-visibility driving conditions.

Deep learning

A CNN functions as the “brain” of ADAS. Unlike conventional software, a CNN is not explicitly programmed; for image processing, it must be trained on large datasets of images. CNNs are also used for pattern recognition, natural language processing, speech recognition, and other real-time systems.
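To make the "trained, not programmed" point concrete, the sketch below fits a classifier to labeled examples instead of hand-coding a rule. It is deliberately not a CNN: as a minimal stand-in, it trains a logistic-regression model by stochastic gradient descent on hypothetical 3 × 3 "thermal" patches in which a warm object raises the center pixel. A CNN learns its convolution filters from labeled data in the same general way, only at vastly larger scale.

```python
import math
import random


def make_patch(warm, rng):
    """Return a flattened 3x3 toy "thermal" patch (hypothetical data).

    Background pixels are noisy values near 0.2; a warm object adds 0.6
    to the center pixel. Real thermal imagery is far richer than this.
    """
    patch = [rng.gauss(0.2, 0.05) for _ in range(9)]
    if warm:
        patch[4] += 0.6
    return patch


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict(weights, bias, x):
    """Probability that patch x contains a warm object."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train(samples, labels, epochs=100, lr=0.5):
    """Learn weights from labeled data by stochastic gradient descent on log loss."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = predict(weights, bias, x) - y  # gradient of log loss w.r.t. the logit
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


rng = random.Random(0)
labels = [i % 2 for i in range(200)]  # alternate background (0) / warm object (1)
samples = [make_patch(bool(y), rng) for y in labels]
weights, bias = train(samples, labels)
accuracy = sum(
    (predict(weights, bias, x) > 0.5) == bool(y) for x, y in zip(samples, labels)
) / len(labels)
print(f"training accuracy: {accuracy:.2f}")
```

Nothing in `train` encodes the rule "a warm center means an object"; the large positive weight on the center pixel is learned entirely from the labels.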

Deep learning quickly became an enabling technology once large training datasets of annotated visible images, such as COCO and ImageNet, became available. The challenge for automotive developers interested in integrating thermal imaging has been the lack of a comparable annotated thermal imaging dataset. Developers need datasets with thousands, or hundreds of thousands, of thermal images to train CNNs and create classifiers that work in the far-infrared (far-IR) spectrum.

FLIR Systems, having already delivered thermal imagers into automotive pedestrian and animal-detection systems, has created a free thermal-imaging training dataset and camera development kit to help ADAS and AV developers. This introductory training dataset encompasses five classes of objects (cars, people, bicycles, other vehicles, and dogs) in approximately 14,000 annotated images.
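A dataset like this is usually summarized by its image count and the number of annotations per class. The sketch below assumes annotations stored in a COCO-style layout (lists of images, categories, and annotations in one JSON document); the toy data, file names, and category IDs are hypothetical and are not taken from the FLIR dataset.

```python
import json
from collections import Counter


def dataset_stats(coco):
    """Return image count and per-class annotation count for a COCO-style dict."""
    names = {cat["id"]: cat["name"] for cat in coco["categories"]}
    per_class = Counter(names[ann["category_id"]] for ann in coco["annotations"])
    # Report every declared class, including ones with zero annotations.
    counts = {name: per_class.get(name, 0) for name in names.values()}
    return {"images": len(coco["images"]), "annotations_per_class": counts}


# Hypothetical toy annotations using the five classes described above.
toy_json = """
{
  "images": [{"id": 1, "file_name": "frame_00001.jpeg"},
             {"id": 2, "file_name": "frame_00002.jpeg"}],
  "categories": [{"id": 1, "name": "car"}, {"id": 2, "name": "person"},
                 {"id": 3, "name": "bicycle"}, {"id": 4, "name": "other vehicle"},
                 {"id": 5, "name": "dog"}],
  "annotations": [
    {"id": 1, "image_id": 1, "category_id": 1, "bbox": [10, 40, 60, 30]},
    {"id": 2, "image_id": 1, "category_id": 2, "bbox": [90, 35, 12, 40]},
    {"id": 3, "image_id": 2, "category_id": 2, "bbox": [20, 30, 14, 45]},
    {"id": 4, "image_id": 2, "category_id": 3, "bbox": [50, 50, 25, 20]}
  ]
}
"""

stats = dataset_stats(json.loads(toy_json))
print(stats)
```

Listing zero-count classes explicitly makes class imbalance (here, no dogs or other vehicles at all) visible at a glance.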

In addition, synthetic image tools are increasingly used to create ADAS-focused training datasets. Once a synthetic object is generated, it can be placed in any environment and viewed from any perspective. This vastly speeds up the creation of richer datasets that cover a wider range of environments and dynamic driving situations.

Thermal-imaging tools of this kind can simulate environmental conditions such as fog, rain, and snow, as well as various levels of electronic noise, to accurately replicate actual thermal-imager output. This technology promises an order-of-magnitude reduction in the time and resources needed to create diverse training datasets for CNNs.
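The kind of degradation such tools apply can be sketched with a deliberately simplified model. The function below is an illustrative stand-in, not FLIR's tool: it assumes a single scene distance, models fog as a simple attenuation in which each pixel blends toward an ambient level with path transmission t = exp(-βd), and models electronic noise as zero-mean Gaussian noise. All parameter names and values are hypothetical, and real thermal-imager noise also includes effects such as fixed-pattern noise that this sketch ignores.

```python
import math
import random


def degrade(frame, distance_m, extinction_per_m, ambient, noise_sigma, seed=0):
    """Return a fogged, noisy copy of a 2D frame given as a list of rows.

    Fog: each pixel p becomes p * t + ambient * (1 - t), where
    t = exp(-extinction_per_m * distance_m) is the path transmission.
    Noise: zero-mean Gaussian noise with standard deviation noise_sigma.
    All parameters are hypothetical illustration values.
    """
    rng = random.Random(seed)  # seeded so augmented datasets are reproducible
    t = math.exp(-extinction_per_m * distance_m)
    return [
        [p * t + ambient * (1.0 - t) + rng.gauss(0.0, noise_sigma) for p in row]
        for row in frame
    ]


def contrast(frame):
    """Peak-to-peak signal in the frame."""
    values = [p for row in frame for p in row]
    return max(values) - min(values)


# Toy 3x3 scene in arbitrary temperature units: a warm pedestrian in the middle.
scene = [[20.0, 20.0, 20.0],
         [20.0, 36.0, 20.0],
         [20.0, 20.0, 20.0]]
foggy = degrade(scene, distance_m=50.0, extinction_per_m=0.02, ambient=15.0, noise_sigma=0.0)
noisy = degrade(scene, distance_m=50.0, extinction_per_m=0.02, ambient=15.0, noise_sigma=0.5)
print(round(contrast(scene), 2), round(contrast(foggy), 2))
```

In this model, fog scales target contrast by exactly t, which is why detection range shrinks as extinction grows; sweeping `extinction_per_m` and `noise_sigma` over a clean frame yields many labeled variants from one annotation.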

Training a CNN with thermal images

Thermal images in datasets come from multiple sources, including .zip files of images, H.264 videos, and .SEQ files. Complete datasets can be locked and used as standardized training or testing datasets. Each dataset produces statistics such as image count and per-class annotation count (see Fig. 2).

ADAS developers measure CNN performance quantitatively against expected outcomes. The standard metrics for evaluating CNNs resemble those used to evaluate database queries, adapted for object detection. For example, the MS-COCO (Microsoft Common Objects in Context) evaluation metrics, which assess CNN performance in terms of both precision and recall, have become an industry standard.
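A simplified version of this evaluation can be sketched as follows. It matches detections to ground-truth boxes at a single intersection-over-union (IoU) threshold of 0.5, greedily in order of confidence, and counts per class the true positives, false positives (false alarms), and false negatives (missed detections) from which precision and recall are computed. This is an illustration under those assumptions, not the official MS-COCO metric, which averages precision over IoU thresholds from 0.50 to 0.95 and over recall levels (its reference implementation is the `COCOeval` class in pycocotools).

```python
def iou(a, b):
    """Intersection over union of two boxes in COCO (x, y, width, height) format."""
    ix = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def evaluate(detections, ground_truth, iou_threshold=0.5):
    """Per-class TP/FP/FN, precision, and recall for one image.

    detections:   list of (class_name, box, score)
    ground_truth: list of (class_name, box)
    """
    classes = {c for c, _ in ground_truth} | {c for c, _, _ in detections}
    results = {}
    for cls in sorted(classes):
        gts = [box for c, box in ground_truth if c == cls]
        dets = sorted((d for d in detections if d[0] == cls), key=lambda d: d[2], reverse=True)
        unmatched = list(range(len(gts)))
        tp = fp = 0
        for _, box, _ in dets:
            # Pick the unmatched ground-truth box with the highest IoU >= threshold.
            best, best_iou = None, iou_threshold
            for j in unmatched:
                overlap = iou(box, gts[j])
                if overlap >= best_iou:
                    best, best_iou = j, overlap
            if best is None:
                fp += 1  # false alarm
            else:
                tp += 1
                unmatched.remove(best)
        fn = len(unmatched)  # missed detections
        results[cls] = {
            "tp": tp, "fp": fp, "fn": fn,
            "precision": tp / (tp + fp) if tp + fp else 0.0,
            "recall": tp / (tp + fn) if tp + fn else 0.0,
        }
    return results


ground_truth = [("person", (0, 0, 10, 20)), ("car", (50, 50, 40, 20))]
detections = [("person", (1, 0, 10, 20), 0.9),      # overlaps the person (IoU ~0.82)
              ("person", (100, 100, 10, 10), 0.6)]  # nothing there: false alarm
for cls, r in evaluate(detections, ground_truth).items():
    print(cls, r)
```

In practice the per-class counts would be summed over every image in a test dataset before precision and recall are computed.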

FLIR has adopted and extended these metrics to report false alarms and missed detections for each object of interest, alongside precision and recall. This helps developers understand which training data a CNN needs to improve its performance. In addition, we gather the computational and memory requirements (for both CPU and GPU) of running each CNN model and estimate the number of frames per second the model can process.
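The frames-per-second estimate mentioned above can be sketched with Python's standard library. The helper below is hypothetical: `model` stands in for any callable that runs one inference on one frame, and timing and memory are measured in separate passes because tracemalloc's tracing overhead would otherwise distort the timing. Note that tracemalloc sees only Python-level allocations; GPU memory and native buffers used by a deep-learning framework require that framework's own tools.

```python
import time
import tracemalloc


def benchmark(model, frames, warmup=3):
    """Estimate frames per second and peak Python heap use of `model`.

    model:  hypothetical callable that processes one frame
    frames: list of input frames
    """
    for frame in frames[:warmup]:  # warm-up runs (caches, lazy initialization)
        model(frame)

    start = time.perf_counter()
    for frame in frames:
        model(frame)
    elapsed = time.perf_counter() - start
    fps = len(frames) / elapsed if elapsed > 0 else float("inf")

    tracemalloc.start()  # separate pass so tracing does not slow the timed loop
    for frame in frames:
        model(frame)
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return fps, peak_bytes


def toy_model(frame):
    # Stand-in "inference": mean pixel value of a frame.
    values = [p for row in frame for p in row]
    return sum(values) / len(values)


frames = [[[float(i + j) for j in range(64)] for i in range(48)] for _ in range(50)]
fps, peak = benchmark(toy_model, frames)
print(f"{fps:.0f} frames/s, peak Python allocation {peak} bytes")
```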

ADAS with thermal imaging

Lidar provides range, and radar provides both range and velocity, but their resolution or point-cloud density is not adequate for object classification. That makes visible cameras a critical component of ADAS: their high spatial detail and low latency, combined with deep learning, enable identification of objects around the vehicle. But what happens at night or in fog? Delivering high image quality to the brains of AVs in low light and under poor driving conditions is a major challenge for ADAS developers.

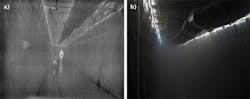

Thermal cameras boost the performance of ADAS at night and in inclement weather, including dust, smog, fog, and light rain. LWIR thermal sensors are also completely passive and need no illumination, a key advantage over visible-light cameras, which depend on reflected light, and over lidar and radar, which must emit their own energy.
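Why passive LWIR sensing suits pedestrians and vehicles follows from Wien's displacement law, λ_max = b / T, which gives the wavelength at which a blackbody at temperature T emits most strongly (b ≈ 2.898 × 10⁻³ m·K). A short worked example, assuming skin and road surfaces behave approximately as blackbodies (real emissivities are somewhat below 1):

```python
WIEN_B = 2.897771955e-3  # Wien's displacement constant, m*K


def peak_wavelength_um(temperature_k):
    """Wavelength of peak blackbody emission, in micrometers."""
    return WIEN_B / temperature_k * 1e6


# Approximate temperatures; treating these surfaces as blackbodies is an assumption.
for label, temp_k in [("skin, ~34 °C", 307.0), ("road, ~20 °C", 293.0), ("sun surface", 5772.0)]:
    print(f"{label}: {peak_wavelength_um(temp_k):.2f} um")
```

Objects near ambient temperature peak at roughly 9 to 10 µm, inside the LWIR band (commonly taken as about 8 to 14 µm), whereas sunlight peaks near 0.5 µm in the visible. A thermal camera therefore images the emission of people and vehicles directly rather than reflected light.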

Target reflectivity and atmospheric effects can cause sensor performance to vary, particularly at the limits of a sensor's operating envelope. Visible-light cameras depend on light from the sun, streetlights, or headlights that is reflected off objects and received by the sensor. Lidar sensors emit laser light and measure the time of flight of the reflected energy. Radar emits radio signals and processes the returned signal.
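The active ranging principle described here reduces to simple arithmetic. For lidar, range is half the round-trip distance traveled at the speed of light, R = c·t/2; the same time-of-flight relation applies to radar echoes, and radar can additionally recover radial velocity from the Doppler shift of the return, v = f_d·c/(2·f_0). The helper names below are illustrative, and 77 GHz is used only as an example of a common automotive radar band.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def tof_range_m(round_trip_s):
    """Target range from the round-trip time of a reflected pulse."""
    return C * round_trip_s / 2.0


def doppler_velocity_mps(doppler_shift_hz, carrier_hz):
    """Radial velocity from the Doppler shift of a radar echo (valid for v << c)."""
    return doppler_shift_hz * C / (2.0 * carrier_hz)


print(f"{tof_range_m(200e-9):.2f} m")                   # echo received after 200 ns
print(f"{doppler_velocity_mps(5137.0, 77e9):.2f} m/s")  # 77 GHz example carrier
```

Both relations depend on energy the sensor itself emitted and received back, which is why target reflectivity and atmospheric attenuation directly limit lidar and radar performance.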

Thermal imaging takes advantage of the fact that all objects emit thermal energy, which eliminates reliance on an illumination source. Passive sensing gives thermal cameras an advantage over visible cameras in light-to-moderate fog, where a thermal camera can see at least four times farther than a visible camera (see Fig. 3). In short, thermal sensors deliver capabilities crucial to ADAS and AV system safety.

Above all else, safety

The Governors Highway Safety Association states that pedestrian fatalities in the United States have grown substantially faster than all other traffic deaths in recent years and now account for a larger proportion of traffic fatalities than at any time in the past 33 years. Pedestrians are especially at risk after dark: 75% of the 5,987 US pedestrian fatalities in 2016 occurred after dark.[1]

Far-IR or LWIR imaging is an important part of an ADAS and AV sensor suite for reliable pedestrian, animal, and vehicle detection across a wide range of driving conditions. The cost and performance of thermal camera sensors are improving rapidly, and the large scale of the automotive market will make camera hardware increasingly cost-effective. FLIR’s focus on training dataset development will enable ADAS developers to evaluate far-IR imaging quickly and at very low cost.

REFERENCE

Arthur Stout | Director of AI Product Management, Teledyne FLIR

Arthur Stout is the director of AI Product Management for Teledyne FLIR’s OEM Infrared Camera division in Santa Barbara, CA. He is a 30-year veteran in business development, marketing, and product management in the IR imaging industry.