Ronald Hawkins

Dramatic advances in low-cost, high-performance general-purpose microprocessor and digital-signal-processor (DSP) chips over the last few years have resulted in a sea change in video and image processing. Once confined to the realm of exotic, special-purpose hardware, many video and image-processing functions are now easily achieved through software-programmed algorithms running on state-of-the-art processors. However, there are still classes of video and image-processing problems, particularly those involving high-resolution images at video frame rates, whose bandwidths exceed what even the latest microprocessors can achieve. Fortunately, the emergence of modern electronic-design-automation techniques and field-programmable gate arrays (FPGAs) offers the combination of true hardware acceleration with software-like programmability.

The need for image processing at video frame rates is increasing, spanning markets from semiconductors, medicine, and military surveillance to the emerging Internet and broadcast-media industries. Semiconductor capital-equipment manufacturers have long relied on computer-vision equipment for tasks such as silicon-wafer inspection and optical alignment in wire/ball-bonding equipment. Their video-processing requirements have become more demanding as the need for accuracy and throughput has increased. Electronic assembly equipment also requires real-time processing for pick-and-place and optical inspection machines. In medicine, increasing use of video sensors in surgical procedures frequently necessitates real-time video processing.

Real-time video and image processing continue to find applications in defense and aerospace, including extensive use in surveillance systems for unmanned aerial vehicles. In addition, the rollout of high-definition television services, which have a potential video bandwidth an order of magnitude greater than that of standard-definition television, is creating an emerging market for intensive video-processing technology.

Reconfigurable technology

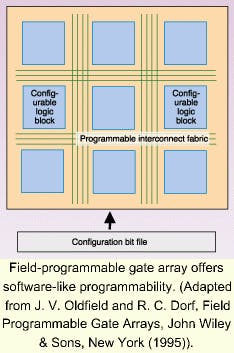

Field-programmable gate arrays are prefabricated semiconductor devices consisting of an array of logic blocks and an interconnect fabric that provides connections between the logic blocks (see figure). Both the logic blocks and interconnect are configurable to replicate a particular hardware circuit. The instructions for how to configure the FPGA are contained in a binary "bit file" that is loaded into the FPGA at run time; that is, the hardware function implemented by the FPGA can be changed by replacing the bit file and does not require any changes to the physical hardware. Thus, the FPGA is reprogrammable (or reconfigurable) much as a microprocessor would be.

A field-programmable gate array offers software-like programmability. (Adapted from J. V. Oldfield and R. C. Dorf, Field Programmable Gate Arrays, John Wiley & Sons, New York, 1995.)
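The idea that a logic block's function is set by loaded configuration data, not by fixed wiring, can be sketched in software. The Python model below is only an illustration: it assumes a logic block behaves like a small lookup table, and the names `LookupTable`, `load`, `AND2`, and `XOR2` are hypothetical. Real FPGA logic blocks and bit-file formats are considerably more elaborate and vendor-specific.

```python
from typing import List, Sequence


class LookupTable:
    """Toy model of a configurable logic block as a k-input lookup table.

    Assumption: this is a simplification for illustration only. The point
    is that the block's logic function is data that can be replaced.
    """

    def __init__(self, num_inputs: int, config_bits: Sequence[int]) -> None:
        self.num_inputs = num_inputs
        self.config_bits: List[int] = []
        self.load(config_bits)

    def load(self, config_bits: Sequence[int]) -> None:
        """Reconfigure the block, loosely analogous to loading a new bit file."""
        if len(config_bits) != 2 ** self.num_inputs:
            raise ValueError("need one configuration bit per input combination")
        self.config_bits = [bit & 1 for bit in config_bits]

    def evaluate(self, *inputs: int) -> int:
        """Return the output bit for the given input bits."""
        if len(inputs) != self.num_inputs:
            raise ValueError("wrong number of inputs")
        # Pack the inputs into a table index; inputs[0] is the least significant bit.
        index = 0
        for position, bit in enumerate(inputs):
            index |= (bit & 1) << position
        return self.config_bits[index]


# Two hypothetical "bit files" for the same two-input block.
AND2 = [0, 0, 0, 1]
XOR2 = [0, 1, 1, 0]

block = LookupTable(2, AND2)
and_out = [block.evaluate(a, b) for a in (0, 1) for b in (0, 1)]

block.load(XOR2)  # same block, new function, no hardware change
xor_out = [block.evaluate(a, b) for a in (0, 1) for b in (0, 1)]
```

The same `block` object acts first as an AND gate and then as an XOR gate; only the configuration data changed.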

The architecture of modern FPGAs supports the design of logic circuits that implement complex video and image-processing algorithms. A single modern FPGA can achieve video-processing performance equivalent to that which would typically require multiple DSP chips, thus providing a very cost-effective solution.

Originally, FPGAs were suitable only for implementing small amounts of "glue logic," the logic circuits that interconnect more complex chips on a circuit board. As with microprocessor technology, however, FPGAs have conformed to Gordon Moore's "law," which states that integrated-circuit density doubles every 18 months. As a result, modern FPGAs have multimillion-gate capacities and are capable of running at clock rates as high as 300 MHz. These high-capacity FPGAs are suitable for implementing very complex video and image-processing algorithms.

It is now possible to envision "system-on-a-chip" designs using FPGAs. The array-based architecture of FPGAs can be exploited to implement parallel processing algorithms, a benefit because many video and image-processing algorithms are highly amenable to parallel processing to improve performance.
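To see why such algorithms lend themselves to parallel hardware, consider a simple 3 × 3 averaging filter, chosen here purely as an example. Each output pixel depends only on a small neighborhood of the input, so rows (or individual pixels) can be computed independently. The Python sketch below expresses that independence with a thread pool; the function names are hypothetical, and Python threads are used only to show that the work items do not depend on one another, not to demonstrate a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

Image = List[List[int]]


def box_blur_pixel(image: Image, row: int, col: int) -> int:
    """Integer mean of the 3x3 neighborhood, with coordinates clamped at the borders."""
    height, width = len(image), len(image[0])
    total = 0
    for d_row in (-1, 0, 1):
        for d_col in (-1, 0, 1):
            r = min(max(row + d_row, 0), height - 1)
            c = min(max(col + d_col, 0), width - 1)
            total += image[r][c]
    return total // 9


def box_blur_row(image: Image, row: int) -> List[int]:
    """Filter one output row; reads the input image only, never other output rows."""
    return [box_blur_pixel(image, row, col) for col in range(len(image[0]))]


def box_blur_parallel(image: Image, workers: int = 4) -> Image:
    """Filter all rows as independent tasks."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: box_blur_row(image, row), range(len(image))))


image = [
    [0, 0, 0, 0],
    [0, 90, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
sequential = [box_blur_row(image, row) for row in range(len(image))]
parallel = box_blur_parallel(image)
```

Because no task reads another task's output, the parallel and sequential results are identical. In an FPGA the same property would allow many such neighborhood computations to be laid out side by side in the logic array.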

Soft hardware

Advances in electronic design-automation tools and methodologies now work in concert with FPGA technology to facilitate the concept of "soft hardware." The traditional method of designing hardware used schematic capture; that is, drawing the electronic components and their interconnections in a graphical depiction of the physical circuit. However, schematic capture for logic-circuit design has increasingly given way to the use of hardware description languages, or HDLs. These HDLs are high-level, software-like languages used to describe logic circuits. Much as computer-programming languages are compiled into a binary executable file containing the instructions for the processor, HDL programs are compiled into a binary file that provides the configuration instructions for an FPGA. (HDLs can also be used in application-specific integrated-circuit and board-level design.)

There are two predominant HDLs currently in use: Verilog and VHDL (very-high-speed integrated-circuit hardware description language). Verilog uses a C-like syntax, and VHDL is modeled after the Ada programming language. The HDLs bring the powerful features of modern software programming languages, including object-oriented design principles, encapsulation, data hiding, strong typing, and parameterization, to hardware design.

For example, suppose an 8-bit adder circuit were created using schematic capture. To implement a 16-bit adder, it would be necessary to draw a new schematic diagram that showed the 16-bit data path. Alternatively, using VHDL or Verilog, the adder function could be "parameterized"; that is, the 8- or 16-bit adder could be implemented by simply passing the correct parameter to a common HDL-encoded function describing the adder. The implications of using modern software-design principles are obvious in terms of efficiency, verification, and code reuse.
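The parameterized adder can be imitated in ordinary software. The sketch below is a Python stand-in, not HDL: it assumes a ripple-carry structure built from one-bit full adders, and `make_adder` and its `width` argument are hypothetical names playing the role that a VHDL generic or a Verilog parameter would play in a real design.

```python
from typing import Callable, Tuple


def full_adder(a: int, b: int, carry_in: int) -> Tuple[int, int]:
    """One-bit full adder: returns (sum bit, carry out)."""
    sum_bit = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return sum_bit, carry_out


def make_adder(width: int) -> Callable[[int, int], Tuple[int, int]]:
    """Build a ripple-carry adder of the requested bit width.

    The single 'width' parameter replaces redrawing the data path by hand.
    """
    if width < 1:
        raise ValueError("width must be at least 1")

    def add(x: int, y: int) -> Tuple[int, int]:
        carry = 0
        result = 0
        for bit in range(width):
            sum_bit, carry = full_adder((x >> bit) & 1, (y >> bit) & 1, carry)
            result |= sum_bit << bit
        return result, carry  # carry is the overflow out of the top bit

    return add


add8 = make_adder(8)
add16 = make_adder(16)

sum8, carry8 = add8(200, 100)      # 300 does not fit in 8 bits
sum16, carry16 = add16(200, 100)   # 300 fits in 16 bits
```

With these inputs the 8-bit adder wraps around and reports a carry (`sum8` is 44, `carry8` is 1), while the 16-bit adder returns 300 with no carry. One description serves both widths, which is the reuse benefit the schematic approach lacks.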

FPGAs should not, however, be considered an exclusive approach to real-time video and image processing. In fact, one of the more powerful applications of this technology is the implementation of FPGA coprocessors for general-purpose microprocessors or DSPs. In this role, the software-programmed CPU is used to provide overall algorithm execution and control while the FPGA accelerates specific sections of the algorithm.

This technique permits the bulk of the algorithm to be coded in a software programming language, and only the most performance-critical sections are coded in an HDL and mapped to the FPGA. The best technologies are therefore brought to bear on tough video processing problems: the software-programmable processor for ease of algorithm development and the FPGA for addressing the performance-critical areas. Of course, the system engineering function in which the algorithm is partitioned between the CPU and FPGA becomes critical.
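One way to reason about the partitioning decision is Amdahl's law, which relates the overall speedup to the fraction of run time that is moved to the accelerator. The Python sketch below is a rough planning aid under stated assumptions: the fractions and the 20× kernel speedup are hypothetical figures chosen for illustration, and the model ignores the cost of moving data between the CPU and the FPGA, which can be significant in practice.

```python
def overall_speedup(accelerated_fraction: float, kernel_speedup: float) -> float:
    """Amdahl's law: whole-algorithm speedup when only part of it is accelerated.

    accelerated_fraction: share of the original run time moved to the FPGA (0 to 1).
    kernel_speedup: how much faster that share runs on the FPGA (must be > 0).
    Assumption: data-transfer overhead between CPU and FPGA is ignored.
    """
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if kernel_speedup <= 0.0:
        raise ValueError("kernel_speedup must be positive")
    remaining_time = (1.0 - accelerated_fraction) + accelerated_fraction / kernel_speedup
    return 1.0 / remaining_time


# Hypothetical partitions, each with a 20x faster FPGA kernel.
hot_loop_90 = overall_speedup(0.90, 20.0)  # 90% of run time offloaded
hot_loop_50 = overall_speedup(0.50, 20.0)  # only 50% offloaded

print(f"90% offloaded: {hot_loop_90:.2f}x overall")
print(f"50% offloaded: {hot_loop_50:.2f}x overall")
```

Under these assumed figures, offloading 90% of the run time yields roughly a 6.9× overall gain, while offloading 50% yields less than 2×. The part left in software quickly dominates, which is why the choice of what to map to the FPGA matters so much.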

Hardware description languages still address only part of the problem: hardware design. Numerous efforts are under way to create "system-level design languages" that provide a common syntax for defining both the hardware and software components of a system. Programming languages such as C, C++, Java, and hybrids are among the candidates for a system-level design language. Gate-array vendors such as Xilinx (www.xilinx.com) are promoting the integration of high-level software tools such as MATLAB (www.mathworks.com) for implementing signal and image processing algorithms in FPGAs.

The appetite for video and real-time image processing in increasingly diverse markets and applications is growing. Sensor resolutions are increasing from the traditional 640 x 480 to "megapixel" formats, and digital progressive-scan cameras with 60-Hz and higher scan rates are being designed into the newest applications. As resolutions and frame rates increase, the processing bandwidth demands only become greater, ensuring a role for hardware-accelerated video processing for the foreseeable future. Fortunately, electronic design-automation techniques and semiconductor technologies are responding to bring the desirable features of software programming to hardware-based processing.
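The growth in processing demand can be made concrete with a little arithmetic. The Python sketch below compares pixel rates under assumed figures: a 30-frame/s baseline for the 640 x 480 sensor and a 1024 x 1024 sensor standing in for a "megapixel" format are both assumptions, while the 60-Hz rate and the 300-MHz clock are the figures quoted in this article.

```python
def pixel_rate(width: int, height: int, frames_per_second: int) -> int:
    """Pixels that must be processed each second."""
    return width * height * frames_per_second


# Assumed baseline: 640 x 480 at 30 frames/s.
vga_rate = pixel_rate(640, 480, 30)

# Assumed "megapixel" format: 1024 x 1024 at the 60-Hz rate cited in the text.
megapixel_rate = pixel_rate(1024, 1024, 60)

growth = megapixel_rate / vga_rate

# Clock cycles available per pixel for a single 300-MHz processing element.
CLOCK_HZ = 300_000_000
cycles_per_pixel_vga = CLOCK_HZ / vga_rate
cycles_per_pixel_megapixel = CLOCK_HZ / megapixel_rate

print(f"640 x 480 @ 30:   {vga_rate:,} pixels/s")
print(f"1024 x 1024 @ 60: {megapixel_rate:,} pixels/s ({growth:.1f}x)")
print(f"Cycles per pixel at 300 MHz: {cycles_per_pixel_vga:.1f} vs {cycles_per_pixel_megapixel:.1f}")
```

Under these assumptions the pixel rate rises from about 9.2 million to about 62.9 million pixels per second, and a single 300-MHz processing element is left with fewer than five clock cycles per pixel. Any algorithm that needs more work per pixel than that must spread the load across parallel hardware.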

RONALD HAWKINS is vice president of business development at VisiCom, 10052 Mesa Ridge Court, San Diego, CA 92121.