Self-driving vehicles: Many challenges remain for autonomous navigation

In August 2016, the Ford Motor Company (Dearborn, MI) confidently announced that by 2021, it would be mass-producing fully autonomous robo-taxis without steering wheels, accelerators, or brake pedals for use by ride-hailing services in geofenced areas. Others expressed similar hopes, but nobody will meet that target. Ambitious plans for rapid deployment of fully autonomous cars have run into unexpected problems, among them stopped fire engines, big white trailers, and highway barriers.

Autonomy still is coming, “but not what everybody expects,” says Jason Eichenholz, cofounder and chief technology officer of Luminar Technologies (Orlando, FL). Don’t expect a personal robotic chauffeur to drive you to work in the morning, wait all day in the garage, then take you home at night. Instead, transportation will become a service, provided on call by fleets of sophisticated robotic taxis and trucks, each costing hundreds of thousands of dollars and earning their keep by carrying people and goods all day long. Within five years, Eichenholz expects such vehicles to reach “level four” autonomy (see “Levels of automotive autonomy”), allowing passengers to stay “out of the loop” during their entire trip over well-maintained roads in reasonable weather.

Good, but not good enough

Self-driving cars have come a long way in a decade. Today, says Eichenholz, “it’s easy to make a self-driving car that works 99% of the time, but consumers want cars that work at 5 or 6 nines” [99.999% or 99.9999%]. Getting those extra nines is tough and will be expensive because driving difficulty differs tremendously from a sunny day on a quiet side street to an icy nighttime snowstorm that has obscured all the markings on a curvy highway. Today’s prototype robo-cars can handle the easy cases, but not the hard ones.
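
A back-of-the-envelope sketch helps show why each extra nine matters. The article does not say what unit the reliability figures are measured in, so the code below assumes a generic "unit of driving" (a mile or a trip, for example); the function names are illustrative only.

```python
def units_per_failure(nines: int) -> int:
    """Average number of driving units between failures at a given number of nines.

    Two nines (99%) means one failure per 100 units; each added nine
    multiplies that interval by ten. The unit itself is an assumption.
    """
    return 10 ** nines


def reliability_percent(nines: int) -> str:
    """Format the reliability for a given number of nines, e.g. 5 -> '99.999%'."""
    decimals = max(nines - 2, 0)
    return f"{100 - 100 / 10 ** nines:.{decimals}f}%"


for n in (2, 5, 6):
    print(f"{reliability_percent(n)} reliable: one failure per {units_per_failure(n):,} units")

# How much rarer failures must become to go from 99% to six nines
improvement = units_per_failure(6) // units_per_failure(2)
print(f"Required improvement: {improvement:,}x")
```

Under that assumption, moving from 99% to six nines means making failures 10,000 times rarer, which is one way to read Eichenholz's point that the last few nines are the expensive ones.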

Many of today’s cars include driver-assist features such as automatic braking, lane keeping, and parallel-parking assistance. These features rely on a suite of sensors as well as information downloaded from stored maps and sent by other cars in the area, which are combined by computers and artificial intelligence software to help the driver. Autonomous cars go a step further, with more sensors and more software to take more responsibility and ultimately take the driver out of the loop.

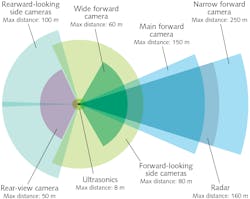

The most sophisticated driver-assist system for personal vehicles is the Autopilot system developed by Tesla Motors (Palo Alto, CA). Figure 1 shows its suite of sensors. Cameras play the most important role; a narrow-angle camera in front looks forward up to 250 m, a main forward camera looks up to 150 m at a broader angle, and forward-looking fisheye cameras on both sides capture traffic lights and other objects that might move into the car’s path. A microwave radar in the front measures ranges forward to 160 m. Three cameras look to the rear: one on each side and a third in back. A dozen ultrasonic sensors with 8-m range ring the car to aid in parallel parking and watch for vehicles that might cause a collision.

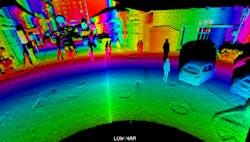

Conspicuous by its absence in Tesla’s system is lidar. Eichenholz and many others consider lidar vital because it can produce point clouds recording the local environment (see Fig. 2). Several types of lidars have been developed and are being evaluated. Yet in 2019, Tesla CEO Elon Musk called lidar “a fool’s errand...expensive sensors that are unnecessary” because they can’t see through fog and precipitation.

That attitude reflects a crucial difference in marketing strategy. Tesla sells elegant electric-powered cars for personal use by technophiles who see Autopilot as a driving aid. Most other companies are developing robo-taxis designed for autonomous driving of passengers who don’t take the wheel. Developers also are working on a wide range of other autonomous systems, including air taxis, commercial drones, and systems for use in factories, mining, and agriculture.

New technology for autonomy

The sensors needed depend on the application. GPS is widely used for location, but it sometimes can give spurious results. Some systems require accelerometers, magnetometers, barometers, and chip-scale atomic clocks to improve positioning, says Sabrina Mansur, automotive business manager at Draper Laboratory (Cambridge, MA). Autonomous vehicles also use preloaded maps that can be updated with sensor data. Sensors monitor cellphones as “a signal of opportunity, giving location, trajectory, and speed” to locate pedestrians or bicyclists even if they are hidden by parked cars or other vehicles, says Eric Balles, director of the automotive group at Draper.

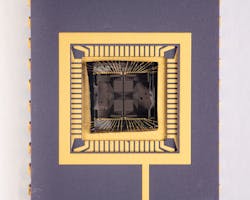

New types of lidar are under development; Figure 3 shows a microelectromechanical-systems (MEMS)-based lidar developed by Draper. Other optical sensors can join cameras and lidars. Laser sensors mounted in wheel wells record how fast wheels are moving to measure real vehicle speed. Fiber gyroscopes measure vehicle rotation about three axes: roll, pitch, and yaw. Their high performance has led to their use in test vehicles, but Mansur says they cost too much for mass-produced vehicles. Less-costly MEMS gyros are likely to be used instead, once their performance is improved. The overall goal is to locate autonomous vehicles on a centimeter scale, better than the tens of centimeters in cities for real-time kinematic GPS systems. Gyros also can provide backup against dropouts and multipath errors in GPS. “Almost any sensor has an Achilles heel,” adds Balles.

Sensor fusion is needed to combine and analyze data from the various types of sensors in autonomous vehicles. Conventional systems rely on Kalman filtering, which statistically combines multiple measurements to derive results that grow more accurate with the volume of data. Autonomous vehicles generally use machine learning and artificial intelligence, which work in similar ways but usually start with known data sets, then build up data to improve results. Machine learning is highly effective in well-defined cases, such as driving along a well-marked road on a clear day, but its weakness is unusual or poorly defined situations, such as a construction site, an unmarked or unpaved road, or a snowstorm.
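
The statistical combining at the heart of Kalman filtering can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: it shows only the measurement-update step for a single quantity that is not changing, with no motion model, and the sensor readings and variances are hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    mean: float      # current best estimate (e.g., position along a road, in meters)
    variance: float  # uncertainty of that estimate (meters squared)


def kalman_update(est: Estimate, measurement: float, meas_variance: float) -> Estimate:
    """Blend one new measurement into the estimate (scalar measurement update)."""
    # The gain weights the measurement by how much we trust it relative to
    # the current estimate: noisy sensors get a small gain.
    gain = est.variance / (est.variance + meas_variance)
    mean = est.mean + gain * (measurement - est.mean)
    variance = (1.0 - gain) * est.variance
    return Estimate(mean, variance)


def fuse(prior: Estimate, readings: list[tuple[float, float]]) -> Estimate:
    """Apply the update for each (value, variance) reading in turn."""
    est = prior
    for value, meas_variance in readings:
        est = kalman_update(est, value, meas_variance)
    return est


# Hypothetical readings: a noisy sensor (variance 4.0) interleaved with a
# more precise one (variance 0.25), both observing the same position.
readings = [(10.4, 4.0), (9.9, 0.25), (10.6, 4.0), (10.1, 0.25)]
prior = Estimate(mean=0.0, variance=1e6)  # start out knowing almost nothing

result = fuse(prior, readings)
print(f"fused position: {result.mean:.2f} m, variance: {result.variance:.3f} m^2")
```

The fused variance ends up smaller than that of the best single sensor, which is the sense in which results grow more accurate as data accumulates. A full filter for a moving vehicle would add a prediction step between updates.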

Tesla Autopilot

Tesla has set a high standard for driver assistance with its Autopilot feature included in most, but not all, of the more than half a million Teslas sold in the United States since 2014. Drivers are supposed to keep their eyes on the road and their hands on—or very close to—the wheel while using it. Yet virtually every Tesla driver has taken their hands off the wheel to see how well the car steers itself. Some go further. One inebriated California driver fell asleep at 3:30 a.m. while driving home, and his Tesla cruised on along Highway 101 in Palo Alto until highway patrol officers spotted it and brought the car safely to a stop.

Tesla claims its cars have only one accident every three million miles with Autopilot engaged, compared to the overall U.S. rate of one accident every half-million miles. Yet an Apple engineer was not as lucky two years ago in Mountain View, CA. Autopilot failed to navigate a split in the highway and smashed the car into a barrier at the dividing point, killing him. The National Transportation Safety Board found the driver had his hands off the wheel, apparently playing a game on his phone, at the time of the crash. The NTSB ruled the key causes were the driver’s inattention and the Tesla’s failure to navigate the split, and said the driver might have survived if the barrier had been fixed after an earlier crash.

Four other fatal Tesla crashes have been blamed on Autopilot, one in China and three in Florida. Two Florida crashes occurred when trucks pulling white trailers turned left in front of Teslas traveling at highway speed and neither car nor driver slowed before smashing into the trailer. The forward-looking cameras evidently could not tell a bright white trailer from a bright blue sky. That suggests the cameras may have been monochrome, a type used because its images can be processed much faster than color images, a potential advantage for highway driving. Causes of the other two fatal accidents have not been reported.

Transportation as a service

Robo-taxis are a fundamentally different business—selling autonomous vehicles to big companies providing transportation as a service. As envisioned by companies including Waymo, Uber, and Lyft, the service would start in “geo-fenced” areas particularly amenable to self-driving cars, then expand to broader areas as the technology improves. The cars would have expansive high-performance navigation systems with a large suite of sensors, including lidars. Like Waymo’s test vehicles, they would be designed for comparatively slow city traffic, not for highway speeds, and likely would be used in urban areas. That would reduce the chance and severity of accidents because of lower collision energy and shorter stopping distances. It also makes better use of the limited range of pulsed 900 nm lidar.
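
The advantage of slower city speeds follows from basic physics: kinetic energy and braking distance both grow with the square of speed. The sketch below illustrates this with assumed values; the vehicle mass, braking deceleration, and the two speeds are hypothetical, and driver or system reaction time is ignored.

```python
MPH_TO_MS = 0.44704  # exact conversion: 1 mph = 0.44704 m/s


def kinetic_energy_joules(mass_kg: float, speed_ms: float) -> float:
    """Kinetic energy E = 1/2 * m * v^2."""
    return 0.5 * mass_kg * speed_ms ** 2


def braking_distance_m(speed_ms: float, decel_ms2: float) -> float:
    """Distance to stop under constant deceleration: d = v^2 / (2 * a)."""
    return speed_ms ** 2 / (2.0 * decel_ms2)


# Assumed values for illustration only
VEHICLE_MASS_KG = 2000.0
BRAKING_DECEL_MS2 = 7.0  # roughly hard braking on dry pavement

for mph in (25, 65):  # hypothetical city and highway speeds
    v = mph * MPH_TO_MS
    energy_kj = kinetic_energy_joules(VEHICLE_MASS_KG, v) / 1000.0
    distance = braking_distance_m(v, BRAKING_DECEL_MS2)
    print(f"{mph} mph: {energy_kj:.0f} kJ, braking distance {distance:.1f} m")
```

Because both quantities scale with the square of speed, halving the speed cuts collision energy and braking distance to a quarter, whatever mass and deceleration are assumed.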

Eichenholz thinks further developments including longer-range 1550 nm lidars could take more humans out of the loop in about five years by making it possible to reach level-4 automation (see “Levels of automotive autonomy”). The sensors and other systems providing that automation will raise the cost of the robo-taxis to hundreds of thousands of dollars, but the savings in having robots rather than humans shuttling passengers all day would offset the costs. In contrast, most private cars are used only a few percent of the time. “When am I going to buy my own self-driving car?” says Eichenholz. “Probably never, because the costs would be too high for that level of use.”

Other forms of driver assist might be used for personal cars. Eichenholz imagines having a traffic-jam pilot. When a driver got stuck in a jam, they could push a button and the car would take over as the traffic continued to crawl, allowing the driver to take their eyes off the road and relax. When the traffic jam started to break up and speeds increased, the car would tell the driver to take over. “That could lead to what we call highway autopilot for major highways like I5 and I95 that are well mapped and can be geo-fenced,” he says. Highway autopilot could take over when cars were moving freely, and drive to within a few miles of the destination before turning control back to the driver for leaving the highway for surface roads.

Trucks could take advantage of a higher level of highway automation, with a human driver bringing the truck to a limited-access highway entrance, setting its destination at an exit, then sending it on its way under highway autopilot to where another human would pick it up and deliver it.

Limits of robo-taxis

Some safety experts have talked of replacing all human drivers with robots, but that’s more dream than reality. Humans are more adaptable to difficult situations, like rough dirt roads, farms, or logging trails. Developing robo-taxis to go anywhere under any conditions would be horribly expensive. “There’s no economic incentive” to build them, says Eichenholz. “You’re still going to have people driving F150 [pick-ups] on their farms.”

Difficult conditions are not limited to rural areas. Lane markings often aren’t replaced when they begin wearing away, and side roads often lack markings altogether. Cars with lane-keeping systems can weave so much where markings are uneven that drivers worry they might be arrested for drunk driving. Construction sites often are unpredictable, and maintenance is uneven. The NTSB blamed the California Tesla fatality partly on “systematic problems with...repair of traffic safety hardware” at the site. Fog, snow, ice, and other foul weather can make roads undriveable.

Public trust is a crucial issue for self-driving cars. Early on, the public seemed optimistic that robots could outdrive the proverbial “nut behind the wheel.” However, a series of unsettling minor accidents like hitting a stopped fire truck slowly eroded trust, and highly publicized fatal crashes scared the public. Most unsettling was a March 18, 2018, accident in which a self-driving Uber car failed to recognize a woman walking a bicycle across an Arizona road at night, struck her, and killed her. The car alerted the safety driver after a few seconds, but it was too late; as the driver looked up from her phone, the car struck the pedestrian. By January 2019, 71% of Americans polled by the AAA Auto Club said they were “very” or “fairly” scared of riding in a fully self-driving vehicle. It will take time, and safer self-driving cars, to change that.

Outlook

Both driver-assist and robo-taxi approaches to self-driving cars have promise, but they need to mature. That will require improvements in sensors and in artificial intelligence, and in our understanding of their limits, particularly AI’s weakness at dealing with the unexpected. That means state and local governments need to invest in better road maintenance and management so self-driving cars aren’t confused by things like construction sites or stretches of roads where markings have worn away.

Meanwhile, a new frontier in autonomy is emerging as the Federal Aviation Administration begins plans for autonomous drones and other aerial vehicles. In January, the FAA said six companies are “well along” in pursuing certificates for “urban air mobility” vehicles to fly human passengers, and four have said their aircraft will be autonomous. Uber has talked about urban air taxis, but may start with human pilots. On February 3, 2020, the FAA proposed rules for certifying the small-package delivery drones being developed by Amazon, UPS, and other delivery firms. Those vehicles will pose a new generation of navigational challenges.

Levels of automotive autonomy

0: Humans control everything

1: Simple driver assistance for one function (cruise control, lane keeping)

2: Multiple features are automated (lane centering, acceleration/deceleration), hands can be off steering wheel, but human must be paying attention and ready to assume control

3: Vehicle in charge of safety-critical functions, but human must be ready to take control at any time

4: Vehicle designed to perform all safety-critical driving functions and monitor roadway conditions for whole trip, but car cannot drive in all conditions or all roads

5: Vehicle is fully autonomous in all driving scenarios

Jeff Hecht | Contributing Editor

Jeff Hecht is a regular contributing editor to Laser Focus World and has been covering the laser industry for 35 years. A prolific book author, Jeff's published works include “Understanding Fiber Optics,” “Understanding Lasers,” “The Laser Guidebook,” and “Beam Weapons: The Next Arms Race.” He also has written books on the histories of lasers and fiber optics, including “City of Light: The Story of Fiber Optics,” and “Beam: The Race to Make the Laser.” Find out more at jeffhecht.com.