Autonomous vehicle technology shifts to a more realistic gear

When it comes to autonomous vehicles, the industry is shifting gears. Late in 2020, Honda said that this March it would introduce so-called “Traffic Jam Assist” in its Japanese Legend model. It’s autonomy in the slow lane: a feature that takes control of a personal car when highway traffic slows to a crawl. Then, says the manual, it uses radar and cameras to automatically follow the car ahead at a safe distance, as long as the road is nearly straight and has detectable lane markers.1 This frees the human driver from having to worry about traffic until it picks up to highway speed, at which point the driver must take the wheel again. Robo-taxis lacking any controls for human drivers have slipped further into the future.

A bigger shift in the works is putting autonomous systems in control of long-haul trucks. Big rigs spend long hours on the road, and typically some 95% of their mileage is on well-maintained, limited-access highways, a well-controlled environment that is friendly to autonomous vehicles. In the near term, developers envision keeping a human in the truck to take the wheel when needed to go off-highway to depots or to steer through difficult situations like temporary detours directed by road crews. Full Level 4 autonomy would come in a few more years as the technology is refined and verified. Big rigs are big investments, and the savings from having robots that can drive straight through without rest stops or sleep could pay for the new technology.

A bumpy road toward autonomy

These shifts in direction partly reflect economic and technological limits, but the most important limit may be the capabilities of human drivers. Developers originally envisioned that automotive autonomy would shift control from human drivers to robots by progressing through the levels shown in the sidebar (see “Levels of autonomy”). The original target was Level 3 autonomy, with the robot driving the car and the human driver with eyes on the road and hands on the wheel, ready to take over if needed with little or no warning.

In reality, however, people are easily distracted even when they are driving a car. If they are just watching the car drive, their eyes are more likely to wander to their phone or some other screen than to stay on the road. Tesla drivers not looking ahead have been killed when their cars failed to recognize white trucks crossing the road ahead of them against a blue sky. An Uber vehicle killed a pedestrian walking a bicycle across a road at night because it could not recognize the pedestrian; when it turned control over to the safety driver, she had barely looked up from her phone when the car struck the pedestrian.

These fatal accidents have led many developers to think that Level 3 is a bad idea; many companies are expected to jump straight from Level 2 to Level 4, which requires the car to be able to handle most conditions without human assistance.2 The public has also grown wary, especially after embarrassing nonfatal accidents such as a self-driving car crashing into a bright-red fire truck stopped in the road with lights flashing. The pandemic slammed the brakes on development in early 2020, but companies had already been slowing development to refine technology and reduce costs.

Personal cars vs. robo-taxis

Tesla (Palo Alto, CA) delivered almost half a million personal cars in 2020, a 35% increase over 2019.3 All Teslas since March 2019 come with the Autopilot feature, and enhanced software called “Full Self-Driving Capability” is available as an option. Among its extra features is the ability for the car to drive from highway on-ramp to off-ramp, navigating interchanges, changing lanes, and taking the correct exit. However, the company emphasizes that the self-driving features “require active driver supervision and do not make the vehicle autonomous.”4 In other words, it still expects the driver to have eyes on the road and hands on the wheel.

Like Honda’s Traffic Jam Assist, Tesla’s control system uses radar and cameras to sense the environment, but not lidar. Two years ago, Tesla CEO Elon Musk proclaimed that “anyone relying on lidar is doomed,” and that radar and cameras are good enough for navigation.5 It might be fairer to say that lidars are too expensive for Tesla to make a profit selling personal cars with self-driving features.

Honda’s Traffic Jam Assist appears to use similar technology, but only for following the car ahead through traffic moving so slowly that serious injuries are unlikely. The manual is cautious, warning that the feature will not work properly when following a motorcycle, when entering a toll booth, or when the car being followed moves into another lane. Some other companies have developed similar systems for personal cars.

Robo-taxis being developed by Google’s Waymo division, GM’s Cruise, and other companies serve a different market: companies offering personal transport as a service. These vehicles would be on the road much of the time rather than sitting in garages like personal cars, which would help offset the cost of the lidars needed for autonomy. For this market, Level 4 autonomy would be desirable. However, those plans have slowed, and Uber Technologies (San Francisco, CA), an early pioneer, sold its autonomous car group to Aurora (Bozeman, MT) in December.6

Nobody is delivering cars with true Level 4 autonomy now, says Jason Eichenholz, cofounder and chief technology officer at Luminar Technologies (Orlando, FL). However, in 2022 Volvo will begin distributing cars with an optional system called Volvo Highway Pilot that combines Luminar’s Iris 1550 nm lidar with other sensors and autonomy software from Zenseact (Göteborg, Sweden). The lidar will be the first in a consumer vehicle.

On controlled-access highways, Eichenholz says the Volvo offering will be “a true driver out of the loop” system with Level 4 autonomy. Highway traffic can be fast, but the environment is much more predictable than city or suburban streets, with no cross traffic, pedestrians, traffic signals, or bicycles. High-performance lidars provide the long sensing range and high point density needed to identify objects in the highway environment so the car can safely steer around them. The car would turn driving over to the human in other environments: a typical driver would guide the car from home to the highway, the car would drive from there to the off-ramp, and the human would resume control on leaving the highway to drive to the destination.
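The handoff described above can be pictured as a small state machine. This is a hypothetical sketch, not Volvo’s or Zenseact’s design: the mode names, the `in_domain` flag (true while the car is on a controlled-access highway with enough distance left before its exit to hand back control), the 10 s takeover budget, and the fallback “minimal-risk stop” (borrowed loosely from the SAE J3016 notion of a minimal risk condition) are all assumptions for illustration.

```python
from enum import Enum, auto


class Mode(Enum):
    HUMAN = auto()              # driver in control (local roads, ramps)
    AUTONOMOUS = auto()         # system in control inside its operational domain
    TAKEOVER_REQUEST = auto()   # system has asked the driver to take the wheel
    MINIMAL_RISK_STOP = auto()  # driver did not respond; system brings the car to a safe stop


def next_mode(mode: Mode, in_domain: bool, driver_engages: bool,
              driver_ready: bool, seconds_since_request: float,
              takeover_budget_s: float = 10.0) -> Mode:
    """Return the next control mode for a hypothetical highway-only Level 4 system."""
    if mode is Mode.HUMAN:
        # Autonomy is offered only inside the domain, and only if the driver opts in.
        return Mode.AUTONOMOUS if in_domain and driver_engages else Mode.HUMAN
    if mode is Mode.AUTONOMOUS:
        # Nearing the edge of the domain (e.g. the off-ramp): ask the driver to take over.
        return Mode.AUTONOMOUS if in_domain else Mode.TAKEOVER_REQUEST
    if mode is Mode.TAKEOVER_REQUEST:
        if driver_ready:
            return Mode.HUMAN
        if seconds_since_request > takeover_budget_s:
            return Mode.MINIMAL_RISK_STOP
        return Mode.TAKEOVER_REQUEST
    # MINIMAL_RISK_STOP: hand control back once the driver is ready.
    return Mode.HUMAN if driver_ready else Mode.MINIMAL_RISK_STOP


# Example trip: local road, highway cruise, nearing the exit, driver takes over.
trip = [
    dict(in_domain=False, driver_engages=False, driver_ready=True, seconds_since_request=0.0),
    dict(in_domain=True, driver_engages=True, driver_ready=False, seconds_since_request=0.0),
    dict(in_domain=False, driver_engages=False, driver_ready=False, seconds_since_request=0.0),
    dict(in_domain=False, driver_engages=False, driver_ready=True, seconds_since_request=4.0),
]
mode = Mode.HUMAN
for step in trip:
    mode = next_mode(mode, **step)
    print(mode.name)  # prints HUMAN, AUTONOMOUS, TAKEOVER_REQUEST, HUMAN
```

A production system would also weigh sensor health, weather, and the remaining distance to the exit before entering or leaving autonomous mode; those inputs are folded into the single `in_domain` flag here.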

“Over time, you will see the operational domain of that Level 4 experience expand” to cover more of the driving experience as technology improves, says Eichenholz. But don’t expect a rapid transition. “Getting to Level 5 with the driver fully out of the loop in all operational domains is really hard,” he says. “It’s easy to get to 99% coverage [of roadways], but regulators want five nines.” There’s good reason for that. Rural America is full of unmarked and unpaved roads, and foul weather can cut visibility to a car length and transform highways into slippery messes.
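“Five nines” means 99.999%. The gap between that and 99% is easier to see as roadway left uncovered. The sketch assumes a hypothetical network of 1 million road miles, chosen only to make the arithmetic readable; it is not a statistic from the article.

```python
def uncovered_miles(total_miles: float, coverage: float) -> float:
    """Miles of roadway outside the operational domain for a given coverage fraction."""
    return total_miles * (1.0 - coverage)


NETWORK_MILES = 1_000_000  # hypothetical road network size, for illustration only

for label, coverage in [("99%", 0.99), ("five nines", 0.99999)]:
    print(f"{label:>10}: {uncovered_miles(NETWORK_MILES, coverage):,.0f} miles not covered")
# prints 10,000 miles not covered for 99% and 10 miles not covered for five nines
```

Each added nine cuts the uncovered roadway by a factor of 10, so five nines leaves 1000 times less than 99%.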

A boom in robo-trucks

As in personal cars, Tesla aims to be first to market with large trucks with autonomous features. In January, the company announced it will begin manufacturing Tesla Semi trucks this year, and they will include autonomous driving features similar to those in Tesla autos.7 However, the main focus of the Semi appears to be its all-electric “zero emission” power system, which led Walmart to order 130 of the trucks in September 2020.8

Other companies see long-haul trucking as potentially the biggest near-term market for autonomous vehicles. It’s an $800-billion-per-year business in the U.S., and it runs up most of its mileage on Interstate highways. The trucking industry says it needs 60,000 more truckers now, and the shortage is expected to grow as veteran drivers retire, making autonomy attractive. Luminar has teamed with Daimler Trucks and Torc Robotics to develop Level 4 autonomous truck systems. The size of big rigs is an advantage because it gives them room to space sensors more widely and to carry other automation systems.

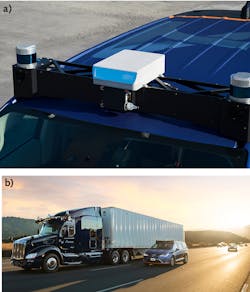

Much of the technology used in autonomous cars can be transferred to trucks. However, “the dynamics and functional behaviors of trucks are very different” from those of passenger cars, says Chuck Price, chief product officer at TuSimple (San Diego, CA).9 Trucks are big, and fully loaded they have huge momentum at highway speeds, so they need long-range sensing and object recognition to stop safely. TuSimple equips its trucks with 10 high-definition cameras that can identify vehicles up to 1000 m away (day or night, rain or shine), giving them plenty of room to steer around other vehicles or stop when needed (see Fig. 1). Five microwave radars can detect and identify objects up to 300 m away, important if fog or rain impairs vision. A pair of lidars with 200 m range gives detailed views of objects inside that range.
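Why range matters for a loaded truck can be made concrete with the standard constant-deceleration stopping estimate: distance traveled during system latency, plus v²/(2a) while braking. The 65 mph speed, 1.5 s latency, and 0.3 g deceleration below are hypothetical round numbers, not TuSimple specifications; the sensor ranges are the ones quoted above, and the warning time assumes a stationary obstacle.

```python
G = 9.81  # standard gravity, m/s^2
MPH_TO_MPS = 0.44704


def stopping_distance_m(speed_mps: float, latency_s: float, decel_g: float) -> float:
    """Distance covered during detection/actuation latency plus braking at constant deceleration."""
    return speed_mps * latency_s + speed_mps ** 2 / (2 * decel_g * G)


def warning_time_s(range_m: float, speed_mps: float) -> float:
    """Seconds until the truck reaches a stationary object first detected at range_m."""
    return range_m / speed_mps


speed = 65 * MPH_TO_MPS                                         # assumed highway speed
stop = stopping_distance_m(speed, latency_s=1.5, decel_g=0.3)   # assumed latency and braking
print(f"stopping distance: {stop:.0f} m")                       # about 187 m

SENSOR_RANGES_M = {"cameras": 1000, "radars": 300, "lidars": 200}  # ranges quoted for TuSimple trucks
for sensor, rng in SENSOR_RANGES_M.items():
    print(f"{sensor:8s} {rng:5d} m  margin {rng - stop:7.1f} m  "
          f"warning {warning_time_s(rng, speed):5.1f} s")
```

Under these assumptions the truck needs roughly 190 m to stop, close to the lidars’ 200 m reach, while the camera range leaves more than half a minute of warning.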

So far, TuSimple trucks have made test runs between Arizona and Texas with human safety drivers on board. Sometime this year, the company plans to have a truck drive the route in autonomous mode without active human supervision, although a safety driver and a test engineer will be in the vehicle to monitor its performance. TuSimple’s goal is to demonstrate Level 4 driving between terminals located a short distance from highway exits. The company has teamed with Navistar, the maker of International trucks, to begin producing Level 4 autonomous trucks for highway use by 2024. Several other companies have similar plans, including Embark, Google’s Waymo subsidiary,10 Plus Technology, and Aurora, a Silicon Valley-area company that bought Uber’s autonomous vehicle group in December 2020 and plans to introduce autonomy in trucks before cars.

Meeting manufacturing needs

A key challenge for the photonics industry is building manufacturing technology at the size and scale needed for automotive use, says Eichenholz. Industry requirements are tough: automakers insist on volume production at an affordable cost, and on parts that withstand temperatures from -40° to 80°C. Automotive customers need time to test the safety and quality of new products, and then a couple of years to get a new product onto the production line.

Building a supply chain and automating production takes human and financial resources. Luminar started by building products internally, then developed partnerships with contract manufacturers and others. They built from the chip level up, doing die placement, wire bonding, and other steps. As their products evolved, they moved more and more functions into application-specific integrated circuits (ASICs) to drive down costs and improve performance. For example, they put signal processing into ASICs to avoid the parasitic capacitance of wire bonds to detectors and the inductance of laser circuits.

The company’s lidar expertise helped them balance tradeoffs in their pulsed 1550 nm time-of-flight (ToF) lidar. Eichenholz says they chose mechanical scanning because it “is really reliable, with none of the resonance or temperature problems you have with MEMS.” Rotating polygon mirrors scan rapidly in the horizontal plane, while galvanometer mirrors scan slowly in the vertical direction. The technology was used 40 years ago in bar-code scanners, and it can now be very cost-effective in volume. Because the receiver is scanned along with the output beam, its instantaneous field of view can be very narrow, blocking interference from the sun and other sources.
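One way to see why a narrow, scanned receiver field of view rejects sunlight: scattered sunlight is an extended background, so for a fixed aperture and optical filter, the background power a receiver collects scales with the solid angle it views. The sketch compares a hypothetical 1 mrad × 1 mrad instantaneous field of view that moves with the beam against a receiver staring at a whole 120° × 30° scene; both numbers are assumptions for illustration. It uses the standard solid-angle formula for a rectangular field of view, Ω = 4 arcsin(sin(α/2) sin(β/2)).

```python
import math


def rect_solid_angle_sr(full_h_rad: float, full_v_rad: float) -> float:
    """Solid angle (steradians) of a rectangular field of view with the given full angles."""
    return 4 * math.asin(math.sin(full_h_rad / 2) * math.sin(full_v_rad / 2))


# Hypothetical receivers, for illustration only.
scanned_ifov_sr = rect_solid_angle_sr(1e-3, 1e-3)                          # follows the beam
staring_fov_sr = rect_solid_angle_sr(math.radians(120), math.radians(30))  # sees the whole scene

ratio = staring_fov_sr / scanned_ifov_sr
print(f"scanned IFOV: {scanned_ifov_sr:.2e} sr, staring FOV: {staring_fov_sr:.3f} sr")
print(f"background sunlight reduced by roughly {ratio:.1e}x")
```

With these numbers the scanned receiver collects close to a million times less background light than the staring one.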

Photonic integration promises to improve lidar cost and performance. Luminar is working to reduce costs of driver-assistance lidars to $500, and of the more precise lidars needed for autonomous cars to $1000.11 Those are huge drops from earlier levels in the tens of thousands of dollars, and they helped attract deals with Volvo and Daimler Trucks. In November, Mobileye, an Israeli subsidiary of Intel, chose Luminar’s lidars for its first-generation Level 4 autonomous driving system for robo-taxis.12 Luminar has plenty of competition in ToF lidar, including Velodyne Lidar (San Jose, CA), which began offering lidars in 2010, and several other companies.

Competing lidar technologies

An alternative lidar technology is also emerging. In January 2021, Mobileye announced plans to build future versions of its automated driving system, due by 2025, around a new chip-scale frequency-modulated continuous-wave (FMCW) lidar being developed at Intel.13

FMCW lidar chirps (sweeps) the frequency of the outgoing beam and coherently mixes the return signal with part of the outgoing signal. The frequency difference between them gives the target distance, and the Doppler shift gives its velocity along the line of sight directly; ToF systems cannot measure velocity directly, but must infer it from successive range measurements. Advocates cite other advantages of coherent lidars, including relative immunity to interference from sunlight and other lidars, higher sensitivity, and lower peak power.14
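Range and velocity extraction can be sketched for the common triangular (up/down) chirp, assuming an ideal linear chirp, a single target, and a range term larger than the Doppler shift so the up-chirp beat stays positive. On the up-chirp the beat frequency is the range term minus the Doppler shift; on the down-chirp it is the range term plus the Doppler shift, so their average gives range and half their difference gives velocity. The 1550 nm wavelength and 1 GHz-per-10 μs chirp slope are illustrative values, not the parameters of any product mentioned here.

```python
C = 299_792_458.0  # speed of light, m/s


def beat_frequencies(range_m: float, velocity_mps: float,
                     slope_hz_per_s: float, wavelength_m: float) -> tuple[float, float]:
    """Up- and down-chirp beat frequencies for one target (positive velocity = approaching)."""
    f_range = slope_hz_per_s * 2 * range_m / C   # frequency offset from round-trip delay
    f_doppler = 2 * velocity_mps / wavelength_m  # Doppler shift of the return
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(f_up: float, f_down: float,
                       slope_hz_per_s: float, wavelength_m: float) -> tuple[float, float]:
    """Invert the two beat frequencies into range (m) and line-of-sight velocity (m/s)."""
    f_range = (f_up + f_down) / 2
    f_doppler = (f_down - f_up) / 2
    return C * f_range / (2 * slope_hz_per_s), wavelength_m * f_doppler / 2


SLOPE = 1e9 / 10e-6   # assumed chirp: 1 GHz swept in 10 us
WAVELENGTH = 1550e-9  # assumed 1550 nm laser

f_up, f_down = beat_frequencies(100.0, 20.0, SLOPE, WAVELENGTH)
print(f"beats: {f_up / 1e6:.1f} MHz up, {f_down / 1e6:.1f} MHz down")
r, v = range_and_velocity(f_up, f_down, SLOPE, WAVELENGTH)
print(f"recovered: {r:.1f} m, {v:.1f} m/s")  # recovered: 100.0 m, 20.0 m/s
```

A ToF lidar would need at least two range measurements separated in time to estimate the same velocity.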

J. K. Doylend and S. Gupta of Intel’s Silicon Photonic Product division reported at SPIE Photonics West 2020 that the FMCW lidar’s ability to directly detect both range and velocity was very attractive for autonomous cars. Achieving that performance required dense integration of optics including lasers, amplifiers, phase and amplitude control, low-noise photodetectors, mode converters, and waveguides, but integration can produce compact lidars in high volume.15

Current autonomous-driving systems combine information from cameras, ToF lidars, and radars to steer vehicles. Mobileye’s new system will combine FMCW lidar data with radar to create one perception channel, and use camera data to produce a second, independent channel. The company believes that combination will improve safety and performance when commercial versions arrive in 2025. “This is really game-changing,” Mobileye CEO Amnon Shashua said in January at the virtual Consumer Electronics Show (CES).16
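The safety case for two independent channels is probabilistic. In the simplified model below, each channel misses a given hazard with some probability, and a common-cause event (something that blinds both channels at once) defeats both. The probabilities are hypothetical, and treating the channels as otherwise independent is an assumption; the point is that any shared failure mode quickly dominates, which is one reason to build the channels on different sensors.

```python
def miss_probability(p_a: float, p_b: float, p_common: float = 0.0) -> float:
    """Probability that both perception channels miss a hazard.

    p_a, p_b: independent miss probabilities of each channel.
    p_common: probability of a common-cause event that defeats both channels at once.
    """
    return p_common + (1.0 - p_common) * p_a * p_b


# Hypothetical per-hazard miss rates, for illustration only.
print(f"one channel alone:      {1e-4:.1e}")
print(f"two independent:        {miss_probability(1e-4, 1e-4):.1e}")
print(f"two, 1e-6 common cause: {miss_probability(1e-4, 1e-4, p_common=1e-6):.1e}")
```

Pairing two channels that each miss one hazard in 10,000 drops the joint miss rate to one in 100 million, but a shared failure mode only one-hundredth as likely as a single-channel miss pushes it back up about a hundredfold.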

Aurora is already testing FMCW lidars from Insight Lidar (Lafayette, CO) on its developmental autonomous trucks (see Fig. 2). FMCW lidar offers long detection range and higher sensitivity, both important for the long-range detection needed at highway speeds, says Greg Smolka, Insight Lidar’s vice president of business development. The company aims to have early versions in use by 2023, significant volume by 2025, and production use in autonomous cars in 2026.

Another FMCW maker, Aeva (Mountain View, CA), says its lidar has a 300 m range, high pixel density at all ranges, and a 120° × 30° field of view, available now. In January, Aeva announced a partnership with TuSimple to deploy its “4D” lidar on TuSimple’s Level 4 autonomous trucks.

Another potential competitor is flash lidar, originally developed to avoid moving parts in systems for docking or landing spacecraft. Each laser pulse is spread to illuminate the whole field of view at once, and receiver optics focus the returns onto a two-dimensional detector array. Processing the returned signals yields a three-dimensional point cloud, with the distance to each point determined from the return time. Flash lidar has the advantage of simplicity, but spreading the pulse energy across a wide field of view limits the power returned to each pixel, which usually has constrained range to under 100 m.17
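The range penalty of flash lidar follows from a simple energy budget. Assume the target is larger than the patch each detector pixel sees, so the return to a pixel falls as 1/R². Spreading one pulse over N pixels gives each pixel 1/N of the energy a single scanned beam would deliver, so matching the scanned beam’s per-point return at the same range takes N times the pulse energy, and each doubling of range multiplies that by four. The 128 × 32 pixel count is hypothetical.

```python
def flash_energy_factor(n_pixels: int, range_ratio: float) -> float:
    """Single-pulse energy needed, relative to one scanned beam measuring one point at a
    reference range, for each of n_pixels to get the same return at range_ratio times that range.
    Assumes extended targets (return power falls as 1/R^2) and identical receiver optics."""
    return n_pixels * range_ratio ** 2


N_PIXELS = 128 * 32  # hypothetical detector array
for ratio in (0.5, 1.0, 2.0):
    print(f"range x{ratio}: {flash_energy_factor(N_PIXELS, ratio):,.0f}x pulse energy")
```

A scanned lidar spends a comparable total energy per frame, but spreads it over N separate pulses; a flash lidar must deliver it in a single pulse, which is what drives up the required pulse energy.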

Outlook

Autonomous vehicles have made amazing progress in the past several years, but challenges remain.

Lidar must solve some technical issues to meet the needs of autonomy. Near the top of the list is the inherent tradeoff between lidar range and the density of the point cloud it can measure at a distance. Lidars are very good at mapping objects a few tens of meters away, but the points spread farther apart with distance. At 200 m, points spaced at 0.025° intervals are 8.7 cm apart. That’s not going to put enough points on a pedestrian to tell if they are about to cross the street or are looking in the opposite direction. Even the speed of light is an issue, because each pulse from a single beam must make its round trip before the next is fired: 1.33 μs for an object at 200 m, which limits the repetition rate to about 750,000 pulses per second.
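The spacing and pulse-rate figures follow from simple geometry and the speed of light. The function names below are illustrative; the sketch evaluates both 100 m and 200 m ranges for a 0.025° angular step and assumes a single beam with only one pulse in flight at a time.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def point_spacing_m(range_m: float, step_deg: float) -> float:
    """Gap between adjacent lidar points on a surface facing the sensor."""
    return 2 * range_m * math.tan(math.radians(step_deg) / 2)


def round_trip_s(range_m: float) -> float:
    """Time for a pulse to reach an object and return."""
    return 2 * range_m / C


def max_pulse_rate_hz(max_range_m: float) -> float:
    """Highest single-beam pulse rate with only one pulse in flight at a time."""
    return 1 / round_trip_s(max_range_m)


for r in (100, 200):
    print(f"{r} m: spacing {point_spacing_m(r, 0.025) * 100:.1f} cm, "
          f"round trip {round_trip_s(r) * 1e6:.2f} us, max rate {max_pulse_rate_hz(r):,.0f} Hz")
# 100 m: spacing 4.4 cm, round trip 0.67 us, max rate 1,498,962 Hz
# 200 m: spacing 8.7 cm, round trip 1.33 us, max rate 749,481 Hz
```

Lidars that fire several beams in parallel can exceed this rate in aggregate, since each beam is timed separately.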

Other autonomy technologies, and the road environment itself, pose other challenges. So does the unusual and unexpected. Can we count on an autonomous car to slam on the brakes for a person carrying a bag, but not for a bag blowing in the wind?

Photonic integration and good engineering will help overcome these challenges. (It may even help with artificial intelligence; watch for an upcoming article.) We also may have to accept limitations. I’ve lived in New England long enough to have learned that the best way to get through foul weather is to wait for it to blow away.

REFERENCES

1. See http://bit.ly/HechtAV-Ref1.

2. “SAE self-driving levels 0 to 5 for Automation: What they mean,” Autopilot Review; see http://bit.ly/HechtAV-Ref2.

3. See http://bit.ly/HechtAV-Ref3.

4. See http://bit.ly/HechtAV-Ref4.

5. T. B. Lee, “Elon Musk: Anyone relying on lidar is doomed. Experts: Maybe not,” Ars Technica (Aug. 6, 2019); see http://bit.ly/HechtAV-Ref5.

6. S. Szymkowski, “Uber ditches self-driving car plans, sells business to Aurora” (Dec. 12, 2020); see http://bit.ly/HechtAV-Ref6.

7. See http://bit.ly/HechtAV-Ref7.

8. See http://bit.ly/HechtAV-Ref8.

9. E. Ackerman, “This year, autonomous trucks will take to the road with no one on board,” IEEE Spectrum (Jan. 4, 2021); see http://bit.ly/HechtAV-Ref9.

10. See http://bit.ly/HechtAV-Ref10.

11. See http://bit.ly/HechtAV-Ref11.

12. “Mobileye selects Luminar lidar for autonomous vehicle pilot,” Optics.org (Nov. 24, 2020); see http://bit.ly/HechtAV-Ref12.

13. “Intel’s Mobileye outlines auto lidar plan,” Optics.org (Jan. 13, 2021); see http://bit.ly/HechtAV-Ref13.

14. J. Hecht, Laser Focus World, 55, 5, 22–25 (May 2019); see http://bit.ly/HechtAV-Ref14.

15. J. K. Doylend and S. Gupta, Proc. SPIE, 11285, 112850J (Feb. 26, 2020); doi:10.1117/12.2544962.

16. See http://bit.ly/HechtAV-Ref16.

17. Y. Li and J. Ibanez-Guzman, IEEE Signal Processing Magazine, 37, 4, 50–61 (Jul. 2020); doi:10.1109/msp.2020.2973615.

Levels of autonomy

Level 0: No automation (Model T, stick-shift 1957 Chevy)

Level 1: Minimal automation (Automatic transmission, cruise control)

Level 2: Driver assistance (backup camera, lane monitors, collision warning)

Human driving with eyes on the road, hands on the wheel

Level 3: Autonomy with human monitoring to take over instantly if needed

Human driver fully aware of situation

Level 4: Full autonomy, but can’t drive everywhere, human on board vehicle if needed

Human can be napping; time to transfer control safely

Level 5: Fully autonomous, can go anywhere

No human needed in car

Jeff Hecht | Contributing Editor

Jeff Hecht is a regular contributing editor to Laser Focus World and has been covering the laser industry for 35 years. A prolific book author, Jeff's published works include “Understanding Fiber Optics,” “Understanding Lasers,” “The Laser Guidebook,” and “Beam Weapons: The Next Arms Race.” He also has written books on the histories of lasers and fiber optics, including “City of Light: The Story of Fiber Optics,” and “Beam: The Race to Make the Laser.” Find out more at jeffhecht.com.