PHOTONIC FRONTIERS: OPTICAL COMMUNICATIONS: High-speed fiber transmission reaches 100 Gbit/s on a single channel

Fiber-optic transmission systems reached a milestone last December when Verizon switched on a 100 Gbit/s link running 893 km between Paris and Frankfurt. The link carries live traffic over standard step-index single-mode fiber that also carries live 10 Gbit/s traffic at other wavelengths. The 100 Gbit/s signal occupies the same 50 GHz optical channel as a 10 Gbit/s signal.

It's an impressive milestone, but it's been a long time coming. Deployments of 10 Gbit/s long-haul transmission in wavelength-division multiplexed (WDM) networks began in 1996, and developers were working on 40 Gbit/s systems during the heady years of the "bubble." But fiber transmission impairments including chromatic dispersion and polarization-mode dispersion (PMD) proved so severe above 10 Gbit/s that it was cheaper and easier to add new 10 Gbit/s channels than to upgrade to higher speeds.

Commercial 40 Gbit/s systems finally reached the market a few years ago, but they have yet to gain wide acceptance for backbone transmission. Carriers want systems that can transmit the same distances as 10 Gbit/s systems using standard 50 GHz channels on installed fibers, but fiber impairments get in the way. The first 40 Gbit/s systems could tolerate only 2.5 ps of PMD, compared to 12 ps for 10 Gbit/s systems, so they couldn't be overlaid on existing systems unless the fibers had low PMD, says Glenn Wellbrock, director of network backbone architecture for Verizon. Improved versions can tolerate more PMD, but not as much as 10 Gbit/s systems, so fibers have to be tested before installation.

With the new 100 Gbit/s systems, "it doesn't matter," Wellbrock says. Thanks to new technology, "if 10 Gbit/s runs, 100 Gbit/s will have no problems at all." That's giving 100 Gbit/s technology a big push.

High-speed optical modulation

The higher speed requires new transmission technology to replace the binary amplitude modulation that is standard at data rates up to 10 Gbit/s. Dispersion management is needed for long-distance transmission in the 1550 nm band, but dispersion-compensating fiber can offset chromatic dispersion well enough to span 4000 km at 10 Gbit/s, more than the distance from Atlanta to Los Angeles.

However, the impact of chromatic dispersion increases with the square of the data rate, because pulse durations are shorter at higher speeds, so a 40 Gbit/s system using 10 Gbit/s technology could transmit only 1/16 as far. Higher speeds also reduce a system's ability to tolerate PMD, which can scramble signals enough to briefly interrupt transmission. That led developers to turn to new modulation techniques.
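The 1/16 figure follows if the dispersion-limited reach is assumed to fall as the inverse square of the data rate. The short Python sketch below applies that scaling to the 4000 km reach quoted above for 10 Gbit/s. The function name is hypothetical, and the 4000 km reference is used only for illustration, not as a measured limit.

```python
def dispersion_limited_reach_km(reference_reach_km, reference_rate_gbps, new_rate_gbps):
    """Scale a reach figure, assuming the dispersion penalty grows as (data rate)^2.

    This is a rule-of-thumb scaling, not a full link model.
    """
    rate_ratio = new_rate_gbps / reference_rate_gbps
    return reference_reach_km / rate_ratio ** 2


# Quadrupling the rate from 10 to 40 Gbit/s cuts the reach by 4^2 = 16.
reach_40g_km = dispersion_limited_reach_km(4000.0, 10.0, 40.0)
print(f"Estimated reach at 40 Gbit/s: {reach_40g_km:.0f} km")  # 250 km
```

On the same assumption, a 100 Gbit/s signal using unchanged 10 Gbit/s technology would reach only 1/100 as far, which is why reducing the symbol rate matters.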

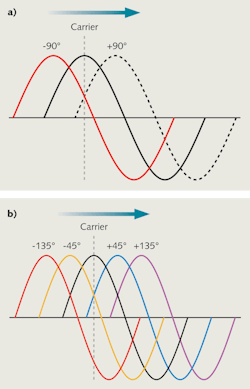

Phase-shift keying modulates a carrier signal by shifting its phase. The simplest form is binary phase-shift keying (BPSK), which typically either shifts the signal 90° forward or 90° backward in phase to code a binary 0 or 1 (see Fig. 1a). Phase-shifting in smaller increments can code more bits per modulation interval. Quadrature phase-shift keying (QPSK) codes two bits per modulation interval by shifting phase –135°, –45°, +45° or +135°, encoding the two-bit codes 00, 01, 10 and 11 (see Fig. 1b). This allows QPSK to send the same number of bits in half as many modulation intervals, so only 25 Gbaud can encode 50 Gbit/s, reducing dispersion effects.
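The QPSK mapping described above can be sketched in a few lines of Python. This is a minimal illustration, not a model of any vendor's modulator. The pairing of each two-bit code with a particular phase simply follows the order in which the text lists them, and the function names are hypothetical.

```python
import cmath
import math

# Phase assignment in the order the text lists it; which code goes with
# which phase is an assumption made for illustration.
QPSK_PHASE_DEG = {"00": -135.0, "01": -45.0, "10": 45.0, "11": 135.0}


def angle_difference_deg(a, b):
    """Smallest absolute difference between two angles, in degrees."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def qpsk_modulate(bits):
    """Map a string of 0s and 1s to unit-amplitude complex symbols, two bits each."""
    if len(bits) % 2 != 0:
        raise ValueError("QPSK needs an even number of bits")
    return [
        cmath.rect(1.0, math.radians(QPSK_PHASE_DEG[bits[i:i + 2]]))
        for i in range(0, len(bits), 2)
    ]


def qpsk_demodulate(symbols):
    """Recover bits by choosing the nearest of the four reference phases."""
    decoded = []
    for symbol in symbols:
        phase = math.degrees(cmath.phase(symbol))
        decoded.append(
            min(QPSK_PHASE_DEG,
                key=lambda code: angle_difference_deg(phase, QPSK_PHASE_DEG[code]))
        )
    return "".join(decoded)


bits = "00011011"
symbols = qpsk_modulate(bits)
recovered = qpsk_demodulate(symbols)
print(len(bits), "bits ->", len(symbols), "symbols; recovered:", recovered)
```

Eight bits become four symbols, which is the halving of the modulation rate that lets 25 Gbaud carry 50 Gbit/s.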

Quadrature phase-shift keying is compatible with polarization multiplexing, which can double the number of bits per symbol (or signal interval) to four, so 25 Gbaud can encode 100 Gbit/s. The combination is called dual-polarization QPSK, or DP-QPSK. Field tests at 100 Gbit/s with direct detection were promising, but they could span only 500 to 700 km, not the 1000 to 1500 km Verizon wanted for its backbone network. "The majority of traffic in the US is 1500 km or less," says Wellbrock, and distances are even shorter in Europe. Those distances are good enough for Verizon, he adds. "We don't want to spend a lot of money to get into the corners" of the country.

Coherent communications and digital processing

Developers concluded that coherent detection could meet network operators' goals, and activity surged. Fewer than 10 papers described 100 Gbit/s technology at the 2008 European Conference on Optical Communications, says Dimple Amin of Ciena (Linthicum, MD), but more than 100 did at ECOC 2009.

The textbook example of coherent detection is a broadcast radio receiver, in which difference-frequency mixing of an input signal with a local oscillator output yields a signal. Coherent optical communications were first developed in the 1980s, but phase-locking the local oscillator to the input signal proved daunting. Erbium-fiber amplifiers and wavelength-division multiplexing in the 1550 nm band left coherent systems in the dust.

Now coherent communications is staging a comeback at 100 Gbit/s. Instead of trying to phase-lock the local oscillator at optical frequencies, the new generation of coherent systems uses a control loop to rapidly scan the local oscillator frequency back and forth near the frequency of the incoming laser signal. This senses the phase of the incoming signal and generates the information needed to decode it and separate the two component polarizations. The approach offers the best signal-to-noise tolerance available at 40 or 100 Gbit/s, Nortel engineers report [1].

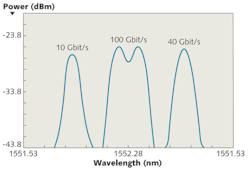

Nortel, which built the Verizon system, uses a coherent transmitter with two laser carriers spaced 20 GHz apart, says John Sitch, senior advisor for optical R&D. Both fit into a single 50 GHz channel (see Fig. 2). Adding the second carrier doubles the number of bits per symbol to eight, allowing the symbol rate to be reduced to about 14 Gbaud for a total rate of 112 Gbit/s (including overhead for forward error correction). Other designs use a single carrier.

The new 100 Gbit/s systems also require high-speed analog-to-digital converters and digital signal processing chips, which use sophisticated algorithms to compensate for fiber impairments and clean up the received signal [2].
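The symbol rates quoted for single-carrier DP-QPSK and for the two-carrier design follow from dividing the line rate by the number of bits carried per symbol interval. The Python sketch below works through that arithmetic. The function name is hypothetical, and treating 100 Gbit/s as the payload behind the 112 Gbit/s line rate is an assumption used to estimate the forward-error-correction overhead.

```python
def symbol_rate_gbaud(line_rate_gbps, bits_per_phase_symbol, polarizations, carriers):
    """Symbol rate needed per carrier and polarization for a given line rate."""
    bits_per_symbol_interval = bits_per_phase_symbol * polarizations * carriers
    return line_rate_gbps / bits_per_symbol_interval


# Single-carrier DP-QPSK: 2 bits (QPSK) x 2 polarizations = 4 bits per symbol.
single_carrier = symbol_rate_gbaud(100.0, 2, 2, 1)

# Two-carrier DP-QPSK: 2 bits x 2 polarizations x 2 carriers = 8 bits per symbol.
dual_carrier = symbol_rate_gbaud(112.0, 2, 2, 2)

# Assumption: 100 Gbit/s of payload inside the 112 Gbit/s line rate.
fec_overhead_fraction = (112.0 - 100.0) / 100.0

print(f"Single carrier: {single_carrier:.0f} Gbaud")   # 25 Gbaud
print(f"Two carriers:   {dual_carrier:.0f} Gbaud")     # 14 Gbaud
print(f"FEC and framing overhead: {fec_overhead_fraction:.0%}")
```

Lower symbol rates mean longer symbol intervals, which is what eases the dispersion and PMD tolerances discussed earlier.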

At data rates up to 10 Gbit/s, chromatic dispersion can be managed by adding coils of special fibers with dispersion tailored to offset the dispersion of the transmission fiber. Higher speeds require electronic dispersion compensation, using digital signal processors to analyze the received signal and remove dispersion effects. Digital processing allows high-speed transmission through standard step-index single-mode fiber without compensating fiber; Sitch says Nortel's system can compensate for 32,000 ps/nm of chromatic dispersion. That's an important benefit because about 1 km of coiled compensating fiber is needed to balance the dispersion of each 6 km of transmission fiber, so eliminating the compensating fiber reduces transit time about 16% and reduces attenuation. Transit time adds to the latency that slows the response of remote "cloud" computers, where every millisecond counts.
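The latency and dispersion figures above can be checked with rough arithmetic. The Python sketch below uses three values that are not from the article and should be read as assumptions: a group index of 1.47 for silica fiber, the same index for the compensating fiber, and a dispersion of 17 ps/(nm·km) for standard single-mode fiber near 1550 nm. The 893 km route length is the Paris-Frankfurt figure, used here only as an example.

```python
C_KM_PER_S = 299_792.458         # speed of light in vacuum
GROUP_INDEX = 1.47               # assumption: typical silica fiber near 1550 nm
DISPERSION_PS_PER_NM_KM = 17.0   # assumption: typical standard single-mode fiber


def transit_time_ms(fiber_km):
    """One-way propagation delay through a length of fiber, in milliseconds."""
    return fiber_km * GROUP_INDEX / C_KM_PER_S * 1e3


route_km = 893.0
compensating_km = route_km / 6.0   # about 1 km of coiled fiber per 6 km of route

time_without_ms = transit_time_ms(route_km)
time_with_ms = transit_time_ms(route_km + compensating_km)
saving_ms = time_with_ms - time_without_ms

# The saving is 1/6 of the transmission-fiber delay, or 1/7 of the compensated total.
saving_vs_line = saving_ms / time_without_ms
saving_vs_total = saving_ms / time_with_ms

# Distance of standard fiber whose accumulated dispersion equals 32,000 ps/nm.
uncompensated_reach_km = 32_000.0 / DISPERSION_PS_PER_NM_KM

print(f"Delay without compensating fiber: {time_without_ms:.2f} ms")
print(f"Delay saved: {saving_ms:.2f} ms "
      f"({saving_vs_total:.1%} of compensated total, {saving_vs_line:.1%} of line delay)")
print(f"32,000 ps/nm covers roughly {uncompensated_reach_km:.0f} km of standard fiber")
```

Under these assumptions the saving lies between about 14% and 17% depending on the baseline chosen, consistent with the "about 16%" quoted above, and the electronic dispersion budget covers somewhat more than the 1500 km reach Verizon wanted.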

Digital processing also can clean up the effects of polarization-mode dispersion, which becomes troublesome above 10 Gbit/s. With electronic processing, Wellbrock says, 100 Gbit/s systems can tolerate twice as much PMD as 10 Gbit/s systems. The digital processors also include powerful forward error correction, which increases the raw data rate to about 112 Gbit/s but greatly reduces the bit error rate.

Commercial 100 Gbit/s systems

The Verizon system, supplied by Nortel, is the first 100 Gbit/s system to carry live long-haul traffic, but more are in the pipeline. Ciena has a contract to install a metro 100 Gbit/s link for the New York Stock Exchange, which hopes the reduced latency will generate more trades and higher commissions. (Ciena is also buying the Nortel group.) Alcatel-Lucent and other companies have 100 Gbit/s systems in the pipeline.

The Optical Internetworking Forum (OIF; www.oiforum.com) has established a framework for developing 100 Gbit/s DWDM systems transmitting through 50 GHz optical channels [3]. One goal is to allow carriers to plug the new systems into the same optical channels now handling 10 Gbit/s without overhauling or requalifying the transmission plant. Another is to develop components that will serve as standard building blocks, so component makers can manufacture the large quantities needed for low unit costs, and system developers can design around those standard modules. The common technology will be coherent DP-QPSK, but the standards don't specify rigid details of system performance, allowing a variety of designs.

The 100 Gbit/s Optical Networking Consortium at Georgia Tech (Atlanta, GA) provides a common technology testbed for 12 member companies, says director Stephen Ralph. A near-term goal is sharing resources to test coherent QPSK technology for 100 Gbit/s transmission, but Ralph says a broader goal is looking at "things down the road, which may not be in the next generation of deployed equipment, but in the one beyond including things that no one is looking at now." Examples include investigation of modulation formats that may enable data rates above 400 Gbit/s in a 50 GHz channel.

Challenges and outlook

Fitting a 100 Gbit/s signal into the same 50 GHz optical channel as a 10 Gbit/s signal does come at the cost of limited transmission reach. The high-speed systems aren't ready for a key part of the global telecommunications network: ocean-spanning submarine cables, some of which are nearly full of 10 Gbit/s channels. Systems using binary phase-shift keying at 40 Gbit/s can span much greater distances, Sitch says, and Nortel is developing that approach for submarine applications.

Economics are another concern. Today's 100 Gbit/s hardware costs about 10 times more than 10 Gbit/s systems, so the only savings from the higher speed come from avoiding the need to install new fibers. Costs need to come down to make 100 Gbit/s more attractive for routes where adequate capacity is available.

The near future for 100 Gbit/s systems is on long-haul backbone routes where more capacity is urgently needed. Streaming audio, streaming video, graphics-intensive web sites, and social networking have kept Internet traffic growing steadily. New services including high-definition video downloads and cloud computing will further increase traffic. 100 Gbit/s could be the right technology at the right time.

REFERENCES

1. K. Roberts et al., "Performance of Dual-Polarization QPSK for Optical Transport Systems," J. Lightwave Technol. 27, 3546 (Aug. 15, 2009).

2. B. Beggs, "Microelectronics Advancements to Support New Modulation Formats and DSP Techniques," OFC 2009, paper OThE.

3. Optical Internetworking Forum, "100G Ultra Long Haul DWDM Framework Document"; http://www.oiforum.com/public/documents/OIF-FD-100G-DWDM-01.0.pdf.

What about 100G Ethernet?

Question: When is 100 Gbit/s not transmitted at 100 Gbit/s?

Answer: When you're considering line rates and Ethernet standards.

Ethernet standards are based on overall transmission rate, not on the line rate, and are intended mainly for local or access networks, rather than backbone systems. Standards now in development for 100 Gbit/s Ethernet envision transmission in the 1310 nm window either over four CWDM (coarse WDM) channels at 25 Gbit/s each, or over 10 CWDM channels at 10 Gbit/s.

Jeff Hecht | Contributing Editor
