Single-photon avalanche diode detector enables 3D quantum ghost imaging

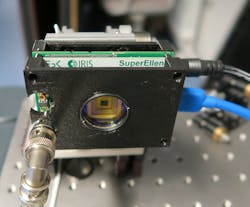

A team of researchers at the Fraunhofer Institute of Optronics, System Technologies, and Image Exploitation and the Karlsruhe Institute of Technology is exploiting single-photon avalanche diode (SPAD) arrays to enable three-dimensional (3D) quantum ghost imaging (see Fig. 1 and video).

Dubbed “asynchronous detection,” this new method yields the lowest photon dose possible for any measurement and can be used to image tissues sensitive to light or drugs that become toxic when exposed to light—without risk of damage.

“Our institute specializes in remote sensing, and when the Fraunhofer Society initiated its quantum sensing lighthouse project, known as ‘QUILT,’ in 2018, we wanted to explore whether we could enable remote sensing by quantum ghost imaging,” says Carsten Pitsch, a researcher at both Fraunhofer and Karlsruhe Institute of Technology.

His colleague Dominik Walter came up with the idea to use the timestamping capabilities of a SPAD camera to perform imaging instead of relying on a complex optical setup.

“For imaging at longer distances, we had to come up with an alternative to the conventional setups for quantum ghost imaging, and an image reconstruction algorithm using only timestamps as input was a challenge but the best answer to all the concerns at the time,” says Walter. “From a parallel project, I already had the right tools at hand for a quick proof of concept of this algorithm and was able to counter any skepticism our new approach might not work.”

Quantum ghost imaging meets SPAD

Quantum ghost imaging is a spooky method to create images via entangled photon pairs, in which only one of the photons actually interacts with the object. The researchers rely on photon detection time to first identify entangled pairs, which allows them to reconstruct images via the properties of the entangled photons. As a bonus, this method enables imaging at extremely low light levels.

Previous setups for quantum ghost imaging couldn’t handle 3D imaging, the team points out, because they use intensified charge-coupled device (ICCD) cameras. ICCD cameras provide spatial resolution but are time-gated and don’t permit the independent temporal detection of single photons.

To get around this problem, the researchers built a setup based on SPAD arrays borrowed from the light detection and ranging (LiDAR) and medical imaging realms. These detectors have multiple independent pixels, each with dedicated timing circuitry that records the detection time at that pixel with picosecond resolution.

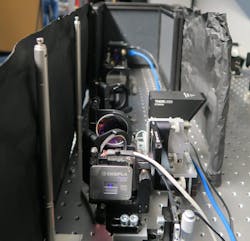

Their setup relies on spontaneous parametric down-conversion (SPDC) as a source of correlated photon pairs, with special periodically poled crystals. Potassium titanyl phosphate (KTP) crystals, which are nonlinear optical crystals highly transparent for wavelengths between 350 and 2770 nm, create the entangled photons (see Fig. 2).

“It enables highly efficient quasi-phase matching for nearly any triplet of pump-signal-idler, providing a wide range of wavelength combinations for entangled photon pairs,” says Pitsch. “This lets us adapt our setup to other wavelengths or applications.”

And it makes it possible, for example, to create one green photon and an infrared photon from a blue pump photon. “The wavelength/color combination is given through energy conservation constraints,” Pitsch adds.
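The energy-conservation constraint Pitsch mentions can be written as 1/λ(pump) = 1/λ(signal) + 1/λ(idler). The sketch below works through that arithmetic. The 405 nm pump and 550 nm signal wavelengths are illustrative assumptions, not values reported by the team, and the function name is hypothetical.

```python
def idler_wavelength_nm(pump_nm: float, signal_nm: float) -> float:
    """Idler wavelength from energy conservation: 1/pump = 1/signal + 1/idler."""
    if signal_nm <= pump_nm:
        # Each down-converted photon carries less energy than the pump photon,
        # so its wavelength must be longer than the pump wavelength.
        raise ValueError("signal wavelength must be longer than the pump wavelength")
    return 1.0 / (1.0 / pump_nm - 1.0 / signal_nm)


# Assumed example values (not from the article): blue pump, green signal.
idler_nm = idler_wavelength_nm(pump_nm=405.0, signal_nm=550.0)
print(f"idler wavelength: {idler_nm:.1f} nm")  # lands in the infrared
```

Choosing a different signal wavelength for the same pump shifts the idler accordingly, which is the sense in which the wavelength combination is fixed by the constraint rather than chosen freely.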

The researchers illuminate a scene with the infrared photons and detect the backscattered photons with a single-pixel, single-photon detector (also a SPAD). Meanwhile, the green photons are recorded with a SPAD array that acts as a single-photon camera. By using the properties of entanglement, they can reconstruct the illuminated scene from the green photons the camera detects.

How exactly does this part work? Two entangled photons (a signal and an idler) can be used to obtain 3D images via single-photon illumination. Idler photons are directed onto the object, and the photons it backscatters are detected and their arrival times registered. Signal photons are sent to a dedicated camera that detects as many of them as possible in both time and space. To identify the entangled pairs, the detection time at every camera pixel is compared with the detection times of the single-pixel detector. This makes it possible to determine the time of flight of the interacting idler photons, from which the depth of the object can be calculated.
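As a rough illustration of that comparison, the sketch below pairs each camera detection with the next single-pixel detection and converts the delay into a depth. It is not the team's reconstruction algorithm. It assumes both detectors already share a common time base, that the signal path adds no delay of its own, and that timestamps are in picoseconds. All function names and sample values are hypothetical.

```python
import bisect

C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def match_pairs(camera_events, bucket_times_ps, max_delay_ps):
    """Pair each camera detection with the first bucket detection at or after it.

    camera_events: list of (pixel, timestamp_ps) for signal photons.
    bucket_times_ps: sorted list of idler detection times in picoseconds.
    Returns (pixel, delay_ps) for events with a partner within max_delay_ps.
    """
    pairs = []
    for pixel, t_signal in camera_events:
        i = bisect.bisect_left(bucket_times_ps, t_signal)
        if i < len(bucket_times_ps):
            delay = bucket_times_ps[i] - t_signal
            if delay <= max_delay_ps:
                pairs.append((pixel, delay))
    return pairs


def depth_m(delay_ps):
    """Convert a round-trip delay to depth; dividing by 2 accounts for out-and-back travel."""
    return C_M_PER_S * delay_ps * 1e-12 / 2.0


# Made-up sample data: two true pairs about 6.67 ns apart, one unpaired camera event.
camera_events = [((3, 4), 1_000), ((5, 1), 50_000), ((0, 0), 90_000)]
bucket_times_ps = [7_671, 56_671, 200_000]

pairs = match_pairs(camera_events, bucket_times_ps, max_delay_ps=20_000)
depth_map = {pixel: depth_m(delay) for pixel, delay in pairs}
print(depth_map)
```

With these sample numbers, the two paired pixels come out at a depth of roughly one meter, and the third camera event is dropped because no idler detection follows it within the allowed delay.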

“This method is known as quantum ghost imaging, and it allows imaging within a broad spectral regime without needing a camera in the spectral range we want to image—but we still need a simple bucket detector to register the arrival of the idler photons,” says Pitsch. “For imaging, we can usually tailor the system so that the signal photons are optimal for silicon detection—the most mature camera and single-photon detection material.”

Timestamping

In-pixel timing circuitry enables SPADs to perform not only regular intensity imaging but also timestamping of single photons. “This is a big advantage for every system relying on the time of flight of photons, like LiDAR,” Pitsch says. “But it’s also very good for many quantum applications because they tend to rely on identification of photon pairs via their time of flight. We use this to register both infrared and green photons temporally, then identify the pairs after the measurement to obtain the image of the infrared scene.”

The timestamps of the entangled partner photons “give us a kind of secure quantum key in time that helps us decide if a detection event is part of the 3D image or just mere noise,” says Walter. “This increases the signal-to-noise ratio quite a bit. But keeping the ‘clocks’ of the two SPAD detectors running at the same rate—allowing referencing of the detections without any synchronization signal—was a big challenge. Every time we lost synchronization due to some unknown error, we had to automatically correct it, which wasn’t easy.”
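One generic way to use timestamps as such a filter is a delay histogram: true pairs pile up at a fixed time difference, while noise spreads across all differences. The sketch below is a textbook-style coincidence search, not the authors' algorithm, and it does not attempt the clock-drift correction Walter describes. It assumes integer picosecond timestamps on a shared time base. Function names, bin width, and sample data are hypothetical, and the nested loops favor clarity over speed.

```python
from collections import Counter


def coincidence_peak(camera_times_ps, bucket_times_ps, search_window_ps, bin_ps):
    """Histogram bucket-minus-camera differences; return the lower edge of the fullest bin."""
    histogram = Counter()
    for t_cam in camera_times_ps:
        for t_bucket in bucket_times_ps:
            diff = t_bucket - t_cam
            if 0 <= diff < search_window_ps:
                histogram[diff // bin_ps] += 1
    if not histogram:
        return None
    best_bin, _count = histogram.most_common(1)[0]
    return best_bin * bin_ps


def filter_events(camera_times_ps, bucket_times_ps, peak_ps, bin_ps):
    """Keep camera timestamps that have a bucket partner inside the peak bin."""
    kept = []
    for t_cam in camera_times_ps:
        if any(peak_ps <= t_b - t_cam < peak_ps + bin_ps for t_b in bucket_times_ps):
            kept.append(t_cam)
    return kept


# Made-up sample data: three true pairs near a 5 ns delay plus one noise event each.
camera_times_ps = [1_000, 20_000, 40_000, 63_000]
bucket_times_ps = [6_030, 25_040, 45_010, 90_500]

peak_ps = coincidence_peak(camera_times_ps, bucket_times_ps,
                           search_window_ps=10_000, bin_ps=100)
signal_events = filter_events(camera_times_ps, bucket_times_ps, peak_ps, bin_ps=100)
print(peak_ps, signal_events)
```

Events that fall outside the peak bin are treated as noise and discarded, which is where the gain in signal-to-noise ratio comes from in this kind of scheme.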

Maintaining this synchronization for every frame of the array was another challenge along the way for the team. “We did this by analyzing the temporal behavior of the camera and correcting/estimating the missing timestamps for individual frames,” says Pitsch.

And discovering how well-tuned the source needs to be to actually perform imaging came as a surprise, Pitsch adds, because it was their first quantum imaging setup.

Proof-of-concept demonstrations

The team ran demonstrations of their asynchronous detection method using two different setups. Their first setup resembles a Michelson interferometer and acquires images using two spatially separated arms, which allowed them to analyze the SPAD performance and improve the coincidence detection. Their second setup uses free-space optics and, instead of imaging with two separate arms, images two objects within the same arm.

Both setups worked well as a proof-of-concept demonstration, Pitsch says, noting that asynchronous detection can be used for remote detection and may be useful for atmospheric measurements.

The researchers are currently working on improvements to their SPAD cameras, focusing on pixel numbers and duty cycle. “For a current project, we’re getting a custom upgrade for our setup to make it easier to ‘maintain’ synchronization between detectors,” says Pitsch. “And we’re exploring the spectral entanglement properties for applications in both spectroscopy and hyperspectral imaging in the mid-infrared—a regime of high interest for biology and medicine.”

Their method may also find security and military applications because asynchronous detection has the potential to be used to observe without being detected—while simultaneously reducing the effects of over-illumination, turbulence, or scattering.

“It shares some of these benefits, stemming from the use of single pixel detectors, with classical ghost imaging, while some further advantages come from the use of quantum light,” Pitsch adds. “The system is, for example, highly resistant against jamming due to the randomized continuous-wave illumination by SPDC.”

FURTHER READING

C. Pitsch, D. Walter, L. Gasparini, H. Bürsing, and M. Eichhorn, Appl. Opt., 62, 6275–6281 (2023); https://doi.org/10.1364/ao.492208.

Sally Cole Johnson | Editor in Chief

Sally Cole Johnson, Laser Focus World’s editor in chief, is a science and technology journalist who specializes in physics and semiconductors.