A research team from Pohang University of Science and Technology (POSTECH) and Sungkyunkwan University in South Korea is tapping metasurfaces to create a solid-state LiDAR sensor with a 360-degree view of its surrounding environment.

Metasurfaces are 2D arrangements of designed nanostructures that derive their properties—such as resonant and waveguide effects—from their building blocks.

LiDAR sensors serve as eyes for autonomous vehicles by projecting light onto objects to help identify the distance to them, as well as the speed and direction of the vehicle. These sensors ideally should “see” to the sides as well as the front and rear of the vehicle, but the rotating LiDAR sensors in use today cannot view the front and rear simultaneously.

The metasurface the team uses for its sensor is a flat optical device that greatly expands the viewing angle of the LiDAR sensor to 360 degrees. It scatters an array of more than 10,000 laser dots onto surrounding objects, and a camera then photographs the irradiated point pattern to extract 3D information about objects within the 360-degree region.

“This type of LiDAR sensor is used for the iPhone’s Face ID,” says Gyeongtae Kim, a Ph.D. candidate working with Professor Junsuk Rho in the Departments of Mechanical Engineering and Chemical Engineering at POSTECH, as well as Yeseul Kim and Jooyeong Yun, Ph.D. candidates at POSTECH, and Professor Inki Kim at Sungkyunkwan University. “It uses a dot projector to create the point sets, but has several limitations: the uniformity and viewing angle of the point pattern are limited, and the device is large.”

Diffractive optical elements are normally used to form laser dot arrays for LiDAR, but their micron-scale pixel size tends to limit both field of view (FOV) and diffraction efficiency.
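The link between pixel size and FOV can be illustrated with the standard grating equation. A pattern sampled at pixel pitch p has a finest period of 2p, so at normal incidence the largest first-order diffraction angle satisfies sin θ = λ/(2p). The sketch below is a textbook estimate, not the team's design calculation; the 633-nm wavelength and the three pitches are assumed values chosen only for illustration.

```python
import math

def max_half_angle_deg(wavelength_nm: float, pixel_pitch_nm: float) -> float:
    """Largest first-order diffraction angle (degrees from the optical axis)
    for a pattern sampled at the given pixel pitch, at normal incidence.
    The finest grating period such a pattern can represent is two pixels."""
    s = wavelength_nm / (2.0 * pixel_pitch_nm)
    # sin(theta) cannot exceed 1; past that point the pattern can address
    # the whole forward hemisphere, so the angle is capped at 90 degrees.
    return math.degrees(math.asin(min(s, 1.0)))

wavelength_nm = 633.0  # assumed illustrative wavelength, not from the article

# Assumed pitches: two micron-scale values and one subwavelength value.
for pitch_nm in (5000.0, 1000.0, 300.0):
    half = max_half_angle_deg(wavelength_nm, pitch_nm)
    print(f"pitch {pitch_nm:6.0f} nm -> full FOV about {2 * half:5.1f} degrees")
```

Under these assumptions, micron-scale pixels confine the pattern to a narrow cone, while a pitch below half the wavelength lets the first order reach 90 degrees from the axis, which is consistent with the 180-degree FOV described for the metasurface.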

The team opted instead for a metasurface-enhanced, structured-light-based depth-sensing platform that scatters a high-density 10K-dot array over a 180-degree FOV by manipulating light at the subwavelength scale.

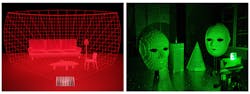

As their proof of concept, the researchers placed one facemask on the beam axis and another one 50 degrees away, within a distance of 1 meter, and estimated the depth information using a stereo-matching algorithm (see figure).
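Stereo matching recovers depth by triangulation: once a projected dot is matched between two views, its shift (disparity) between them maps to distance. A minimal sketch follows, assuming a rectified pinhole stereo pair, which is a textbook simplification and not necessarily the geometry or algorithm the team used. The focal length, baseline, and disparities are hypothetical values.

```python
def depth_from_disparity(focal_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in meters of a matched point for a rectified pinhole stereo pair.

    focal_px     -- focal length expressed in pixels
    baseline_m   -- separation between the two viewpoints, in meters
    disparity_px -- horizontal shift of the matched dot between views, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point at finite depth")
    return focal_px * baseline_m / disparity_px

def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_error_px: float = 1.0) -> float:
    """First-order depth uncertainty caused by a given disparity matching error."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px

focal_px = 800.0    # hypothetical camera focal length, in pixels
baseline_m = 0.10   # hypothetical baseline, in meters

for disparity_px in (160.0, 80.0, 40.0):
    z = depth_from_disparity(focal_px, baseline_m, disparity_px)
    dz = depth_error(focal_px, baseline_m, z)
    print(f"disparity {disparity_px:5.1f} px -> depth {z:.2f} m "
          f"(about {dz * 100:.1f} cm per pixel of matching error)")
```

Because the depth error grows with the square of the distance, a denser and more uniform dot pattern helps the matching step stay reliable as objects move farther away or toward the edge of the field of view.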

One of the big challenges to overcome is the sensor’s working distance, which is limited to a few meters due to the power dispersion of a high number of diffraction beams. “Such limitations can be overcome by using a higher-power laser and increasing the diffraction efficiency of the metasurfaces,” says Kim.
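The trade-off Kim describes can be made concrete with a rough power budget. The sketch below assumes the diffracted power is split evenly among the dots and that each dot lands on a Lambertian (diffusely reflecting) surface at normal incidence. All numbers are hypothetical, and the model ignores ambient light, detector noise, and nonuniformity across the pattern.

```python
import math

def per_dot_power_mw(laser_power_mw: float, diffraction_efficiency: float,
                     n_dots: int) -> float:
    """Optical power in each dot, assuming an even split among all dots."""
    return laser_power_mw * diffraction_efficiency / n_dots

def received_power_mw(dot_power_mw: float, distance_m: float,
                      reflectivity: float, aperture_area_m2: float) -> float:
    """Power collected by a small camera aperture from one dot on a
    Lambertian target at normal incidence (inverse-square falloff)."""
    return (dot_power_mw * reflectivity * aperture_area_m2
            / (math.pi * distance_m ** 2))

n_dots = 10_000          # dot count of the order reported in the article
aperture_area_m2 = 1e-5  # hypothetical lens aperture, about 10 mm^2
reflectivity = 0.5       # hypothetical target reflectivity

# Hypothetical (laser power in mW, diffraction efficiency) combinations.
for laser_mw, efficiency in ((100.0, 0.3), (100.0, 0.6), (400.0, 0.6)):
    dot = per_dot_power_mw(laser_mw, efficiency, n_dots)
    for distance_m in (1.0, 3.0):
        rx = received_power_mw(dot, distance_m, reflectivity, aperture_area_m2)
        print(f"{laser_mw:5.0f} mW, efficiency {efficiency:.1f}, "
              f"{distance_m:.0f} m -> {rx * 1e6:.3f} nW per dot")
```

In this simplified model the collected signal scales linearly with both laser power and diffraction efficiency and falls with the square of the distance, which is why raising either quantity extends the usable range.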

A nanoparticle-embedded-resin imprinting method enables the team to print their new device onto any substrate—including various curved surfaces such as glasses or substrates for AR glasses. “We’ve shown we can control the propagation of light in all angles by developing a technology more advanced than conventional metasurface devices,” Rho says. “This original technology will enable an ultrasmall and full-space 3D imaging sensor platform.”

The team’s full-space diffractive metasurfaces may soon enable an ultracompact depth-perception platform for face recognition and automotive robotic vision applications.

FURTHER READING

G. Kim et al., Nat. Commun., 13, 5920 (2022); https://doi.org/10.1038/s41467-022-32117-2.

Sally Cole Johnson | Editor in Chief
